My colleague Grace Huckins has a great story on OpenAI’s release of GPT-5, its long-awaited new flagship model. One of the takeaways, however, is that while GPT-5 may make for a better experience than the previous versions, it isn’t something revolutionary. “GPT-5 is, above all else,” Grace concludes, “a refined product.”

This is pretty much in line with my colleague Will Heaven’s recent argument that the latest model releases have been a bit like smartphone releases: Increasingly, what we are seeing are incremental improvements meant to enhance the user experience. (Casey Newton made a similar point in Friday’s Platformer.) At GPT-5’s release on Thursday, OpenAI CEO Sam Altman himself compared it to when Apple released the first iPhone with a Retina display. Okay. Sure.

But where is the transition from the BlackBerry keyboard to the touch-screen iPhone? Where is the assisted GPS and the API for location services that enables real-time directions and gives rise to companies like Uber and Grindr and lets me order a taxi for my burrito? Where are the real breakthroughs?

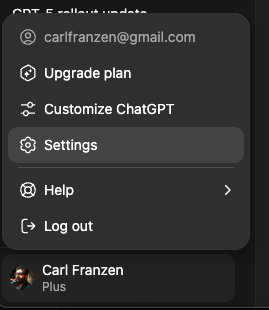

In fact, following the release of GPT-5, OpenAI found itself with something of a user revolt on its hands. Customers who missed GPT-4o’s personality successfully lobbied the company to bring it back as an option for its Plus users. If anything, that indicates the GPT-5 release was more about user experience than noticeable performance enhancements.

And yet, hours before OpenAI’s GPT-5 announcement, Altman teased it by tweeting an image of an emerging Death Star floating in space. On Thursday, he touted its PhD-level intelligence. He then went on the Mornings with Maria show to claim it would “save a lot of lives.” (Forgive my extreme skepticism of that particular brand of claim, but we’ve certainly seen it before.)

It’s a lot of hype, but Altman is not alone in his Flavor Flav-ing here. Last week Mark Zuckerberg published a long memo about how we are approaching AI superintelligence. Anthropic CEO Dario Amodei freaked basically everyone out earlier this year with his prediction that AI would harvest half of all entry-level jobs within, possibly, a year.

The people running these companies literally talk about the danger that the things they are building might take over the world and kill every human on the planet. GPT-5, meanwhile, still can’t tell you how many b’s there are in the word “blueberry.”

This is not to say that the products released by OpenAI or Anthropic or what have you are not impressive. They are. And they clearly have a good deal of utility. But the hype cycle around model releases is out of hand.

I say that as one of those people who use ChatGPT or Google Gemini most days, often multiple times a day. This week, for example, my wife was surfing and encountered a whale repeatedly slapping its tail on the water. Despite having seen very many whales, often in very close proximity, she had never seen anything like this. She sent me a video, and I was curious about it too. So I asked ChatGPT, “Why do whales slap their tails repeatedly on the water?” It came right back, confidently explaining that what I was describing was called “lobtailing,” along with a list of possible reasons why whales do that. Pretty cool.

But then again, a regular garden-variety Google search would also have led me to discover lobtailing. And while ChatGPT’s response summarized the behavior for me, it was also too definitive about why whales do it. The reality is that while people have a lot of theories, we still can’t really explain this weird animal behavior.

The reason I’m aware that lobtailing is something of a mystery is that I dug into actual, you know, search results. Which is where I encountered this beautiful, elegiac essay by Emily Boring. She describes her time at sea, watching a humpback slapping its tail against the water, and discusses the scientific uncertainty around this behavior. Is it a feeding technique? Is it a form of communication? Posturing? The action, as she notes, is extremely energy intensive. It takes a lot of effort from the whale. Why do they do it?

I was struck by one passage in particular, in which she cites another biologist’s work to draw a conclusion of her own:

> Surprisingly, the complex energy trade-off of a tail-slap might be the exact reason why it’s used. Biologist Hal Whitehead suggests, “Breaches and lob-tails make good signals precisely because they are energetically expensive and thus indicative of the importance of the message and the physical status of the signaler.” A tail-slap means that a whale is physically fit, traveling at nearly maximum speed, capable of sustaining powerful activity, and carrying a message so crucial it is willing to use a huge portion of its daily energy to share it. “Pay attention!” the whale seems to say. “I am important! Notice me!”

In some ways, the AI hype cycle has to be out of hand. It has to justify the ferocious level of investment, the uncountable billions of dollars in sunk costs. The massive data center buildouts with their massive environmental consequences created at massive expense that are seemingly keeping the economy afloat and threatening to crash it. There is so, so, so much money at stake.

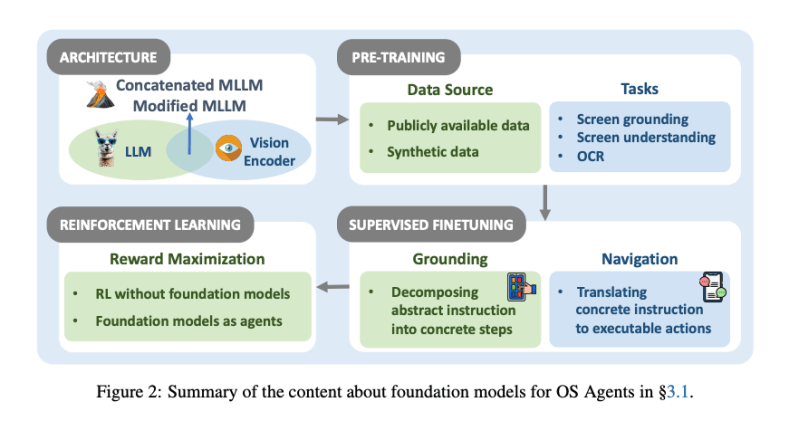

Which is not to say there aren’t really cool things happening in AI. And certainly there have been a number of moments when I have been floored by AI releases. ChatGPT 3.5 was one. DALL-E, NotebookLM, Veo 3, Synthesia. They can amaze. In fact there was an AI product release just this week that was a little bit mind-blowing. Genie 3, from Google DeepMind, can turn a basic text prompt into an immersive and navigable 3D world. Check it out. It’s pretty wild. And yet Genie 3 also makes a case that the most interesting things happening right now in AI aren’t happening in chatbots.

I’d even argue that at this point, most of the people who are regularly amazed by the feats of new LLM chatbot releases are the same people who stand to profit from the promotion of LLM chatbots.

Maybe I’m being cynical, but I don’t think so. I think it’s more cynical to promise me the Death Star and instead deliver a chatbot whose chief appeal seems to be that it automatically picks the model for you. To promise me superintelligence and deliver shrimp Jesus. It’s all just a lot of lobtailing. “Pay attention! I am important! Notice me!”

This article is from The Debrief, MIT Technology Review’s subscriber-only weekly email newsletter from editor in chief Mat Honan. Subscribers can sign up to receive it in their inboxes.