Where can you find lasers, electric guitars, and racks full of novel batteries, all in the same giant room? This week, the answer was the 2025 ARPA-E Energy Innovation Summit just outside Washington, DC.

Energy innovation can take many forms, and the variety in energy research was on display at the summit. ARPA-E, part of the US Department of Energy, provides funding for high-risk, high-reward research projects. The summit gathers projects the agency has funded, along with investors, policymakers, and journalists.

Hundreds of projects were exhibited in a massive hall during the conference, featuring demonstrations and research results. Here are four of the most interesting innovations MIT Technology Review spotted on site.

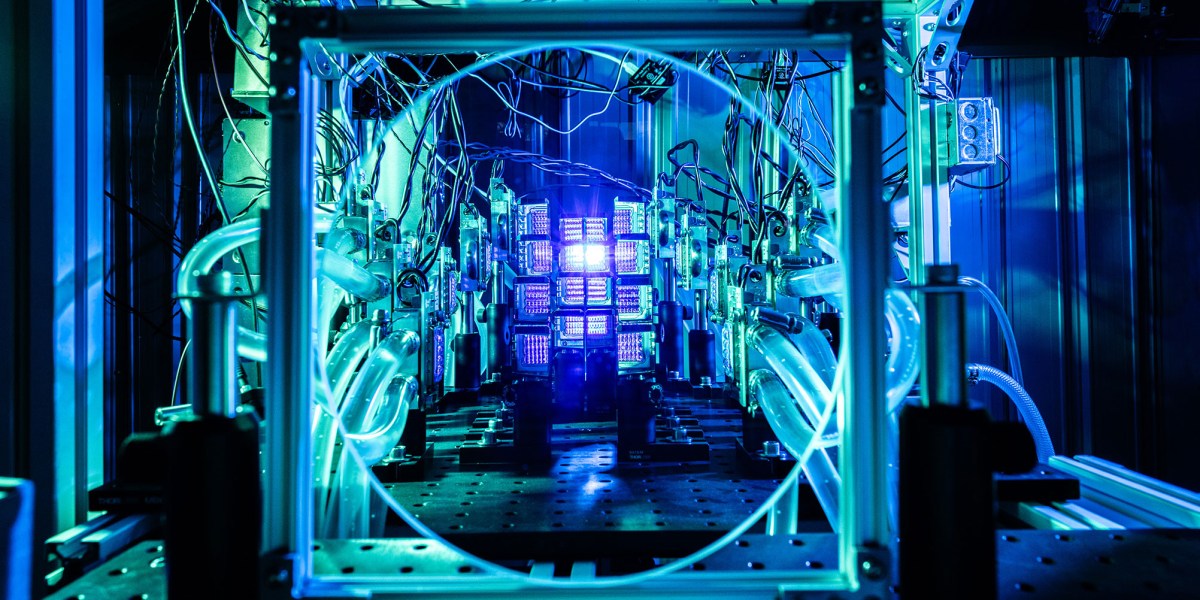

Steel made with lasers

Startup Limelight Steel has developed a process to make iron, the main component in steel, by using lasers to heat iron ore to super-high temperatures.

Steel production makes up roughly 8% of global greenhouse gas emissions today, in part because most steel is still made with blast furnaces, which rely on coal to hit the high temperatures that kick off the required chemical reactions.

Limelight instead shines lasers on iron ore, heating it to temperatures over 1,600 °C. Molten iron can then be separated from impurities, and the iron can be put through existing processes to make steel.

The company has built a small demonstration system with a laser power of about 1.5 kilowatts, which can process between 10 and 20 grams of ore. The whole system is made up of 16 laser arrays, each just a bit larger than a postage stamp.

The components in the demonstration system are commercially available; this particular type of laser is used in projectors. The startup has benefited from years of progress in the telecommunications industry that has helped bring down the cost of lasers, says Andy Zhao, the company’s cofounder and CTO.

The next step is to build a larger-scale system that will use 150 kilowatts of laser power and could make up to 100 tons of steel over the course of a year.

Rocks that can make fuel

The hunks of rock at a booth hosted by MIT might not seem all that high-tech, but someday they could help produce fuels and chemicals.

A major topic of conversation at the ARPA-E summit was geologic hydrogen. There’s a ton of excitement about efforts to find underground deposits of the gas, which can be used as a fuel across a wide range of sectors, including transportation and heavy industry.

Last year, ARPA-E funded a handful of projects on the topic, including one in Iwnetim Abate’s lab at MIT. Abate is among the researchers who are aiming not just to hunt for hydrogen, but to actually use underground conditions to help produce it. Earlier this year, his team published research showing that by using catalysts and conditions common in the subsurface, scientists can produce hydrogen as well as other chemicals, like ammonia. Abate cofounded a spinout company, Addis Energy, to commercialize the research, and the company has since also received ARPA-E funding.

All the rocks on the table, from the chunk of dark, hard basalt to the softer talc, could be used to produce these chemicals.

An electric guitar powered by iron nitride magnets

The sound of music drifted from the Niron Magnetics booth across nearby walkways. People wandering by stopped to take turns testing out the company’s magnets, in the form of an electric guitar.

Most high-powered magnets today contain neodymium, and demand for these magnets is set to skyrocket in the coming years, especially as the world builds more electric vehicles and wind turbines. Supplies could stretch thin, and the geopolitics are complicated because most of the supply comes from China.

Niron is making new magnets that don’t contain rare earth metals. Instead, Niron’s technology is based on more abundant materials: nitrogen and iron.

The guitar is a demonstration product. Today, electric guitars typically contain aluminum, nickel, and cobalt-based magnets that help translate the vibrations from steel strings into an electric signal that is broadcast through an amplifier. Niron made an instrument using its iron nitride magnets instead. (See photos of the guitar from an event last year here.)

Niron opened a pilot commercial facility in late 2024 that has the capacity to produce 10 tons of magnets annually. Since we last covered Niron, in early 2024, the company has announced plans for a full-scale plant, which will have an annual capacity of about 1,500 tons of magnets once it’s fully ramped up.

Batteries for powering high-performance data centers

The increasing power demand from AI and data centers was another hot topic at the summit, with server racks dotting the showcase floor to demonstrate technologies aimed at the sector. One stuffed with batteries caught my eye, courtesy of Natron Energy.

The company is making sodium-ion batteries to help meet power demand from data centers.

Data centers’ energy demands can be incredibly variable—and as their total power needs get bigger, those swings can start to affect the grid. Natron’s sodium-ion batteries can be installed at these facilities to help level off the biggest peaks, allowing computing equipment to run full out without overly taxing the grid, says Natron cofounder and CTO Colin Wessells.

Sodium-ion batteries are a cheaper alternative to lithium-based chemistries. They’re also made without lithium, cobalt, and nickel, materials that are constrained in production or processing. We’re seeing some varieties of sodium-ion batteries popping up in electric vehicles in China.

Natron opened a production line in Michigan last year, and the company plans to open a $1.4 billion factory in North Carolina.