What lies ahead for the data center industry in 2025? At Data Center Frontier, our eyes are always on the horizon, and we’re constantly talking with industry thought leaders to get their take on key trends. Our Magic 8 Ball prognostications did pretty well last year, so now it’s time to look ahead at what’s in store for the industry over the next 12 months, as we identify eight themes that stand to shape the data center business going forward. We’ll be writing in more depth about many of these trends, but this list provides a view of the topics that we believe will be most relevant in 2025.

A publication about the future frontiers of data centers and AI shouldn’t be afraid to put its money where its mouth is, and that’s why we used AI tools to help research and compose this year’s annual industry trends forecast. The article is meant to be somewhat encyclopedic, in the spirit of a digest, rather than a strictly prescriptive forecast, although we try to go there as well. The piece also includes some dark horse trends. Do we think immersion cooling is going to explode this year, suddenly giving direct-to-chip a run for its money? Not exactly. But given the enormous and rapidly expanding parameters of the AI and HPC boom, could the immersion cooling sector see some breakthroughs this year? Seems reasonable.

Ditto for the trends forecasting natural gas and quantum computing advancements. Such topics are clearly on the horizon and highly visible on the data center frontier, so our thinking was that we’d better learn more about them. As recent history bears out, data center industry trends that start at the bleeding edge (pun intended, and also on the list) sometimes have a way of moving to the forefront, especially when driven by AI, which of course was last year’s overarching trend as defined by DCF founder Rich Miller. Exhibits A and B: liquid cooling and nuclear power. Most would agree they’re now at industry center stage, after years lurking in the wings. That shift is due to the AI boom, which will probably define the rest of the decade while the industry sorts out how to adapt, a question we hope this article also helps inform.

This is a long piece. Be sure to scroll to the bottom to see the full list, which extends to 15 this year, with some essential, red-hot “honorable mentions” included at the end. This was due to the volume of our recent and ongoing editorial coverage in areas like nuclear energy, particularly SMRs and microreactors currently in development, which publications like DCF can’t fail to keep in view throughout the year.

1. Truth and Consequences Escalate for Data Center and AI Energy Demand

Heading into 2025, the data center industry faces looming challenges related to energy consumption. As you might have heard, the proliferation of AI technologies is driving a massive increase in data center energy demand. In a forecast published last November, Deloitte projected that global data center electricity usage could nearly double, from 536 terawatt-hours (TWh) in 2025 to approximately 1,065 TWh by 2030. Concerns about grid reliability are also rising with the AI tsunami. Recent Bloomberg analysis, as (rather tartly) cited by Business Insider, suggests that the intensified energy requirements of data centers are already straining existing electrical grids, potentially affecting power quality and reliability for surrounding communities. The analysis cites instances in which the high energy consumption of AI data centers has led to power quality issues, posing risks to residential electronics.
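As a back-of-the-envelope check, the annual growth rate implied by Deloitte’s two endpoints can be computed directly. The Python sketch below is our own illustration (the function name is hypothetical), and it assumes smooth compound growth between 2025 and 2030, which the forecast itself does not necessarily claim.

```python
def implied_cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate implied by a starting and an ending value."""
    if start <= 0 or years <= 0:
        raise ValueError("start must be positive and years must be at least 1")
    return (end / start) ** (1 / years) - 1


# Deloitte endpoints quoted above: 536 TWh in 2025, ~1,065 TWh in 2030
rate = implied_cagr(536, 1_065, 2030 - 2025)
print(f"Implied growth: {rate:.1%} per year")  # roughly 14.7% per year
```

In other words, "nearly doubling" in five years corresponds to demand growing by about 15% every year.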

Data centers’ need for significant land and energy resources in the race to build out AI digital infrastructure is also producing conflicts with other urban development needs. For example, as recently explored by the Wall Street Journal, rapid data center growth in Atlanta has been criticized for consuming land and power at the expense of housing and retail development, prompting local regulatory interventions. ProPublica reporting from last summer describes how other states are handling the data center industry’s impact. Globally, local regulatory and policy initiatives now often trend toward placing conditions on and curbing data center growth: in response to the sector’s looming energy demands and fears of associated environmental impacts, policymakers are implementing stricter regulations on facility operations. For instance, as AP News recently noted, Ireland has halted new data center developments near Dublin until 2028 to manage energy consumption and ensure grid stability. (And of course, we’ve been tracking a similar situation in Virginia, home of the world’s largest data center market in Ashburn’s Data Center Alley.)

Elsewhere in Europe, Amsterdam’s data center moratorium remains in effect (no doubt redounding to the sector’s vibrancy in nearby Germany and France). Meanwhile in Asia, the pace and urgency of data center demand last year saw industry hotspot Singapore thaw its 2019 industry moratorium, with 300 MW of additional power being made available “in the short term,” while more capacity could be allocated to companies that use green energy. Reporting by Capacity Media noted that the latter will be a challenge, as Singapore gets over 90% of its energy from natural gas (hold that thought), and earning green credentials without relying on carbon credits has been difficult for operators trying to meet sustainability goals. It will be interesting to see in 2025 whether other industry moratoriums are rescinded or altered, or whether new ones arise, in the face of the staggering demand for data centers.

Meanwhile, the data center industry’s environmental and sustainability conundrums are deepening. The industry’s efforts to decarbonize will be complicated by the staggering growth in power demand, which could outpace the expansion of renewable energy infrastructure. An October 2024 analysis from S&P Global warned that the surge in energy consumption by data centers, particularly those powered by non-renewable sources, raises environmental concerns. “Data center emissions could nearly double by 2030 as growing energy demands will likely rely on gas-fired power generation, which will slow down wider grid decarbonization efforts,” stated the analysis, adding:

“Major tech players are seeking low-carbon power and have ambitious decarbonization goals but sourcing will prove challenging given the speed and size of increases in their energy consumption. While carbon emission concerns are unlikely to be a major impediment to near-term tech sector growth, significant long-term increases in energy needs could lead to challenges, including greater environmental scrutiny from regulators, investors, and other stakeholders […] S&P Global Ratings’ estimates that U.S data center power demand will increase at 12% per year until the end of 2030. That could double the tech sectors’ current carbon emissions as constraints on renewable generation growth, coupled with data centers’ requirement for stable power, mean about 60% of new demand in the U.S, to 2030, could be met by natural gas.”

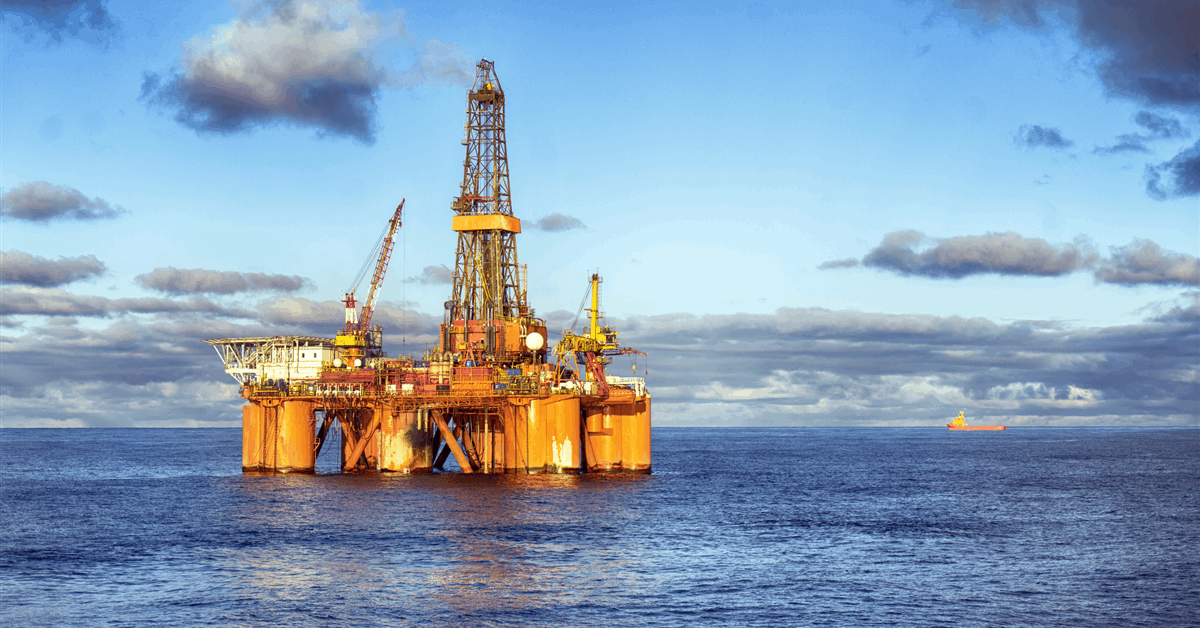

2. The (Un)Easy Button: Natural Gas Bridges The Data Center Energy Revolution

Going forward in 2025, natural gas generation will continue to serve as a bridge toward hydrogen-powered and nuclear-powered data centers, a bridge that compromises on decarbonization for the time being. Natural gas’s lower carbon intensity stems from its molecular composition, combustion efficiency, and cleaner emissions profile compared to coal and oil. Even so, achieving its full climate benefits requires minimizing methane leakage and integrating it into a broader strategy for transitioning to renewable energy.

As noted in recent Reuters reporting, in a worst-case scenario beginning this year, hyperscale and colocation providers facing unprecedented energy demands driven by AI and cloud computing could see data center development significantly outpace the growth of renewable energy infrastructure. Hyperscalers like AWS, Microsoft, and Google could struggle to meet ambitious sustainability targets (e.g., carbon-free energy by 2030) and might be forced to rely more on fossil fuels such as natural gas, increasing their carbon footprints and attracting regulatory penalties globally or public backlash at home. Smaller colocation operators might find it difficult to procure clean energy due to slow growth in renewables, leading to higher operational costs or the loss of customers seeking greener alternatives. As a result, natural gas is growing more attractive for on-site primary and backup power generation at data centers as grids manage intermittency and a potential shortfall of renewables. Yes, the industry’s natural gas lock-in may delay full decarbonization, prolonging dependence on fossil fuels. But the fact is that the U.S. data center industry is already relying significantly more on natural gas to meet escalating energy demands driven by the expansion of cloud computing and artificial intelligence (AI) applications. Key natural gas developments for the data center industry in recent months have included:

- Increased Natural Gas Demand: As cited by Data Center Dynamics, analysts from S&P Global project that data centers could require around 3 billion cubic feet per day (bcf/d) of natural gas by 2030, potentially rising to 6 bcf/d depending on the energy mix. This surge is attributed to the need for reliable, continuous power to support data center operations.

- Pipeline Infrastructure Expansion: Energy industry analyst Enverus noted in Fall 2024 that midstream energy companies are observing heightened interest from data center operators seeking direct natural gas pipeline connections for on-site generation. For example, TC Energy reported that nearly 60% of the 300 data centers under development in the U.S. are within 15 miles of its pipeline systems, indicating a strategic alignment between data center locations and natural gas infrastructure.

- Dedicated Natural Gas Power Plants: Meanwhile, last month ExxonMobil announced plans to build a 1.5-gigawatt natural gas-fired power plant to supply energy exclusively to data centers, an ‘Exhibit A’-type initiative that underscores the industry’s move toward securing dedicated energy sources to ensure operational reliability.

- Discernible Impact on Energy Markets: Oil and gas industry reporting suggests that growing energy consumption by data centers is influencing natural gas markets. Moody’s Ratings posits that the sector’s substantial power requirements, alongside increasing liquefied natural gas (LNG) exports, could drive natural gas prices above $3 in 2025.

- Uneasy Environmental Considerations: As Reuters recently reported, relying on natural gas to power near-term AI and cloud computing growth presents challenges to holistic decarbonization efforts. The expansion of data centers, coupled with the current pace of renewable energy deployment, may delay the worldwide transition to cleaner energy sources, raising concerns about the industry’s environmental impact.
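To put the S&P Global volume estimates of 3 to 6 bcf/d in electrical terms, the sketch below converts a daily gas volume into average generating output. Both conversion factors are our assumptions, not S&P’s: a heat content of about 1,037 Btu per cubic foot (close to typical U.S. pipeline gas) and a heat rate of 7,000 Btu/kWh, in the range of modern combined-cycle plants. It also assumes all of the gas is burned for power.

```python
# Assumed conversion factors (ours, not S&P Global's):
BTU_PER_CUBIC_FOOT = 1_037      # approximate heat content of pipeline natural gas
HEAT_RATE_BTU_PER_KWH = 7_000   # fuel burned per kWh generated, combined-cycle range


def bcfd_to_average_gw(bcf_per_day: float,
                       btu_per_cf: float = BTU_PER_CUBIC_FOOT,
                       heat_rate: float = HEAT_RATE_BTU_PER_KWH) -> float:
    """Average electric output (GW) if this daily gas volume were burned for power."""
    btu_per_day = bcf_per_day * 1e9 * btu_per_cf
    kwh_per_day = btu_per_day / heat_rate
    return kwh_per_day / 24 / 1e6  # kWh/day -> average kW -> GW


for bcfd in (3, 6):
    print(f"{bcfd} bcf/d ~ {bcfd_to_average_gw(bcfd):.1f} GW of average generation")
```

Under these assumptions, 3 bcf/d works out to roughly 18.5 GW of round-the-clock generation and 6 bcf/d to about 37 GW; a less efficient fleet (a higher heat rate) would yield less.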

The bottom line is that the data center industry’s increasing dependence on natural gas to meet its energy needs carries significant implications for energy infrastructure, market dynamics, and environmental sustainability. That said, it should be noted that natural gas is considered less carbon-intensive than other fossil fuels, such as coal and oil, for several technical reasons. According to CO₂ Emissions Data provided by the U.S. Energy Information Administration (EIA), natural gas consists primarily of methane (CH₄), which has a higher hydrogen-to-carbon ratio than coal or oil. As a result, burning natural gas releases less carbon dioxide (CO₂) per unit of energy than burning coal or oil: natural gas produces ~117 pounds of CO₂ per million British thermal units (MMBtu), while oil yields ~160 pounds of CO₂ per MMBtu, and coal produces ~210 pounds of CO₂ per MMBtu. Natural gas also burns more cleanly and efficiently than coal or oil, with a combustion process that releases fewer pollutants, such as sulfur dioxide (SO₂) and particulate matter, in addition to producing less CO₂. Per the International Energy Agency’s (IEA) recent Energy Efficiency Data, advanced natural gas power plants, such as combined-cycle gas turbine (CCGT) plants, can achieve thermal efficiencies of up to 60%, compared to coal plants, which typically operate at 33-40% efficiency.
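Combining the EIA emission factors with the IEA efficiency figures quoted above shows why plant efficiency matters as much as fuel chemistry. This minimal sketch converts thermal efficiency into a heat rate (using the standard 3,412 Btu per kWh) and multiplies by the fuel’s emission factor. It counts combustion CO₂ only and ignores methane leakage and other lifecycle emissions.

```python
# Emission factors quoted above, in lb CO2 per MMBtu of fuel burned
EMISSION_FACTORS = {"natural gas": 117, "oil": 160, "coal": 210}
BTU_PER_KWH = 3_412  # energy content of one kWh of electricity


def lb_co2_per_mwh(fuel: str, efficiency: float) -> float:
    """Combustion CO2 per MWh of electricity for a plant of the given thermal efficiency."""
    heat_rate_mmbtu_per_mwh = BTU_PER_KWH * 1_000 / efficiency / 1e6
    return EMISSION_FACTORS[fuel] * heat_rate_mmbtu_per_mwh


gas_ccgt = lb_co2_per_mwh("natural gas", 0.60)
coal_best = lb_co2_per_mwh("coal", 0.40)
coal_worst = lb_co2_per_mwh("coal", 0.33)
print(f"CCGT at 60%: {gas_ccgt:,.0f} lb CO2/MWh")
print(f"Coal at 40-33%: {coal_best:,.0f}-{coal_worst:,.0f} lb CO2/MWh")
```

With these inputs, a 60%-efficient CCGT comes out near 665 lb CO₂/MWh versus roughly 1,790-2,170 lb CO₂/MWh for coal, or about a third of coal’s intensity.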

Admittedly, while natural gas emits less CO₂ than coal and oil when burned, methane leakage during production and transportation can negate its climate benefits. Methane is a potent greenhouse gas, with a global warming potential approximately 25 times greater than CO₂ over a 100-year period. Efforts to minimize methane leakage and improve monitoring are critical to ensuring natural gas remains a relatively cleaner fossil fuel. Methane emissions primarily occur during the extraction, processing, and transportation of natural gas; at the point of combustion (e.g., in power plants), methane is almost entirely converted into CO₂ and water vapor. The Environmental Defense Fund’s (EDF) Methane Emissions and Control practice suggests that advanced technologies, such as leak detection and repair (LDAR) systems and improved pipeline infrastructure, can significantly reduce methane leakage during production and distribution.
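To show how leakage erodes that advantage, the sketch below adds the CO₂-equivalent of upstream methane losses to the 117 lb/MMBtu combustion figure, using the 100-year GWP of 25 cited above. It is a simplification under stated assumptions: the gas is treated as pure methane (so burning 1 lb of CH₄ yields 44/16 lb of CO₂), and the leak rate is expressed as a fraction of gas produced.

```python
GWP100_METHANE = 25               # 100-year global warming potential cited above
CO2_PER_LB_CH4 = 44 / 16          # CH4 + 2 O2 -> CO2 + 2 H2O, by molar mass
COMBUSTION_LB_CO2_PER_MMBTU = 117


def lifecycle_co2e_per_mmbtu(leak_rate: float) -> float:
    """Combustion CO2 plus CO2e of methane leaked upstream, per MMBtu delivered.

    Assumption: natural gas is treated as pure methane, and leak_rate is the
    fraction of produced gas lost before it reaches the burner.
    """
    if not 0 <= leak_rate < 1:
        raise ValueError("leak_rate must be in [0, 1)")
    ch4_burned_lb = COMBUSTION_LB_CO2_PER_MMBTU / CO2_PER_LB_CH4
    ch4_leaked_lb = ch4_burned_lb * leak_rate / (1 - leak_rate)
    return COMBUSTION_LB_CO2_PER_MMBTU + GWP100_METHANE * ch4_leaked_lb


for leak in (0.0, 0.01, 0.02, 0.03):
    print(f"{leak:.0%} leakage: {lifecycle_co2e_per_mmbtu(leak):.0f} lb CO2e/MMBtu")
```

Under these assumptions, each percentage point of leakage adds roughly 11 lb CO₂e/MMBtu, so a 3% leak rate raises the effective figure from 117 to about 150. Using a 20-year GWP for methane would make the penalty considerably larger.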

As further pointed out in a paper from the MIT Energy Initiative, natural gas is often considered a “bridge fuel” in the transition to renewable energy because it integrates well with intermittent renewables like wind and solar. Gas turbines can quickly ramp up or down to balance grid demand, reducing reliance on coal- or oil-based backup power. This flexibility supports decarbonization while renewable energy capacity scales. Also, as noted in a recent International Journal of Greenhouse Gas Control paper titled “Advances in CCS for Natural Gas Plants,” natural gas plants are more compatible with carbon capture and storage (CCS) technology due to their lower volumetric flow rates of flue gas compared to coal plants, which makes their CO₂ capture more efficient and cost-effective.

Natural gas also plays a significant role as a stepping stone toward hydrogen energy for several technical reasons. Natural gas infrastructure, including pipelines and storage systems, can be adapted to transport hydrogen, reducing the need for entirely new infrastructure. Meanwhile, steam methane reforming is the dominant method for producing hydrogen today, where natural gas (methane, CH₄) reacts with steam (H₂O) at high temperatures to produce hydrogen (H₂) and carbon monoxide (CO). This process is followed by a water-gas shift reaction, converting CO into additional H₂ and CO₂. When combined with carbon capture and storage (CCS), the process can produce “blue hydrogen,” a low-carbon form of hydrogen, and currently achieves about 65-75% efficiency, making it an efficient starting point for hydrogen production, according to the DOE’s Hydrogen Production Processes guidelines. The National Renewable Energy Laboratory’s (NREL) Hydrogen Blending Research found that existing natural gas power plants can blend up to 20% hydrogen by volume with natural gas, reducing carbon emissions without requiring significant modifications to plant equipment. Research is underway to increase this percentage.
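One nuance worth quantifying: blending limits are specified by volume, but hydrogen carries far less energy per cubic foot than natural gas, so a 20% volume blend displaces much less than 20% of the fuel’s energy. The sketch below assumes approximate higher heating values of 325 Btu/scf for hydrogen and 1,037 Btu/scf for natural gas; these are rounded values of our choosing, not figures from NREL.

```python
# Approximate higher heating values per standard cubic foot (assumed, rounded)
H2_BTU_PER_SCF = 325
NATGAS_BTU_PER_SCF = 1_037


def hydrogen_energy_share(h2_volume_fraction: float) -> float:
    """Fraction of a blend's energy supplied by hydrogen, given its volume fraction."""
    h2_energy = h2_volume_fraction * H2_BTU_PER_SCF
    gas_energy = (1 - h2_volume_fraction) * NATGAS_BTU_PER_SCF
    return h2_energy / (h2_energy + gas_energy)


share = hydrogen_energy_share(0.20)
# For the same power output, combustion CO2 falls roughly in proportion to the
# share of energy no longer supplied by natural gas.
print(f"20% H2 by volume supplies ~{share:.1%} of the blend's energy")
```

Under these assumptions, a 20% blend supplies only about 7% of the energy, so combustion CO₂ falls by roughly 7% for the same power output.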

Finally, as the deployment of nuclear energy, particularly from small modular reactors (SMRs) and microreactors, faces challenges related to regulatory approvals, construction timelines, and fuel availability, natural gas stands to fill the energy gap during this transition. The U.S. Energy Information Administration’s (EIA) Natural Gas Plant Deployment guidance notes that natural gas power plants can be built relatively quickly (typically within 12-24 months) compared to SMRs and microreactors, which many expect will require 5 to 20 years to reach widespread deployment due to licensing and construction complexities.

The speed with which natural gas plants can be deployed enables data center providers to meet immediate power demands for energy-intensive AI and advanced HPC workloads. As for working alongside renewables, the IEA, in explaining the role of gas in flexible power systems, also notes that natural gas provides dispatchable energy, meaning it can ramp up or down quickly to balance intermittent renewable sources like wind and solar. Such flexibility is crucial for hyperscale and colocation data centers that require consistent power reliability for AI workloads and cloud services.
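The balancing role described here can be illustrated with a toy dispatch model: each hour, a gas fleet fills whatever gap remains between demand and renewable output, but it can only change its output by a fixed amount per hour. Everything in the sketch is hypothetical (the function, the load, the solar profile, and the 150 MW/h ramp limit), and it omits real-world constraints such as minimum stable load and unit commitment.

```python
def dispatch_gas(demand_mw, renewables_mw, ramp_limit_mw, start_mw):
    """Hour-by-hour output of one aggregated gas fleet filling the gap between
    demand and renewable output, subject to an hourly ramp limit.

    Returns two lists in MW: gas output, and unmet demand (shortfall).
    Output can exceed the gap when the fleet cannot ramp down fast enough;
    in practice that surplus would have to be curtailed or stored.
    """
    gas_output, shortfall = [], []
    previous = start_mw
    for demand, renewable in zip(demand_mw, renewables_mw):
        gap = max(demand - renewable, 0)
        # Keep output within +/- ramp_limit_mw of the previous hour's level.
        output = min(max(gap, previous - ramp_limit_mw), previous + ramp_limit_mw)
        output = max(output, 0)  # a plant cannot produce negative power
        gas_output.append(output)
        shortfall.append(max(gap - output, 0))
        previous = output
    return gas_output, shortfall


# Hypothetical numbers: flat 500 MW load, a midday solar bump, 150 MW/h ramp limit
load = [500] * 7
solar = [0, 100, 300, 450, 300, 100, 0]
gas, unmet = dispatch_gas(load, solar, ramp_limit_mw=150, start_mw=500)
print("gas MW:  ", gas)
print("unmet MW:", unmet)
```

In this example the fleet cannot ramp down fast enough at midday (producing a small surplus) and cannot ramp back up fast enough in the second-to-last hour, leaving 50 MW unmet. A sufficiently fast ramp limit removes both effects, which is why ramping speed is central to gas’s role alongside renewables.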

It remains to be seen whether dependence on natural gas could tarnish the environmental credentials of hyperscale players, affecting customer trust and competitive positioning. Meanwhile, could rising natural gas prices accompanied by potential supply chain disruptions disproportionately affect smaller colocation providers with less negotiating power? To find out, we’ll be listening carefully to what the data center industry says in 2025.

3. Rising Data Center AI Infrastructure Integration Meets GPUaaS Uncertainty

Hyperscalers are integrating AI at every level of the data center, from managing energy efficiency and predictive maintenance to building specialized AI infrastructure for machine learning workloads. They are also heavily investing in AI chip design (e.g., AWS Inferentia, Google TPU, etc.) to optimize their cloud offerings. Meanwhile, most colocation providers have adopted AI more cautiously, focusing on operational efficiency and tenant support. Colos don’t usually design AI hardware, but they may offer AI-ready spaces with optimized power and cooling capabilities for enterprise clients deploying their own AI systems.

We expect AI to become integral to data center operations, enhancing efficiency through predictive maintenance, workload optimization, and energy management. At the same time, growing demand for AI capabilities among users and customers is driving the development of specialized infrastructure within data centers to serve them. The roadmap for data center generative AI architecture in 2025 builds on significant 2024 breakthroughs in GPUs, CPUs, connectivity, and rack architectures from providers such as NVIDIA, Dell, Supermicro, and Intel, and from cloud hyperscalers including Microsoft, Google, Meta, and AWS.

The roadmap for data center AI architecture in 2025 will be characterized by significant enhancements in processing power, connectivity, and integrated solutions, all aimed at supporting the next generation of AI technologies. Anticipated progress includes:

- GPU and CPU Innovations: NVIDIA’s introduction of the Blackwell GPU architecture in 2024 sets up the industry for substantial performance enhancements in AI workloads. These GPUs are designed to support trillion-parameter-scale AI models, offering improved efficiency and scalability. In terms of AI-optimized CPUs, Intel has been developing CPUs with integrated AI acceleration, aiming to enhance performance for AI inference tasks. These processors are also expected to play a crucial role in handling data center AI workloads efficiently.

- Connectivity Enhancements and High-Bandwidth Networking: The increasing demand for AI workloads necessitates advancements in high-bandwidth data center networking. Companies like Synopsys have introduced 1.6T Ethernet IP offerings, enabling faster data transfer rates and reducing latency, which are critical for AI applications. Also, the evolution of NVIDIA’s NVLink technology will continue to provide higher bandwidth and improved connectivity between GPUs, facilitating efficient scaling of AI models across multiple processors.

- Advancement of AI Rack Architectures: Dell Technologies has been collaborating with NVIDIA to develop integrated AI solutions, such as the Dell AI Factory, which combines compute, storage, and networking tailored for AI workloads. Such integration simplifies deployment and enhances performance for data center operators. Meanwhile, Supermicro has been focusing on modular AI rack designs that allow data centers to scale their AI capabilities efficiently. These designs offer flexibility in configuring compute and storage resources to meet varying AI workload demands.

- Major Hyperscaler Initiatives: Microsoft, which recently framed the roughly $80 billion it plans to spend on AI data center development this fiscal year alone as a “golden opportunity for American AI,” has been investing heavily in AI infrastructure, including the development of AI-optimized data centers and the integration of AI capabilities across its cloud services. This includes the deployment of specialized hardware to accelerate AI workloads. Google continues to advance its Tensor Processing Units (TPUs), custom-designed for AI workloads, and Arm-based Axion CPUs for cloud customers. These chips are integral to Google’s AI and cloud services and are expected to see further enhancements in performance and efficiency. Meta continues to focus on developing AI infrastructure to support its vast social platforms. This includes investments in custom hardware and data center architectures optimized for AI processing. Finally, Amazon Web Services (AWS) continues to expand its AI capabilities to meet growing customer demand by offering a range of AI and machine learning services, underpinned by a robust infrastructure that includes custom AI chips like its Inferentia and Trainium products.
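Why trillion-parameter models push vendors toward larger GPU domains and faster links like NVLink becomes clear from simple memory arithmetic. The sketch below computes the memory needed just to hold model weights at several numeric precisions and the minimum number of accelerators that implies. The 192 GB per-device figure is a hypothetical round number, not a specific product spec, and the estimate ignores KV cache, activations, and (for training) optimizer state, all of which raise the real requirement.

```python
import math


def weight_memory_gb(parameters: float, bytes_per_parameter: float) -> float:
    """Memory needed just to hold model weights, in GB (1 GB = 1e9 bytes)."""
    return parameters * bytes_per_parameter / 1e9


def min_accelerators(parameters: float, bytes_per_parameter: float,
                     memory_per_device_gb: float) -> int:
    """Lower bound on devices needed to hold the weights alone."""
    return math.ceil(weight_memory_gb(parameters, bytes_per_parameter) / memory_per_device_gb)


ONE_TRILLION = 1e12
ASSUMED_DEVICE_MEMORY_GB = 192  # hypothetical per-accelerator memory, illustration only

for label, nbytes in (("FP16/BF16", 2), ("FP8", 1), ("FP4", 0.5)):
    gb = weight_memory_gb(ONE_TRILLION, nbytes)
    devices = min_accelerators(ONE_TRILLION, nbytes, ASSUMED_DEVICE_MEMORY_GB)
    print(f"{label}: {gb:,.0f} GB of weights -> at least {devices} devices")
```

Even at 4-bit precision, a trillion-parameter model’s weights span at least three devices in this sketch (eleven at 16-bit), so inter-GPU bandwidth becomes part of the compute problem.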

Looking ahead, the convergence of these advancements is expected to lead to data centers that are more efficient, scalable, and capable of handling increasingly complex AI workloads. The collaboration between hardware vendors and hyperscalers will be pivotal in driving innovation and meeting the evolving demands of AI applications.

Returning to the theme of AI-driven data center automation and robotics, this is definitely an innovation trend to watch this year. Key data center industry players including Google (with its DeepMind AI for energy efficiency), Schneider Electric, IBM, and Juniper Networks, as well as government agencies such as the National Institute of Standards and Technology (NIST), which is working on AI standards, are investing in AI-based platforms for automating everything from energy management to predictive maintenance, enhancing uptime and reducing operational costs. For starters, such automation could improve Power Usage Effectiveness (PUE) in ways not yet anticipated, enabling more sustainable and autonomous data center operations.
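Since PUE is the yardstick for much of this automation, a minimal sketch of the calculation may help: PUE is total facility energy divided by the energy delivered to IT equipment, so 1.0 is the theoretical ideal. The meter readings below are hypothetical, chosen only to show how trimming cooling and power-conversion overhead moves the metric.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


# Hypothetical monthly readings before and after an AI-driven cooling optimization
it_load_kwh = 1_000_000                               # servers, storage, network gear
before = pue(it_load_kwh + 450_000, it_load_kwh)      # + cooling, power conversion, lighting
after = pue(it_load_kwh + 350_000, it_load_kwh)
print(f"PUE before: {before:.2f}, after: {after:.2f}")
print(f"Overhead energy avoided: {(before - after) * it_load_kwh:,.0f} kWh")
```

In this example, a drop from 1.45 to 1.35 on a 1,000 MWh monthly IT load corresponds to 100 MWh of avoided overhead.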

However, when it comes to AI silicon expenditures, the risks of GPU overbuilding for LLMs and of rapid hardware depreciation in the fast-expanding sphere of AI data center computing may need attention this year, especially as infrastructure growth accelerates and thought leaders assess the implications. Key risks here could include:

- Depreciation of Specialized AI Hardware: GPU hardware, especially high-end models like NVIDIA’s H100 or A100, is being acquired at unprecedented rates to power AI workloads. However, the useful life of AI GPUs could be shorter than anticipated due to rapid hardware innovation or stagnation in AI application growth. If new generations of GPUs or specialized accelerators (e.g., purpose-built chips like TPUs or neuromorphic processors) make existing hardware obsolete, that hardware could depreciate faster than planned, leaving operators with stranded or underutilized assets. Operators overcommitted to GPU-heavy deployments could find their capital investments unsustainable as hardware loses value faster than it can generate returns. Data center industry thought leaders are starting to warn against the “tech debt” of over-provisioning GPUs for generative AI use cases without diversification into broader, longer-term workloads.

- Energy and Resource Bottlenecks: As AI GPUs are extremely power-intensive, overbuilding GPU clusters could overwhelm existing power and cooling infrastructures, potentially creating grid instability and environmental backlash. Energy costs could skyrocket, making AI deployments economically untenable, especially for companies relying on low-margin AI services or hosting. Public perception of AI infrastructure could trend increasingly negative as excessive power consumption competes with societal energy needs, triggering regulatory and/or community resistance. As energy efficiency is becoming a critical competitive factor, some industry analysts suggest the need for AI-specific sustainability benchmarks, fearing current growth trends may outpace the deployment of efficient cooling and power systems.

- Overcapacity and Market Saturation: The unprecedented demand for LLMs in 2023–2024 prompted a rush to build AI-focused data centers. However, if AI demand plateaus or enterprises scale back on LLM deployment (e.g., due to high costs or limited ROI), the industry may face significant overcapacity. Analysts warn that overbuilt infrastructure could lead to widespread financial losses as supply outstrips demand, causing a “GPU glut” similar to earlier semiconductor market cycles. Smaller players or late entrants to the market may struggle to compete, leading to consolidation or exit. Some thought leaders foresee this as a looming “AI winter” risk, where expectations for continuous AI innovation and market demand outpace real-world use cases.
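The depreciation risk in the first bullet is easy to see with straight-line accounting. In the sketch below, the $100 million cluster cost and the 6-, 4-, and 3-year useful lives are hypothetical; the point is that shortening the assumed life raises the annual expense and cuts the book value that remains if the hardware is retired or written down early.

```python
def straight_line_schedule(cost: float, useful_life_years: int, salvage: float = 0.0):
    """Year-end book values under straight-line depreciation."""
    annual = (cost - salvage) / useful_life_years
    return [cost - annual * year for year in range(1, useful_life_years + 1)]


CLUSTER_COST = 100_000_000  # hypothetical GPU cluster cost in dollars

for life in (6, 4, 3):
    schedule = straight_line_schedule(CLUSTER_COST, life)
    print(f"{life}-year life: ${CLUSTER_COST / life:,.0f}/yr expense, "
          f"book value after 2 years ${schedule[1]:,.0f}")
```

Under these numbers, after two years the cluster still carries about $66.7 million on the books with a 6-year life, but only about $33.3 million with a 3-year life.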

To help alleviate such risks, data centers may need to focus on ROI and avoid overcommitting to GPU-based infrastructure unless there’s clear, sustainable demand. They should invest in flexibility and consider modular or multi-use designs for data centers to pivot between workloads. Data centers might also have to diversify AI infrastructure, looking beyond GPUs to emerging hardware like ASICs or RISC-V-based accelerators. Ultimately, operators will need to cultivate strategic foresight and adaptability in navigating AI infrastructure growth amidst an unpredictable and still essentially brand-new market.

Finally, in 2025, the data center industry should also watch for the emergence of revolutionary chip architectures beyond GPUs. Innovations presently being developed by key semiconductor industry players such as NVIDIA, Intel, AMD, Cerebras Systems, and Lightmatter to leapfrog current GPU/CPU performance limitations include custom AI accelerators, neuromorphic chips, and photonic processors. Such new chips are purpose-built for AI inference and training at scale. Government research agencies and programs, such as DARPA with its AI Next campaign and the European Processor Initiative (EPI), are also examining the potential of new chip technologies to drive down energy costs per computation and enable new AI capabilities previously thought unattainable.

4. It Takes a MegaCampus: Hyperscale Growth Continues At Full Speed, Reliant on Utility Partnerships

To say the least, the hyperscale data center sector in the United States experienced significant growth in 2024, driven as we know by escalating demands for AI capabilities, cloud services, and digital infrastructure transformation. This trend should continue into 2025, presenting both opportunities and challenges for the industry.

The foundation of hyperscale data center growth in 2024 included significantly increased investment and capacity expansion. As a benchmark, Synergy Research reported in early 2024 that the number of large data centers operated by hyperscale providers surpassed 1,000 globally, with half of these facilities located in the U.S. According to CBRE, hyperscale supply in primary U.S. markets increased by 10% (515.0 megawatts) in the first half of 2024 and by 24% (1,100.5 MW) year-over-year, reflecting significant capacity expansion. Meanwhile, McKinsey analysis from last Fall said the demand for AI-ready data center capacity is projected to rise at an average rate of 33% annually between 2023 and 2030, with AI workloads expected to constitute around 70% of total data center demand by 2030.
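The CBRE and McKinsey percentages can be translated back into absolute terms with simple arithmetic. This sketch is a rough check rather than CBRE’s own inventory data: because the published percentages are rounded and the exact base periods are not given here, the implied inventories are approximate.

```python
def implied_base(increase: float, growth_rate: float) -> float:
    """Starting inventory implied by an absolute increase and its percentage growth."""
    return increase / growth_rate


# CBRE figures quoted above (MW of hyperscale supply in primary U.S. markets)
h1_base = implied_base(515.0, 0.10)      # first-half 2024 increase of 10%
yoy_base = implied_base(1_100.5, 0.24)   # year-over-year increase of 24%
print(f"Implied inventory before the first-half increase: ~{h1_base:,.0f} MW")
print(f"Implied inventory a year earlier: ~{yoy_base:,.0f} MW")

# McKinsey: 33% average annual growth in AI-ready demand, 2023 to 2030
growth_multiple = 1.33 ** (2030 - 2023)
print(f"33%/yr over 2023-2030 compounds to ~{growth_multiple:.1f}x")
```

The implied base is roughly 5,150 MW for the first-half figure and about 4,585 MW a year earlier, and 33% annual growth sustained from 2023 to 2030 would multiply AI-ready demand about 7.4-fold.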

A number of notable megacampus deals announced between 2023 and 2024 reflect the industry’s rapid expansion, both in the U.S. hyperscale market and beyond. In December 2023, Blackstone and Digital Realty announced a $7 billion joint venture to develop AI-ready data centers in Frankfurt, Paris, and Northern Virginia. In the widening arena of gigawatt-scale campuses, DCF’s Rich Miller reported last year on how Texas-based Lancium is planning five new campuses, each with 2.5 GW of power capacity, aiming to scale up to 6 GW to support AI workloads. A 200 MW planned AI data center campus from Crusoe in Abilene is a highlight of this development. Also in Texas, Prime Data Centers is planning a $1.3 billion data center campus in Caldwell County, as reported by Data Center Knowledge in March 2024. And in August 2024, Panattoni Development revealed its intention to enter the data center market, announcing plans to develop 1 GW of capacity within five years, with significant investments anticipated in the U.S.

Significant Reuters reporting last year also tabulated how U.S. utilities are planning to serve booming data center demand as AI computing takes root. Of course, 2024 was the year that re-energized nuclear power for data centers, and a pair of huge nuclear deals were a centerpiece. Talen Energy announced a deal to supply electricity to AWS and to sell it its 960 MW data center campus in Pennsylvania. Also in that state, Constellation Energy signed an exclusive deal with Microsoft last year to restart one of the units at the Three Mile Island nuclear plant. The utility plans to provide 835 MW of power to the cloud giant’s data centers in a deal that would also mark the first-ever restart of a U.S. nuclear power plant after it was shut down.

Other recent deals between utilities and data centers, as cited by Reuters and others, illustrate the substantial investment and rapid growth in the U.S. hyperscale sector during 2023-24 as AI and cloud computing demands escalate. They include the following:

- Meta has partnered with Entergy to power its new $10 billion AI data center in northeast Louisiana. Entergy plans to deploy three new natural gas power plants, providing over 2,200 megawatts of power over 15 years, pending approval by the Louisiana Public Service Commission.

- Google has launched a partnership with clean energy developer Intersect Power and investment firm TPG, planning to invest $20 billion in renewable power infrastructure by 2030. This initiative aims to support the development of new data center capacity in the U.S., powered by clean energy.

- Private equity firms KKR and ECP have formed a $50 billion strategic partnership to accelerate the development of data center and power generation infrastructure. The collaboration aims to support the growing energy needs of hyperscalers and other market participants.

- As mentioned above, Panattoni Development in August 2024 revealed plans to enter the data center market, with plans to develop 1 GW of capacity within five years and significant U.S. investments anticipated.

- Operating in Missouri and Illinois, Ameren secured a 250 MW power supply deal with data center operators, indicating substantial campus developments in its service area.

- American Electric Power (AEP) announced intentions to power 15 GW of data centers by the end of the decade, indicating large-scale campus projects underway.

- Xcel Energy is planning to supply power to Meta’s upcoming data center in Minnesota, expected to commence operations in 2025.

- AES has secured agreements with Google to provide over 1 GW of power for data center expansions in Ohio and Texas.

- Of particular note on the renewable energy front, NextEra’s renewables segment reported an increase of 3 GW in projects, including 860 MW to meet Google’s data center energy demands.

To conclude, the hyperscale data center industry in the U.S. is poised for continued growth in 2025, but to maintain this upward trajectory it will need to address challenges spanning sustainability, supply chain, cost, technology innovation and manufacturing partnerships, and regulation. We’ll definitely be keeping an eye on this trend and will report back.

5. Steep Pricing and Rental Rates Highlight Data Center Secondary and Tertiary Market Attractions

CBRE’s Data Centers practice expects rental rates for data centers to approach record highs in 2025, driven by sustained demand and limited supply, particularly in major markets. Operators and tenants should anticipate higher costs for data center space across the board, which will call for strategic planning to manage expenses. Continuing what now appears to be an annual trend, preleasing rates are projected to exceed 90% in 2025, meaning that most new data center capacity will be committed before construction is complete. One upshot is that tenants may face intensified competition for available space, prompting them to engage earlier in the leasing process to secure the capacity they need. Meanwhile, despite the 70% increase in data center construction supply recently cited by Reuters, vacancy rates have reached a record low of 2.8%, highlighting ongoing supply constraints, to which power availability issues in key markets are contributing. Limited availability may push rental rates higher still and could drive further development in emerging data center markets with more accessible resources.
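How vacancy can fall even as supply surges comes down to simple arithmetic: vacancy is the unleased share of built inventory, and preleasing is the committed share of capacity still under construction. The sketch below uses entirely hypothetical market figures (not CBRE or Reuters data) to show inventory growing 70% while vacancy still drops, because tenants absorb almost all of the new capacity.

```python
def vacancy_rate(inventory_mw: float, leased_mw: float) -> float:
    """Share of built inventory that is not leased."""
    return (inventory_mw - leased_mw) / inventory_mw


def prelease_rate(under_construction_mw: float, preleased_mw: float) -> float:
    """Share of capacity under construction already committed to tenants."""
    return preleased_mw / under_construction_mw


# Hypothetical market, in MW: illustrative numbers only, not reported data.
before = vacancy_rate(inventory_mw=1_000, leased_mw=960)
# Inventory grows 70% (1,000 -> 1,700 MW) and tenants absorb 692 MW of the new supply.
after = vacancy_rate(inventory_mw=1_700, leased_mw=960 + 692)
# Of 500 MW still under construction, 460 MW is already signed.
pipeline = prelease_rate(under_construction_mw=500, preleased_mw=460)

print(f"vacancy before: {before:.1%}, after: {after:.1%}, preleased: {pipeline:.0%}")
```

With these made-up inputs, vacancy falls from 4.0% to about 2.8% despite the 70% supply increase, and the construction pipeline is 92% preleased.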

Supply constraints and rising costs in traditional data center hubs have been prompting developers to set up in alternative locations with more favorable conditions. This shift could lead to more competitive pricing for tenants in emerging markets, which offer advantages such as more affordable land and more supportive regulatory environments, making them magnets for large-scale data center projects. A standout example is hyperscale giant Meta’s newly announced plan for a $10 billion data center campus in Richland Parish, Louisiana. As noted by DatacenterHawk, the expansion into secondary and tertiary markets is not limited to the U.S.: in Europe, countries such as Germany and Spain are seeing data center development in multiple cities, driven by the availability of cheaper land, power, and fiber infrastructure in these regions.

Eleven key U.S. secondary and tertiary markets anticipated to experience significant data center growth in 2025, along with their key advantages, include the following locations:

- Columbus, Ohio – Advantages: Strategic location with access to major fiber routes, affordable real estate, supportive economic policies.

- Kansas City, Missouri – Advantages: Central U.S. location, robust infrastructure, competitive costs.

- San Antonio, Texas – Advantages: Lower risk of natural disasters, affordable energy, business-friendly environment.

- Denver, Colorado – Advantages: Strategic location between East and West coasts, favorable climate, growing tech ecosystem.

- Indianapolis, Indiana – Advantages: Low cost of living, central location, growing tech community.

- Minneapolis, Minnesota – Advantages: Reliable power grid, cool climate aiding in natural cooling solutions, supportive local government.

- Salt Lake City, Utah – Advantages: Seismic stability, low energy costs, growing tech workforce.

- Raleigh-Durham, North Carolina – Advantages: Research Triangle presence, skilled labor force, favorable business climate.

- Charlotte, North Carolina – Advantages: Financial industry presence, skilled workforce, favorable tax environment.

- Nashville, Tennessee – Advantages: Central location, expanding tech scene, competitive operational costs.

- Pittsburgh, Pennsylvania – Advantages: Emerging tech hub, access to academic institutions, presence of revitalized industrial spaces suitable for data centers.

6. Data Center Liquid Cooling Advances at Scale As DLC Becomes Table Stakes and the Immersion Inflection Point Nears

We feel it’s safe to say that the data center liquid cooling market is on a trajectory for substantial growth in 2025, as the technology has come fully into its own after years on the periphery of data center designs, driven primarily by the escalating demands of AI and HPC applications. “This is a long-term trend,” said Vertiv CEO Giordano Albertazzi in a recent interview. This year we should expect to see liquid cooling integrated into data center infrastructure at even greater scale, affecting building planning, structural loading, and rack power densities. Consensus from various data center industry events last year, including our own, indicated that liquid cooling had officially moved to center stage, as traditional air cooling is simply inadequate and inefficient for managing the heat generated by the higher power densities AI workloads require.

Analysts project substantial expansion in the data center liquid cooling sector going forward. The global market is expected to grow from $4.9 billion in 2024 to $21.3 billion by 2030, at an astonishing compound annual growth rate (CAGR) of 27.6%. In the United States, the market is anticipated to exhibit a CAGR of 17.1% from 2025 through 2033.
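As a quick sanity check on these headline figures, the compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1, can be applied to the global forecast. The sketch below assumes the 2024-2030 window spans six compounding periods.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding start_value at `rate` for `years` periods."""
    return start_value * (1 + rate) ** years


# Global liquid cooling market, USD billions, as cited above (2024 -> 2030).
implied_rate = cagr(4.9, 21.3, 2030 - 2024)
projected_2030 = project(4.9, 0.276, 2030 - 2024)

print(f"implied CAGR: {implied_rate:.2%}")                   # roughly 27.75%
print(f"2030 value at 27.6%: ${projected_2030:.1f} billion")  # roughly $21.1 billion
```

The implied rate comes out slightly above the quoted 27.6%, and compounding $4.9 billion at exactly 27.6% lands a little under $21.3 billion; the small gap most likely reflects rounding in the published figures.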

Several data center liquid cooling methods are gaining traction, at varying degrees of adoption, in a rapidly evolving technical landscape. Single-phase direct-to-chip liquid cooling (DLC) leads the market in deployment due to its maturity and effectiveness. Notably, NVIDIA has specified single-phase DLC for its upcoming GB200 compute nodes. Both single-phase and two-phase immersion cooling are seeing more deployment as well as extensive testing and validation, with increasing interest from data center operators (hold that thought). However, two-phase immersion cooling may face extra challenges, particularly regulatory issues related to the use of certain dielectric fluids. Hybrid approaches such as the rear-door heat exchanger are now commonplace in data center designs, particularly in retrofits, as standard air cooling proves inadequate for AI’s increased power demands.

Despite the optimistic outlook, several factors could affect market growth for liquid cooling, and as Dell’Oro Group has pointed out, data center stakeholders should remain cognizant of potential challenges. For instance, recurring supply chain instability, including component shortages and manufacturing delays, could hinder the deployment of liquid cooling systems. Economic downturns or fluctuations could lead to reduced capital expenditures by data center operators, potentially slowing adoption. And environmental regulations, particularly those related to the use of specific cooling fluids, may slow the pace of adoption and market expansion for certain liquid cooling technologies such as immersion.

To wit, immersion cooling, which involves submerging electronic components in thermally conductive dielectric liquids, may be approaching an inflection point this year, emerging as a critical means of meeting data centers’ escalating demands for efficiency and sustainability as heat density increases. Managing AI’s highest thermal loads will require the advantages of more effective solutions like immersion cooling. With data centers projected to consume approximately 2% of global electricity by 2025, there is an ongoing, urgent imperative to improve facilities’ energy efficiency. Immersion cooling can reduce energy consumption by 30% or more in some deployments, aligning with sustainability goals. As environmental concerns intensify, data centers face increasing pressure to adopt eco-friendly practices, and immersion cooling supports these initiatives by lowering carbon footprints and enabling effective waste heat reuse.
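To see how savings of that magnitude can arise, the sketch below compares annual facility energy at two power usage effectiveness (PUE) values, where PUE is total facility energy divided by IT energy. The IT load and both PUE figures are hypothetical assumptions, and the model deliberately ignores the removal of server fans under immersion, which would lower the IT load itself.

```python
HOURS_PER_YEAR = 8_760


def annual_facility_mwh(it_load_mw: float, pue: float) -> float:
    """Total facility energy per year: IT energy multiplied by PUE."""
    return it_load_mw * HOURS_PER_YEAR * pue


it_load_mw = 10.0     # hypothetical constant IT load
air_pue = 1.50        # hypothetical air-cooled facility
immersion_pue = 1.05  # hypothetical immersion-cooled facility

air = annual_facility_mwh(it_load_mw, air_pue)
immersion = annual_facility_mwh(it_load_mw, immersion_pue)
# Fractional reduction in total facility energy; IT energy itself is held constant here.
savings = (air - immersion) / air

print(f"air: {air:,.0f} MWh, immersion: {immersion:,.0f} MWh, savings: {savings:.0%}")
```

Under these assumptions the facility drops from 131,400 MWh to 91,980 MWh per year, a 30% cut, entirely from lower cooling and facility overhead.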

Luckily, the industry is approaching a juncture where immersion cooling solutions are becoming more standardized, facilitating broader, more scalable adoption across various data center types. Hurdles remain: the upfront costs of immersion cooling systems can be substantial, potentially deterring investment despite possible long-term savings, and not all existing data center equipment is suitable for immersion, necessitating hardware modifications or replacements. Then too, specialized knowledge is required to operate and maintain immersion-cooled systems, highlighting the need for targeted training and skill development. But the development of AI-specific hardware optimized for immersion cooling is accelerating, and as the technology matures, implementation costs are decreasing, making immersion a more economically feasible option for data center operators.

Immersion cooling could be poised to become a greater cornerstone of data center operations beginning in 2025, driven by the demands of AI infrastructure and stringent sustainability requirements. While challenges remain, the convergence of technological advancements and environmental imperatives is steering the industry toward greater adoption of this innovative cooling methodology. The race toward data center AI isn’t slowing down, and somewhere in the digital infrastructure boom’s expansion may lie immersion cooling’s inflection point. How soon we reach that point remains to be seen. We’ll be avidly watching in 2025 to see if and when this prediction unfolds.

7. Chicken or Egg: Accelerated Data Center Deployment Strategies Meet the AI Edge Scale-Out

The interplay between rapid advancements in AI and the data center industry’s ability to adapt to these demands will define the digital infrastructure landscape of 2025. And as hyperscalers, colocation providers, and edge players scale their operations, they may face an intricate balancing act: accelerating infrastructure development while gauging, if not grappling with, supply chain constraints and the unpredictability of AI-driven workloads.

The surging proliferation of AI, particularly generative AI applications, has ushered in unprecedented demand for data center capacity. Gartner estimates that global spending on AI-related infrastructure will exceed $300 billion in 2025, with hyperscalers AWS, Google Cloud, Microsoft Azure, and Meta leading the charge. But AI inference, the process of deploying trained models for real-time applications, should soon become an equivalent catalyst for this growth. AI inference workloads require high computational power, low latency, and scalability, necessitating new modular data center designs at the AI edge that are optimized for GPUs, TPUs, and other accelerators, as well as for liquid cooling. However, the urgency to meet all forms of this data center demand may present a “chicken or egg” problem: should infrastructure scale in anticipation of AI’s growth, or should it be reactive to avoid overbuilding?

To accommodate AI’s computational intensity, data center developers are employing innovative construction and deployment strategies. For example, modular data centers with prefabricated components are shrinking construction timelines from years to months while enabling scalability on demand. Building on the success of government agencies such as the U.S. Department of Defense (DoD) in deploying modular data centers for military applications, major data center providers including Equinix and Digital Realty, along with infrastructure suppliers such as Vertiv and Schneider Electric, have embraced prefabrication to deploy capacity faster. The long-elusive rise of edge computing, now driven by latency-sensitive AI applications, is also pushing the market into secondary and tertiary locations primed for edge deployments, such as Salt Lake City, Raleigh-Durham, and Minneapolis, which offer strategic proximity to end users and lower costs.

While challenges abound, AI inference at the edge offers transformative potential. Modular and prefabricated data centers reduce deployment times, increase flexibility, and generally make edge and remote deployments more viable. Then too, driven by key players including Dell Technologies, HPE, NVIDIA, and EdgeConneX, the edge is getting smarter at processing data with AI locally for latency-sensitive applications like gaming and healthcare. Edge computing reduces latency by bringing data processing closer to users, unlocking real-time capabilities for burgeoning applications such as autonomous vehicles, telemedicine, AR/VR, and IoT-driven industrial automation. The “edge-first” approach, championed by players like Dell Technologies and Supermicro, emphasizes compact, energy-efficient, and scalable solutions for inference workloads. These strategies align with market projections that edge deployments could account for 30% of data center growth this year.
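Part of the edge’s latency advantage is plain physics: light in optical fiber travels at roughly two-thirds of its vacuum speed, or about 200 km per millisecond. The sketch below estimates round-trip propagation delay only (no queuing, routing, or compute time); the site distances and the 1.5x route factor for non-straight fiber paths are hypothetical assumptions.

```python
FIBER_KM_PER_MS = 200.0  # light in optical fiber covers roughly 200 km per millisecond


def round_trip_ms(distance_km: float, route_factor: float = 1.5) -> float:
    """Round-trip propagation delay over fiber, ignoring queuing and processing.

    route_factor is a hypothetical multiplier for fiber paths that run longer
    than the straight-line distance between user and data center.
    """
    return 2 * distance_km * route_factor / FIBER_KM_PER_MS


# Hypothetical straight-line distances from a user to three kinds of sites.
sites = {"edge site": 50, "regional hub": 800, "distant region": 2_500}
for label, km in sites.items():
    print(f"{label:>14}: {round_trip_ms(km):5.1f} ms")
```

These assumptions give roughly 0.75 ms, 12 ms, and 37.5 ms of round-trip propagation delay, before any processing time, which is why latency-sensitive inference favors sites close to users.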

The data center industry’s response to the dual pressures of AI-driven growth and possible supply chain constraints will define its trajectory in 2025 and beyond. The industry finds itself at a critical juncture, and AI inference computing at the edge should represent a transformational opportunity. Meeting regulatory and societal expectations for sustainability will be non-negotiable for most operators, particularly in energy-intensive AI deployments, and adopting flexible modular architectures for AI should help in this area. But the success of AI infrastructure expansion will hinge on developers balancing growth with prudence: overbuilding in anticipation of demand could lead to underutilized capacity, while underestimating AI’s trajectory risks leaving data center providers behind their competitors.

That being said, modular and software-defined solutions can help future-proof operators against evolving AI requirements. In achieving scale-out for the AI edge, strategic partnerships with suppliers and investments in local manufacturing can mitigate risks. By adopting innovative construction strategies, addressing logistical challenges, and capitalizing on scalable edge opportunities, the industry should be able to navigate any “chicken or egg” dilemmas and emerge as a cornerstone of the AI revolution for both LLM training and inference, whether computed in a data hall or in containerized, prefabricated modular designs.

8. The Data Center Quantum Computing Event Horizon May Be Approaching

In 2025, quantum computing is no longer a distant dream but an emerging reality in the data center industry. According to Deloitte’s 2024 Technology Predictions, quantum computing is transitioning from experimental to practical use cases, particularly in optimization and cryptography, making significant strides in industries dependent on advanced computation. While challenges persist, advances in qubit stability, scalability, and hybrid integration are paving the way for broader adoption. This year, quantum computing is poised to complement classical systems, offering revolutionary capabilities that could redefine how data centers operate. However, realizing its full potential will require continued investment, innovation, and collaboration across the industry.

As a revolutionary leap in computational power, quantum computing uses the principles of quantum mechanics to process information. According to a recent article in Nature Reviews Physics, quantum computing “leverages superposition and entanglement” to outperform classical systems in specific applications, such as cryptography and optimization. Unlike classical computers, which use bits (0s and 1s) to represent data, quantum computers use quantum bits, or qubits. Thanks to a property called superposition, a qubit can exist in a combination of 0 and 1 at the same time, and qubits can also be entangled, so that their measurement outcomes remain correlated regardless of the distance between them. These properties allow quantum computers to solve certain problems, such as factoring large numbers (the basis of cryptographic analysis) and molecular modeling, exponentially faster than classical systems, and they may also speed up some complex optimization tasks.
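Superposition and entanglement can be illustrated with a tiny classical simulation of a two-qubit state vector. The sketch below is written in plain Python rather than any particular quantum SDK. It applies a Hadamard gate and then a CNOT gate to produce a Bell state, in which each qubit on its own behaves like a 50/50 coin flip, yet the two measurement results always agree. It also hints at why classical simulation gets hard: n qubits need 2^n amplitudes, so 50 qubits already require about 10^15 of them.

```python
import math
import random


def hadamard_first(state: dict[str, complex]) -> dict[str, complex]:
    """Apply a Hadamard gate to the first qubit of a two-qubit state."""
    s = 1 / math.sqrt(2)
    new = {}
    for b in "01":  # the second qubit is left untouched
        a0, a1 = state["0" + b], state["1" + b]
        new["0" + b] = s * (a0 + a1)
        new["1" + b] = s * (a0 - a1)
    return new


def cnot(state: dict[str, complex]) -> dict[str, complex]:
    """Flip the second qubit whenever the first qubit is 1."""
    return {"00": state["00"], "01": state["01"], "10": state["11"], "11": state["10"]}


def probabilities(state: dict[str, complex]) -> dict[str, float]:
    """Born rule: the probability of each basis state is |amplitude|^2."""
    return {k: abs(v) ** 2 for k, v in state.items()}


# Start in |00>, put the first qubit in superposition, then entangle the pair.
state = {"00": 1 + 0j, "01": 0j, "10": 0j, "11": 0j}
bell = cnot(hadamard_first(state))
probs = probabilities(bell)

# Simulate 1,000 measurements of both qubits.
rng = random.Random(0)
shots = rng.choices(list(probs), weights=list(probs.values()), k=1_000)
print({k: round(p, 3) for k, p in probs.items()})
print(sorted(set(shots)))
```

Only "00" and "11" ever appear: measuring one qubit immediately tells you the other’s result, even though neither outcome is determined in advance.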

However, the technology remains elusive due to significant challenges in stability, scalability, and error correction. Quantum systems are incredibly sensitive to environmental disturbances, requiring sophisticated cryogenic environments and constant error management. Nonetheless, in emerging use cases, data center operators are leveraging quantum algorithms to optimize energy consumption, server placement, and traffic routing. For example, Google’s collaboration with Unitary Fund focuses on using quantum algorithms to improve server allocation efficiency, while AWS is exploring energy optimization through quantum-classical hybrid approaches via the Amazon Braket cloud quantum computing service.
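Optimization problems such as server placement are typically handed to quantum annealers as a QUBO (quadratic unconstrained binary optimization) problem: minimize a quadratic function of 0/1 variables. The toy sketch below encodes a hypothetical placement of three workloads onto two servers, rewarding balanced load and penalizing any workload that is not assigned to exactly one server. The loads, penalty weight, and balancing objective are illustrative assumptions rather than Google’s or AWS’s methods, and the problem is solved here by classical brute force, which is only feasible for a handful of variables.

```python
from itertools import product

LOADS = [4, 3, 2]  # hypothetical workload sizes (e.g., kW)
SERVERS = 2
PENALTY = 100      # hypothetical weight forcing each workload onto exactly one server


def var(w: int, s: int) -> int:
    """Index of binary variable x[w, s], which is 1 if workload w runs on server s."""
    return w * SERVERS + s


def build_qubo() -> tuple[dict[tuple[int, int], int], int]:
    """Return (Q, offset) such that energy(x) = offset + sum of Q[i, j] * x_i * x_j."""
    q: dict[tuple[int, int], int] = {}

    def add(i: int, j: int, value: int) -> None:
        key = (min(i, j), max(i, j))
        q[key] = q.get(key, 0) + value

    # Balance term: sum over servers of (total load on that server)^2.
    # Because x * x == x for binary x, squared terms land on the diagonal.
    for s in range(SERVERS):
        for w, lw in enumerate(LOADS):
            add(var(w, s), var(w, s), lw * lw)
            for w2 in range(w + 1, len(LOADS)):
                add(var(w, s), var(w2, s), 2 * lw * LOADS[w2])

    # One-hot penalty PENALTY * (sum_s x[w, s] - 1)^2 for every workload, expanded to
    # -PENALTY on the diagonal, +2 * PENALTY per server pair, and +PENALTY as a constant.
    for w in range(len(LOADS)):
        for s in range(SERVERS):
            add(var(w, s), var(w, s), -PENALTY)
            for s2 in range(s + 1, SERVERS):
                add(var(w, s), var(w, s2), 2 * PENALTY)
    return q, PENALTY * len(LOADS)


def energy(q: dict[tuple[int, int], int], offset: int, x: tuple[int, ...]) -> int:
    """Evaluate the QUBO objective for one binary assignment x."""
    return offset + sum(v * x[i] * x[j] for (i, j), v in q.items())


def brute_force(q: dict[tuple[int, int], int], offset: int, n: int):
    """Check all 2**n assignments; an annealer would instead sample low-energy states."""
    return min((energy(q, offset, x), x) for x in product((0, 1), repeat=n))


q, offset = build_qubo()
best_energy, best_x = brute_force(q, offset, len(LOADS) * SERVERS)
assignment = {w: s for w in range(len(LOADS)) for s in range(SERVERS) if best_x[var(w, s)]}
print(best_energy, assignment)  # 41 {0: 1, 1: 0, 2: 0}
```

The lowest-energy state puts the 4 kW workload alone on one server and the 3 kW and 2 kW workloads together on the other (loads of 4 and 5), the most balanced split available. Real placement problems have far too many variables for brute force, which is where annealing hardware is meant to help.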

Interestingly, quantum computing represents both a threat and a solution in cryptography for cybersecurity: while it poses risks to classical encryption methods, it is also spurring the development of quantum-resistant algorithms. Meanwhile, quantum systems are being explored as accelerators for AI and ML training and inference, especially for complex neural networks. In the realm of scientific simulations, companies are exploring quantum computing for drug discovery, climate modeling, and materials science. Among key players, IBM is deploying quantum systems via its IBM Quantum Network, providing cloud-based quantum computing services. Google is making a bid for quantum supremacy with its Sycamore processors, experimenting with practical applications in optimization and AI. AWS offers quantum access through the aforementioned Amazon Braket, a service integrating quantum platforms from D-Wave, IonQ, and Rigetti Computing. For its part, Microsoft is developing its Azure Quantum platform and investing in topological qubit research. And quantum startups such as Rigetti Computing, IonQ, and Xanadu are pushing both hardware and software innovation.

The global quantum computing market is projected to grow from $1 billion in 2023 to $4 billion by 2025, with significant investment from hyperscalers, startups, and governments, according to a report by MarketsandMarkets. By the end of this year, quantum computing is expected to gain traction in industries such as finance, healthcare, and logistics, all of which rely heavily on data center infrastructure. Advances in error correction and qubit coherence are enabling larger, more reliable quantum systems, and companies aim to scale quantum processors to thousands of qubits within the next decade. Quantum computers are not intended to replace classical data center systems but to complement them through hybrid integration, functioning as accelerators for specific tasks within traditional facilities. Finally, most quantum systems in 2025 will be accessible through the cloud, allowing operators to experiment without owning costly infrastructure.

In terms of challenges to growth and technological hurdles, quantum computers often require cryogenic environments, leading to energy-intensive cooling demands, so serious innovations in energy-efficient cooling will be critical to quantum advancements. Maintaining qubit coherence also remains a significant challenge, and current quantum systems require extensive error correction, consuming resources and complicating scalability. Then too, quantum hardware is expensive to build and maintain, limiting access to well-funded organizations and governments. Further, there is a significant gap in quantum computing expertise, which will require collaboration between academia and industry to train a specialized workforce.

But quantum computing will enable data centers to tackle workloads previously deemed impossible, particularly in AI, cryptography, and real-time optimization. And as indicated above, Quantum-as-a-Service (QaaS) appears to be emerging as a viable business model, allowing data center operators to monetize quantum capabilities through cloud platforms.

More Key Data Center Trends to Watch In 2025

9. Sustainability Optimization

11. Digital Twins Implementations

12. Cybersecurity Enhancements