Want smarter insights in your inbox? Sign up for our weekly newsletters to get only what matters to enterprise AI, data, and security leaders. Subscribe Now

Researchers have published the most comprehensive survey to date of so-called “OS Agents” — artificial intelligence systems that can autonomously control computers, mobile phones and web browsers by directly interacting with their interfaces. The 30-page academic review, accepted for publication at the prestigious Association for Computational Linguistics conference, maps a rapidly evolving field that has attracted billions in investment from major technology companies.

“The dream to create AI assistants as capable and versatile as the fictional J.A.R.V.I.S from Iron Man has long captivated imaginations,” the researchers write. “With the evolution of (multimodal) large language models ((M)LLMs), this dream is closer to reality.”

The survey, led by researchers from Zhejiang University and OPPO AI Center, comes as major technology companies race to deploy AI agents that can perform complex digital tasks. OpenAI recently launched “Operator,” Anthropic released “Computer Use,” Apple introduced enhanced AI capabilities in “Apple Intelligence,” and Google unveiled “Project Mariner” — all systems designed to automate computer interactions.

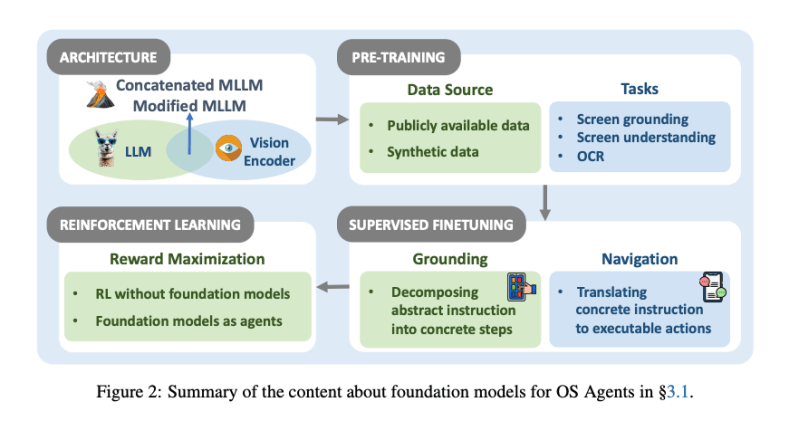

The speed at which academic research has transformed into consumer-ready products is unprecedented, even by Silicon Valley standards. The survey reveals a research explosion: over 60 foundation models and 50 agent frameworks developed specifically for computer control, with publication rates accelerating dramatically since 2023.

AI Scaling Hits Its Limits

Power caps, rising token costs, and inference delays are reshaping enterprise AI. Join our exclusive salon to discover how top teams are:

- Turning energy into a strategic advantage

- Architecting efficient inference for real throughput gains

- Unlocking competitive ROI with sustainable AI systems

Secure your spot to stay ahead: https://bit.ly/4mwGngO

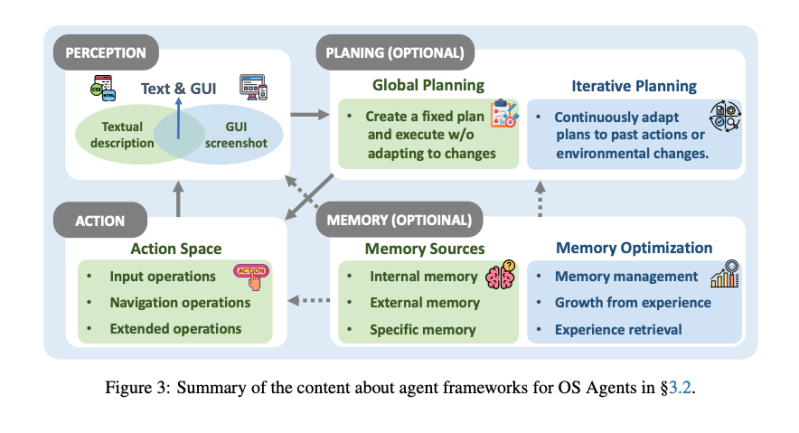

This isn’t just incremental progress. We’re witnessing the emergence of AI systems that can genuinely understand and manipulate the digital world the way humans do. Current systems work by taking screenshots of computer screens, using advanced computer vision to understand what’s displayed, then executing precise actions like clicking buttons, filling forms, and navigating between applications.

“OS Agents can complete tasks autonomously and have the potential to significantly enhance the lives of billions of users worldwide,” the researchers note. “Imagine a world where tasks such as online shopping, travel arrangements booking, and other daily activities could be seamlessly performed by these agents.”

The most sophisticated systems can handle complex multi-step workflows that span different applications — booking a restaurant reservation, then automatically adding it to your calendar, then setting a reminder to leave early for traffic. What took humans minutes of clicking and typing can now happen in seconds, without human intervention.

Why security experts are sounding alarms about AI-controlled corporate systems

For enterprise technology leaders, the promise of productivity gains comes with a sobering reality: these systems represent an entirely new attack surface that most organizations aren’t prepared to defend.

The researchers dedicate substantial attention to what they diplomatically term “safety and privacy” concerns, but the implications are more alarming than their academic language suggests. “OS Agents are confronted with these risks, especially considering its wide applications on personal devices with user data,” they write.

The attack methods they document read like a cybersecurity nightmare. “Web Indirect Prompt Injection” allows malicious actors to embed hidden instructions in web pages that can hijack an AI agent’s behavior. Even more concerning are “environmental injection attacks” where seemingly innocuous web content can trick agents into stealing user data or performing unauthorized actions.

Consider the implications: an AI agent with access to your corporate email, financial systems, and customer databases could be manipulated by a carefully crafted web page to exfiltrate sensitive information. Traditional security models, built around human users who can spot obvious phishing attempts, break down when the “user” is an AI system that processes information differently.

The survey reveals a concerning gap in preparedness. While general security frameworks exist for AI agents, “studies on defenses specific to OS Agents remain limited.” This isn’t just an academic concern — it’s an immediate challenge for any organization considering deployment of these systems.

The reality check: Current AI agents still struggle with complex digital tasks

Despite the hype surrounding these systems, the survey’s analysis of performance benchmarks reveals significant limitations that temper expectations for immediate widespread adoption.

Success rates vary dramatically across different tasks and platforms. Some commercial systems achieve success rates above 50% on certain benchmarks — impressive for a nascent technology — but struggle with others. The researchers categorize evaluation tasks into three types: basic “GUI grounding” (understanding interface elements), “information retrieval” (finding and extracting data), and complex “agentic tasks” (multi-step autonomous operations).

The pattern is telling: current systems excel at simple, well-defined tasks but falter when faced with the kind of complex, context-dependent workflows that define much of modern knowledge work. They can reliably click a specific button or fill out a standard form, but struggle with tasks that require sustained reasoning or adaptation to unexpected interface changes.

This performance gap explains why early deployments focus on narrow, high-volume tasks rather than general-purpose automation. The technology isn’t yet ready to replace human judgment in complex scenarios, but it’s increasingly capable of handling routine digital busywork.

What happens when AI agents learn to customize themselves for every user

Perhaps the most intriguing — and potentially transformative — challenge identified in the survey involves what researchers call “personalization and self-evolution.” Unlike today’s stateless AI assistants that treat every interaction as independent, future OS agents will need to learn from user interactions and adapt to individual preferences over time.

“Developing personalized OS Agents has been a long-standing goal in AI research,” the authors write. “A personal assistant is expected to continuously adapt and provide enhanced experiences based on individual user preferences.”

This capability could fundamentally change how we interact with technology. Imagine an AI agent that learns your email writing style, understands your calendar preferences, knows which restaurants you prefer, and can make increasingly sophisticated decisions on your behalf. The potential productivity gains are enormous, but so are the privacy implications.

The technical challenges are substantial. The survey points to the need for better multimodal memory systems that can handle not just text but images and voice, presenting “significant challenges” for current technology. How do you build a system that remembers your preferences without creating a comprehensive surveillance record of your digital life?

For technology executives evaluating these systems, this personalization challenge represents both the greatest opportunity and the largest risk. The organizations that solve it first will gain significant competitive advantages, but the privacy and security implications could be severe if handled poorly.

The race to build AI assistants that can truly operate like human users is intensifying rapidly. While fundamental challenges around security, reliability, and personalization remain unsolved, the trajectory is clear. The researchers maintain an open-source repository tracking developments, acknowledging that “OS Agents are still in their early stages of development” with “rapid advancements that continue to introduce novel methodologies and applications.”

The question isn’t whether AI agents will transform how we interact with computers — it’s whether we’ll be ready for the consequences when they do. The window for getting the security and privacy frameworks right is narrowing as quickly as the technology is advancing.

Daily insights on business use cases with VB Daily

If you want to impress your boss, VB Daily has you covered. We give you the inside scoop on what companies are doing with generative AI, from regulatory shifts to practical deployments, so you can share insights for maximum ROI.

Read our Privacy Policy

Thanks for subscribing. Check out more VB newsletters here.

An error occured.