Power utilities today are confronting a perfect storm of explosive demand growth, aging assets and unpredictable policy shifts, making strategic alignment both more critical and harder to achieve than ever before. A surge in demand from large, energy-intensive loads, such as data operations, electric vehicle manufacturing and hydrogen production facilities, complicates forecasting and strains existing planning processes. At the same time, aging grid assets, some nearing or exceeding their design lives, are increasingly vulnerable. These technical challenges are compounded by heightened regulatory scrutiny, decarbonization goals and shifting policy on energy affordability, reliability and sustainability. Together, these circumstances make it essential for executive teams to proactively shape company strategy.

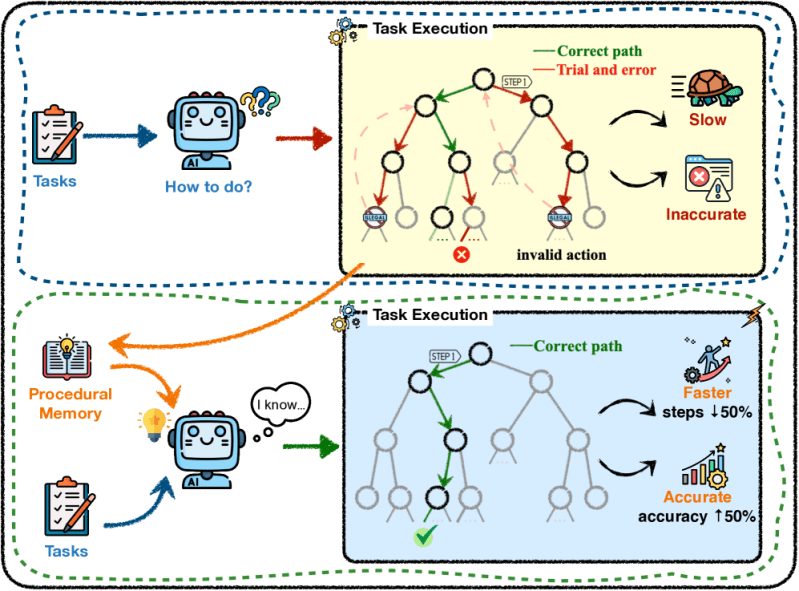

In today’s dynamic environment, strategic planning must be a core capability of the executive team. It requires a deliberate, structured approach that is grounded in alignment at the top, provides clarity on execution, resourcing and goals, and sustains leadership attention through frequent assessment and adaptation. Despite the best intentions, strategic initiatives can miss the mark if they lack this foundation.

Why strategy often fails at utilities

For today’s power utilities, especially those governed by elected boards or serving highly engaged communities, leadership and board perspectives can vary widely. One board member may focus exclusively on affordability, while another advocates for decarbonization and renewables integration. These different viewpoints can strengthen the development of company strategy, but without an intentional process that drives alignment, they often create confusion and slow progress.

Common symptoms of executive misalignment include:

- Decision-making paralysis, where little progress is made on strategic priorities

- Diluted execution, as different leaders act on their own interpretations of “the strategy”

- Wasted effort, as incomplete or poorly supported initiatives don’t move the needle

- Frustration at all levels, as priorities seem to shift and unifying objectives remain unclear

Misalignment is not always the result of disagreement or differences of opinion. It often stems from a lack of consistent and transparent engagement among company leadership. Without a forum to discuss trade-offs, assumptions and risks, organizations tend to produce strategic plans that look good on paper and include “something for everyone” but fail to guide strategic action. The result is organizational inefficiency, as operational teams invest time and money in projects that lack support or do not align with true strategic priorities.

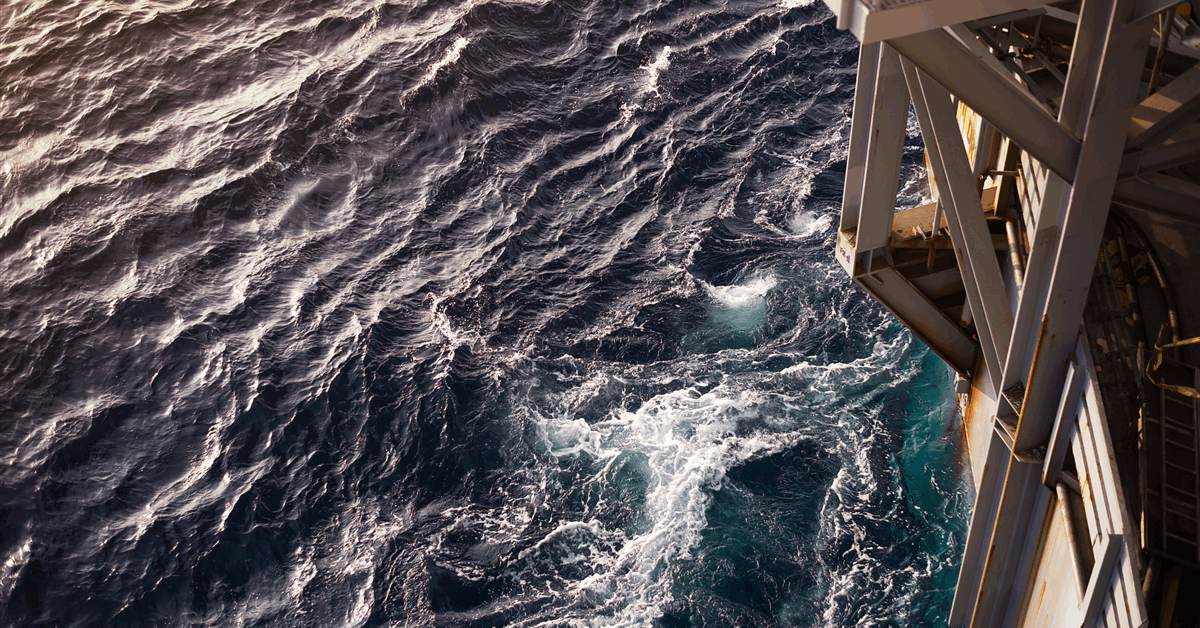

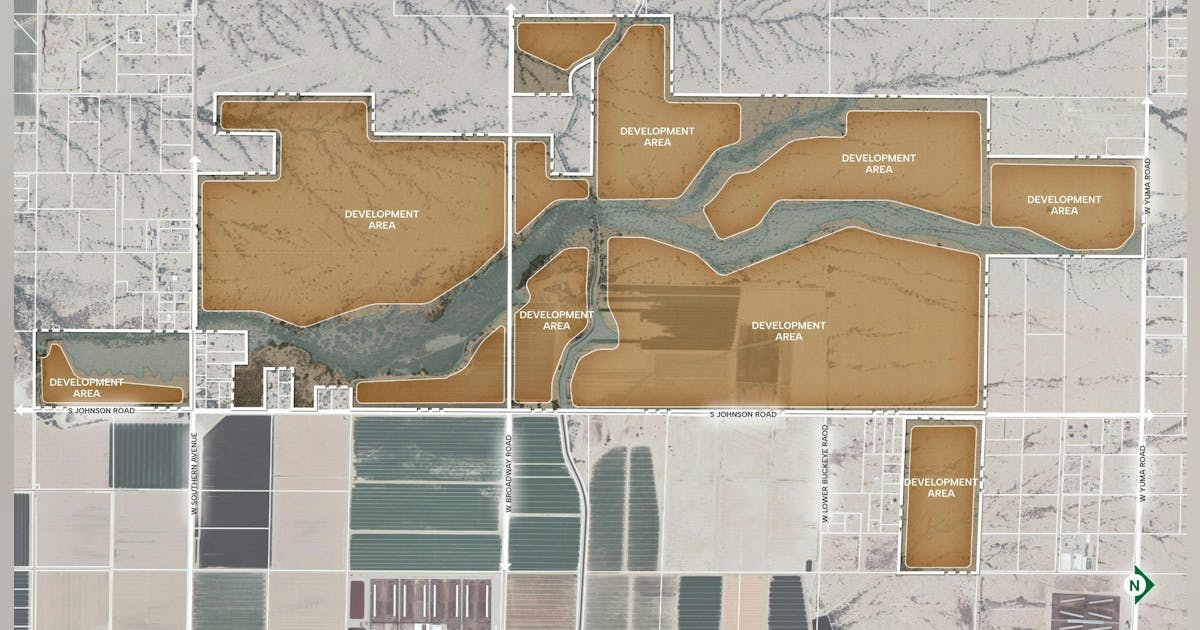

The hidden cost of misalignment: A case of data center growth

Across the United States, power utilities are seeing an unprecedented surge in service requests from data operations (hyperscalers, edge data centers, data miners, etc.). While each opportunity promises economic development, it also brings seismic shifts in demand profiles, reliability risks and strain on infrastructure. Misaligned planning in this scenario can lead to serious adverse outcomes.

Consider an example in which a utility board in the northeastern United States enthusiastically supported new data operations to stimulate economic development. Some executives advocated for “accelerated service,” proposing quick solutions such as backup gas generation and temporary interconnections to fast-track the new load and secure the opportunity. Meanwhile, operations and engineering executives advocated prioritizing system reliability through capital investment in grid upgrades and the refurbishment of aging infrastructure. Ultimately, the utility caved to pressure from the prospective customer and moved forward with the “accelerated service” plan. Leadership received initial acclaim from the press and policymakers for enabling “efficient economic growth,” but as the data operations came online, problems emerged:

- Peak demand surges: Record summer heat coincided with ramping data operation activity. System balancing became fragile, and rolling outages were narrowly avoided using emergency market purchases

- Backfeed and grid instability: Temporary interconnections caused unanticipated backfeed in parts of the grid, and the system operator initiated emergency curtailment protocols

- Capital cost overruns: The deferred grid upgrades eventually went ahead to address the reliability issues that had materialized, but at significantly higher cost due to rushed design work and inflation in material and equipment prices

- Public trust and regulator friction: Reliability issues and overspending spurred the state commission to launch an investigation into whether the utility misrepresented system readiness and cost exposure

This scenario reveals an important insight: the utility didn’t fail because it lacked foresight, but because its strategic vision was fragmented.

What should utilities do differently?

To successfully navigate an evolving landscape, strategic planning must be treated as a deliberate, structured process and not a one-time document. That process begins with a clear understanding of what strategy is and what it must do:

- Diagnose the current state – Why will the status quo no longer work? What disruptions, risks or opportunities demand a new path?

- Establish guiding principles and assumptions – What parameters, trade-offs and guardrails will shape the strategy? What values or priorities are non-negotiable?

- Prioritize and sequence strategic initiatives – What actions or initiatives will move the strategy forward? In what order and with what resources?

- Create alignment through dialogue – How will leaders debate, communicate and sustain a shared understanding of the strategy? What forums or rhythms ensure that alignment holds over time?

Executives and boards must shift from treating strategy as a static plan to treating it as a living framework that evolves with conditions and is continuously tested for alignment, clarity and impact.

Tools and tactics for strategic alignment

The following practices are worth considering to create alignment and increase the effectiveness of strategy development and execution:

- Structured executive interviews: Conduct confidential discussions with leadership to understand strategic perspectives, identify divergent views and surface areas of friction

- Strategic alignment assessment: Measure how aligned executive leadership is by comparing strategic perspectives across functions, business units, etc. Use this comparison as the foundation for facilitated discussion and debate that builds alignment around strategic priorities and performance gaps

- Facilitated retreats or strategy workshops: Create forums away from the daily office grind where leaders can work through differences in real time and build alignment

- Performance scorecards: Develop metrics that track progress against strategic priorities

- Regular strategic check-ins: Frequently revisit the strategy to ensure it remains aligned with evolving regulatory, financial and system conditions

Why strategic alignment is critical now

The transformation underway in the utility industry has raised the stakes for every energy provider. Given recent changes in the legal, regulatory and economic landscape, leaders need a deliberate approach to aligning around company strategy if they are to navigate the road ahead successfully. Long-term success hinges on the ability to unify leadership behind a shared direction through an integrated process that builds alignment as conditions and risks evolve. For utilities navigating this turbulent landscape, aligning leadership around a shared strategic vision is more than best practice. It is a competitive advantage.