
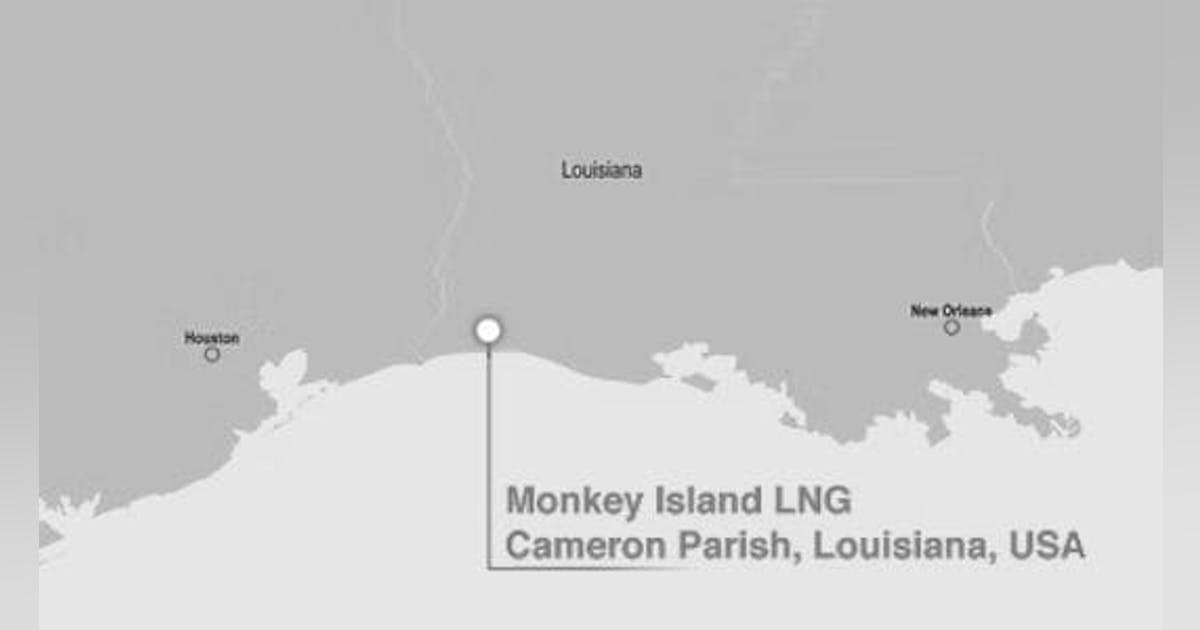

Monkey Island LNG has selected ConocoPhillips’ Optimized Cascade Process liquefaction technology for its planned 26 million tonnes/year (tpy) natural gas liquefaction and export plant in Cameron Parish, La.

The 246-acre project site on Monkey Island in Cameron Parish is positioned with access to deepwater shipping channels and US natural gas supply.

The plant is expected to use cryogenic technology to liquefy about 3.4 bcfd of natural gas, producing LNG to serve both US domestic offtakers and global export markets, according to Monkey Island LNG’s website.

Monkey Island LNG expects to develop up to five liquefaction trains (5-million tpy each). The project design calls for three LNG storage tanks on site, each with a capacity of about 180,000 cu m, the website shows.

In addition to ConocoPhillips, the privately held company has selected McDermott for engineering, procurement, and construction services on the $25-billion project. ERM is serving as environmental consultant.