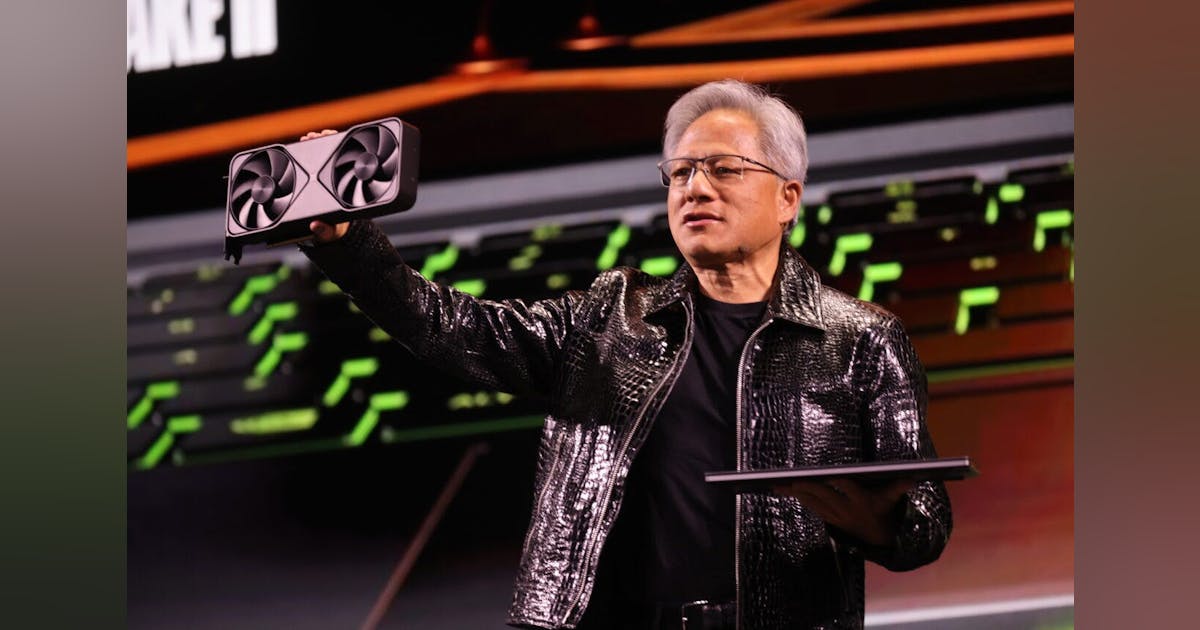

In our latest episode of the Data Center Frontier Show, we explore how powerhouse AI infrastructure is moving inland—anchored by the first NVIDIA HGX B200 cluster deployment in Columbus, Ohio.

Cologix, Lambda, and Supermicro have partnered on the project, which combines Lambda’s 1-Click Clusters™, Supermicro’s energy-efficient hardware, and Cologix’s carrier-dense Scalelogix℠ COL4 facility. It’s a milestone that speaks to the rapid decentralization of AI workloads and the emergence of the Midwest as a serious player in the AI economy.

Joining me for the conversation were Bill Bentley, VP Hyperscale and Cloud Sales at Cologix, and Ken Patchett, VP Data Center Infrastructure at Lambda.

Why Columbus, Why Now?

Asked about the significance of launching in Columbus, Patchett framed the move in terms of the coming era of “superintelligence.”

“The shift to superintelligence is happening now—systems that can reason, adapt, and accelerate human progress,” Patchett said. “That requires an entirely new type of infrastructure, which means capital, vision, and the right partners. Columbus with Cologix made sense because beyond being centrally located, they’re highly connected, cost-efficient, and built to scale. We’re not chasing trends. We’re laying the groundwork for a future where intelligence infrastructure is as ubiquitous as electricity.”

Bentley pointed to the city’s underlying strengths in connectivity, incentives, and utility economics.

“Columbus is uniquely situated at the intersection of long-haul fiber [routes],” Bentley said. “You’ve got state tax incentives, low-cost utilities, and a growing concentration of hyperscalers and local enterprises. The ecosystem is ripe for growth. It’s a natural geography for AI workloads that need geographic diversity without sacrificing performance.”

Shifting—or Expanding—the Map for AI

The guests agreed that deployments like this don’t represent a wholesale shift away from coastal hyperscale markets, but rather the expansion of AI’s footprint across multiple geographies.

“I like to think of Lambda as an AI hyperscaler,” Bentley noted. “The line between AI workloads and traditional hyperscale is blurred now. They’re intertwined.”

Patchett added:

“I wouldn’t call it a shift either—it’s an addition to. Columbus isn’t a Tier 2 market anymore. The biggest hyperscalers have been building there for years. Workloads are commingling across regions. At Lambda, our megawatt footprint will multiply by four between 2025 and 2026. We’re in Texas, Ohio, California, Illinois. We’re democratizing AI infrastructure, and we have to deliver it where it’s needed.”

Hyperscale Edge: Scalability Meets Real-Time Responsiveness

One of the most interesting parts of the discussion came when the conversation turned to Cologix’s description of the Columbus project as a “hyperscale edge” deployment.

Bentley explained that in practice, that means combining the strengths of hyperscale—megawatt capacity, scalability, and wholesale facility capabilities—with the interconnection density traditionally associated with edge data centers.

“The Columbus campus is the interconnection hub in the market,” Bentley said. “It directly connects enterprise customers with carriers, service providers, and AI-specific workloads like Lambda. So to us, it’s both hyperscale and edge.”

Patchett added his own spin, describing it as “the aggregated edge.”

“This aggregated edge is where most real-world AI workloads happen,” he explained. “About 80% of tasks—things like inference, fine-tuning, and smaller training jobs—don’t need frontier model training. They need to be close to users, with real-time responsiveness, but still connected back to the larger models. That’s exactly what deployments like this provide.”

1-Click Clusters, Faster Time to Market

From there, the conversation shifted to Lambda’s 1-Click Clusters model. Patchett stressed that this approach has fundamentally changed procurement and deployment timelines for enterprises.

“What used to take weeks or months—lining up GPUs, getting data center space, configuring systems—we can now do in days,” he said. “We’ve proven we can deploy thousands of GPUs in under 90 days. Time to market is everything, and one-click clusters give enterprises speed they simply can’t afford to miss out on.”

Bentley agreed, noting that Lambda’s on-demand scalability has also created new operational challenges and opportunities for facilities like Cologix’s.

“We have to be prepared to scale from zero to megawatts within seconds,” he said. “That’s a whole new dynamic in the industry and it raises the stakes for operational excellence.”

Patchett emphasized that this isn’t just about speed—it’s about choosing facilities capable of supporting such scale and complexity.

“You don’t put GPUs in a Quonset hut in the middle of South America and call it good,” he said. “Partners like Cologix bring the facility engineering and reliability that this level of infrastructure requires.”

Flexible GPU Models Lower Barriers, Expand Access

The discussion then turned to how flexible consumption models are reshaping the economics of AI infrastructure.

Patchett explained that Lambda’s GPU Flex Commitment is designed to open doors for startups, researchers, and smaller teams who previously couldn’t afford access to cutting-edge GPU infrastructure.

“Historically, this kind of compute power has been reserved for companies with super deep pockets,” Patchett said. “With GPU Flex Commitment, startups and smaller players can now tap into this infrastructure without massive upfront investment. Enterprises benefit too—they can start with hundreds of GPUs for proof-of-concept, then scale to thousands when they’re ready.”

He said the model not only lowers the barrier to entry but also delivers time-to-market and total cost of ownership (TCO) advantages to a much wider range of customers.

Bentley echoed the point from the data center side:

“It enables smaller innovators to adopt AI technology, while letting larger customers scale faster,” he said. “At Cologix, we support both ends of the spectrum, and flexible models like this make that possible.”

Supermicro’s Role in Efficiency and Sustainability

Next, the discussion turned to the role of Supermicro’s energy-efficient systems in the Columbus deployment.

Bentley noted that hardware requirements for GPU clusters are evolving faster than the industry has ever seen, sometimes changing every six months, and that this is pushing both density and cooling requirements to new limits.

“If you rewind not too long ago, standard design density was around 5 kW per rack,” Bentley said. “With Lambda and Supermicro, we’re now supporting 44 kW air-cooled racks—and already planning for hybrid air-to-liquid cooling and eventually dedicated liquid cooling.”

He described the shift as a wholesale transformation of data center design, not just incremental innovation.

“It’s a complete industry transformation,” Bentley emphasized. “And it’s exciting to be adapting alongside partners like Lambda and Supermicro who are pushing the envelope.”
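To put Bentley’s density figures in perspective, here is a rough back-of-envelope comparison. It assumes a hypothetical 1 MW block of IT load and ignores cooling and power-distribution overhead, so it illustrates scale rather than describing an actual Cologix floor plan:

$$
\frac{1{,}000\ \text{kW}}{5\ \text{kW/rack}} = 200\ \text{racks}
\qquad \text{vs.} \qquad
\frac{1{,}000\ \text{kW}}{44\ \text{kW/rack}} \approx 22.7 \rightarrow 23\ \text{racks}
$$

At 44 kW, each rack carries 44/5 = 8.8 times the load of a 5 kW rack. That concentration of heat in a much smaller footprint is why the conversation moves so quickly from air cooling to hybrid and liquid cooling.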

Sustainability and Transformation Through Partnerships

Patchett emphasized that the current moment isn’t just about stretching legacy infrastructure further—it’s about building entirely new models for data center design.

“In the ’90s we went from 2–4 kW per rack to 44 kW by just squeezing the same sponge—more air, more water,” he said. “We can’t do that anymore. Now it’s a complete transformation, and it takes partnerships across the board to make it happen.”

Patchett noted that Lambda has relied on Supermicro since its early days, but also stressed that the sustainability challenge requires a much wider ecosystem of partners—including colocation providers like Cologix.

“A rising tide floats all boats,” he said. “Sustainability needs to become unconscious competence—just built in, automatic. We want to work with partners who take that as seriously as we do.”

Bentley agreed, pointing out that Lambda’s use of Supermicro’s efficient systems also strengthens Cologix’s ability to hit its ESG goals.

“These systems help us continually reduce PUE, lower Scope 1 and Scope 2 emissions, and improve water usage effectiveness,” Bentley said. “It’s a circle—and it takes all of us working together.”
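For readers less familiar with the two efficiency metrics Bentley cites, both are ratios defined by The Green Grid, and lower is better for each. The worked PUE example below uses hypothetical numbers, not Cologix data:

$$
\mathrm{PUE} = \frac{E_{\text{total facility}}}{E_{\text{IT equipment}}},
\qquad
\mathrm{WUE} = \frac{V_{\text{annual site water}}\ (\text{L})}{E_{\text{IT equipment}}\ (\text{kWh})}
$$

$$
\text{e.g.}\quad \mathrm{PUE} = \frac{13\ \text{GWh}}{10\ \text{GWh}} = 1.3
$$

A PUE of 1.0 would mean every kilowatt-hour entering the facility reaches the IT equipment. In this hypothetical example, the extra 0.3 represents energy spent on cooling, power conversion, and other overhead.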

Why Interconnection Matters for AI Workloads

The conversation then turned to how Cologix’s dense interconnection ecosystem in Columbus supports AI workload performance.

Bentley explained that the city’s geography—at the crossroads of Midwest long-haul fiber routes—makes it one of the best U.S. locations for low-latency connectivity to major population centers.

“Network matters as much as compute for latency-sensitive AI inference,” Bentley said. “On our campus, customers can interconnect directly with Lambda’s AI workloads or pursue hybrid strategies with traditional cloud providers.”
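Physical distance puts a floor under that latency. The sketch below is a rough, hypothetical estimate. It assumes light in silica fiber travels at about c/1.47 and ignores routing detours, switching, and queuing delays. Under those assumptions, round-trip propagation delay grows by roughly 1 ms for every 100 km of fiber path. The route lengths are illustrative values, not measured paths from Columbus:

```python
# Back-of-envelope fiber propagation delay. This ignores routing detours,
# switching, and queuing, so real-world latency will be higher.
SPEED_OF_LIGHT_KM_PER_S = 299_792.458
FIBER_REFRACTIVE_INDEX = 1.47  # assumption: typical value for single-mode fiber


def fiber_rtt_ms(route_km: float, n: float = FIBER_REFRACTIVE_INDEX) -> float:
    """Return round-trip propagation delay in milliseconds over route_km of fiber."""
    one_way_s = route_km * n / SPEED_OF_LIGHT_KM_PER_S
    return 2 * one_way_s * 1000


if __name__ == "__main__":
    # Hypothetical fiber route lengths, chosen only for illustration.
    for km in (200, 800, 3_500):
        print(f"{km:>5} km of fiber -> ~{fiber_rtt_ms(km):.1f} ms round trip")
```

Because propagation delay scales linearly with route length, placing inference capacity within a few hundred kilometers of users keeps this component in the low single-digit milliseconds. Longer cross-country paths add tens of milliseconds before any compute happens.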

Patchett noted that interconnection requirements are workload-dependent—what’s critical for inference may not be the same for full model training.

“Everybody asks, what’s your latency requirement? The answer is always, it depends,” he said. “With dense, reliable interconnection like this, we can serve both inference and large language model training from the same highly connected location.”

He even joked about Columbus’s place in the “NFL city” framework, the industry shorthand for the major metro markets that host NFL teams, a list that does not include Columbus.

“Either we change the term or Columbus is about to land a new NFL franchise,” Patchett quipped.

AI Infrastructure Empowering Midwest Industries

Patchett highlighted that the Columbus deployment is more than just a technical milestone—it’s about enabling AI where it’s needed, across sectors such as healthcare, logistics, and manufacturing.

“AI is already integrated into these industries whether people realize it or not,” he said. “The difference now is scale and accessibility. Regional AI infrastructure supports real-world use cases across multiple sectors. That’s why we call this the aggregated edge—it brings intelligence closer to the point of need.”

Bentley added that the deployment gives enterprises an alternative to the traditional Tier 1 data center markets on the coasts, with ultra-low-latency access to AI workloads:

“Columbus has tremendous industry density with healthcare, life sciences, finance, insurance, manufacturing, and more. Now these models can be trained and deployed closer to where they’re used.”

A Repeatable, Forward-Looking Playbook

The executives emphasized that the Lambda–Cologix collaboration is not a one-off project, but a template for regional AI cluster deployment across the Cologix footprint.

“As we expand into new markets, we need partners who are ahead of the curve,” Patchett said. “Cologix builds the place where our platform can be managed, run, and operated efficiently. We’re lowering the barrier for enterprises to access production-ready AI compute.”

Bentley agreed, noting that adapting to the rapid pace of AI hardware innovation requires a flexible approach to data center design:

“We plan for what customers need now, the flexibility for what they might need tomorrow, and the future requirements like liquid cooling. You can’t build monolithic anymore—you have to build adaptable facilities that can pivot quickly.”

Future-Proofing in a Rapidly Evolving AI Landscape

Both executives underscored that GPU hardware cycles and densification trends demand a rethink of traditional data center strategies:

“The DNA of the hardware changes every six months, but it takes years to plan and build a data center,” Patchett said. “We have to design for constant change, manage unit economics, and support a mix of older and newer GPUs simultaneously.”

Bentley reinforced that forward-looking flexibility is essential for maintaining efficiency, sustainability, and performance:

“We support high-density air-cooled racks up to 44 kilowatts now, and our facilities under development allow for both air and liquid cooling in the future. We need to adopt new technologies and plan years ahead to stay ahead of the AI curve.”

Closing Takeaway

The Columbus deployment demonstrates that regional AI infrastructure, when combined with interconnection-rich campuses and flexible GPU models, can deliver speed, scalability, and sustainability for enterprises of all sizes. For the Midwest, it represents a major leap forward, providing low-latency access to AI workloads, a repeatable deployment playbook, and a foundation for the next wave of AI innovation.