Raising the Thermal Ceiling for AI Hardware

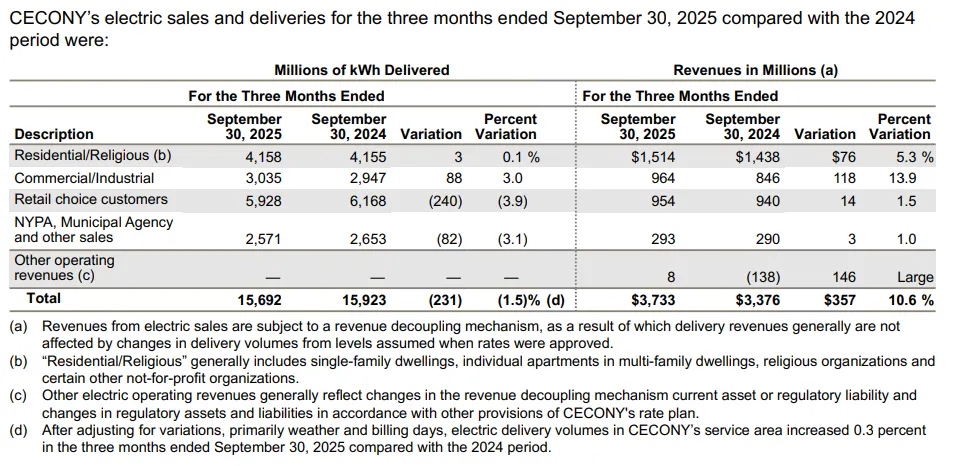

As Microsoft positions it, the significance of in-chip microfluidics goes well beyond a novel way to cool silicon. By removing heat at its point of generation, the technology raises the thermal ceiling that constrains today’s most power-dense compute devices. That shift could redefine how next-generation accelerators are designed, packaged, and deployed across hyperscale environments.

What this cooling change could enable:

- Higher-TDP accelerators and tighter packing. Where thermal density has been the limiting factor, in-chip microfluidics could enable denser server sleds, such as NVL-class trays or similar designs, or allow higher per-GPU power budgets without throttling.

- 3D-stacked and HBM-heavy silicon. Microsoft’s documentation explicitly ties microfluidic cooling to future 3D-stacked and high-bandwidth-memory (HBM) architectures, which would otherwise be heat-limited. By extracting heat inside the package, the approach could unlock new levels of performance and packaging density for advanced AI accelerators.
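The heat-limit argument for 3D stacking comes down to heat flux. When tiers are stacked on the same footprint, their power adds up while the area the heat must leave through stays the same. The minimal Python sketch below makes that arithmetic concrete. The function name and all power and area values are hypothetical illustrations, not Microsoft or Corintis figures, and the model ignores lateral spreading and the extra thermal resistance of each bonded tier.

```python
# Hypothetical powers and areas for illustration only.

def footprint_heat_flux_w_per_cm2(tier_powers_w: list[float], footprint_cm2: float) -> float:
    """Heat flux through a stack's shared footprint, in W/cm^2.

    Assumes every tier occupies the same footprint and all heat exits through
    that area, so tier powers add while the area stays fixed.
    """
    if footprint_cm2 <= 0:
        raise ValueError("footprint_cm2 must be positive")
    return sum(tier_powers_w) / footprint_cm2


single_die = footprint_heat_flux_w_per_cm2([800.0], footprint_cm2=8.0)
two_tier = footprint_heat_flux_w_per_cm2([800.0, 200.0], footprint_cm2=8.0)
print(f"single die: {single_die:.0f} W/cm^2")  # 100 W/cm^2
print(f"two-tier stack: {two_tier:.0f} W/cm^2")  # 125 W/cm^2
```

In this example, adding a 200 W tier on the same 8 cm² footprint raises the flux by 25 percent, before counting the added thermal resistance between tiers.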

Implications for the AI Data Center

If microfluidics can be scaled from prototype to production, its influence will ripple through every layer of the data center, from the silicon package to the white space and plant. The technology touches not only chip design but also rack architecture, thermal planning, and long-term cost models for AI infrastructure.

Rack Densities, White Space Topology, and Facility Thermals

Raising thermal efficiency at the chip level has a cascading effect on system design:

- GPU TDP trajectory. Press materials and analysis around Microsoft’s collaboration with Corintis suggest the feasibility of far higher thermal design power (TDP) envelopes than today’s roughly 1–2 kW per device. Corintis executives have publicly referenced dissipation targets in the 4 kW to 10 kW range, highlighting how in-chip cooling could sustain next-generation GPU power levels without throttling.

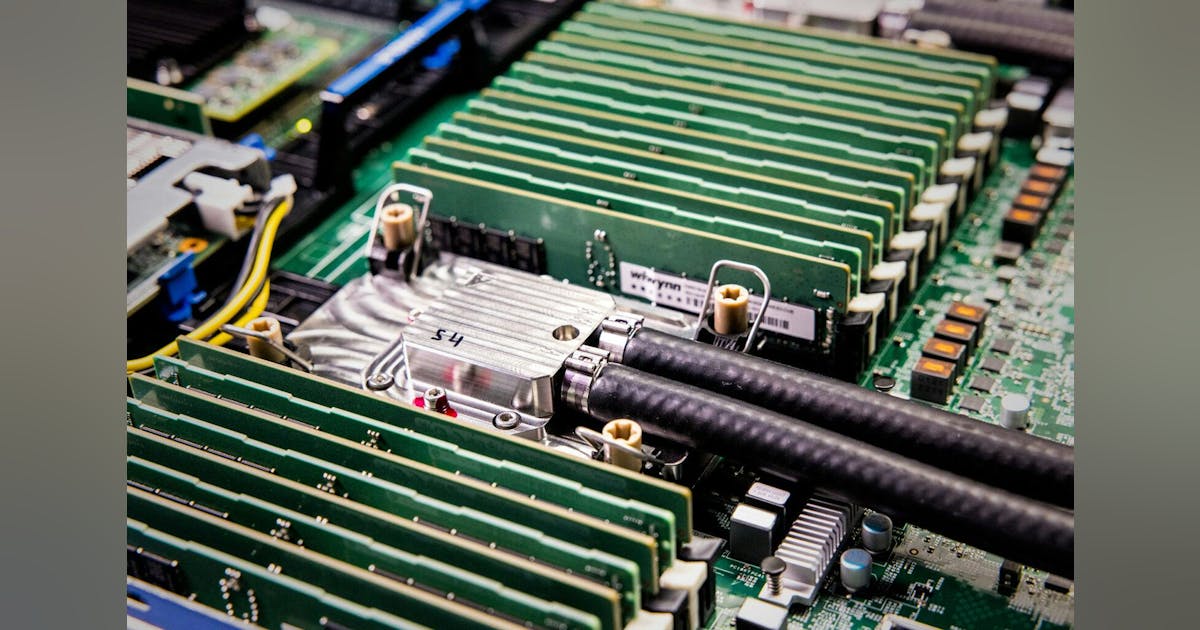

- Rack, ring, and row design. By removing much of the heat directly within the package, microfluidics could reduce secondary heat spread into boards and chassis. That simplification could enable smaller or fewer coolant distribution units (CDUs) per rack and push designs toward liquid-first rows with minimal air assist, or even liquid-only aisles dedicated to accelerator pods.

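- Facility cooling chain. Because in-chip channels pull heat out through a lower junction-to-coolant thermal resistance, a device can stay within its junction-temperature limit while drawing warmer coolant. That lets operators raise supply-water temperatures on secondary loops, broadening heat-rejection options such as more economizer hours or smaller chiller plants. Microsoft's materials emphasize the potential to lower power usage effectiveness (PUE) incrementally through cooling efficiency, and to reduce water usage effectiveness (WUE) by relying less on evaporative systems in suitable climates.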
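Two first-order relations sit behind these bullets. The steady-state energy balance, P = ṁ·c_p·ΔT, sets how much coolant a device of a given TDP needs. A lumped thermal-resistance model, T_j = T_in + P·R_th, shows why a lower junction-to-coolant resistance lets the same junction limit be met with warmer supply water. The Python sketch below illustrates both under those simplifying assumptions. The function names, the 90 °C junction limit, and both thermal-resistance values are hypothetical, and the inlet-temperature model ignores the coolant's own temperature rise along the channel.

```python
# Illustrative loop-sizing sketch. All device numbers below are hypothetical.

WATER_CP_J_PER_KG_K = 4186.0   # specific heat of water, approx. near room temperature
WATER_DENSITY_KG_PER_L = 1.0   # approximation; real density falls slightly as water warms


def required_flow_l_per_min(power_w: float, delta_t_k: float) -> float:
    """Coolant flow needed to carry power_w away with a delta_t_k temperature rise.

    From the steady-state energy balance P = m_dot * c_p * dT.
    """
    m_dot_kg_per_s = power_w / (WATER_CP_J_PER_KG_K * delta_t_k)
    return m_dot_kg_per_s / WATER_DENSITY_KG_PER_L * 60.0


def max_inlet_temp_c(t_junction_max_c: float, power_w: float, r_th_k_per_w: float) -> float:
    """Warmest coolant that keeps the junction at or below its limit.

    Uses one lumped junction-to-coolant thermal resistance: T_j = T_in + P * R_th.
    """
    return t_junction_max_c - power_w * r_th_k_per_w


for tdp in (1_200, 4_000, 10_000):
    print(f"{tdp:>6} W at 10 K rise: {required_flow_l_per_min(tdp, 10.0):.1f} L/min")

# Same 1 kW device and 90 C junction limit, two hypothetical thermal resistances.
print(max_inlet_temp_c(90.0, 1_000, 0.05))  # higher-resistance path: about 40 C
print(max_inlet_temp_c(90.0, 1_000, 0.02))  # lower-resistance path: about 70 C
```

At a 10 K coolant rise, the sketch gives roughly 1.7, 5.7, and 14.3 L/min for 1.2, 4, and 10 kW devices, which is one reason multi-kilowatt targets have plumbing consequences well beyond the package.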

Capex, Opex, and Total Cost of Ownership

Beyond thermal performance, microfluidics shifts both cost and complexity within the compute and facility stack:

- Silicon and package cost. Etching microchannels and integrating fluidic interfaces introduces new steps at the fabrication and packaging stages, increasing early unit costs and yield risks. However, at scale, the ability to downsize CDUs and operate at higher coolant temperatures could offset these premiums. Microsoft’s own projections (and early analyst interpretations) point toward lower operational cooling costs compared with cold-plate baselines at similar performance.

- Retrofit versus greenfield deployment. Retrofitting existing servers would require compatible modules and manifold redesigns, limiting near-term adoption. The largest efficiency gains will come from greenfield deployments built around microfluidics from the start, featuring shorter loop paths, right-sized CDUs, higher leaving-water temperatures (LWTs), and simplified air support.
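A simple payback calculation shows how an up-front silicon and packaging premium could be offset by lower cooling opex. The sketch below is undiscounted and uses hypothetical per-device figures chosen only for illustration; it does not reflect any disclosed Microsoft projection. The retrofit case is modeled only as a larger hypothetical premium with the same savings, which already stretches the payback period.

```python
# Hypothetical per-device cost figures; not taken from any Microsoft projection.

def simple_payback_years(capex_premium: float, annual_opex_saving: float) -> float:
    """Undiscounted years for annual cooling savings to repay an up-front premium."""
    if annual_opex_saving <= 0:
        return float("inf")  # the premium is never recovered
    return capex_premium / annual_opex_saving


def net_saving(capex_premium: float, annual_opex_saving: float,
               years: int, devices: int) -> float:
    """Undiscounted fleet-level saving after `years`, net of the per-device premium."""
    return devices * (annual_opex_saving * years - capex_premium)


# Retrofit modeled only as a larger premium; its real savings may also be smaller.
greenfield = simple_payback_years(capex_premium=600.0, annual_opex_saving=200.0)
retrofit = simple_payback_years(capex_premium=1_000.0, annual_opex_saving=200.0)
print(greenfield, retrofit)  # 3.0 5.0
print(net_saving(600.0, 200.0, years=5, devices=10_000))  # 4000000.0
```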

Reliability and Serviceability

Even with these advances, the concern about liquid near electronics remains. In this design, the leak risk shifts closer to the die itself.

Microsoft and Corintis emphasize that their back-side channel approach and reinforced interfaces isolate fluid paths from front-side wiring, localizing potential failures. So far, these reliability assessments are based on Microsoft’s internal testing; no independent, fleet-scale data has yet been published.
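A standard reliability result shows why internal testing cannot substitute for fleet data. If zero failures are observed over an exposure of T device-hours, and failures are assumed to follow a constant-rate Poisson process, the one-sided upper confidence bound on the failure rate is -ln(1 - c)/T. At 95 percent confidence this is roughly 3/T (the "rule of three"). The exposure figures in the sketch are hypothetical and say nothing about Microsoft's actual test program.

```python
import math


def zero_failure_rate_upper_bound(exposure_device_hours: float,
                                  confidence: float = 0.95) -> float:
    """Upper confidence bound on a failure rate when zero failures were observed.

    Assumes a constant-rate Poisson failure process; with zero events the
    one-sided bound is -ln(1 - confidence) / exposure (about 3 / exposure at 95%).
    """
    if exposure_device_hours <= 0:
        raise ValueError("exposure must be positive")
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must be between 0 and 1")
    return -math.log(1.0 - confidence) / exposure_device_hours


HOURS_PER_YEAR = 8766  # 365.25 days

# Hypothetical exposures, both with zero leaks observed.
lab = zero_failure_rate_upper_bound(100 * 2_000)                  # 100 devices x 2,000 h
fleet = zero_failure_rate_upper_bound(100_000 * HOURS_PER_YEAR)   # 100k devices x 1 year

print(f"lab:   <= {lab * HOURS_PER_YEAR:.3f} leaks per device-year")    # about 0.131
print(f"fleet: <= {fleet * HOURS_PER_YEAR:.2e} leaks per device-year")  # about 3.00e-05
```

A clean lab record is therefore compatible with a leak rate thousands of times higher than what a clean fleet record would rule out.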

Supply Chain and Standards

Implementing backside fluid channels requires tight coordination across the semiconductor supply chain, from foundries performing through-silicon via (TSV) or backside processing to outsourced semiconductor assembly and test (OSAT) partners managing package assembly and fluidic I/O.

Microsoft says it is working with its fabrication and silicon partners to prepare for production integration across its data centers. Broader ecosystem partnerships or open standards have not yet been announced, suggesting that interoperability and standardization could become important next steps for adoption.

Constraints and Open Questions

Despite Microsoft’s promising results, significant manufacturing and ecosystem challenges remain before in-chip microfluidics can scale beyond prototypes:

- Manufacturing yield and throughput. Open questions remain about whether semiconductor fabs can integrate back-side fluid channels at volume without unacceptable defect rates. Each additional process step (etching channels, adding seals, bonding interfaces) creates new potential failure points. Who bears responsibility for rework or warranty when a fluidic leak appears after assembly also remains undefined. Microsoft says it is working with its fabrication and silicon partners toward production readiness, but it has not disclosed timelines or yield targets.

- Ecosystem adoption. Even if chipmakers broadly endorse the technology, it could take years for vendors such as Nvidia, AMD, or Intel to ship GPUs or accelerators with native microfluidic support. Until then, early adopters would need custom modules or retrofitted packages, limiting near-term deployment. While ecosystem signals are positive, public production commitments remain preliminary. The technology’s promise is clear, but scaling to high-volume manufacturing will be the decisive test.

- Standards and interoperability. Another key unknown is whether the industry will converge on common fluidic interfaces and specifications. Without something akin to OCP-style standards for manifolds, fittings, and leak detection, operators may struggle to mix OEM hardware within the same rack or pod without extensive customization. Establishing these standards will be critical for multi-vendor compatibility and long-term adoption.
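The yield concern compounds multiplicatively. If each added step (etching channels, adding seals, bonding interfaces) fails independently, the surviving fraction is the product of the step yields, and cost per good part scales roughly with its inverse. The step yields below are hypothetical placeholders, not disclosed fab data.

```python
import math


def compound_yield(step_yields: list[float]) -> float:
    """Fraction of parts that survive every step, assuming independent failures."""
    return math.prod(step_yields)


BASELINE_YIELD = 0.90  # hypothetical yield of the existing package flow
# Hypothetical yields for added steps: etch channels, add seals, bond fluidic interface.
ADDED_STEP_YIELDS = [0.98, 0.99, 0.97]

with_fluidics = BASELINE_YIELD * compound_yield(ADDED_STEP_YIELDS)
# Cost per good part scales with 1 / yield, ignoring the cost of the added steps themselves.
cost_increase = BASELINE_YIELD / with_fluidics - 1.0

print(f"yield with fluidic steps: {with_fluidics:.3f}")  # 0.847
print(f"cost per good part: +{cost_increase:.1%}")       # +6.3%
```

Even steps that each look individually high-yield combine into a noticeable premium per good part, which is why throughput and yield targets matter so much for volume production.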

Beyond the Cold Plate: A New Thermal Frontier

Microsoft’s microfluidics initiative is less a one-off cooling experiment than an attempt to reset the thermal ceiling that limits AI compute growth. By moving heat extraction inside the silicon package and using AI to shape coolant flow to each chip’s hotspots, the company is opening the door to higher-power accelerators, denser racks, warmer water loops, and simpler plant infrastructure, all while maintaining or improving overall efficiency.

The concept has credibility. In prototype testing, Microsoft reported up to 3× better heat removal than top cold-plate systems and up to a 65 percent reduction in the maximum temperature rise of the silicon inside a GPU, and it has named Corintis as its design partner. Still, the path from lab to large-scale deployment depends on manufacturing throughput, ecosystem standards, and fleet-level reliability data that have yet to emerge.

If those pieces fall into place on hyperscaler timelines, in-chip microfluidics could evolve from a laboratory innovation into a foundational design principle for next-generation AI data halls, one that redefines how the industry manages power, density, and efficiency at scale.