At this year’s OCP Global Summit, Flex made a declaration that resonated across the industry: the era of slow, bespoke data center construction is over. AI isn’t just stressing the grid or forcing new cooling techniques—it’s overwhelming the entire design-build process.

To meet this moment, Flex introduced a globally manufactured, fully integrated data center platform aimed directly at multi-gigawatt AI campuses. The company claims it can cut deployment timelines by as much as 30 percent by shifting integration upstream into the factory and unifying power, cooling, compute, and lifecycle services into pre-engineered modules.

This is not a repositioning on the margins. Flex is effectively asserting that the future hyperscale data center will be manufactured like a complex industrial system, not built like a construction project.

On the latest episode of The Data Center Frontier Show, we spoke with Rob Campbell, President of Flex Communications, Enterprise & Cloud, and Chris Butler, President of Flex Power, about why Flex believes this new approach is not only viable but necessary in the age of AI.

The discussion revealed a company leaning heavily on its global manufacturing footprint, its cross-industry experience, and its expanding cooling and power technology stack to redefine what deployment speed and integration can look like at scale.

AI Has Broken the Old Data Center Model

From the outset, Campbell and Butler made clear that Flex’s strategy is a response to a structural shift. AI workloads no longer allow power, cooling, and compute to evolve independently. Densities have jumped so quickly—and thermals have risen so sharply—that the white space, gray space, and power yard are now interdependent engineering challenges. Higher chip TDPs, liquid-cooled racks approaching one to two megawatts, and the need to assemble entire campuses in record time have revealed deep fragility in traditional workflows.

As Butler put it, AI has fused what used to be separate silos. “Power, heat, and scale are now the same problem,” he said. “Solving one without solving the others doesn’t work anymore.” Flex’s platform is built around that reality: the belief that only tightly integrated, factory-built systems can keep pace with AI’s acceleration curve.

The Case for Factory Integration—and a 30% Faster Build Cycle

Flex’s claim of up to 30 percent acceleration in time-to-market comes from collapsing large portions of the design, integration, and commissioning process into its global network of factories. Instead of rolling truckloads of components to a bare site and stitching them together under pressure, Flex assembles power systems, cooling modules, and compute blocks into transportable pods and skids long before they reach the data center footprint.

Campbell emphasized that integration is the critical innovation. By the time a unit arrives on site, the heavy work—testing, configuration, quality validation—has already been completed in controlled environments. What remains is final hookup. In practice, this transforms an 18-month schedule into something closer to a year, and does so with fewer surprises, fewer coordination breakdowns, and far less risk for the customer.
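The arithmetic behind that schedule claim is easy to check. The short Python sketch below applies the "up to 30 percent" reduction to a few baseline timelines; the function name and the idea of applying the full 30 percent uniformly are illustrative assumptions, not a Flex planning model.

```python
def accelerated_schedule(baseline_months: float, reduction: float) -> float:
    """Return the schedule length after removing `reduction` (0 to 1) of the baseline.

    Hypothetical helper for illustration; it assumes the reduction applies
    uniformly to the whole schedule, which real projects will not match exactly.
    """
    if not 0.0 <= reduction <= 1.0:
        raise ValueError("reduction must be between 0 and 1")
    return baseline_months * (1.0 - reduction)


# Apply the best-case 30 percent figure to several baseline schedules.
for baseline in (12, 18, 24):
    shortened = accelerated_schedule(baseline, 0.30)
    print(f"{baseline} months -> {shortened:.1f} months")
```

At the full 30 percent, an 18-month baseline comes out at about 12.6 months, which matches the "closer to a year" description.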

This model also aligns with Flex’s longstanding expertise in modular industrial systems across automotive, aerospace, and electronics—industries that long ago recognized the productivity gains in building complex systems in factories rather than fields.

JetCool, Modular CDUs, and the Push Toward Rack-Scale Liquid Cooling

One of the most interesting dimensions of Flex’s approach is how its 2024 acquisition of JetCool now sits at the center of its integrated platform. JetCool’s chip-level liquid cooling technology provides the fine-grained thermal control that AI and HPC workloads increasingly require, but the real value emerges when it plugs into Flex’s rack-level and pod-level cooling architecture.

Butler explained that Flex’s modular CDU design allows operators to scale cooling capacity from 600 kilowatts up to 1.2 or even 1.8 megawatts by daisy-chaining 300-kilowatt building blocks. Existing racks can be upgraded, or newly deployed racks can arrive at full capacity. Multiple 1.8 MW CDU racks can be combined in a pod or skid, creating a fluid-cooled building block ready for deployment.
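The building-block scaling Butler describes can be sketched in a few lines of Python. This is a simplified illustration that assumes capacity adds linearly across 300-kilowatt blocks and ignores redundancy (such as an N+1 spare block) and hydraulic limits, neither of which was detailed in the conversation; the function names are hypothetical.

```python
import math

BLOCK_KW = 300  # building-block size quoted in the interview


def blocks_needed(load_kw: float, block_kw: int = BLOCK_KW) -> int:
    """Smallest number of blocks whose combined capacity covers the load.

    Assumes simple linear addition of capacity, with no redundancy margin.
    """
    if load_kw <= 0:
        raise ValueError("load_kw must be positive")
    return math.ceil(load_kw / block_kw)


def chain_capacity_kw(blocks: int, block_kw: int = BLOCK_KW) -> int:
    """Total cooling capacity of a chain of identical blocks."""
    return blocks * block_kw


# The three capacity points mentioned in the article.
for target_kw in (600, 1200, 1800):
    n = blocks_needed(target_kw)
    print(f"{target_kw} kW -> {n} blocks ({chain_capacity_kw(n)} kW)")
```

Under these assumptions, the 600 kW, 1.2 MW, and 1.8 MW configurations correspond to two, four, and six blocks respectively.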

The throughline is clear: by integrating coolant flow from chip to rack to pod, Flex is aiming to eliminate the fragmentation and integration gaps that slow down high-density deployments today. Its strategy implicitly treats liquid cooling not as an exception or a retrofit, but as the default for AI-oriented rack design going forward.

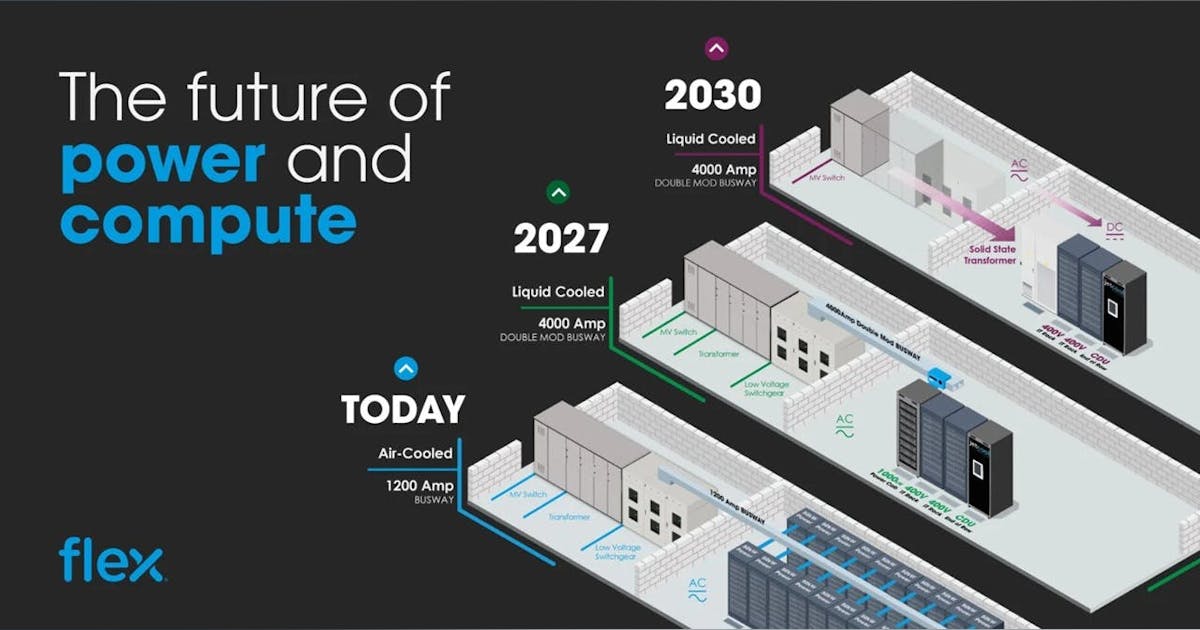

Higher-Voltage DC Power: From 400V Today to 800V Tomorrow

Power delivery is undergoing a similar transformation, and Flex is deeply embedded in that shift as well. The company is already working on a 400-volt DC, 1-megawatt rack architecture with a major hyperscaler and partnering with NVIDIA on the emerging 800-volt workstream. The move toward higher DC voltages is driven by simple math: for a given power level, doubling the voltage halves the current, which reduces copper requirements and fits more power into smaller footprints, while fewer conversion stages improve efficiency.
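A rough Python sketch makes the voltage math concrete. It uses only the relationships I = P / V and conduction loss = I²R, and it applies the 1-megawatt rack figure to both 400 V and 800 V purely for comparison; that pairing, the function names, and the idealized lossless-conversion framing are assumptions for illustration rather than Flex design data.

```python
def bus_current_a(power_w: float, voltage_v: float) -> float:
    """DC current drawn at a given power and bus voltage (I = P / V)."""
    if voltage_v <= 0:
        raise ValueError("voltage_v must be positive")
    return power_w / voltage_v


def conduction_loss_ratio(v_low: float, v_high: float) -> float:
    """How many times larger I^2 * R loss is at v_low than at v_high.

    Assumes the same delivered power and the same conductor resistance.
    """
    return (v_high / v_low) ** 2


RACK_POWER_W = 1_000_000  # 1 MW rack, the figure quoted for the 400 V design

for volts in (400, 800):
    amps = bus_current_a(RACK_POWER_W, volts)
    print(f"{volts} V bus: {amps:.0f} A")

print("Loss ratio, 400 V vs 800 V:", conduction_loss_ratio(400, 800))
```

For a 1 MW load this gives 2,500 A at 400 V and 1,250 A at 800 V, and through an identical conductor the resistive loss at 400 V would be four times the loss at 800 V. Real busbar sizing also depends on thermal limits, fault currents, and safety margins that this sketch leaves out.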

Butler was straightforward about the challenges. Higher-voltage DC eliminates the zero crossing that makes AC more forgiving, raising the stakes for arc flash, incident energy, and NFPA 70E compliance. Flex leans on decades of power conversion experience to validate safety at every step, arguing that it can bring the right testing infrastructure and engineering rigor to a space that is moving quickly.

Looking further ahead, Butler anticipates a future in which data centers effectively erase the boundary between IT and OT. Medium-voltage distribution, including 34.5 kV in U.S. markets, may eventually extend directly to the rack, with compact conversion technology stepping down to 400 or 800 volts at the point of use. That kind of convergence would radically simplify power rooms, shrink footprints, and reduce losses.

Global Manufacturing as Strategic Advantage

If Flex’s technology stack is important, its manufacturing scale may be even more central to its vision. The company operates more than 110 manufacturing sites across 30 countries, with the ability to build regionally for regional markets and shift production across geographies to navigate tariffs, geopolitical issues, or supply disruptions.

Campbell described this footprint as Flex’s “superpower,” noting that the company has added eight million square feet of manufacturing space in the last two years alone, including a newly opened 400,000-square-foot power facility in Dallas and a doubled European footprint for power products. In a world where hyperscalers are racing to build AI campuses across multiple continents simultaneously, Flex believes this globally diversified model offers a unique structural advantage in speed, cost, and resilience.

Lifecycle Intelligence and Closing the Operational Gap

One of the candid revelations during the conversation was the extent to which data center equipment is often mismanaged after commissioning. Butler pointed to real-world examples where operators had insufficient visibility into the health of power and cooling systems, leading to performance degradation over time.

Flex’s answer is what it calls Lifecycle Intelligence—a platform-layer capability that draws together monitoring, predictive analytics, and system-level optimization across power, cooling, and IT. By designing these capabilities into the architecture from the start, Flex hopes to give operators not just dashboards, but actionable insights that detect and respond to changes in equipment behavior. Just as importantly, software-defined updates allow the platform to evolve over time, closing the operational gap that emerges in static or siloed deployments.

Sustainability Through Modular Design and Circularity

Sustainability is a recurring thread in Flex’s strategy, tied directly to its commitment to circular economy principles. Campbell noted that the company designs its modules for reuse and disassembly rather than disposal, and that its global footprint allows decommissioned equipment to be reworked or recycled locally rather than shipped across continents.

The modularity of the platform itself supports this circular approach. Pods, racks, and cooling modules can be redeployed, upgraded, or refreshed without scrapping large portions of infrastructure, which reduces embodied carbon and aligns with emerging sustainability requirements from hyperscalers and regulators alike.

Looking Toward 2030: Convergence, Modularity, and Rapid Assembly

Campbell and Butler closed the conversation by sketching a vision of the data center landscape as 2030 approaches. Both anticipate the dissolution of traditional boundaries—between IT and power, between rack and room, and between white space and gray space. They foresee data centers built from hundreds of modular pods and skids, each integrating liquid cooling, power systems, and compute hardware, and capable of being deployed and brought online within 30 to 60 days.

In this model, rapid deployment becomes the defining competitive advantage. Traditional 12-to-24-month construction timelines will give way to a manufacturing-driven approach where integration happens in the factory and the data center arrives on-site largely complete. If their forecasts hold, the physics of AI—and the economics required to support it—will leave little alternative.

Bottom Line: Flex Is Betting Big on Integration as the Foundation of the AI Era

Flex’s entrance into the data center sector is not incremental. It is a full-stack proposition built around modular pods, rack-to-chip liquid cooling, high-voltage DC power architectures, global manufacturing, lifecycle intelligence, and circular design. Together, these elements form a vision of the AI data center as a manufactured system—repeatable, standardized, and deployable at the pace of today’s AI growth curve.

In an era defined by gigawatt-scale campuses, skyrocketing densities, and unprecedented deployment pressure, Flex is betting that factory integration is the only model capable of keeping up. Whether the industry fully pivots in that direction remains to be seen, but Flex’s opening salvo signals that the conversation around how to build the AI infrastructure of the next decade has decisively shifted.