A Massive TPU Commitment—and a Strategic Signal From Google

This is not a casual “we spun up a few pods on GCP.” Anthropic is effectively reserving a substantial share of Google’s future TPU capacity and tying that scale directly into Google Cloud’s enterprise AI go-to-market.

The compute commitment has been described as being worth tens of billions of dollars over the life of the agreement, signaling Google’s intention to anchor Anthropic as a marquee external TPU customer.

Google’s Investment Outlook: Still Early, But Potentially Transformational

Reports from Reuters (citing Business Insider) on November 6 indicate that Google is exploring whether to deepen its financial investment in Anthropic. The discussions are early and non-binding, but the structures under consideration (including convertible notes or a new priced round paired with additional TPU and cloud commitments) suggest a valuation that could exceed $350 billion. Google has not commented publicly.

This would come on top of Alphabet’s existing position, reported at more than $3 billion invested and 14% ownership, and follows Anthropic’s September 2025 $13 billion Series F, which valued the company at $183 billion. None of these prospective terms are final, but the direction of travel is clear: Google is looking to bind TPU productization, cloud consumption, and strategic alignment more tightly together.

Anthropic’s Multi-Cloud Architecture Reaches Million-Accelerator Scale

Anthropic’s strategy depends on not being bound to a single hyperscaler or silicon roadmap. In November 2024, the company named AWS its primary cloud and training partner, a deal that brought Amazon’s total investment to $8 billion and committed Anthropic to Trainium for its largest models. The two companies recently activated Project Rainier, an AI supercomputer cluster in Indiana with roughly 500,000 Trainium2 chips, and Anthropic is expected to scale to more than 1 million Trainium2 chips on AWS by the end of 2025.

The Google Cloud expansion mirrors that scale on a parallel stack. Anthropic is now positioned to run on million-chip footprints across both AWS (Trainium2) and Google Cloud (TPUs), supplemented by continued significant use of Nvidia GPUs. This symmetrical hyperscale deployment—across two clouds, two accelerator families, and multiple software stacks—is extraordinarily rare in a market where most foundation-model labs rely overwhelmingly on a single hyperscaler and a single accelerator vendor.

Anthropic has, in effect, become a multi-cloud, multi-accelerator AI factory operator, using architectural diversification as leverage for supply, pricing, and roadmap resilience.

TPUs Go Fully Productized and Anthropic Becomes the Proof Point

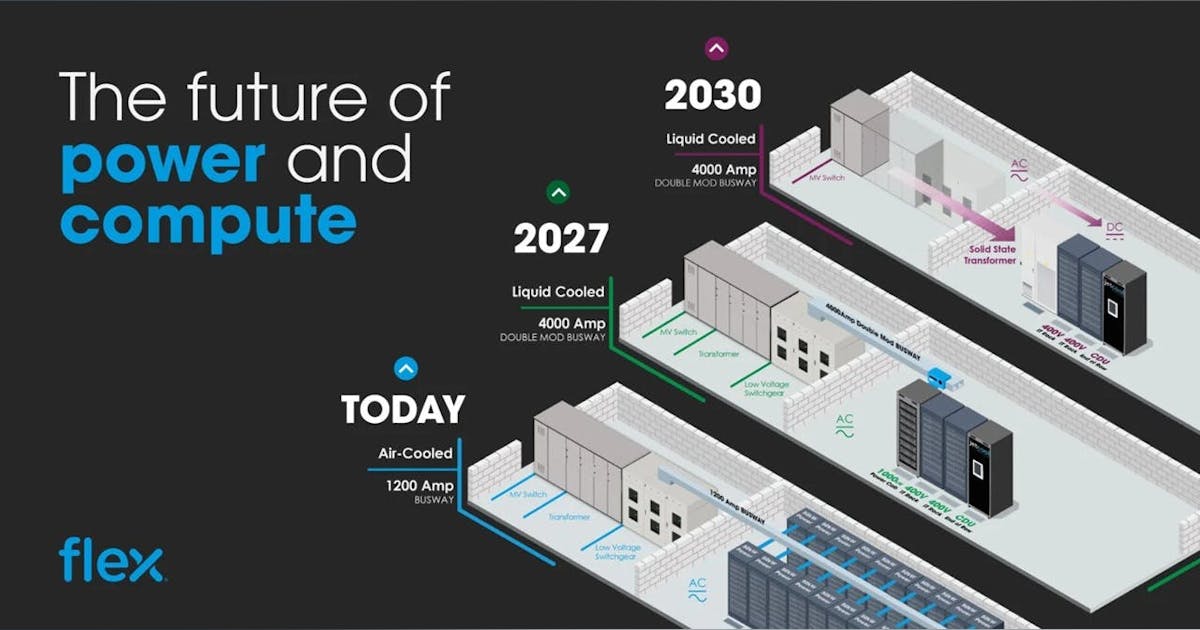

For most of their history, TPUs were effectively an internal accelerator for Google’s own workloads. That shifted over the last 18 months as Google Cloud began aggressively productizing TPUs for external customers, positioning the chips as a lower-cost, more power-efficient alternative to Nvidia GPUs and a way to diversify supply at scale.

Google has been courting major AI labs—including Anthropic, Meta, and Oracle—to validate TPUs for frontier-model training. With the expanded Anthropic deal, Google secures a marquee independent lab running TPUs at million-chip scale. It’s a powerful signal that TPUs are no longer just for Gemini or first-party Google services; they are now being positioned as credible, production-grade silicon for third-party frontier training.

Strategically, Alphabet is aligning TPU productization, cloud consumption, and model distribution so that whether an enterprise standardizes on Gemini, Claude, or uses a mix, Google Cloud monetizes both the workloads and the underlying accelerator fabric.

And while both Amazon’s and Google’s financial investments in Anthropic are contractually constrained (no board seats, no voting rights), the two companies retain substantial commercial influence. Control of pricing, priority access to new silicon generations, integration depth, and co-marketing all function as informal levers.

The result is not a picture of a “wholly independent lab,” but rather a multi-anchored AI infrastructure tenant operating across two hyperscalers—each with negotiated guardrails, each with meaningful influence, and neither with structural control.

Why Anthropic Makes This Deal

Anthropic has been clear that it does not want to be dependent on any single vendor’s silicon roadmap, pricing structure, or data center build-out timeline. The expanded TPU agreement with Google, layered on top of AWS Trainium2 and ongoing Nvidia GPU use, gives Anthropic strategic flexibility across several fronts:

- First, it strengthens supply-chain resilience. If Nvidia experiences shortages, or if either Trainium or TPU capacity is constrained by outages, export controls, or regional grid curtailments, Anthropic can dynamically shift workloads across clouds and architectures.

- Second, it enhances pricing leverage. By maintaining large-scale deployments across AWS and Google Cloud, Anthropic can benchmark performance and negotiate per-FLOP or per-token economics from a position of comparative strength.

- Third, it preserves chip-level optionality. Different architectures excel with different model sizes, training regimes, sequence lengths, and serving patterns. TPUs are deeply aligned with Google’s software stack, while Trainium is optimized for AWS’s ecosystem, giving Anthropic the ability to match hardware to workload.

Beyond compute, the Google partnership expands Anthropic’s enterprise distribution. Claude is becoming more deeply integrated into Vertex AI and available via the Google Cloud Marketplace, enabling tens of thousands of GCP customers to adopt Anthropic models with minimal friction. Planned integrations into Google Workspace (alongside Gemini) could expose Claude-powered capabilities to hundreds of millions of users through co-branded or white-label features.

This distribution complements Anthropic’s existing reach through Amazon Bedrock and other partners, further reinforcing the company’s strategy of broad, multi-channel access rather than dependence on any single platform.

Where Anthropic Now Fits in the Competitive AI Landscape

The hyperscaler–AI lab ecosystem is crystallizing into a few dominant alignments:

- Microsoft + OpenAI + Nvidia, anchored in Azure

- Amazon + Anthropic + Trainium & Nvidia, anchored in AWS

- Google + Anthropic + TPUs & Nvidia, anchored in GCP

What makes Anthropic unusual is that it maintains deep, strategic ties to two hyperscalers—AWS and Google—while continuing to position itself as an independent frontier-model lab. Its Claude 4.x and 4.5 models are performing at or above peers in key enterprise benchmarks such as reasoning and coding, which strengthens its role as a credible third pole in the foundation-model market.

This dual-hyperscaler architecture also provides regulatory insulation. In an environment where antitrust authorities are increasingly skeptical of exclusive cloud–model lock-in, Anthropic’s multi-cloud posture is a defensible shield: it can credibly claim it is not structurally beholden to any single provider.

Anthropic’s influence extends beyond technology and into policy. The company is already active in AI safety and compute-governance discussions. Its unique position as a frontier lab operating simultaneously across the AWS, Google Cloud, and Nvidia ecosystems may give it outsized weight in shaping debates over compute allocation, safety standards, and access to next-generation systems.

With Anthropic reportedly laying the groundwork for a public listing as soon as 2026, this million-chip-scale TPU commitment from Google Cloud may serve as more than a technical or strategic play. It could help underpin one of the biggest IPOs in tech history. For investors and regulators watching, the deal begins to look like a concrete signal that Anthropic is positioning itself not just as a frontier AI lab, but as a long-term, enterprise-scale infrastructure and services business.