(Update) January 23, 2026, 8:33 PM GMT: Article updated.

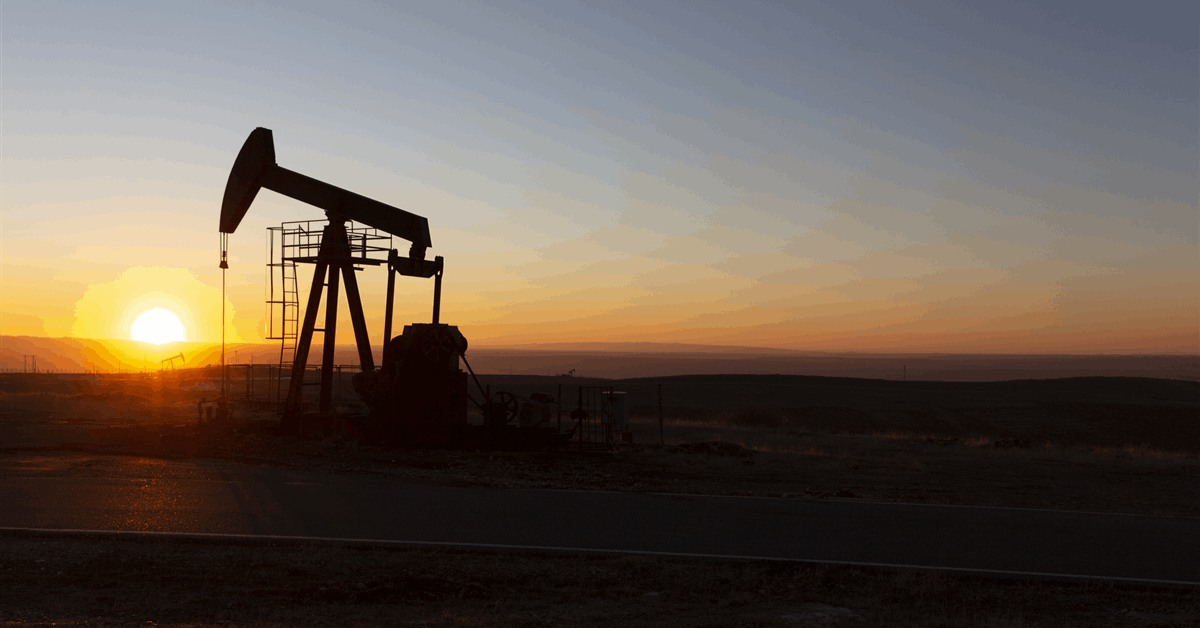

US natural gas futures for February delivery surged by 70% this week as the market braced for a historic winter storm that’s poised to send temperatures plummeting and boost demand for the heating fuel.

On Friday, futures settled up 4.6%, or 23 cents, at $5.275 per million British thermal units. That settlement was $2.172 per million Btu higher than the end of last week and capped by far the largest weekly percentage gain in records going back to 1990.
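As a quick arithmetic check, the figures above imply the prior week's settlement and the size of both moves. This is a minimal sketch that assumes the reported 23-cent daily gain is exact (the article rounds it); the variable names are illustrative only.

```python
# Figures reported in the article, in USD per million Btu.
settle_friday = 5.275   # February contract settlement on Friday
weekly_change = 2.172   # gain versus the end of last week
daily_change = 0.23     # Friday's gain; assumed exact, though reported as "23 cents"

# Implied settlements before each move.
prior_week_settle = settle_friday - weekly_change
prior_day_settle = settle_friday - daily_change

# Percentage changes relative to the starting level.
weekly_pct = weekly_change / prior_week_settle * 100
daily_pct = daily_change / prior_day_settle * 100

print(f"Implied prior-week settlement: ${prior_week_settle:.3f}")
print(f"Weekly gain: {weekly_pct:.1f}%")
print(f"Friday's gain: {daily_pct:.1f}%")
```

The implied prior-week settlement is about $3.103, which makes the $2.172 increase a gain of roughly 70%, consistent with the headline figure. Friday's 23 cents works out to about 4.6%.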

Natural gas prices for near-term delivery at regional trading hubs across the US also jumped. So-called cash prices for weekend delivery at the benchmark Henry Hub in Louisiana surged on Friday to more than $28 per million Btu, according to traders, compared with $8.42 on Thursday.

Spot prices at the SoCal Citygate hub in California traded as high as $8 per million Btu, up from $4.42 on Thursday, as pipeline deliveries of gas from the Permian Basin in West Texas to the West Coast have likely been reduced, traders said. Spot prices at the Houston Ship Channel were trading at $30 per million Btu on Friday morning, traders said. The Transco Zone 6 non-New York index, an indicator for prices from Baltimore to New Jersey, traded Friday afternoon at $58 per million Btu, traders said.

This week's surge has been driven by forecasts for below-normal temperatures across most of the country, threatening to boost gas consumption and drain inventories. The freeze, particularly in the southern gas-producing states, has raised concerns about water icing in pipelines, potentially disrupting output starting this weekend. One energy consultancy, Energy Aspects, raised its forecast for production lost to so-called freeze-offs over the next 14 days to 86.4 billion cubic feet, from 63.7 bcf on Thursday. The new estimate is equivalent to 5.5% of US gas production.

The shift in US weather forecasts came days after hedge funds turned more bearish on gas at the end of last week, leaving the market poised for a rally as traders rushed to close out those wagers. Gas prices briefly climbed above $5.50 per million Btu on Thursday, a level at which all short positions would be wiped out, according to a Citigroup Inc. analysis published that day.
