If you were in one of the nearly 40 million U.S. households that tuned in to Super Bowl LIX this year, then in addition to watching the Philadelphia Eagles trounce the Kansas City Chiefs, you may have caught an advertisement for OpenAI.

This is the company’s first Super Bowl ad, and it cost a reported $14 million, in keeping with the astronomical sums commanded by ads during the big game, which some viewers tune in to see instead of the football. As you’ll see in a copy embedded below, the OpenAI ad depicts various advancements throughout human history, leading up to ChatGPT today and what OpenAI calls the “Intelligence Age.”

While reaction to the ad was mixed — I’ve seen more praise and defense for it than criticism in my feeds — it clearly indicates that OpenAI has arrived as a major force in American culture, and quite obviously seeks to connect to a long lineage of invention, discovery and technological progress that’s taken place here.

On its own, the OpenAI Super Bowl ad seems to me to be a totally inoffensive and simple message designed to appeal to the widest possible audience, which makes it perfect for the Super Bowl and its viewership across demographics. In a way, it is so smooth and uncontroversial that it is forgettable.

But couple it with a blog post OpenAI CEO Sam Altman published on his personal website earlier on Sunday, titled “Three Observations,” and suddenly OpenAI’s assessment of the current moment and the future becomes much more dramatic and stark.

Altman begins the blog post with a pronouncement about artificial general intelligence (AGI), the raison d’etre of OpenAI’s founding and its ongoing efforts to release more and more powerful AI models such as the latest o3 series. This pronouncement, like OpenAI’s Super Bowl ad, also seeks to connect OpenAI’s work building these models and approaching this goal of AGI with the history of human innovation more broadly.

“Systems that start to point to AGI* are coming into view, and so we think it’s important to understand the moment we are in. AGI is a weakly defined term, but generally speaking we mean it to be a system that can tackle increasingly complex problems, at human level, in many fields.

People are tool-builders with an inherent drive to understand and create, which leads to the world getting better for all of us. Each new generation builds upon the discoveries of the generations before to create even more capable tools—electricity, the transistor, the computer, the internet, and soon AGI.”

A few paragraphs later, he even seems to concede that AI — as many developers and users of the tech agree — is simply another new tool. Yet he immediately flips to suggest this may be a much different tool than anyone in the world has ever experienced to date. As he writes:

“In some sense, AGI is just another tool in this ever-taller scaffolding of human progress we are building together. In another sense, it is the beginning of something for which it’s hard not to say ‘this time it’s different’; the economic growth in front of us looks astonishing, and we can now imagine a world where we cure all diseases, have much more time to enjoy with our families, and can fully realize our creative potential.”

The idea of “curing all diseases,” while certainly appealing, mirrors something rival tech boss Mark Zuckerberg of Meta also set out to do with the Chan Zuckerberg Initiative, the medical research nonprofit he co-founded with his wife, Priscilla Chan. As of two years ago, the Chan Zuckerberg Initiative’s proposed timeline for reaching this goal was 2100. Yet now, thanks to the progress of AI, Altman seems to believe it’s attainable even sooner, writing: “In a decade, perhaps everyone on earth will be capable of accomplishing more than the most impactful person can today.”

Altman and Zuck are hardly the only two high-profile tech billionaires interested in medicine, and in longevity science in particular. Google’s co-founders, especially Sergey Brin, have put money toward analogous efforts. In fact, at one point there were (and perhaps still are) so many tech industry leaders interested in prolonging human life and ending disease that back in 2017, The New Yorker magazine ran a feature article entitled: “Silicon Valley’s Quest to Live Forever.”

This utopian notion of ending disease and ultimately death seems patently hubristic to me on the face of it — how many folklore stories and fairy tales are there about the perils of trying to cheat death? — but it aligns neatly with the larger techno-utopian beliefs of some in the industry, which have been helpfully grouped by AGI critics and researchers Timnit Gebru and Émile P. Torres under the umbrella term TESCREAL, an acronym for “transhumanism, Extropianism, singularitarianism, (modern) cosmism, Rationalism, Effective Altruism, and longtermism,” in their 2023 paper.

As these authors elucidate, the veneer of progress sometimes masks uglier beliefs, such as in the inherent superiority or greater humanity of those with higher IQs or of specific demographics, ultimately evoking the race science and phrenology of more openly discriminatory and oppressive ages past.

There’s nothing to suggest in Altman’s note that he shares such beliefs, mind you…in fact, rather the opposite. He writes:

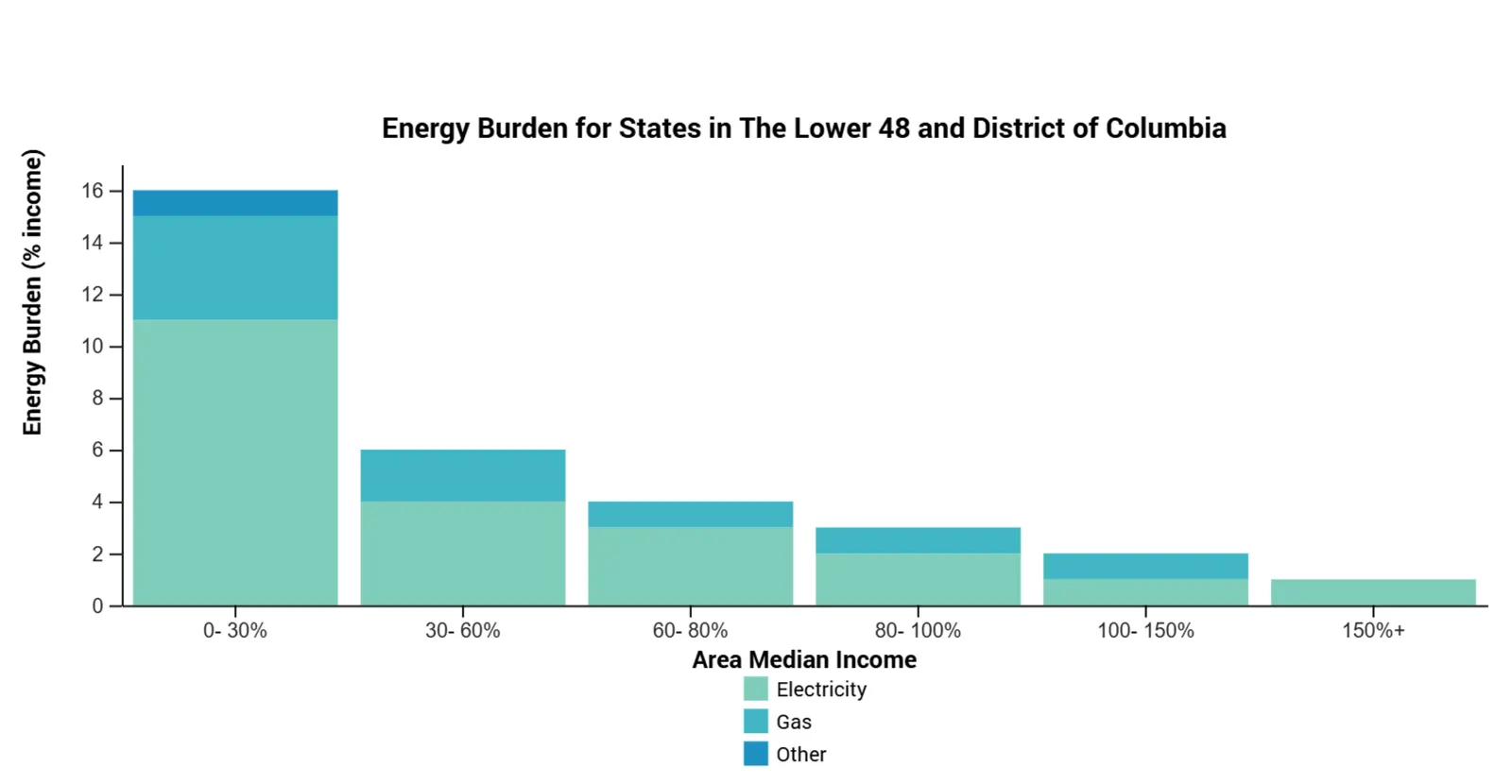

“Ensuring that the benefits of AGI are broadly distributed is critical. The historical impact of technological progress suggests that most of the metrics we care about (health outcomes, economic prosperity, etc.) get better on average and over the long-term, but increasing equality does not seem technologically determined and getting this right may require new ideas.”

In other words, he wants to ensure everyone’s life gets better with AGI but is uncertain how to achieve that. It’s a laudable notion, and one that maybe AGI itself could help answer. For one thing, though, OpenAI’s latest and greatest models remain closed and proprietary, unlike competitors such as Meta’s Llama family and DeepSeek’s R1. The latter has apparently caused Altman to reassess OpenAI’s approach to the open source community, as he mentioned in a recent, separate Reddit AMA thread. Perhaps OpenAI could start by open sourcing more of its technology to ensure it spreads to more users, more equally?

Meanwhile, speaking of specific timelines, Altman suggests that while the next few years may not be wholly remade by AI or AGI, he is more confident of a visible impact by 2035, a decade from now. As he puts it:

“The world will not change all at once; it never does. Life will go on mostly the same in the short run, and people in 2025 will mostly spend their time in the same way they did in 2024. We will still fall in love, create families, get in fights online, hike in nature, etc.

But the future will be coming at us in a way that is impossible to ignore, and the long-term changes to our society and economy will be huge. We will find new things to do, new ways to be useful to each other, and new ways to compete, but they may not look very much like the jobs of today.

Anyone in 2035 should be able to marshall [sic] the intellectual capacity equivalent to everyone in 2025; everyone should have access to unlimited genius to direct however they can imagine. There is a great deal of talent right now without the resources to fully express itself, and if we change that, the resulting creative output of the world will lead to tremendous benefits for us all.”

Where does this leave us? Critics of OpenAI would say it’s more empty hype designed to keep placating OpenAI’s deep-pocketed investors, such as SoftBank, and to put off any pressure to deliver working AGI for a while longer.

But having used these tools myself, and having watched and reported on other users and seen what they’ve been able to accomplish (such as writing complex software within mere minutes without much background in the field), I’m inclined to believe Altman is serious in his prognostications, and hopeful in his commitment to equal distribution.

Yet keeping all the best models closed up under a subscription bundle clearly is not the way to attain equal access to AGI. My biggest question remains what the company will do under his leadership to ensure it moves in the direction he so clearly articulated, and that the Super Bowl ad also celebrated.
