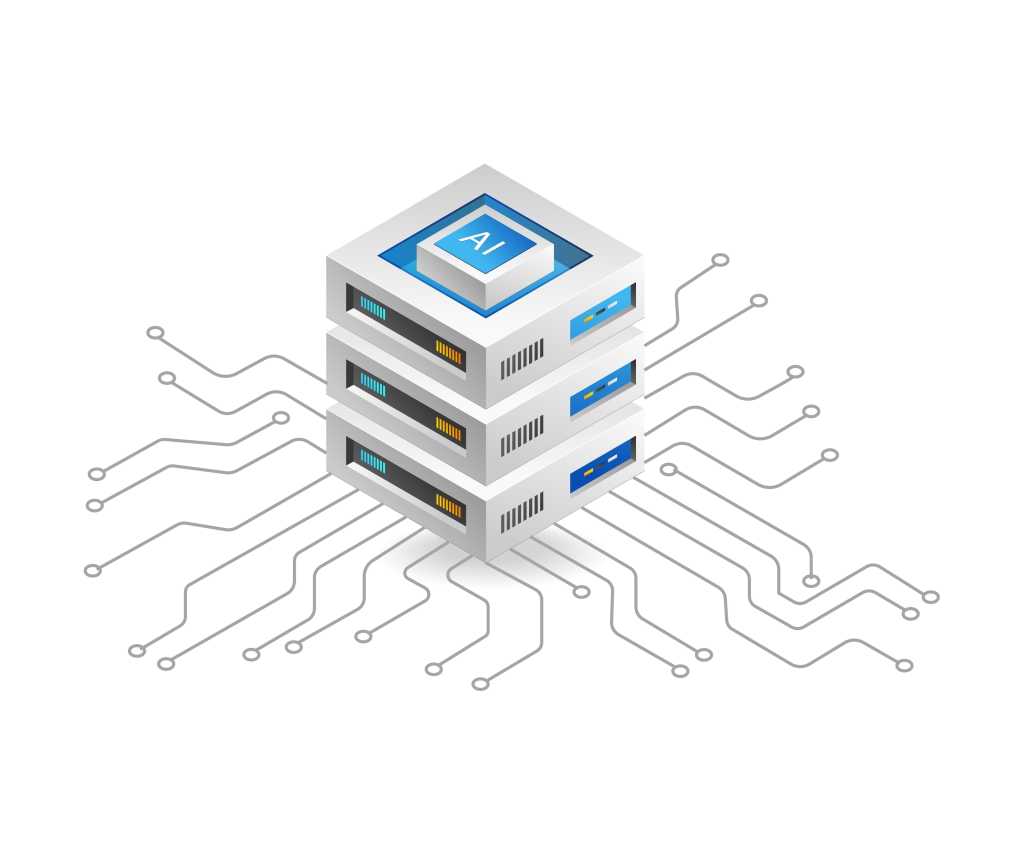

Always remember: Design AI infrastructure for scalability, so you can add more capability when you need it.

Comparison of different AI server models and configurations

All the major players (Nvidia, Supermicro, Google, Asus, Dell, Intel, HPE) as well as smaller entrants are offering purpose-built AI hardware. Here’s a look at the processors powering AI servers:

– Graphics processing units (GPUs): These specialized electronic circuits were initially designed to support real-time graphics for gaming. Their capabilities have translated well to AI, where their strengths include high processing power, scalability, security, quick execution and graphics rendering.

– Data processing units (DPUs): These systems on a chip (SoC) combine a CPU with a high-performance network interface and acceleration engines that can parse, process and transfer data at the speed of the rest of the network to improve AI performance.

– Application-specific integrated circuits (ASICs): These integrated circuits (ICs) are custom-designed for particular tasks. They are offered as gate arrays (semi-custom, to minimize upfront design work and cost) and as full-custom designs (for more flexibility and to handle larger workloads).

– Tensor processing units (TPUs): Designed by Google, these cloud-based ASICs are suitable for a broad range of AI workloads, from training to fine-tuning to inference.