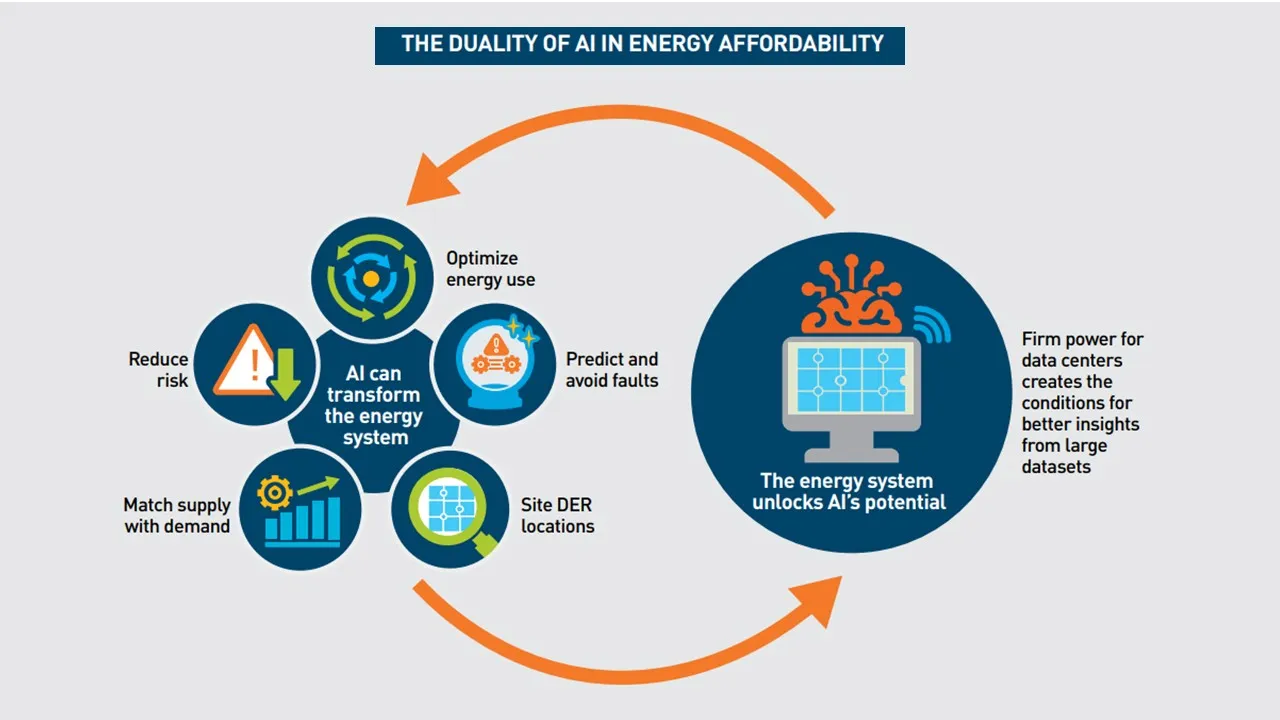

Utilities and system operators are discovering new ways for artificial intelligence and machine learning to help meet reliability threats in the face of growing loads, utilities and analysts say.

There has been an “explosion into public consciousness of generative AI models,” according to a 2024 Electric Power Research Institute, or EPRI, paper. The explosion has resulted in huge 2025 AI financial commitments like the $500 billion U.S. Stargate Project and the $206 billion European Union fund. And utilities are beginning to realize the new possibilities.

“Utility executives who were skeptical of AI even five years ago are now using cloud computing, drones, and AI in innovative projects,” said EPRI Executive Director, AI and Quantum, Jeremy Renshaw. “Utilities’ rapid adoption may make what is impossible today standard operating practice in a few years.”

Concerns remain that artificial intelligence and machine learning, or AI/ML, algorithms could bypass human decision-making and cause the reliability failures they are intended to avoid.

“But any company that has not taken its internal knowledge base into a generative AI model that can be queried as needed is not leveraging the data it has long paid to store,” said NVIDIA Senior Managing Director Marc Spieler. For now, humans will remain in the loop and AI/ML algorithms will allow better decision-making by making more, and more relevant, data available faster, he added.

In real-world demonstrations, utilities and software providers are using AI/ML algorithms to improve tasks as varied as nuclear power plant design and electric vehicle, or EV, charging. But utilities and regulators face a conundrum: they must make proprietary data more accessible so the new digital intelligence can increase reliability and reduce customer costs, while also protecting that data.

The old renewed

The power system has already put AI/ML algorithms to work in cybersecurity applications with cutting-edge learning capabilities to better recognize attackers.

Check Point Software, security provider to global AI chip maker NVIDIA, is working with standards certifier Underwriters Laboratories on new levels of security for consumer devices, said Peter Nicoletti, Check Point’s global chief information security officer. Smart devices “will be required to meet a security standard protecting against hackers during software updates,” he said.

Another proven power system application for advanced computing is market price forecasting based on weather, load and available generation.

Amperon has done weather, demand and market price forecasting with AI/ML algorithms since 2018, said Sean Kelly, its co-founder and CEO. But Amperon’s short-term modeling now “runs every hour and continuously retrains smarter and faster using less energy, combining the strengths from each iteration in a way that humans could never touch,” he added.

Hitachi Energy’s Nostradamus AI forecasting tool, with the newest AI/ML capabilities, “has improved price forecasting accuracy 20% over human market price forecasting” since November, said Jason Durst, Hitachi Energy general manager, asset and work management, enterprise software solutions.

AI/ML-assisted technology has also emerged “as a critical pillar of wildfire mitigation strategy,” said Rob Brook, senior vice president and managing director, Americas, for predictive software provider Neara. It helps utilities identify wildfire risks “across their networks by proactively assessing more variables than a human can assimilate,” he added.

AI/ML algorithms have, in the last year, accelerated the use of robotics for solar construction, said Deise Yumi Asami, developer of the Maximo robot for power provider AES. The six months once needed to retrain Maximo have been eliminated because its AI/ML algorithms autonomously learn the unique characteristics of each solar project before it begins work, she added.

The new and more autonomous AI/ML capabilities will offer “increased stability, predictability, and reliability at scale,” said Nate Melby, vice president and chief information officer of Midwestern generation and transmission cooperative Dairyland Power Cooperative. Management of system complexity “is where AI could shine,” he added.

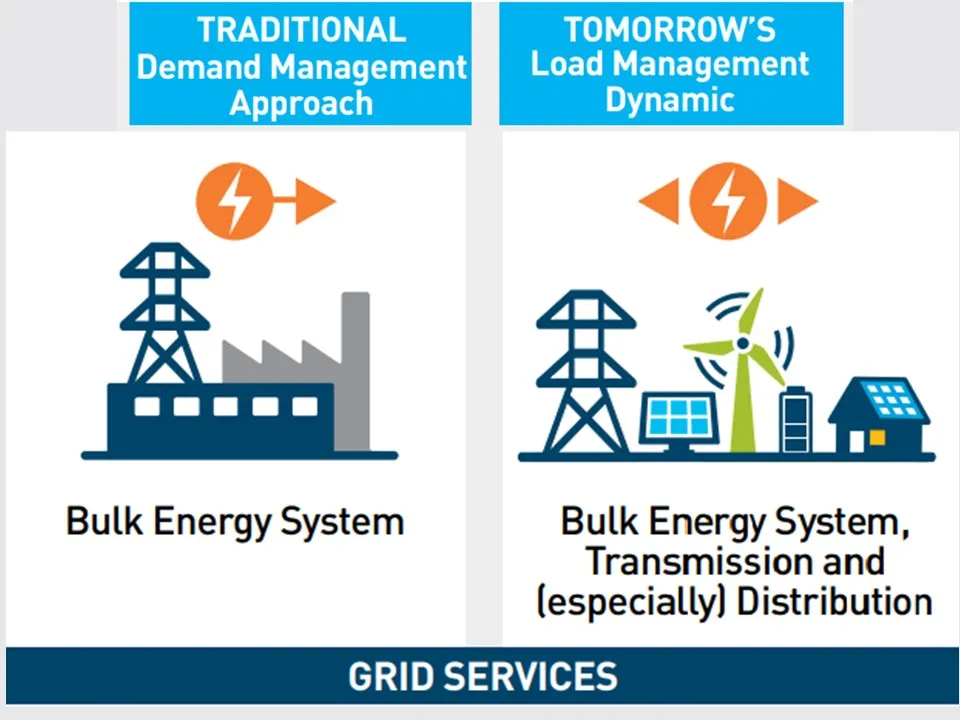

Utilities are increasingly using new AI/ML capabilities to meet the accelerating complexities of variable loads, proliferating distributed energy resources, or DER, and other power system challenges.

Permission granted by PG&E

New needs, new capabilities

A power system without adequate flexibility “can lead to decreased reliability and safety, increased operational costs, and capacity costs,” Pacific Gas and Electric, or PG&E, concluded in its 2024 R&D Strategy Report. “AI/ML and other novel technologies can not only bolster our immediate response capabilities but also inform long-term planning and policymaking,” it added.

PG&E’s total electricity consumption will double in the next five to 10 years, but it can limit peak load growth to 10% with AI/ML-based grid optimization of DER on the existing infrastructure, PG&E CEO Patti Poppe said at the utility’s November Innovation Summit.
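The arithmetic behind that claim can be sketched in terms of load factor, the ratio of average load to peak load. The snippet below is an illustration only, not PG&E's calculation: it assumes consumption and peak are compared over the same period, so average load scales with total consumption, and the function name is hypothetical.

```python
def load_factor_multiplier(energy_growth: float, peak_growth: float) -> float:
    """Return new load factor divided by old load factor.

    Load factor = average load / peak load. Over a fixed period, average
    load scales with total consumption, so the ratio of growth factors
    gives the change in load factor.
    """
    return energy_growth / peak_growth


# Figures from the article: consumption doubles (x2.0), peak grows 10% (x1.10).
multiplier = load_factor_multiplier(energy_growth=2.0, peak_growth=1.10)
print(f"{multiplier:.2f}")  # 1.82
```

Under those assumptions, the existing infrastructure would be used roughly 82% more intensively on average relative to its peak.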

Access to AI/ML algorithms is now commercially viable, and their capabilities can optimize multiple large scenarios in parallel to support decision-making for the power system’s millions of variables, NVIDIA’s Spieler said. The algorithms can also write software code to allow utilities to use “the petabytes of stored system data they have but have not used to optimize more operations,” he added.

Utilities can upload their internal knowledge bases of research papers, rate cases and analyses of wildfire and safety issues into a generative AI model and then query it, Spieler said. The query responses can explain system anomalies based on performance and maintenance histories or deliver needed data and precedents for writing general rate case and other regulatory proceeding filings, he added.

Utility demonstrations are verifying the new AI/ML capabilities.

From DER to nuclear plants

Several demonstrations have focused on how AI/ML algorithms can optimize distribution system resources.

Utilidata’s Karman software platform and an NVIDIA GPU-empowered chip are embedded in Aclara smart meters and will soon be in other distribution system hardware, said Utilidata VP, Product, Yingchen Zhang. Karman reads high-resolution raw distribution system data 32,000 times per second and identifies individual customers’ electricity usage in real time, he added.

A real-world demonstration, with Karman reading and reacting to granular real-time data, found utilities can quickly stabilize EV charging-induced voltage fluctuations, a University of Michigan-Utilidata study noted.

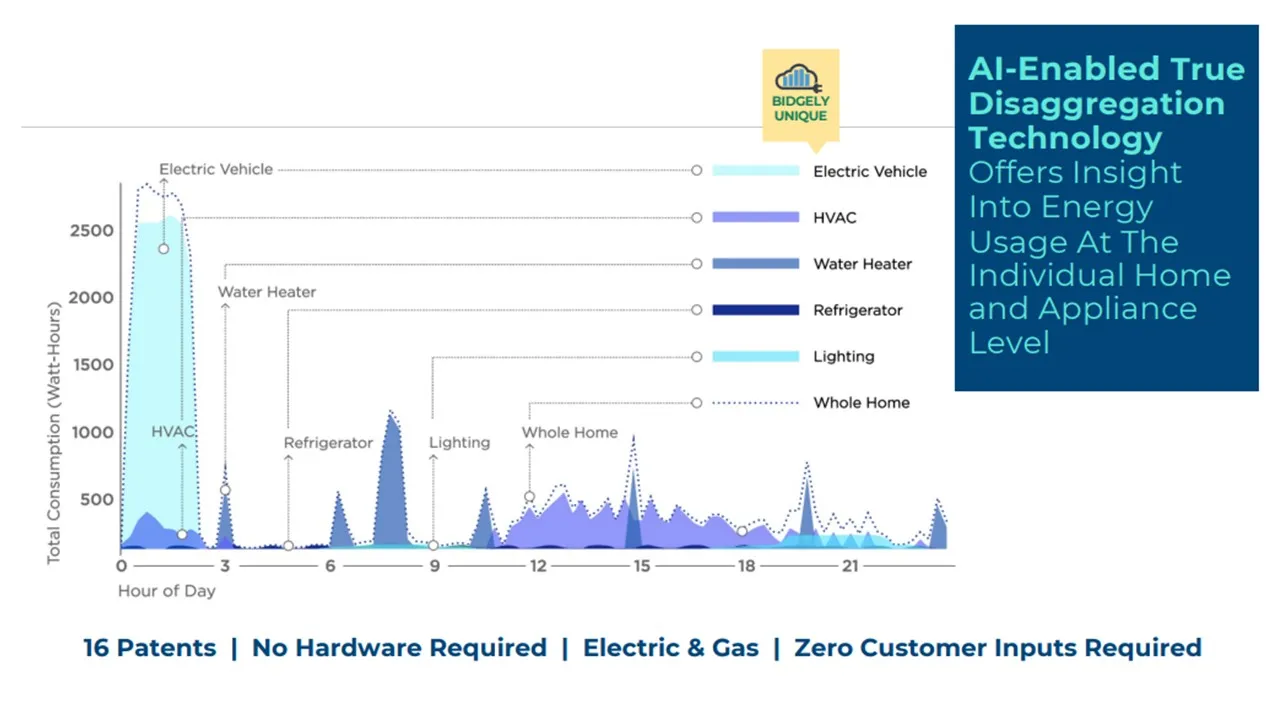

Within one year of implementing software from data disaggregation specialist Bidgely, Avista Utilities reduced service calls in response to high bill complaints by 27%, reported Avista Corp. Products and Services Manager Andrew Barrington. Instead of a service call to check the customer’s meter, Bidgely’s software analysis identified the customer usage causing the bill spike, he added.

A Bidgely disaggregation analysis evaluated EV charging for 10,000 Ameren Missouri customers, reported Caroline Cochran, its VP, Delivery, in a Stanford-EPRI conference presentation. The analysis identified the 73 customers that could utilize better management to avoid or defer costly infrastructure expenditures that otherwise would have been needed to manage EV charging loads, she added.

Bidgely’s similar 2023 disaggregation analysis of 100,000 NV Energy EV charger owners identified “hot spots where infrastructure investment will likely be needed first,” which limited larger distribution system capital investment, reported the Smart Electric Power Alliance’s January AI for Transportation Electrification Insight Brief.

AI/ML algorithms are also finding efficiencies that reduce nuclear power plant costs and safety challenges.

PG&E is using Atomic Canyon’s Generative AI software, trained to Nuclear Regulatory Commission standards, at its Diablo Canyon Nuclear Power Plant, said Nuclear Innovation Alliance Research Director Patrick White. And innovative AI/ML-based plant designs, operations and predictive preventive maintenance are limiting costs and increasing plant safety, he added.

Utilities and providers recognize, however, that utilities must do more to take full advantage of the accelerating AI/ML capabilities.

Permission granted by Bidgely

The work ahead for utilities

Effectively capturing the benefits of AI/ML algorithms begins with recognizing the potential and acquiring and using the right hardware and software, utilities and third parties say.

Avista’s successful adoption of third-party AI/ML “began with a mindset,” said Barrington. The key questions were “how to enhance customer engagement, how to integrate customer data with system operations, and how to enhance system visibility and enable proactive strategies,” he added.

AI/ML algorithms are now extracting real-time data and making actionable suggestions, Utilidata’s Zhang said. But “utilities cannot take advantage of the suggestions because they do not have the technology and communications ecosystems in place,” he added.

Utilities need communications technologies, advanced metering and edge computing infrastructure, and data processing and storage technologies, EPRI’s Renshaw said. And, at the distribution system level, utilities should also have software that can be securely updated for new technologies as customers adopt them, Utilidata’s Zhang added.

Balancing the protection of security and customer privacy with the need to provide data to train AI/ML algorithms continues to be a significant challenge.

Protecting utility data requires “strong cybersecurity practices,” said Dairyland Power’s Melby. But utilities need to access and manage data in a way “AI platforms can leverage,” he added.

Recently, “utilities have begun doing penetration testing to prove their data is as secure in our system as in theirs,” said Bidgely’s Cochran. They also “have developed AI committees to do extra thorough reviews of the users of their data,” she added.

“There is good reason for utilities to be conservative about data privacy, but AI/ML power system applications are not yet any threat,” Utilidata’s Zhang said. Federated learning or foundation models are ways to both protect privacy and provide data for algorithm training, he added.

Federated learning allows utilities to protect proprietary data by building synthetic models of their data about specific challenges that can be shared at a secure location for further training, Zhang said.
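For readers who want a concrete picture, here is a minimal sketch of federated averaging, one common form of federated learning. It may differ from the approach Zhang describes. In this version, each utility trains a small model on its own private data and shares only the resulting model parameters, which a coordinator averages. All names and data points below are hypothetical and synthetic.

```python
from typing import List, Tuple

Weights = Tuple[float, float]      # (slope, intercept) of y = slope * x + intercept
Dataset = List[Tuple[float, float]]  # (x, y) pairs held privately by one utility


def local_update(weights: Weights, data: Dataset,
                 lr: float = 0.05, epochs: int = 20) -> Weights:
    """Run gradient descent on one utility's data; only weights leave the site."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def federated_average(updates: List[Weights], sizes: List[int]) -> Weights:
    """Combine local models, weighting each by the size of its dataset."""
    total = sum(sizes)
    w = sum(u[0] * s for u, s in zip(updates, sizes)) / total
    b = sum(u[1] * s for u, s in zip(updates, sizes)) / total
    return (w, b)


def run_federated(datasets: List[Dataset], rounds: int = 50) -> Weights:
    """Alternate local training and central averaging for a fixed number of rounds."""
    global_weights: Weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_update(global_weights, d) for d in datasets]
        global_weights = federated_average(updates, [len(d) for d in datasets])
    return global_weights


# Synthetic illustration: two "utilities" each hold points on y = 2x + 1.
utility_a: Dataset = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]
utility_b: Dataset = [(0.5, 2.0), (1.5, 4.0)]
slope, intercept = run_federated([utility_a, utility_b])
print(round(slope, 3), round(intercept, 3))
```

The raw `(x, y)` records never leave `local_update`; the coordinator sees only the two numbers each site returns. Real deployments typically add protections such as secure aggregation, which this sketch omits.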

But some think federated learning may be too limited for power system complexities. Foundation models would instead be pre-trained on orders of magnitude more data, anonymized and covering as much power system information as possible, EPRI’s Renshaw and others said.

Utilities may be able to create a foundation model to enable shared learning and protect their data, said PG&E Senior Director of Grid Research, Innovation and Development Quinn Nakayama.

“The bottom line is — gather more high-quality data, use, store and protect it properly, and feed it into models that are trained and updated for the right tasks,” Renshaw concluded.