In game theory, players typically have to make assumptions about the other players’ actions. What will the other player do? Will they play rock, paper or scissors? You never know, but in some cases you might have an idea that some actions are more likely than others. Adding such a notion of probability or randomness opens up a new chapter in game theory that lets us analyse more complicated scenarios.

This article is the third in a four-chapter series on the fundamentals of game theory. If you haven’t checked out the first two chapters yet, I’d encourage you to do that to become familiar with the basic terms and concepts used in the following. If you feel ready, let’s go ahead!

Mixed Strategies

So far we have always considered games where each player chooses exactly one action. Now we will extend our games by allowing each player to select different actions with given probabilities, which we call a mixed strategy. If you play rock-paper-scissors, you do not know which action your opponent takes, but you might guess that they select each action with a probability of one third (roughly 33%), and if you play 99 games of rock-paper-scissors, you might indeed find that your opponent chose each action roughly 33 times. With this example, you directly see the main reasons why we want to introduce probability. First, it allows us to describe games that are played multiple times, and second, it enables us to consider a notion of the (assumed) likelihood of a player’s actions.
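To make the idea of a mixed strategy a bit more tangible, here is a minimal Python sketch that draws 99 rounds from a uniform strategy over rock, paper and scissors. The function name `sample_mixed_strategy` and the fixed seed are illustrative assumptions and only serve to keep the example reproducible.

```python
import random
from collections import Counter


def sample_mixed_strategy(strategy, rounds, seed=0):
    """Draw `rounds` actions from a mixed strategy given as {action: probability}."""
    rng = random.Random(seed)  # fixed seed (an assumption) for reproducibility
    actions = list(strategy)
    weights = [strategy[action] for action in actions]
    return Counter(rng.choices(actions, weights=weights, k=rounds))


# A mixed strategy is just a probability distribution over the actions.
uniform = {"rock": 1 / 3, "paper": 1 / 3, "scissors": 1 / 3}
counts = sample_mixed_strategy(uniform, rounds=99)
print(counts)
```

The counts will usually be close to, but rarely exactly, 33 each, which is what "roughly 33 times" means in practice.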

Let me demonstrate the latter point in more detail. We come back to the soccer game we saw in chapter 2, where the keeper decides on a corner to jump into and the other player decides on a corner to aim for.

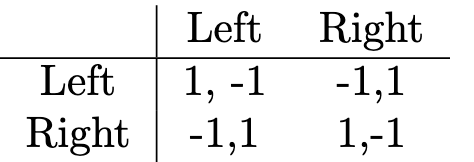

If you are the keeper, you win (reward of 1) if you choose the same corner as the opponent and you lose (reward of -1) if you choose the other one. For your opponent, it is the other way round: They win if you select different corners. This game only makes sense if both the keeper and the opponent select a corner randomly. To be precise, if one player knows that the other always selects the same corner, they know exactly what to do to win. So, the key to success in this game is to choose the corner by some random mechanism. The main question now is, what probability should the keeper and the opponent assign to each corner? Would it be a good strategy to choose the right corner with a probability of 80%? Probably not.

To find the best strategy, we need to find the Nash equilibrium, because that is the state where no player can do any better by changing their behaviour. In the case of mixed strategies, such a Nash equilibrium is described by a probability distribution over the actions, where no player wants to increase or decrease any probability anymore. In other words, it is optimal (because if it were not optimal, one player would like to change).

We can find this optimal probability distribution if we consider the expected reward. As you might guess, the expected reward is composed of the rewards (also called utilities) the players get, which are given in the matrix above, each weighted by the likelihood of that reward. Let’s say the shooter chooses the left corner with probability p and the right corner with probability 1-p. What reward can the keeper expect? Well, if they choose the left corner, they can expect a reward of p*1 + (1-p)*(-1). Do you see how this is derived from the game matrix? If the keeper chooses the left corner, there is a probability of p that the shooter chooses the same corner, which is good for the keeper (reward of 1). But with a chance of 1-p, the shooter chooses the other corner and the keeper loses (reward of -1). Likewise, if the keeper chooses the right corner, they can expect a reward of (1-p)*1 + p*(-1).

Consequently, if the keeper chooses the left corner with probability q and the right corner with probability 1-q, the overall expected reward for the keeper is q times the expected reward for the left corner plus (1-q) times the expected reward for the right corner.
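The expected rewards described above translate almost literally into code. The following is a small sketch, with function names chosen here purely for illustration, where `p` is the probability that the shooter aims left and `q` the probability that the keeper jumps left.

```python
def keeper_reward_left(p):
    """Expected keeper reward for jumping left: +1 on a match, -1 otherwise."""
    return p * 1 + (1 - p) * (-1)


def keeper_reward_right(p):
    """Expected keeper reward for jumping right."""
    return (1 - p) * 1 + p * (-1)


def keeper_expected_reward(p, q):
    """Overall expected keeper reward when jumping left with probability q."""
    return q * keeper_reward_left(p) + (1 - q) * keeper_reward_right(p)


# Both players mix evenly: the keeper wins and loses equally often.
print(keeper_expected_reward(p=0.5, q=0.5))
```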

Now let’s take the perspective of the shooter. They want the keeper to be indifferent between the corners. In other words, they want the keeper to see no advantage in either corner, so that the keeper chooses randomly. Mathematically that means that the keeper’s expected rewards for both corners should be equal, i.e.

$$
p \cdot 1 + (1-p) \cdot (-1) = (1-p) \cdot 1 + p \cdot (-1)
$$

which can be solved to p=0.5. So the optimal strategy for the shooter to keep the keeper indifferent is to choose the left corner with a probability of p=0.5 and hence choose the right corner with an equal probability of 1-p=0.5.

But now imagine a shooter who is well known for their tendency to choose the right corner. You might not expect a 50/50 probability for each corner, but you assume they will choose the right corner with a probability of 70% (that is, p=0.3 for the left corner). If the keeper stays with their 50/50 split for choosing a corner, their expected reward is 0.5 times the expected reward for the left corner plus 0.5 times the expected reward for the right corner:

$$
0.5 \cdot \bigl(0.3 \cdot 1 + 0.7 \cdot (-1)\bigr) + 0.5 \cdot \bigl(0.7 \cdot 1 + 0.3 \cdot (-1)\bigr) = 0.5 \cdot (-0.4) + 0.5 \cdot 0.4 = 0
$$

That does not sound too bad, but there is a better option still. If the keeper always chooses the right corner (i.e., q=0, since q is the probability of choosing the left corner), they get an expected reward of 0.4, which is better than 0. In this case, there is a clear best answer for the keeper, which is to favour the corner the shooter prefers. That, however, would lower the shooter’s reward. If the keeper always chooses the right corner, the shooter would get a reward of -1 with a probability of 70% (because the shooter themself chooses the right corner with a probability of 70%) and a reward of 1 in the remaining 30% of cases, which yields an expected reward of 0.7*(-1) + 0.3*1 = -0.4. That is worse than the reward of 0 they got when they chose 50/50. Do you remember that a Nash equilibrium is a state where no player has any reason to change their action unless another player does? This scenario is not a Nash equilibrium, because the shooter has an incentive to change their strategy even if the keeper does not change theirs: moving back to a 50/50 split already raises the shooter’s expected reward from -0.4 to 0 (and aiming left more often would do even better against a keeper who always jumps right). The 50/50 split on both sides, however, is a Nash equilibrium, because in that scenario neither the shooter nor the keeper gains anything from changing their probability of choosing one corner or the other.
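The numbers in this example can be checked with a few lines of Python. This is only a sketch: the function name is made up for illustration, and the shooter’s reward is taken to be the negative of the keeper’s, as the win/lose rewards of 1 and -1 described above imply.

```python
def keeper_expected_reward(p_left, q_left):
    """Keeper's expected reward; p_left / q_left are the probabilities that
    the shooter aims left and that the keeper jumps left."""
    reward_left = p_left * 1 + (1 - p_left) * (-1)
    reward_right = (1 - p_left) * 1 + p_left * (-1)
    return q_left * reward_left + (1 - q_left) * reward_right


p_left = 0.3  # the shooter aims right with a probability of 70%

# Compare the keeper's 50/50 split with always jumping right (q_left = 0).
for q_left in (0.5, 0.0):
    keeper = keeper_expected_reward(p_left, q_left)
    shooter = -keeper  # zero-sum: what the keeper wins, the shooter loses
    print(f"q_left={q_left:.1f}: keeper {keeper:.2f}, shooter {shooter:.2f}")

# Against a 50/50 shooter, every keeper strategy yields the same reward,
# so the keeper has nothing to gain from deviating.
rewards_vs_even = [keeper_expected_reward(0.5, q / 10) for q in range(11)]
print(max(rewards_vs_even) - min(rewards_vs_even))
```

The last line prints the spread of the keeper’s rewards against an evenly mixing shooter. A spread of zero is exactly the indifference that characterises the equilibrium.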

Fighting birds

From the previous example we saw that a player’s assumptions about the other player’s actions influence the first player’s action selection as well. If a player wants to behave rationally (and this is what we always expect in game theory), they would choose actions such that they maximize their expected reward given the other players’ mixed strategies. In the soccer scenario the conclusion is quite simple: jump more often into a corner if you assume that the opponent will choose that corner more often. So let us continue with a more complicated example that takes us outside into nature.

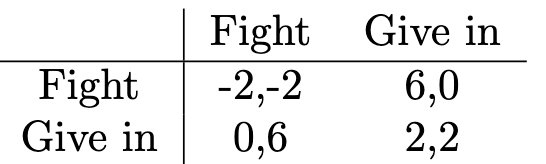

As we walk across the forest, we notice some interesting behaviour in wild animals. Say two birds come to a place where there is something to eat. If you were a bird, what would you do? Would you share the food with the other bird, which means less food for both of you? Or would you fight? If you threaten your opponent, they might give in and you have all the food for yourself. But if the other bird is as aggressive as you, you end up in a real fight and you hurt each other. Then again you might have preferred to give in in the first place and just leave without a fight. As you see, the outcome of your action depends on the other bird. Preparing to fight can be very rewarding if the opponent gives in, but very costly if the other bird is willing to fight as well. In matrix notation, this game looks like this:

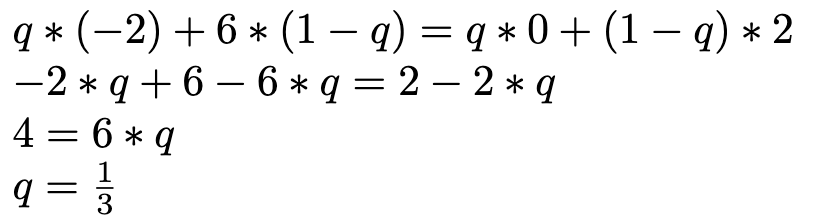

The question is, what would be the rational behaviour for a given distribution of birds who fight or give in? If you are in a very dangerous environment, where most birds are known to be aggressive fighters, you might prefer giving in to not get hurt. But if you assume that most other birds are cowards, you might see a potential benefit in preparing for a fight to scare the others away. By calculating the expected reward, we can figure out the exact proportions of birds fighting and birds giving in that form an equilibrium. Say the probability to fight is denoted p for bird 1 and q for bird 2, then the probability for giving in is 1-p for bird 1 and 1-q for bird 2. In a Nash equilibrium, no player wants to change their strategy unless another player does. Formally that means that, for each bird, both options need to yield the same expected reward. So bird 2’s probability q of fighting must be such that bird 1 expects the same reward from fighting as from giving in. Setting these two expected rewards, taken from the matrix above, equal gives an equation we can solve for q.

For bird 2 it would be optimal to fight with a probability of 1/3 and give in with a probability of 2/3, and the same holds for bird 1 because of the symmetry of the game. In a big population of birds, that would mean that a third of the birds are fighters who usually seek the fight, while the other two-thirds prefer giving in. As this is an equilibrium, these ratios will stay stable over time. If it were to happen that more birds became cowards who always give in, fighting would become more rewarding, as the chance of winning would increase. Then, however, more birds would choose to fight and the number of cowardly birds would decrease until the stable equilibrium is reached again.
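The indifference condition can be solved mechanically for any symmetric two-action game. The sketch below does this with exact fractions. Please note that the payoff numbers are hypothetical: the actual values are only given in the matrix image, so the numbers here were merely chosen such that they reproduce the fighting probability of 1/3 mentioned above. The function name is likewise an illustrative assumption.

```python
from fractions import Fraction


def indifference_probability(u_ff, u_fg, u_gf, u_gg):
    """Probability q with which the opponent must fight so that a bird is
    indifferent between fighting and giving in.

    u_xy is the bird's own reward when it plays x and the opponent plays y
    (f = fight, g = give in). Solves
        q*u_ff + (1-q)*u_fg == q*u_gf + (1-q)*u_gg.
    """
    denominator = Fraction(u_ff - u_fg - u_gf + u_gg)
    if denominator == 0:
        raise ValueError("no unique mixing probability for these payoffs")
    return Fraction(u_gg - u_fg) / denominator


# HYPOTHETICAL payoffs (not taken from the article's matrix):
# mutual fight -2, fight vs. give in 2, give in vs. fight 0, both give in 1.
q = indifference_probability(-2, 2, 0, 1)
print(q)  # 1/3

# Sanity check: both options now yield the same expected reward.
reward_fight = q * (-2) + (1 - q) * 2
reward_give_in = q * 0 + (1 - q) * 1
print(reward_fight == reward_give_in)  # True
```

With other payoff values the resulting probability changes, and if it falls outside the range from 0 to 1, the game has no mixed equilibrium of this kind.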

Report a crime

Now that we have understood that we can find Nash equilibria by comparing the expected rewards for the different options, we will apply this approach to a more sophisticated example to show how powerful game-theoretic analyses can be for realistic, complex scenarios.

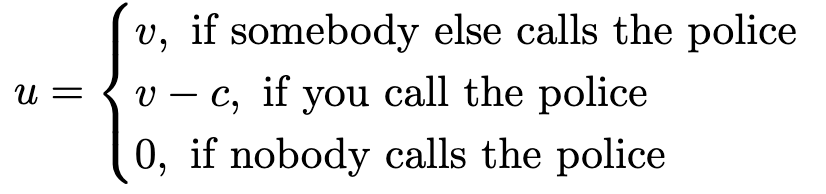

Say a crime happened in the middle of the city centre and there are multiple witnesses to it. The question is, who calls the police now? As there are many people around, everybody might expect others to call the police and hence refrain from doing it themselves. We can model this scenario as a game again. Let’s say we have n players and everybody has two options, namely calling the police or not calling. And what is the reward? For the reward, we distinguish three cases. If nobody calls the police, the reward is zero, because then the crime is not reported. If you call the police, you have some costs (e.g. the time you have to spend to wait and tell the police what happened), but the crime is reported, which helps keep your city safe. If somebody else reports the crime, the city would still be kept safe, but you didn’t have the costs of calling the police yourself. Formally, we can write this down as follows:

$$
\text{reward} = \begin{cases}
v & \text{if somebody else calls the police} \\
v - c & \text{if you call the police yourself} \\
0 & \text{if nobody calls the police}
\end{cases}
$$

v is the reward of keeping the city safe, which you get either if somebody else calls the police (first row) or if you call the police yourself (second row). In the second case, however, your reward is diminished a little by the costs c you have to bear. We assume that c is smaller than v, which means that the costs of calling the police never exceed the reward you get from keeping your city safe. In the last case, where nobody calls the police, your reward is zero.

This game looks a little different from the previous ones we had, mainly because we didn’t display it as a matrix. In fact, it is more complicated. We didn’t specify the exact number of players (we just called it n), and we also didn’t specify the rewards explicitly but just introduced some values v and c. However, this helps us model a quite complicated real situation as a game and will allow us to answer more interesting questions: First, what happens if more people witness the crime? Will it become more likely that somebody will report the crime? Second, how do the costs c influence the likelihood of the crime being reported? We can answer these questions with the game-theoretic concepts we have learned already.

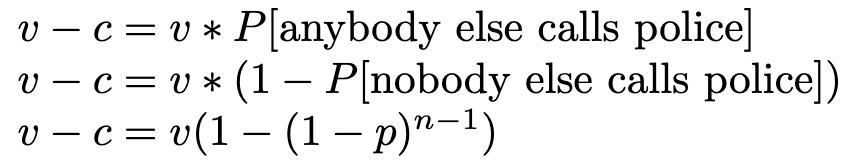

As in the previous examples, we will use the Nash equilibrium’s property that in an optimal state, nobody should want to change their action. That means that, for every individual, calling the police should be as good as not calling, which leads us to the following formula:

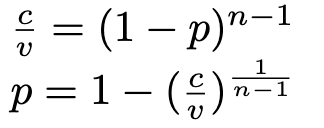

On the left, you have the reward if you call the police yourself (v-c). This should be as good as a reward of v times the likelihood that anybody else calls the police. Now, the probability of anybody else calling the police is the same as 1 minus the probability that nobody else calls the police. If we denote the probability that an individual calls the police with p, the probability that a single individual does not call the police is 1-p. Consequently, the probability that two individuals don’t call the police is the product of the single probabilities, (1-p)*(1-p). For n-1 individuals (all individuals except you), this gives us the term 1-p to the power of n-1 in the last row. Dividing by v and rearranging gives (1-p)^(n-1) = c/v, and solving for p we finally arrive at:

$$
p = 1 - \left(\frac{c}{v}\right)^{\frac{1}{n-1}}
$$

This gives you the probability of a single individual calling the police. What happens if there are more witnesses to the crime? If n gets larger, the exponent becomes smaller (1/(n-1) goes towards 0), which finally leads to:

$$
\lim_{n \to \infty} p = 1 - \left(\frac{c}{v}\right)^{0} = 1 - 1 = 0
$$

Given that x to the power of 0 is 1 for any positive x, p becomes zero. In other words, the more witnesses are around (higher n), the less likely it becomes that you call the police, and for an infinite number of other witnesses, the probability drops to zero. This sounds reasonable. The more other people are around, the more likely you are to expect that somebody else will call the police and the smaller you see your own responsibility. Naturally, all other individuals will have the same chain of thought. But that also sounds a little tragic, doesn’t it? Does this mean that nobody will call the police if there are many witnesses?
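To get a feeling for how quickly the individual probability declines, we can evaluate it for a few group sizes. The sketch below assumes the closed form p = 1 - (c/v)^(1/(n-1)), which follows from solving the indifference condition v - c = v*(1 - (1-p)^(n-1)) for p. The function name and the example values c=1 and v=4 are assumptions made for illustration only.

```python
def call_probability(n, c, v):
    """Equilibrium probability that a single witness calls the police.

    Requires at least two witnesses and 0 < c < v, as assumed in the text.
    """
    if n < 2:
        raise ValueError("the formula needs at least two witnesses")
    if not 0 < c < v:
        raise ValueError("the costs c must be positive and smaller than v")
    return 1 - (c / v) ** (1 / (n - 1))


# Illustrative values (assumptions): costs c = 1, reward v = 4.
for n in (2, 3, 10, 100, 1000):
    print(n, round(call_probability(n, c=1, v=4), 4))
```

For these values, the probability starts at 0.75 for two witnesses and shrinks towards zero as n grows.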

Well, not necessarily. We just saw that the probability of a single person calling the police declines with higher n, but there are still more people around. Maybe the sheer number of people around counteracts this diminishing probability. A hundred people with a small probability of calling the police each might still be worth more than a few people with moderate individual probabilities. Let us now take a look at the probability that anybody calls the police.

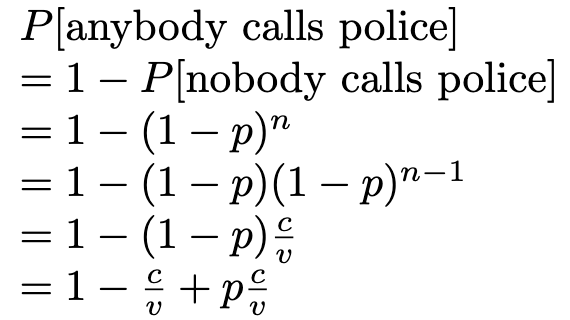

The probability that anybody calls the police is equal to 1 minus the probability that nobody calls the police. Like in the example before, the probability of nobody calling the police is described by 1-p to the power of n. We then use an equation we derived previously (see formulas above) to replace (1-p)^(n-1) with c/v.

When we look at the last line of our calculations, what happens for big n now? We already know that p drops to zero, leaving us with a probability of 1-c/v. This is the likelihood that anybody will call the police if there are many people around (note that this is different from the probability that a single individual calls the police). We see that this likelihood heavily depends on the ratio of c and v. The smaller c, the more likely it is that anybody calls the police. If c is (close to) zero, it is almost certain that the police will be called, but if c is almost as big as v (that is, the costs of calling the police eat up the reward of reporting the crime), it becomes unlikely that anybody calls the police. This gives us a lever to influence the probability of reporting crimes. Calling the police and reporting a crime should be as effortless and low-threshold as possible.
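The same kind of numerical check works for the probability that anybody calls the police. The following sketch assumes the individual probability p = 1 - (c/v)^(1/(n-1)) from the indifference condition and computes 1 - (1-p)^n. Function names and the example values c=1 and v=4 are, again, only illustrative assumptions.

```python
def call_probability(n, c, v):
    """Equilibrium probability that a single witness calls (needs n >= 2, 0 < c < v)."""
    if n < 2 or not 0 < c < v:
        raise ValueError("need n >= 2 and 0 < c < v")
    return 1 - (c / v) ** (1 / (n - 1))


def anyone_calls_probability(n, c, v):
    """Probability that at least one of the n witnesses calls the police."""
    p = call_probability(n, c, v)
    return 1 - (1 - p) ** n


c, v = 1, 4  # illustrative values (assumptions)
for n in (2, 3, 10, 100, 1000):
    print(n, round(anyone_calls_probability(n, c, v), 4))

print("limit for many witnesses:", 1 - c / v)
```

In this model the values approach 1 - c/v from above, so a larger crowd does not compensate for the declining individual probability. Lowering c, on the other hand, raises the limit directly.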

Summary

In this chapter on our journey through the realms of game theory, we have introduced so-called mixed strategies, which allowed us to describe games by the probabilities with which different actions are taken. We can summarize our key findings as follows:

- A mixed strategy is described by a probability distribution over the different actions.

- In a mixed-strategy Nash equilibrium, all actions a player chooses with positive probability must yield the same expected reward.

- In mixed strategies, a Nash equilibrium means that no player wants to change the probabilities of their actions.

- We can find out the probabilities of different actions in a Nash equilibrium by setting the expected rewards of two (or more) options equal.

- Game-theoretic concepts allow us to analyze scenarios with an arbitrarily large number of players. Such analyses can also tell us how the exact shaping of the reward can influence the probabilities in a Nash equilibrium. This can be used to inspire decisions in the real world, as we saw in the crime reporting example.

We are almost through with our series on the fundamentals of game theory. In the next and final chapter, we will introduce the idea of taking turns in games. Stay tuned!

References

The topics introduced here are typically covered in standard textbooks on game theory. I mainly used this one, which is written in German though:

- Bartholomae, F., & Wiens, M. (2016). Spieltheorie. Ein anwendungsorientiertes Lehrbuch. Wiesbaden: Springer Fachmedien Wiesbaden.

An English-language alternative could be this one:

- Espinola-Arredondo, A., & Muñoz-Garcia, F. (2023). Game Theory: An Introduction with Step-by-step Examples. Springer Nature.

Game theory is a rather young field of research, with the first main textbook being this one:

- Von Neumann, J., & Morgenstern, O. (1944). Theory of games and economic behavior.
