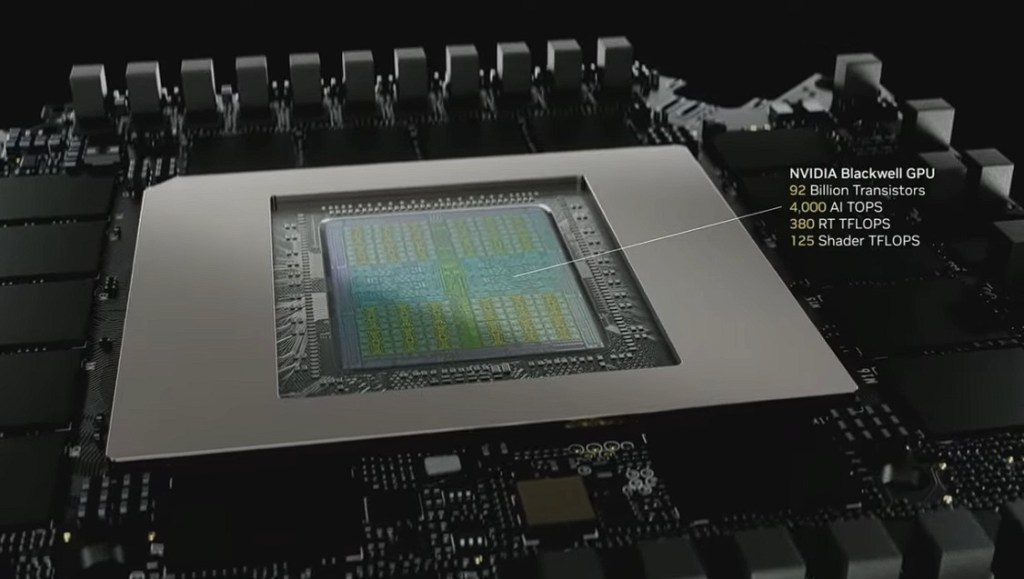

Nvidia today announced the Nvidia RTX PRO Blackwell series, a new generation of workstation and server graphics processing units.

Nvidia said the GPUs redefine workflows for AI, technical, creative, engineering and design professionals with accelerated computing, AI inference, ray tracing and neural rendering technologies. The company unveiled the news during CEO Jensen Huang's keynote at GTC 2025.

For work ranging from agentic AI, simulation, extended reality, 3D design and complex visual effects to developing the physical AI that powers autonomous robots, vehicles and smart spaces, the RTX PRO Blackwell series gives professionals across industries compute power, memory capacity and data throughput wherever they work: at the desktop, on the go with mobile workstations or through data center GPUs.

The new lineup includes:

- Data center GPU: Nvidia RTX PRO 6000 Blackwell Server Edition

- Desktop GPUs: Nvidia RTX PRO 6000 Blackwell Workstation Edition, Nvidia RTX PRO 6000 Blackwell Max-Q Workstation Edition, Nvidia RTX PRO 5000 Blackwell, Nvidia RTX PRO 4500 Blackwell and Nvidia RTX PRO 4000 Blackwell

- Laptop GPUs: Nvidia RTX PRO 5000 Blackwell, Nvidia RTX PRO 4000 Blackwell, Nvidia RTX PRO 3000 Blackwell, Nvidia RTX PRO 2000 Blackwell, Nvidia RTX PRO 1000 Blackwell and Nvidia RTX PRO 500 Blackwell

“Software developers, data scientists, artists, designers and engineers need powerful AI and graphics performance to push the boundaries of visual computing and simulation, helping tackle incredible industry challenges,” said Bob Pette, vice president of enterprise platforms at Nvidia, in a statement. “Bringing Nvidia Blackwell to workstations and servers will take productivity, performance and speed to new heights, accelerating AI inference serving, data science, visualization and content creation.”

Nvidia Blackwell technology comes to workstations and data centers

RTX Pro Blackwell GPUs unlock the potential of generative, agentic and physical AI by delivering exceptional performance, efficiency and scale.

Nvidia RTX Pro Blackwell GPUs feature:

- Nvidia Streaming Multiprocessor: Offers up to 1.5 times faster throughput and new neural shaders that integrate AI inside of programmable shaders to drive the next decade of AI-augmented graphics innovations.

- Fourth-Generation RT Cores: Deliver up to two times the performance of the previous generation to create photoreal, physically accurate scenes and complex 3D designs with optimizations for Nvidia RTX Mega Geometry.

- Fifth-Generation Tensor Cores: Deliver up to 4,000 trillion AI operations per second (TOPS) and add support for FP4 precision and Nvidia DLSS 4 Multi Frame Generation, enabling a new era of AI-powered graphics and the ability to run and prototype larger AI models faster.

- Larger, Faster GDDR7 Memory: Boosts bandwidth and capacity — up to 96GB for workstations and servers and up to 24GB on laptops. This enables applications to run faster and work with larger, more complex datasets for everything from tackling massive 3D and AI projects to exploring large-scale virtual reality environments.

- Ninth-Generation Nvidia NVENC: Accelerates video encoding speed and improves quality for professional video applications with added support for 4:2:2 encoding.

- Sixth-Generation Nvidia NVDEC: Provides up to double the H.264 decoding throughput and offers support for 4:2:2 H.264 and HEVC decode. Professionals can benefit from high-quality video playback, accelerate video data ingestion and use advanced AI-powered video editing features.

- Fifth-Generation PCIe: Support for fifth-generation PCI Express doubles the bandwidth of the previous generation, improving data transfer speeds from CPU memory and unlocking faster performance for data-intensive tasks.

- DisplayPort 2.1: Drives high-resolution displays at up to 4K at 480Hz and 8K at 165Hz. Increased bandwidth enables seamless multi-monitor setups, while high dynamic range and higher color depth support deliver more precise color accuracy for tasks like video editing, 3D design and live broadcasting.

- Multi-Instance GPU (MIG): The RTX PRO 6000 data center and desktop GPUs and 5000 series desktop GPUs feature MIG technology, enabling secure partitioning of a single GPU into up to four instances (6000 series) or two instances (5000 series).

Nvidia said MIG's fault isolation is designed to prevent workload interference, enabling secure, efficient resource allocation across diverse workloads while maximizing performance and flexibility.
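The PCIe claim in the feature list can be checked with a little arithmetic. The Python sketch below is a back-of-the-envelope estimate, not a measured figure: it assumes an x16 link (the article does not state lane counts) and counts only the 128b/130b line-coding overhead used by PCIe 4.0 and 5.0, ignoring packet and protocol overhead that lowers real-world throughput. The function name `link_bandwidth_gb_s` is hypothetical.

```python
# Back-of-the-envelope PCIe link bandwidth, per direction.
# Assumptions (not stated in the article): an x16 link, and only the
# 128b/130b line-coding overhead is counted. Packet/protocol overhead
# (TLP headers, flow control) is ignored, so real throughput is lower.

# Raw signaling rate per lane in gigatransfers per second
# (one bit per transfer for PCIe 4.0 and 5.0).
TRANSFER_RATE_GT_S = {
    "PCIe 4.0": 16.0,
    "PCIe 5.0": 32.0,
}

ENCODING_EFFICIENCY = 128 / 130  # 128b/130b encoding, used since PCIe 3.0


def link_bandwidth_gb_s(gen: str, lanes: int = 16) -> float:
    """Approximate usable bandwidth in GB/s per direction (hypothetical helper)."""
    gigabits_per_s = TRANSFER_RATE_GT_S[gen] * lanes * ENCODING_EFFICIENCY
    return gigabits_per_s / 8  # convert bits to bytes


if __name__ == "__main__":
    for gen in TRANSFER_RATE_GT_S:
        print(f"{gen} x16: {link_bandwidth_gb_s(gen):.1f} GB/s per direction")
    ratio = link_bandwidth_gb_s("PCIe 5.0") / link_bandwidth_gb_s("PCIe 4.0")
    print(f"Gen 5 / Gen 4 ratio: {ratio:.1f}x")
```

Running it prints about 31.5 GB/s per direction for PCIe 4.0 x16 and 63.0 GB/s for PCIe 5.0 x16, a 2.0x ratio, which lines up with the "double the bandwidth" figure Nvidia cites.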

The new laptop GPUs also support the latest Nvidia Blackwell Max-Q technologies, which intelligently and continually optimize laptop performance and power efficiency with AI.

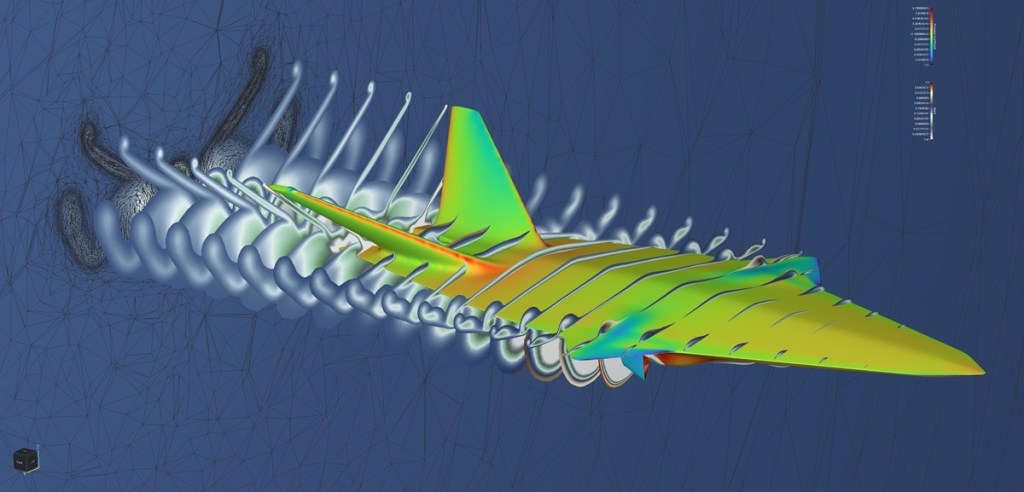

With neural rendering and AI-augmented tools, Nvidia RTX PRO Blackwell GPUs enable the creation of stunning visuals, digital twins of real-world environments and immersive experiences with unprecedented speed and efficiency. The GPUs are built to elevate 3D computer-aided design and building information model workflows, offering designers and engineers exceptional performance for complex modeling, rendering and visualization.

Designed for enterprise data center deployments, the RTX PRO 6000 Blackwell Server Edition features a passively cooled thermal design and can be configured with up to eight GPUs per server. For workloads that require the compute density and scale that data centers offer, the RTX PRO 6000 Blackwell Server Edition delivers powerful performance for next-generation AI, scientific and visual computing applications across industries such as healthcare, manufacturing, retail and media and entertainment.

In addition, this powerful data center GPU can be combined with Nvidia vGPU software to power AI workloads across virtualized environments and deliver high-performance virtual workstation instances to remote users. Nvidia vGPU support for the Nvidia RTX PRO 6000 Blackwell Server Edition GPU is expected in the latter half of this year.

“Foster + Partners has tested the Nvidia RTX PRO 6000 Blackwell Max-Q Workstation Edition GPU on Cyclops, our GPU-based ray-tracing product,” said Martha Tsigkari, head of applied research and development and senior partner at Foster + Partners, in a statement. “The new Nvidia Blackwell GPU has managed to outperform everything we have tested before. For example, when using it with Cyclops, it has performed at 5x the speed of Nvidia RTX A6000 GPUs. Rendering speeds also increased five times, allowing tools like Cyclops to provide feedback on how well our design solutions perform in real time as we design them and resulting in intuitive yet informed decision-making from early conceptual stages.”

“Nvidia RTX PRO 6000 Blackwell Workstation Edition GPUs enable incredibly sharp and photorealistic graphics,” said Jeff Hammoud, chief design officer at Rivian. “In conjunction with a Varjo XR4 headset and Autodesk VRED, the system delivered the level of crispness necessary for immersive automotive design reviews. With Nvidia Blackwell support for PCIe Gen 5, we used two powerful 600W GPUs via VR SLI, allowing us to achieve the highest pixel density and the most stunning visuals we have ever experienced in VR.”

RTX PRO GPUs run on the Nvidia AI platform and feature larger memory capacity and the latest Tensor Cores to accelerate a deep ecosystem of AI-accelerated applications built on Nvidia CUDA and RTX technology. From the latest AI-based content creation tools to new reasoning models, such as the Nvidia Llama Nemotron Reason family of models and Nvidia NIM microservices unveiled today, inferencing is faster than ever. And with over 400 Nvidia CUDA-X libraries, developers can easily build, optimize, deploy and scale new AI applications, from workstations to the data center or cloud.

Enterprises can fast-track their AI development and deployments by prototyping locally with an Nvidia RTX PRO GPU and the Nvidia Omniverse and Nvidia AI Enterprise platforms, Nvidia Blueprints and Nvidia NIM, which gives access to easy-to-use inference microservices backed by enterprise-level support. They can then run these applications at scale on the RTX PRO 6000 Blackwell Server Edition, which Nvidia calls the ultimate universal data center GPU for AI and visual computing, delivering breakthrough acceleration for the most demanding compute-intensive enterprise workloads.

Availability

The Nvidia RTX PRO 6000 Blackwell Server Edition will soon be available in server configurations from leading data center system partners including Cisco, Dell Technologies, Hewlett Packard Enterprise, Lenovo and Supermicro.

Cloud service providers and GPU cloud providers including AWS, Google Cloud, Microsoft Azure and CoreWeave will be among the first to offer instances powered by the Nvidia RTX PRO 6000 Blackwell Server Edition later this year. In addition, the server edition GPU will be available in data center platforms from ASUS, GIGABYTE, Ingrasys, Quanta Cloud Technology (QCT) and other global system partners.

The Nvidia RTX PRO 6000 Blackwell Workstation Edition and Nvidia RTX PRO 6000 Blackwell Max-Q Workstation Edition will be available through global distribution partners such as PNY and TD Synnex starting in April, with availability from manufacturers, such as BOXX, Dell, HP Inc., Lambda and Lenovo, starting in May.

The Nvidia RTX PRO 5000, RTX PRO 4500 and RTX PRO 4000 Blackwell GPUs will be available in the summer from BOXX, Dell, HP and Lenovo and through global distribution partners.

Nvidia RTX PRO Blackwell laptop GPUs will be available from Dell, HP, Lenovo and Razer starting later this year.
