Speaking at Hampton Court Palace on the sidelines of the Terra Carta Sustainable Markets Initiative sustainable transition summit, Tokamak Energy chief executive Warrick Matthews described how his job involves “myth busting” around nuclear fusion.

In an exclusive interview, he explains how the company is on a path to commercialising nuclear fusion technology after raising $125 million from existing and strategic investors in November.

Energy Voice: Can you tell me any more about Tokamak’s tech scale-up prototype for wind power?

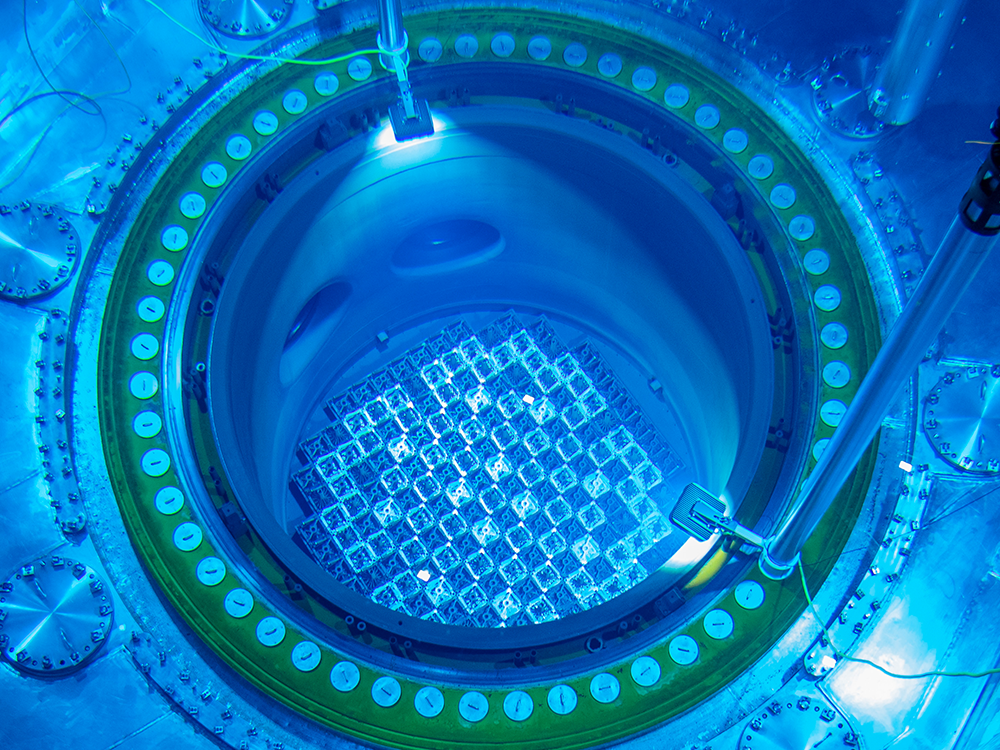

Warrick Matthews: We’re 15 years old as a company and our founders spun out of UKAEA (the UK Atomic Energy Authority). They had operated a conventional tokamak. A tokamak is a device to hold plasma in a strong magnetic field. The conventional tokamak looks like a doughnut, a torus. Our device looks a bit more like a cored apple.

Future machines have to have superconductors. You put energy into them and at an operating temperature they have zero resistance. They can carry very high currents, which is why they’re suddenly interesting for power transmission.

Or you can wind them into coil packs and produce very high-field magnets – that’s the magnet that goes in a big sort of D-shape, or in a ring around the plasma.

You mentioned how tokamaks can be used for power generation. Please elaborate.

We’re engaged across numerous verticals [with] strategic partners now in propulsion – in water, on land for rail, in the air for new hybrid propulsion and then in space.

When you look at the biggest, highest power-output offshore wind turbines, they’re enormous and expensive and a lot of the design is based around the nacelle at the top – which is extremely heavy because it’s got hundreds of tonnes of rare-earth permanent magnet installed in the machine.

If you replace that with the technology that we now use, you would literally take out 99.9% of the rare earth magnet material. You would produce a higher magnetic field device, so it can be much lighter.

You would also decouple your supply chain from certain countries, specifically those rare-earth magnets coming from China. It gives you more flexibility.

If you looked at it, it looks like a metal ribbon, but within that metal ribbon there is a layer of the superconductor which is between one and three microns thick; which is a 20th of a human hair.

You’re really minimising the amount of copper that you would use or aluminium you’d use in a data centre.

In November you secured $125 million (£115m) of financing. What will that be put towards?

We’ve raised a total of $330 million historically and a lot of that was developing technology and, of course, building our machine that we rely upon for our development, ST40.

On the money that we’ve raised in the Series C round: firstly, we’ve had some very loyal shareholders and investors across our history, and they followed their investment into this series. But we also wanted to add in new investors.

So, we’re really pleased that in this round we added in the likes of Lingotto, which is part of the Exor and Agnelli group. We added in Furukawa Electric, one of our supply chain partners, which now invests in us and, as a result, we’ve got two unique opportunities in Japan.

We are also rapidly developing this magnet technology within the company, which is revenue generating already. And as we identify new verticals, that gives us the ability to really grow a business within the business.

Is it true that you have a demonstrator already in place specifically for nuclear fusion?

Controlling burning plasma within a device naturally sounds really quite hard. It’s not quite the power of a volcano. But it’s containing the fourth state of matter. It’s never going to be so easy, right? Machine learning and AI genuinely do have huge promise for that.

In ST40, we’ve taken plasma temperatures to seven to eight times the temperature of the sun; and so over 100 million degrees, which is one of the thresholds you need for fusion. Essentially, fusion on Earth is just trying to replicate what nature does really well in the sun or in a star…

Get plasma really hot, have very strong gravitational fields that we replicate with a magnet, and then force isotopes of hydrogen that would naturally want to repel themselves from each other, to fuse together, make helium, shed a neutron; and there’s your energy.

How is fusion dealing with different risks around nuclear waste and hydrogen?

The reason why so many companies and governments are driven to develop fusion – it’s limitless energy. It’s incredibly energy dense and it’s an inherently fail-safe reaction that’s going on – so to unpack a few of those things then, fuel-wise; it’s limitless.

Effectively you’re using deuterium from seawater, and tritium, which a commercial device needs to manufacture a surplus of, and we know how you can do that.

You impact lithium with the neutrons, you create a surplus of that, and governments like the UK are very proactive in working that part of the plan.

You’ve got fuel that you don’t have to go to the far corners of the earth to find; it’s sea water.

The energy-dense bit is probably one of my favourite ever stats for fusion: that a one-litre bottle of fusion fuel is equivalent to 10 million litres of oil, which is a heck of a prize to go after, and much cleaner, so no CO2.

Back to the inherent fail-safe. Unlike a fission device – and the first thing I’d say is there are lots of fission devices around the world that are incredibly safe, so I’m not knocking fission as a bad thing – it’s very different. It’s the opposite of fusion.

Clearly, in fission you are splitting a heavy atom and you’re containing a chain reaction, and your fuel is right there in the reactor. Go over to a fusion device, and you are forcing fusion to occur with these incredible parameters around temperature and pressure. And if any of those dropped, so if your temperature dropped below that threshold, a hundred million, then everything stops, because it always wants to stop.

In the case of nuclear fusion, there’s no need to get rid of nuclear waste, is that right?

I think, looking at nuclear fission as an industry and the amount of materials that you’re having to deal with versus the upside of baseload power that’s not emitting, I would still say it’s worth the equation, but you have very long-lived nuclear waste. You’re into activated materials at the thousands or tens of thousands of years, which is quite a hard thing to comprehend.

Fusion is nothing like fission. You do activate some components that are there, like the first wall of the device, but you’re into tens of years, not tens of thousands of years, that you would have to take care of those components and store them. It’s a very, very different prospect. It is a low to intermediate level of waste on a number of key components in the device.

The whole safety point leads us to another, which is regulation, because one of the things that holds fission back is the cost and speed of deployment, a lot of which loops back to its nuclear-regulated status.

Fusion, whether that’s our own device that we operate or up the road at UKAEA, that’s operated for 40 years. None of that is nuclear regulated. It’s regulated by the Environment Agency and the Health and Safety Executive. It’s equivalent to operating a big hospital.

Do you think the UK is doing enough in terms of funding for fusion right now?

The UK can look at itself and say we are a global leader in fusion, which is a good start.

We have 40 years of operating the Joint European Torus, the most powerful tokamak that’s existed at Culham in Oxfordshire. There are 3,000 people who work around fusion. It has spun out companies like ours to drive fusion forward. So, we’re in a very good place right now.

But the global competition is hot. The US, partner countries and now increasingly China are really driving both financial and people resources into fusion. They want to advance that quickly.

That’s good for the world, but we don’t want to lose the position that we’ve got, so we’ve really got to keep up the intensity of the activity.

And how far away are we from having the first nuclear fusion generated power, potentially in homes or in industrial works at commercial scale?

The consensus view of those in the US milestone programme, which includes Tokamak Energy, is that you can design and build a fusion pilot plant generating net energy that could be going to the grid, or could be heat for an industrial process, by the mid-2030s, a decade away.

The UK’s big project that follows JET (Joint European Torus) in Oxfordshire is called STEP (Spherical Tokamak for Energy Production), which is being built at the site of an old coal-fired power station in Nottinghamshire.

It really does become the power for humanity after that – not just green electrons, but also industrial applications that need heat rather than electricity. It’s great that we can talk about SAF (sustainable aviation fuel) and hydrogen and ammonia; it’s going to power everything, but you’ve got to produce it.

How do you think fusion might compete with or complement small modular reactors (SMRs) in future?

SMR technology is more available and nearer term than fusion. It’s out there right now, so we’re big supporters of getting going with that. But we see it as an important step on the way to fusion.