
Productivity platform Notion is betting on large language models (LLMs) to power more of its new enterprise capabilities, including building OpenAI’s GPT-4.1 and Anthropic’s Claude 3.7 into its dashboard.

Even as both OpenAI and Anthropic start building productivity features into their respective chat platforms, bringing these LLMs into a separate service shows how competitive the space is.

Notion announced its new all-in-one AI toolkit inside the Notion workspace today, including AI meeting notes, enterprise search, research mode and the ability to switch between GPT-4.1 and Anthropic’s Claude 3.7.

One of the new features lets users chat with LLMs inside the Notion workspace and switch between models. Right now, Notion only supports GPT-4.1 and Claude 3.7. The idea is to reduce window and context switching.

The company said early adopters of the new feature include OpenAI, Ramp, Vercel and Harvey.

Model mixing and fine-tuning

Notion built the features with a mix of LLMs from OpenAI and Anthropic, along with its own models.

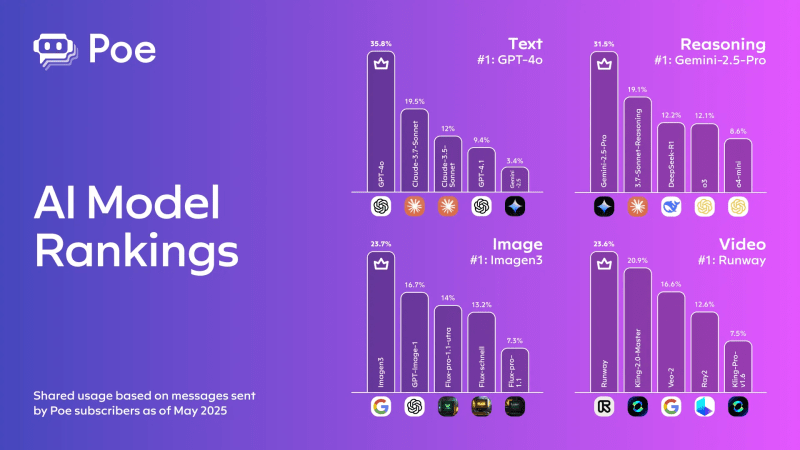

The move away from pure reasoning models makes Notion’s choice of models interesting. GPT-4.1 is technically not a reasoning model, while Claude 3.7 is a hybrid model, capable of acting as both a regular LLM and a reasoning model.

Reasoning models are having a moment, though many warn that these models can still sometimes lie. And while reasoning models like OpenAI’s o3 (and yes, Claude 3.7 Sonnet) take their time to answer, working through different scenarios, they are not the best fit for quick-thinking and data-gathering tasks. Many productivity tasks, like meeting transcriptions or searches for task data, don’t need the power of a reasoning model behind them.

Sarah Sachs, Notion AI Engineering Lead, told VentureBeat in an email that the company aimed for features that didn’t sacrifice accuracy, safety or privacy, while still responding to queries at the speed enterprises need.

“In order to achieve a low-latency experience, we fine-tuned the models with internal usage and feedback from trusted testers, in order to make the AI specialized in Notion retrieval tasks,” Sachs said. “This setup helps Notion AI understand business needs, give relevant answers, serve customers with sub-second latency, and keep customer data safe and compliant.”

Sachs said hosting and building with different models allows users to “pick the option that best fits their needs— whether that’s a more conversational tone, better coding capabilities, or faster response times.”

AI meeting notes and more

Notion AI for Work can listen in on calls to track and transcribe meetings for users, especially if they have added Notion to their calendar.

Users can also turn to Notion for enterprise search by connecting apps like Slack, Microsoft Teams, GitHub, Google Drive, SharePoint and Gmail. Sachs said Notion AI will search an organization’s internal documents, databases and the connected apps.

The new Research Mode draws on those enterprise search results, along with other uploaded documents or a web search. It drafts documents directly in Notion while “analyzing all of your sources—plus the web—and think through the best response.”

Notion also added both GPT-4.1 and Claude 3.7 as chat options. OpenAI noted that Notion users can chat with GPT-4.1 on the workspace and create a Notion template directly from the conversation. Sachs said the company is working on adding more models to its chat feature.

GPT-4.1 is now built into Notion!

You can ask GPT-4.1 to help with anything, then create a Notion page directly from the convo.

(btw GPT-4.1’s output in this video wasn’t sped up.) https://t.co/RMivVBMuEr pic.twitter.com/PVrZPUpWrf

— edwin (@edwinarbus) May 13, 2025

Subscribers to Notion’s Business and Enterprise plans with the Notion AI add-on get immediate access to the new features.

Compete with the model providers

Even though Notion users can access both Anthropic and OpenAI on the platform, Notion still has to compete with model providers.

OpenAI’s Deep Research has been hailed as a game-changer for agentic retrieval-augmented generation (RAG). Google also has its own version of Deep Research. And Anthropic’s Claude can search the internet for you.

Notion also needs to compete with other platforms that already use AI. The meeting space is chock-full of companies tracking, transcribing, summarizing and pulling insights from calls with AI.

However, Notion’s big selling point is that it offers all these capabilities on a single platform. Enterprises can use all of those separate services, but they live outside their chosen productivity platform. Notion said having all of these features in one place, under a single price, will save enterprises from subscribing to multiple platforms.
