
Surprise! Just days after reports emerged suggesting OpenAI was buying white-hot coding startup Windsurf, OpenAI appears to be launching its own competing service as a research preview under the brand name Codex, going head-to-head against Windsurf, Cursor, and the growing list of AI coding tools offered by startups and large tech companies including Microsoft and Amazon.

Unlike OpenAI’s previous Codex code completion AI model, the new version is a full cloud-based AI software engineering (SWE) agent built atop a fine-tuned version of OpenAI’s o3 reasoning model that can execute multiple development tasks in parallel.

Starting today it will be available for ChatGPT Pro, Enterprise, and Team users, with support for Plus and Edu users expected soon.

Codex’s evolution: from model to autonomous AI coding agent

This release marks a significant step forward in Codex’s development. The original Codex debuted in 2021 as a model for translating natural language into code, available through OpenAI’s then-nascent application programming interface (API).

It was the engine behind GitHub Copilot, the popular autocomplete-style coding assistant designed to work within IDEs like Visual Studio Code.

That initial iteration focused on code generation and completion, trained on billions of lines of public source code.

However, the early version came with limitations. It was prone to syntactic errors, insecure code suggestions, and biases embedded in its training data. Codex occasionally proposed superficially correct code that failed functionally, and in some cases, made problematic associations based on prompts.

Despite those flaws, it showed enough promise to establish AI coding tools as a rapidly growing product category. That original model has since been deprecated, and the Codex name now refers to a new suite of products, according to an OpenAI spokesperson.

GitHub Copilot officially transitioned off OpenAI’s Codex model in March 2023, adopting GPT-4 as part of its Copilot X upgrade to enable deeper IDE integration, chat capabilities, and more context-aware code suggestions.

Agentic visions

The new Codex goes far beyond its predecessor. Now built to act autonomously over longer durations, Codex can write features, fix bugs, answer codebase-specific questions, run tests, and propose pull requests—each task running in a secure, isolated cloud sandbox.

The design reflects OpenAI’s broader ambition to move beyond quick answers and into collaborative work.

Josh Tobin, who leads the Agents Research Team at OpenAI, said during a recent briefing: “We think of agents as AI systems that can operate on your behalf for a longer period of time to accomplish big chunks of work by interacting with the real world.” Codex fits squarely into this definition. “Our vision is that ChatGPT will become almost like a virtual coworker—not just answering quick questions, but collaborating on substantial work across a range of tasks,” he added.

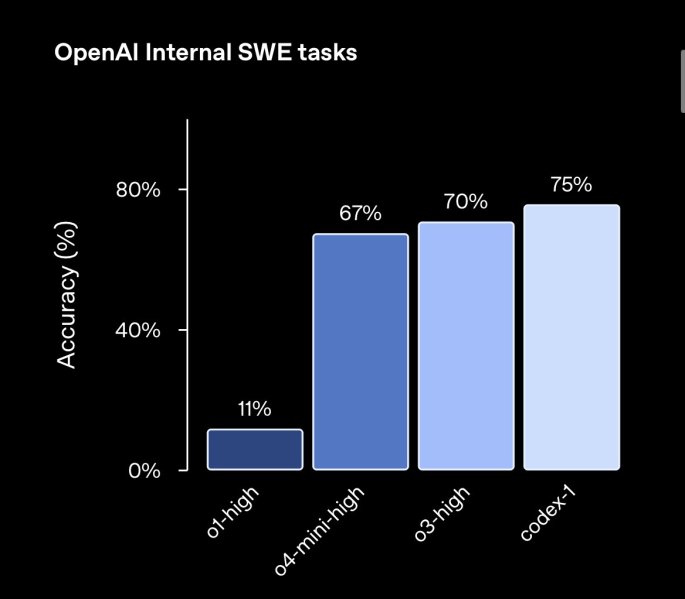

Figures released by OpenAI show that the new Codex-1 SWE agent outperforms all of OpenAI’s latest reasoning models on internal SWE tasks.

New capabilities, new interface, new workflows

Codex tasks are initiated through a sidebar interface in ChatGPT, allowing users to prompt the agent with tasks or questions.

The agent processes each request in an air-gapped environment loaded with the user’s repository and configured to mirror the development setup. It logs its actions, cites test outputs, and summarizes changes—making its work traceable and reviewable.

Alexander Embiricos, head of OpenAI’s Desktop & Agents team (and the former CEO and co-founder of Multi, a screenshare collaboration startup that OpenAI acquired for an undisclosed sum last year), said in a briefing with journalists that “the Codex agent is a cloud-based software engineering agent that can work on many tasks in parallel, with its own computer to run safely and independently.”

Internally, he said, engineers already use it “like a morning to-do list—fire off tasks to Codex and return to a batch of draft solutions ready to review or merge.”

Codex also supports configuration through AGENTS.md files—project-level guides that teach the agent how to navigate a codebase, run specific tests, and follow house coding styles.

“We trained our model to read code and infer style—like whether or not to use an Oxford comma—because code style matters as much as correctness,” Embiricos said.

Security and practical use

Codex executes tasks without internet access, drawing only on user-provided code and dependencies. This design ensures secure operation and minimizes potential misuse.

“This is more than just a model API,” said Embiricos. “Because it runs in an air-gapped environment with human review, we can give the model a lot more freedom safely.”

OpenAI also reports early external use cases. Cisco is evaluating Codex for accelerating engineering work across its product lines. Temporal uses it to run background tasks like debugging and test writing. Superhuman leverages Codex to improve test coverage and enable non-engineers to suggest lightweight code changes. Kodiak, an autonomous vehicle firm, applies it to improve code reliability and gain insights into unfamiliar stack components.

OpenAI is also rolling out updates to Codex CLI, its lightweight terminal agent for local development. The CLI now uses a smaller model—codex-mini-latest—optimized for low-latency editing and Q&A.

Pricing for that model is set at $1.50 per million input tokens and $6 per million output tokens, with a 75% caching discount. Codex is currently free to use during the rollout period, with rate limits and on-demand pricing options planned.
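To make those rates concrete, here is a minimal sketch of a cost estimate in Python. It assumes the 75% caching discount applies only to cached input tokens, which the pricing summary above does not spell out. The function name `estimate_cost` and its parameters are hypothetical, for illustration only, and are not part of any OpenAI SDK.

```python
from decimal import Decimal

# Published rates, in US dollars per one million tokens.
INPUT_PRICE_PER_MILLION = Decimal("1.50")
OUTPUT_PRICE_PER_MILLION = Decimal("6.00")

# ASSUMPTION: the 75% discount applies to cached input tokens only.
CACHE_DISCOUNT = Decimal("0.75")

TOKENS_PER_MILLION = 1_000_000


def estimate_cost(input_tokens: int, output_tokens: int,
                  cached_input_tokens: int = 0) -> Decimal:
    """Estimate the dollar cost of a request (hypothetical helper).

    cached_input_tokens is the portion of input_tokens billed at the
    discounted rate; it cannot exceed input_tokens.
    """
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    if not 0 <= cached_input_tokens <= input_tokens:
        raise ValueError("cached_input_tokens must be between 0 and input_tokens")

    uncached = input_tokens - cached_input_tokens
    discounted_rate = INPUT_PRICE_PER_MILLION * (1 - CACHE_DISCOUNT)

    total = (
        uncached * INPUT_PRICE_PER_MILLION
        + cached_input_tokens * discounted_rate
        + output_tokens * OUTPUT_PRICE_PER_MILLION
    )
    return total / TOKENS_PER_MILLION
```

Under these assumptions, a request with one million input tokens and one million output tokens would cost $7.50 with no caching, and $6.375 if every input token were served from cache.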

Does this mean OpenAI IS NOT buying Windsurf? 🤔

The release of Codex comes amid increased competition in the AI coding tools space—and signals that OpenAI is intent on building, rather than buying, its next phase of products.

According to recent data from SimilarWeb, traffic to developer-focused AI tools has surged by 75% over the past 12 weeks, underscoring the growing demand for coding assistants as essential infrastructure rather than experimental add-ons.

Reports from TechCrunch and Bloomberg suggest OpenAI held acquisition talks with fast-growing AI dev tool startups Cursor and Windsurf. Cursor allegedly walked away from the table; Windsurf reportedly agreed in principle to be acquired by OpenAI for a price of $3 billion, though no deal has been officially confirmed by either OpenAI or Windsurf.

Just yesterday, in fact, Windsurf debuted its own family of coding-focused foundation models, SWE-1, purpose-built to support the full software engineering lifecycle, from debugging to long-running project maintenance. The SWE-1 models were reportedly custom-made and trained entirely in-house using a new sequential data model tailored to real-world development workflows.

Many things may be happening behind the scenes between the two companies. But to me, the timing suggests the two are not aligning soon: Windsurf launched its own coding foundation models, departing from its strategy to date of using Llama variants and giving users the option to slot in OpenAI and Anthropic models, and one day later OpenAI released its own Windsurf competitor.

But on the other hand, the fact that this new Codex AI SWE agent is in “research preview” to start may be a form of OpenAI pressuring Windsurf, Cursor, or anyone else to come to the bargaining table and strike a deal. Asked about the reports of a potential Windsurf acquisition, an OpenAI spokesperson told VentureBeat they had nothing to share on that front.

In either case, Embiricos frames Codex as far more than a mere code tool or assistant.

“We’re about to undergo a seismic shift in how developers work with agents—not just pairing with them in real time, but fully delegating tasks,” he said. “The first experiments were just reasoning models with terminal access. The experience was magical—they started doing things for us.”

Built for dev teams, not merely solo devs

Codex is designed with professional developers in mind, but Embiricos noted that even product managers have found it helpful for suggesting or validating changes before pulling in human SWEs. This versatility reflects OpenAI’s strategy of building tools that augment productivity across technical teams.

Trini, an engineering lead on the project, summarized the broader ambition behind Codex: “This is a transformative change in how software engineers interface with AI and computers in general. It amplifies each person’s potential.”

OpenAI envisions Codex as the centerpiece of a new development workflow where engineers assign high-level tasks to agents and collaborate with them asynchronously. The company is building toward deeper integrations across GitHub, ChatGPT Desktop, issue trackers, and CI systems. The long-term goal is to blend real-time pairing and long-horizon task delegation into a seamless development experience.

As Josh Tobin put it, “Coding underpins so many useful things across the economy. Accelerating coding is a particularly high-leverage way to distribute the benefits of AI to humanity, including ourselves.”

Whether or not OpenAI closes deals for competitors, the message is clear: Codex is here, and OpenAI is betting on its own agents to lead the next chapter in developer productivity.
