Microsoft launched a comprehensive strategy to position itself at the center of what it calls the “open agentic web” at its annual Build conference this morning, introducing dozens of AI tools and platforms designed to help developers create autonomous systems that can make decisions and complete tasks with limited human intervention.

The Redmond, Wash.-based technology giant made more than 50 announcements spanning its entire product portfolio, from GitHub and Azure to Windows and Microsoft 365, all focused on advancing AI agent technologies that can work independently or collaboratively to solve complex business problems.

“We’ve entered the era of AI agents,” said Frank Shaw, Microsoft’s Chief Communications Officer, in a blog post coinciding with the Build announcements. “Thanks to groundbreaking advancements in reasoning and memory, AI models are now more capable and efficient, and we’re seeing how AI systems can help us all solve problems in new ways.”

How AI agents transform software development through autonomous capabilities

The concept of the “agentic web” moves far beyond today’s AI assistants. While current AI tools mainly respond to human questions and commands, agents actively initiate tasks, make decisions independently, coordinate with other AI systems, and complete complex workflows with minimal human supervision. This marks a fundamental shift in how AI systems operate and interact with both users and other technologies.

Kevin Scott, Microsoft’s CTO, described this shift during a press conference as fundamentally changing how humans interact with technology: “Reasoning will continue to improve. We’re going to see great progress there. But there are a handful of new things that have to start happening pretty quickly in order for agents to be the recipients of more complicated work.”

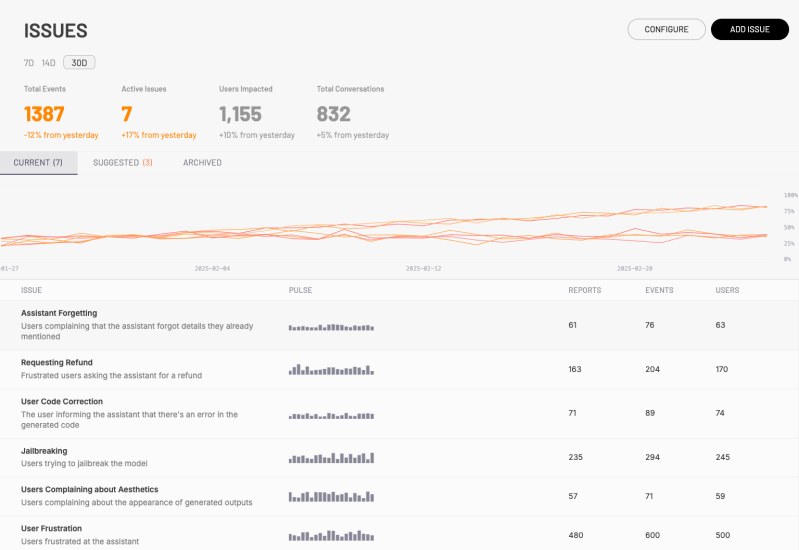

One critical missing element, according to Scott, is memory: “One of the things that is quite conspicuously missing right now in agents is memory.” To address this, Microsoft is introducing several memory-related technologies, including structured RAG (Retrieval-Augmented Generation), which helps AI systems more precisely recall information from large volumes of data.
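The article does not describe how Microsoft's structured RAG works internally. One common reading of the idea is that, instead of only retrieving text chunks by embedding similarity, an agent first extracts structured facts (entity, attribute, value) from its data and then recalls them by exact keys. The Python sketch below assumes that reading; `Fact`, `StructuredMemory` and `build_prompt` are hypothetical names, and the extraction step, which would normally be done by a model, is replaced by facts added by hand.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Fact:
    """One structured record extracted from an agent's data (hypothetical schema)."""
    entity: str
    attribute: str
    value: str
    source: str  # where the fact came from, kept so answers can cite it


class StructuredMemory:
    """Toy structured index: facts are stored and recalled by (entity, attribute)."""

    def __init__(self) -> None:
        self._index: dict[tuple[str, str], list[Fact]] = defaultdict(list)

    @staticmethod
    def _key(entity: str, attribute: str) -> tuple[str, str]:
        # Normalize case so "Project Atlas" and "project atlas" hit the same key.
        return entity.strip().lower(), attribute.strip().lower()

    def add(self, fact: Fact) -> None:
        self._index[self._key(fact.entity, fact.attribute)].append(fact)

    def recall(self, entity: str, attribute: str) -> list[Fact]:
        # .get() avoids creating empty entries for keys that were never stored.
        return list(self._index.get(self._key(entity, attribute), []))


def build_prompt(question: str, facts: list[Fact]) -> str:
    """Put only the recalled facts, with their sources, in front of the model."""
    context = "\n".join(
        f"- {f.entity} / {f.attribute}: {f.value} (source: {f.source})" for f in facts
    )
    return f"Context:\n{context}\n\nQuestion: {question}"


# Usage: in a real system a model would extract these facts from email, chat, files, etc.
memory = StructuredMemory()
memory.add(Fact("Project Atlas", "deadline", "2025-09-30", "email-42"))
memory.add(Fact("Project Atlas", "owner", "Dana", "org-chart"))
print(build_prompt("When is Project Atlas due?", memory.recall("project atlas", "Deadline")))
```

Exact-key lookup like this trades recall for precision: it returns nothing when a question does not map to a stored key, which is one reason such an index would likely be paired with conventional vector search in practice.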

“You will likely have a personal agent and a work agent, and the work agent is going to have a whole bunch of your employer’s information that belongs to both you and your employer,” explained Steven Bathiche, CVP and technical fellow at Microsoft, during a presentation about agents.

Bathiche emphasized that this contextual awareness is crucial for creating agents that “understand you well, contextualize where you are and what you want to do, and ultimately understand you so that you can click fewer buttons at the end of the day.” This shift from purely reactive AI to systems with persistent memory represents one of the most profound aspects of the agentic revolution.

GitHub evolves from code completion to autonomous developer experience

Microsoft is placing GitHub, its popular developer platform, at the forefront of its agentic strategy with the introduction of the GitHub Copilot coding agent, which goes beyond suggesting code snippets to autonomously solving programming tasks.

The new GitHub Copilot coding agent can now operate as a member of software development teams, autonomously refactoring code, improving test coverage, fixing defects, and even implementing new features. For complex tasks, GitHub Copilot can collaborate with other agents across all stages of the software lifecycle.

Microsoft is also open-sourcing GitHub Copilot Chat in Visual Studio Code, allowing the developer community to contribute to its evolution. This reflects Microsoft’s dual approach of leading AI innovation while embracing open-source principles.

“Over the next few months, the AI-powered capabilities from the GitHub Copilot extensions will be part of the VS Code open-source repository, the same open-source repository that drives the most popular software development tool,” the company explained in its announcement, emphasizing its commitment to transparency and community-driven innovation.

Multi-agent systems enable complex business workflows and process automation

For businesses looking to deploy AI agents, Microsoft unveiled significant updates to its Azure AI Foundry, a platform for developing and managing AI applications and agents.

Ray Smith, VP of AI Agents at Microsoft, highlighted the importance of multi-agent systems in an exclusive interview with VentureBeat: “Multi-agent invocation, debugging and drilling down into those multiple agents is key, and that extends beyond just Copilot Studio to what’s coming with Azure AI Foundry agents. Our customers have consistently emphasized that this multi-agent capability is essential for their needs.”

Smith explained why splitting tasks across multiple agents is crucial: “It’s very hard to create a reliable process that you squeeze into one agent. Breaking it up into parts improves maintainability and makes building solutions easier, but it also significantly enhances reliability as well.”
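Smith's reliability argument can be illustrated with a toy orchestrator. In this Python sketch, each hypothetical "agent" is a plain function with one narrow job plus a check on its own output, so a bad result is caught and retried at the step that produced it rather than surfacing at the end of one long prompt. Nothing here reflects the actual APIs of Copilot Studio or Azure AI Foundry; `Step`, `orchestrate` and the invoice example are invented for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    """One narrowly scoped 'agent' in a pipeline (hypothetical structure)."""
    name: str
    run: Callable[[dict], dict]    # reads shared state, returns new fields
    check: Callable[[dict], bool]  # validates this step's output before it is accepted


class StepFailed(Exception):
    pass


def orchestrate(steps: list[Step], task: dict, max_retries: int = 1) -> tuple[dict, list[str]]:
    """Run steps in order, retrying a step whose output fails its own check."""
    state = dict(task)
    trace: list[str] = []
    for step in steps:
        for _ in range(max_retries + 1):
            out = step.run(state)
            if step.check(out):
                state.update(out)
                trace.append(step.name)
                break
        else:  # no attempt passed the check: fail at this step, not at the end
            raise StepFailed(f"{step.name} failed after {max_retries + 1} attempts")
    return state, trace


# Two toy "agents": one extracts an amount, one applies an approval rule.
def extract_amount(state: dict) -> dict:
    amount = float(state["text"].split("$")[1].split()[0])
    return {"amount": amount}


def approve(state: dict) -> dict:
    return {"approved": state["amount"] < 1000}


pipeline = [
    Step("extract", extract_amount, lambda out: out.get("amount", 0) > 0),
    Step("approve", approve, lambda out: "approved" in out),
]
result, trace = orchestrate(pipeline, {"text": "Invoice total $250.00 due"})
print(result["approved"], trace)
```

Because each step validates only its own narrow output, a failure points to a single named step, which is the maintainability and debugging benefit Smith describes.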

The Azure AI Foundry Agent Service, now generally available, allows developers to build enterprise-grade AI agents with support for multi-agent workflows and open protocols like Agent2Agent (A2A) and Model Context Protocol (MCP). This enables organizations to orchestrate multiple specialized agents to handle complex tasks.
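MCP messages are JSON-RPC 2.0, and the protocol defines methods such as `tools/list` and `tools/call` for discovering and invoking a server's tools. The Python sketch below only builds a `tools/call` request and unpacks a text result using the standard library; it assumes a hypothetical tool named `lookup_order`, and it skips the `initialize` handshake and the transport (stdio or HTTP) that a real MCP client must handle.

```python
import json
from itertools import count
from typing import Optional

_next_id = count(1)  # JSON-RPC request ids should be unique within a session


def make_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request."""
    msg = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params is not None:
        msg["params"] = params
    return json.dumps(msg)


def call_tool(name: str, arguments: dict) -> str:
    """Build an MCP tools/call request for a named tool."""
    return make_request("tools/call", {"name": name, "arguments": arguments})


def parse_tool_result(raw: str) -> tuple[str, bool]:
    """Return (text, is_error) from a tools/call response."""
    msg = json.loads(raw)
    if "error" in msg:  # protocol-level failure, e.g. unknown method
        raise RuntimeError(msg["error"].get("message", "unknown JSON-RPC error"))
    result = msg["result"]
    texts = [c["text"] for c in result.get("content", []) if c.get("type") == "text"]
    # isError flags a failure inside the tool itself, reported as a normal result
    return "\n".join(texts), bool(result.get("isError", False))


request = call_tool("lookup_order", {"order_id": "A-17"})  # hypothetical tool
print(request)
reply = ('{"jsonrpc": "2.0", "id": 1, "result": {"content": '
         '[{"type": "text", "text": "Order A-17 shipped"}], "isError": false}}')
print(parse_tool_result(reply))
```

Keeping the wire format to plain JSON-RPC is what lets agents and tools from different vendors interoperate: any client that can emit and parse these messages can, in principle, talk to any compliant server.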

Local AI capabilities expand as processing power shifts to client devices

While cloud-based AI has dominated headlines, Microsoft is making a significant push toward local, on-device AI with several announcements targeting developers who want to deploy AI directly on user devices.

Windows AI Foundry, an evolution of Windows Copilot Runtime, provides a unified platform for local AI development on Windows. It includes Windows ML, a built-in AI inferencing runtime, and tools for preparing and optimizing models for on-device deployment.

“Foundry Local will make it easy to run AI models, tools and agents directly on-device, whether Windows 11 or macOS,” the company announced. “Leveraging ONNX Runtime, Foundry Local is designed for situations where users can save on internet data usage, prioritize privacy and reduce costs.”

Steven Bathiche explained during a presentation how client-side AI has advanced remarkably fast: “We’re super busy trying to essentially predict and stay ahead. Most of our predictions come true within three or four months, which is kind of crazy, because I’m used to predicting a year or two years out, and then feeling good about that timeline. Now it’s like we’re stressed all the time, but it’s all fun.”

Security and identity management address enterprise AI governance challenges

As agent usage proliferates across organizations, Microsoft is addressing the critical need for security, governance, and compliance with several new capabilities designed to prevent what it calls “agent sprawl.”

“[With] Microsoft Entra Agent ID, now in preview, agents that developers create in Microsoft Copilot Studio or Azure AI Foundry are automatically assigned unique identities in an Entra directory, helping enterprises securely manage agents right from the start and avoid ‘agent sprawl’ that could lead to blind spots,” according to the announcement.

Microsoft is also integrating its Purview data security and compliance controls with its AI platforms, allowing developers to build AI solutions with enterprise-grade security and compliance features. This includes Data Loss Prevention controls for Microsoft 365 Copilot agents and new capabilities for detecting sensitive data in AI interactions.

Ray Smith advised IT teams managing security: “Building solutions from the ground up gives you total flexibility, but then you have to add in a lot of the controls around these frameworks yourself. The beauty of Copilot Studio is we’re giving you a managed infrastructure framework with lifecycle management and many governance and observability capabilities built in.”

Scientific discovery platform demonstrates how AI agents transform R&D timelines

Perhaps one of the most ambitious applications of AI agents announced at Build is Microsoft Discovery, a platform designed to accelerate scientific research and development across industries from pharmaceuticals to materials science.

Jason Zander, the CVP of Advanced Communications & Technologies at Microsoft, described in an exclusive interview with VentureBeat how this platform was used to discover a non-PFAS immersion coolant for data centers in just 200 hours — a process that traditionally takes years.

“In our area, our data centers are huge for us because we’re a hyperscaler,” Zander said. “Using this framework, we were able to screen 367,000 potential candidates in just 200 hours. We then took this to a partner who helped synthesize the results.”

Zander elaborated on how this represents a dramatic acceleration of traditional R&D timelines: “The meta point is, all those things took, in some cases, years or even a decade to create. Now they’ve been banned due to regulatory constraints. And the real business question companies need to answer is: you need to replace these products because you have offerings that are now banned…and it took you years to create your existing products. How do you compress that development timeline going forward?”

Industry standards create ecosystem for interoperable agents across platforms

Central to Microsoft’s vision is the advancement of open standards that enable agent interoperability across different platforms and services, with the Model Context Protocol (MCP) playing a particularly important role.

The company announced that it has joined the MCP Steering Committee and introduced two new contributions to the MCP ecosystem: an updated authorization specification and a design for an MCP server registry service.

Jay Parikh, who leads Microsoft’s Core AI team, emphasized why speed matters: “Inside Microsoft, this is all about learning faster. Speed is essential because the world is changing so rapidly with new technologies, applications, and competitors emerging constantly.”

Microsoft also introduced NLWeb, a new open project that “can play a similar role to HTML for the agentic web,” allowing websites to provide conversational interfaces for users with the model of their choice and their own data.

Microsoft’s agent strategy positions it at center of next computing paradigm

The breadth and depth of Microsoft’s announcements at Build 2025 underscore the company’s all-in approach to AI agents as the next major computing paradigm.

“The last time that I was as excited about being a software developer or a technologist as I am now was in the 90s,” Kevin Scott said during the press conference. “One of the reasons why is I had this kid-in-a-candy-store feeling with building blocks that even someone like me could fully understand. I could grasp how each of these individual pieces worked and how they composed together, and I could just go play.”

Industry analysts note that Microsoft’s approach — combining cloud and edge AI, open standards with proprietary technologies, and developer tools with business applications — positions the company as a central player in the emerging agentic ecosystem.

For enterprise customers, the immediate impact may be most visible in increased automation of complex workflows, more intelligent responses to business events, and the ability to build custom agents that incorporate domain-specific knowledge and processes.

As we transition from a web of information to a web of agents, Microsoft’s strategy mirrors its earlier approach to cloud computing — providing comprehensive tools, platforms, and infrastructure while simultaneously advancing open standards.

The question now isn’t whether AI agents will transform business operations, but how quickly organizations can adapt to a world where machines don’t just respond to commands, but anticipate needs, make decisions, and fundamentally reshape how work gets done.
