
Facebook creator and Meta CEO Mark “Zuck” Zuckerberg shook the world again today when he announced sweeping changes to the way his company moderates and handles user-generated posts and content in the U.S.

Citing the “recent elections” as a “cultural tipping point,” Zuck explained in a roughly five-minute video posted to his Facebook and Instagram accounts this morning (Tuesday, January 7) that Meta would cease using independent third-party fact checkers and fact-checking organizations to help moderate and append notes to user posts shared across the company’s suite of social networking and messaging apps, including Facebook, Instagram, WhatsApp, Threads, and more.

Instead, Zuck said that Meta would rely on a “Community Notes” style approach, crowdsourcing information from the users across Meta’s apps to give context and veracity to posts, similar to (and Zuck acknowledged this in his video) the rival social network X (formerly Twitter).

Zuck cast the changes as a return to Facebook’s “roots” around free expression and a reduction of over-broad “censorship.” See the full transcript of his remarks at the bottom of this article.

Why this announcement and policy change matters to businesses

With more than 3 billion users across its services and products worldwide, Meta remains the largest social network to date. In addition, as of 2022, more than 200 million businesses worldwide used the company’s apps and services — most of them small — and 10 million were active paying advertisers on the platform, according to one executive.

Meta’s new chief global affairs officer, Joel Kaplan, also published a note on Meta’s corporate website describing some of the changes in greater detail. Kaplan, a former Deputy Chief of Staff for Republican President George W. Bush, recently took on the role in what many viewed as a signal to lawmakers and the wider world of Meta’s willingness to work with the GOP-led Congress and White House following the 2024 election.

Already, some business executives such as Shopify’s CEO Tobi Lutke have seemingly embraced the announcement. As Lutke wrote on X today: “Huge and important change.”

Founders Fund chief marketing officer and tech influencer Mike Solana also hailed the move, writing in a post on X: “There’s already been a dramatic decrease in censorship across the meta platforms. but a public statement of this kind plainly speaking truth (the “fact checkers” were biased, and the policy was immoral) is really and finally the end of a golden age for the worst people alive.”

However, others are less receptive to the changes, viewing them as less about freedom of expression and more about currying favor with the GOP-led Congress and the incoming Republican administration of President-elect Donald J. Trump, who won a second, non-consecutive term, as other business executives and firms have seemingly moved to do.

“More free expression on social media is a good thing,” wrote the non-profit Freedom of the Press Foundation on the social network BlueSky (disclosure: my wife is a board member of the non-profit). “But based on Meta’s track record, it seems more likely that this is about sucking up to Donald Trump than it is about free speech.”

George Washington University political communication professor Dave Karpf seemed to agree, writing on BlueSky: “Two salient facts about Facebook replacing its fact-checking program with community notes: (1) community notes are cheaper. (2) the incoming political regime dislikes fact-checking. So community notes are less trouble. The rest is just framing. Zuck’s sole principle is to do what’s best for Zuck.”

And Kate Starbird, professor at the University of Washington and co-founder of the UW Center for an Informed Public, wrote on BlueSky that: “Meta is dropping its support for fact-checking, which, in addition to degrading users’ ability to verify content, will essentially defund all of the little companies that worked to identify false content online. But our FB feeds are basically just AI slop at this point, so?”

When will the changes take place?

Both Zuck and Kaplan stated in their respective video and text posts that the changes to Meta’s content moderation policies and practices would be coming to the U.S. in “the next couple of months.”

Meta will discontinue its independent fact-checking program in the United States, launched in 2016, in favor of a Community Notes model inspired by X (formerly Twitter). This system will rely on users to write and rate notes, requiring agreement across diverse perspectives to ensure balance and prevent bias.
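To make the “agreement across diverse perspectives” requirement concrete, here is a minimal sketch of one way such a rule could work. It is an illustration only, not Meta’s or X’s actual algorithm: the function name `note_is_shown`, the perspective labels, and both thresholds are hypothetical assumptions.

```python
from collections import defaultdict


def note_is_shown(ratings, min_groups=2, min_helpful_share=0.6):
    """Decide whether a community note is displayed.

    ratings: list of (perspective_group, rated_helpful) tuples.
    The group labels and both thresholds are hypothetical; a real
    system would have to infer perspectives from rating behavior.
    """
    votes_by_group = defaultdict(list)
    for group, rated_helpful in ratings:
        votes_by_group[group].append(rated_helpful)

    # Require raters from several distinct perspectives.
    if len(votes_by_group) < min_groups:
        return False

    # Every perspective must independently find the note helpful.
    return all(
        sum(votes) / len(votes) >= min_helpful_share
        for votes in votes_by_group.values()
    )


cross_group_support = [("A", True), ("A", True), ("B", True), ("B", False), ("B", True)]
one_sided_support = [("A", True), ("A", True), ("A", True), ("B", False), ("B", False)]

print(note_is_shown(cross_group_support))  # True
print(note_is_shown(one_sided_support))    # False
```

Under this rule, a note that is popular with only one group of raters is never shown, however many ratings it collects.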

According to its website, Meta had been working with a variety of organizations “certified through the non-partisan International Fact-Checking Network (IFCN) or European Fact-Checking Standards Network (EFCSN) to identify, review and take action” on content deemed “misinformation.”

However, as Zuck opined in his video post, “after Trump first got elected in 2016 the legacy media wrote non-stop about how misinformation was a threat to democracy. We tried, in good faith, to address those concerns without becoming the arbiters of truth, but the fact checkers have just been too politically biased and have destroyed more trust than they’ve created, especially in the U.S.”

Zuck also added that: “There’s been widespread debate about potential harms from online content. Governments and legacy media have pushed to censor more and more. A lot of this is clearly political.”

According to Kaplan, the shift aims to reduce the perceived censorship that arose from the previous fact-checking program, which often applied intrusive labels to legitimate political speech.

Loosening restrictions on political and sensitive topics

Meta is revising its content policies to allow more discourse on politically sensitive topics like immigration and gender identity. Kaplan pointed out that it is inconsistent for such topics to be debated in public forums like Congress or on television but restricted on Meta’s platforms.

Automated systems, which have previously been used to enforce policies across a wide range of issues, will now focus primarily on tackling illegal and severe violations, such as terrorism and child exploitation.

For less critical issues, the platform will rely more on user reports and human reviewers. Meta will also reduce content demotions for material flagged as potentially problematic unless there is strong evidence of a violation.

However, the reduction of automated systems would seem to fly in the face of Meta’s promotion of AI as a valuable tool in its own business offerings — why should anyone else trust Meta’s AI models such as the Llama family if Meta itself isn’t content to use them to moderate content?

A reduction in content takedowns coming?

As Zuck put it, a big problem with Facebook’s automated systems is overly broad censorship.

He stated in his video address: “We built a lot of complex systems to moderate content, but the problem with complex systems is they make mistakes. Even if they accidentally censor just 1% of posts, that’s millions of people, and we’ve reached a point where it’s just too many mistakes and too much censorship.”

Meta acknowledges that mistakes in content moderation have been a persistent issue. Kaplan noted that while less than 1% of daily content is removed, an estimated 10-20% of these actions may be errors. To address this, Meta plans to:

• Publish transparency reports detailing moderation mistakes and progress.

• Require multiple reviewers to confirm decisions before content is removed.

• Use advanced AI systems, including large language models, for second opinions on enforcement actions.
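As a rough worked example of Kaplan’s figures, the sketch below estimates how many removals could be mistaken for a given volume of content. The volume of one million items is a hypothetical round number, and “less than 1%” is treated as an upper bound of exactly 1%, so the results are ceilings rather than measurements.

```python
def mistaken_removals(total_items, removal_pct=1, error_pct_low=10, error_pct_high=20):
    """Return (removed, low_estimate, high_estimate) as whole items.

    removal_pct: upper bound on the share of content removed (Kaplan: under 1%).
    error_pct_low / error_pct_high: share of removals that may be errors (10-20%).
    Integer percentages keep the arithmetic exact.
    """
    removed = total_items * removal_pct // 100
    low = removed * error_pct_low // 100
    high = removed * error_pct_high // 100
    return removed, low, high


# Hypothetical volume: one million pieces of content.
removed, low, high = mistaken_removals(1_000_000)
print(removed, low, high)  # 10000 1000 2000
```

In other words, for every million items, at most 10,000 would be removed, and between 1,000 and 2,000 of those removals could be errors, or 0.1% to 0.2% of all content.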

Additionally, the company is relocating its trust and safety teams from California to other U.S. locations, including Texas, to address perceptions of bias. Some have already poked fun at the move on various social channels: are people in Texas really less biased than those in California?

The return of political content…and ‘fake news’?

Since 2021, Meta has limited the visibility of civic and political content on its platforms in response to user feedback.

However, the company now plans to reintroduce this content in a more personalized manner.

Users who wish to see more political content will have greater control over their feeds, with Meta using explicit signals like likes and implicit behaviors such as post views to determine preferences.

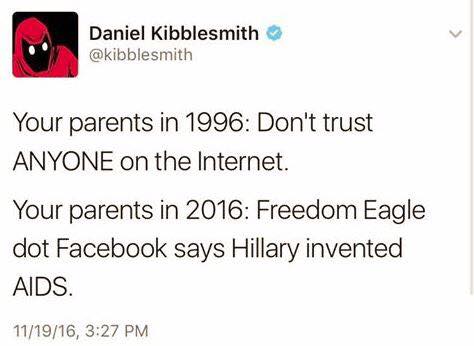

However, this reinstating of political content could run the risk of once again allowing for the spread of politically charged misinformation from U.S. adversaries — as we saw in the run-up to the 2016 election, when numerous Facebook pages spewed disinformation and conspiracy theories that favored Republicans and disfavored Democratic candidates and policies.

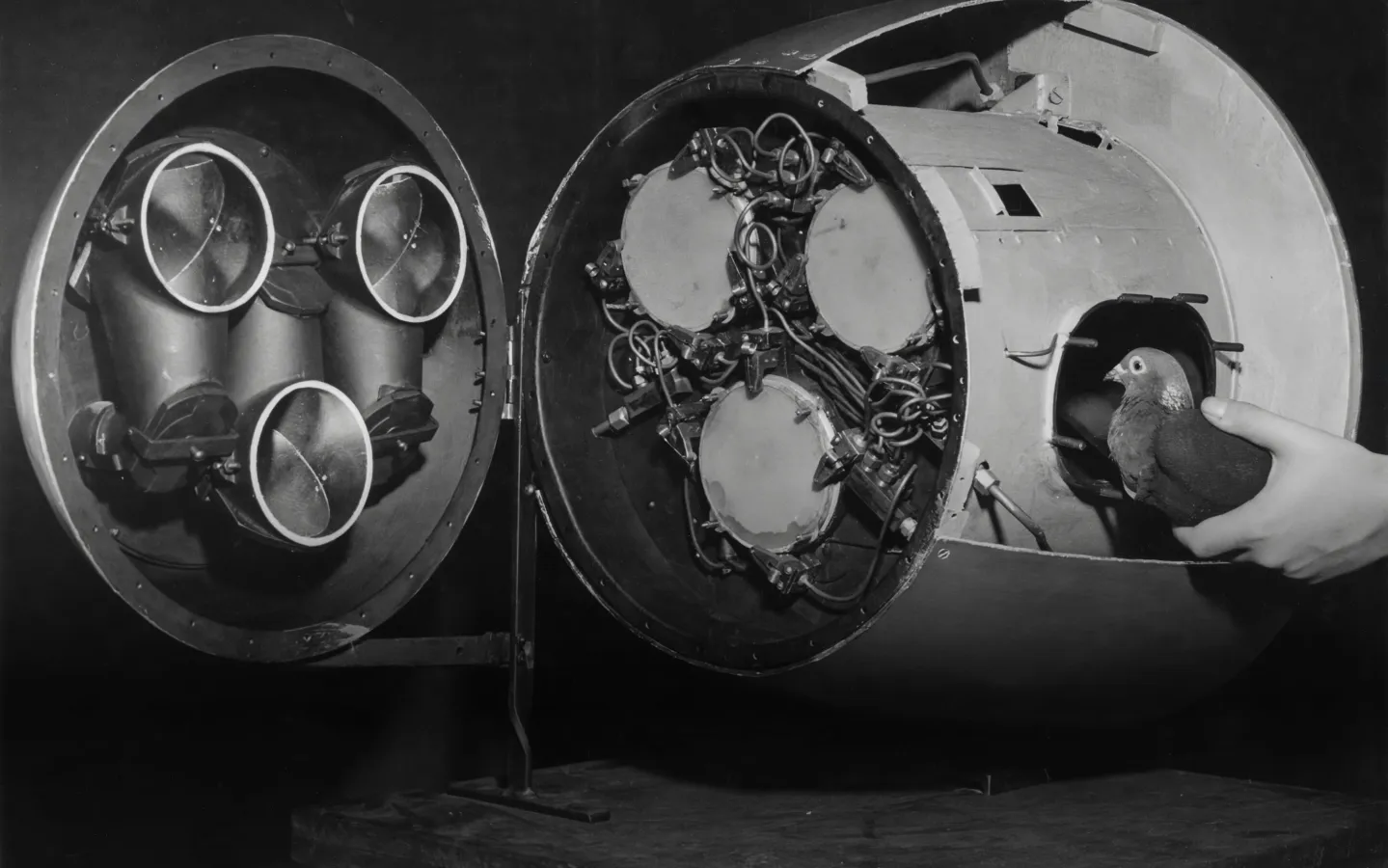

One admitted “fake news” creator told NPR that while they had tried to create content for both liberal and conservative audiences, the latter were more interested in, and more gullible about, sharing and re-sharing fake content that aligned with their views.

Such “fake news” was so widespread, it was even joked about on social media itself and in The Onion.

My analysis on what it means for businesses and brand pages

I’ve never owned a business, but I have managed several Facebook and Instagram accounts on behalf of large corporate and smaller startup/non-profit organizations, so I know firsthand about the work that goes into maintaining them, posting, and growing their audiences/followings.

I think that while Meta’s stated commitment to restoring more freedom of expression to its products is laudable, the jury is out on how it will actually affect businesses’ desire to speak to their fans and customers using said products.

At best, it will be a double-edged sword: less strict content moderation policies will give brands and businesses the chance to post more controversial, experimental, and daring content — and those that take advantage of this may see their messages reach wider audiences, i.e., “go viral.”

On the flip side, brands and businesses may now struggle to get their posts seen and reacted to in the face of other pages posting even more controversial, politically pointed content.

In addition, the changes could make it easier for users to criticize brands or implicate them in conspiracies, and it may be harder for the brands to force takedowns of such unflattering content about them — even when untrue.

What’s next?

The rollout of Community Notes and policy adjustments is expected to begin in the coming months in the U.S. Meta plans to improve and refine these systems throughout the year.

These initiatives, Kaplan said, aim to balance the need for safety and accuracy with the company’s core value of enabling free expression.

Kaplan said Meta is focused on creating a platform where individuals can freely express themselves. He also acknowledged the challenges of managing content at scale, describing the process as “messy” but essential to Meta’s mission.

For users, these changes promise fewer intrusive interventions and a greater opportunity to shape the conversation on Meta’s platforms.

Whether the new approach will succeed in reducing frustration and fostering open dialogue remains to be seen.

Full transcript of Zuckerberg’s video remarks

Hey, everyone. I want to talk about something important today, because it’s time to get back to our roots around free expression on Facebook and Instagram. I started building social media to give people a voice. I gave a speech at Georgetown five years ago about the importance of protecting free expression, and I still believe this today, but a lot has happened over the last several years.

There’s been widespread debate about potential harms from online content. Governments and legacy media have pushed to censor more and more. A lot of this is clearly political, but there’s also a lot of legitimately bad stuff out there: drugs, terrorism, child exploitation. These are things that we take very seriously, and I want to make sure that we handle responsibly. So we built a lot of complex systems to moderate content, but the problem with complex systems is they make mistakes. Even if they accidentally censor just 1% of posts, that’s millions of people, and we’ve reached a point where it’s just too many mistakes and too much censorship.

The recent elections also feel like a cultural tipping point towards, once again, prioritizing speech. So we’re going to get back to our roots and focus on reducing mistakes, simplifying our policies, and restoring free expression on our platforms. More specifically, here’s what we’re going to do.

First, we’re going to get rid of fact-checkers and replace them with community notes similar to X, starting in the US. After Trump first got elected in 2016, the legacy media wrote nonstop about how misinformation was a threat to democracy. We tried, in good faith, to address those concerns without becoming the arbiters of truth, but the fact-checkers have just been too politically biased and have destroyed more trust than they’ve created, especially in the US. So over the next couple of months, we’re going to phase in a more comprehensive community notes system.

Second, we’re going to simplify our content policies and get rid of a bunch of restrictions on topics like immigration and gender that are just out of touch with mainstream discourse. What started as a movement to be more inclusive has increasingly been used to shut down opinions and shut out people with different ideas, and it’s gone too far. So I want to make sure that people can share their beliefs and experiences on our platforms.

Third, we’re changing how we enforce our policies to reduce the mistakes that account for the vast majority of censorship on our platforms. We used to have filters that scanned for any policy violation. Now we’re going to focus those filters on tackling illegal and high-severity violations, and for lower-severity violations, we’re going to rely on someone reporting an issue before we take action. The problem is that the filters make mistakes, and they take down a lot of content that they shouldn’t. So by dialing them back, we’re going to dramatically reduce the amount of censorship on our platforms. We’re also going to tune our content filters to require much higher confidence before taking down content. The reality is that this is a tradeoff. It means we’re going to catch less bad stuff, but we’ll also reduce the number of innocent people’s posts and accounts that we accidentally take down.

Fourth, we’re bringing back civic content. For a while, the community asked to see less politics because it was making people stressed, so we stopped recommending these posts. But it feels like we’re in a new era now, and we’re starting to get feedback that people want to see this content again. So we’re going to start phasing this back into Facebook, Instagram, and Threads, while working to keep the communities friendly and positive.

Fifth, we’re going to move our trust and safety and content moderation teams out of California, and our US-based content review is going to be based in Texas. As we work to promote free expression, I think that will help us build trust to do this work in places where there is less concern about the bias of our teams.

Finally, we’re going to work with President Trump to push back on governments around the world that are going after American companies and pushing to censor more. The US has the strongest constitutional protections for free expression in the world. Europe has an ever-increasing number of laws institutionalizing censorship and making it difficult to build anything innovative there. Latin American countries have secret courts that can order companies to quietly take things down. China has censored our apps from even working in the country. The only way that we can push back on this global trend is with the support of the US government, and that’s why it’s been so difficult over the past four years. When even the US government has pushed for censorship by going after us and other American companies, it has emboldened other governments to go even further. But now we have the opportunity to restore free expression, and I am excited to take it.

It’ll take time to get this right, and these are complex systems. They’re never going to be perfect. There’s also a lot of illegal stuff that we still need to work very hard to remove. But the bottom line is that after years of having our content moderation work focused primarily on removing content, it is time to focus on reducing mistakes, simplifying our systems, and getting back to our roots about giving people voice.

I’m looking forward to this next chapter. Stay good out there and more to come soon.
