
Zip, the $2.2 billion procurement platform startup, unveiled a suite of 50 specialized artificial intelligence agents on Tuesday designed to automate the tedious manual work that plagues enterprise purchasing departments worldwide. This marks what industry analysts call the most significant advancement in procurement technology in decades.

The AI agents, announced at Zip’s inaugural AI Summit in New York, can autonomously handle complex tasks ranging from contract reviews and tariff assessments to regulatory compliance checks, work that currently consumes millions of hours across corporate America. Early adopters, including OpenAI, Canva and Webflow, are already testing the technology, which Zip says represents a fundamental shift from AI-assisted workflows to fully autonomous task completion.

“Today Zip is cutting through the agentic AI hype with AI agents that actually work,” said Rujul Zaparde, Zip’s co-founder and CEO, in an exclusive interview with VentureBeat. “Not vague chatbots. Not generic assistants. Real, specialized AI agents that do one job and do it perfectly.”

The announcement comes as enterprises increasingly struggle with procurement bottlenecks that can involve 30 or more approval steps for major purchases, particularly in heavily regulated industries like financial services. Procurement represents the second-largest corporate expense category after payroll, yet it is still largely handled through manual, error-prone processes, leaving trillions of dollars in spending poorly managed.

How AI agents tackle the 30-step procurement approval process

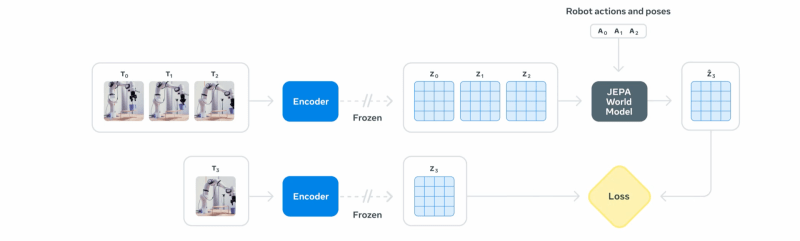

Zip’s approach centers on what the company calls “agentic procurement orchestration”—embedding specialized AI agents directly into existing procurement workflows rather than requiring employees to adopt separate AI tools. The system addresses a critical enterprise challenge: while companies have top-down mandates to adopt AI, most employees don’t know how to integrate tools like ChatGPT effectively into their daily procurement tasks.

“The unique insight we’ve had is that the technology actually is good enough to solve very specific tasks,” explained Lu Cheng, Zip’s co-founder and chief technology officer, in an interview with VentureBeat. “It’s effectively a junior level employee that’s very good at following specific instructions.”

The agents tackle diverse procurement pain points with surgical precision. A tariff analysis agent dynamically assesses how global trade policies affect vendor pricing, while a GDPR compliance agent flags potential privacy risks in vendor documents. An intake validation agent can spot discrepancies in purchase requests, for instance catching when an employee claims a software purchase won’t involve sharing customer data even though the vendor’s documentation indicates otherwise.

For one enterprise customer that processed 1,410 procurement requests in its first month with Zip, every request’s pricing, categorization and compliance details would traditionally have required human review. With Zip’s agents, that work happens automatically.

From 4.6 million AI insights to $4.4 billion in enterprise savings

Zip’s aggressive push into AI automation builds on substantial momentum. Since it was founded in 2020, the company has already delivered over 4.6 million AI insights to customers and helped enterprises save $4.4 billion in trackable procurement costs. In 2024 alone, Zip processed 14 million reviews across its customer base — work that previously required human analysts to manually examine contracts, security documentation and compliance materials.

“We had a customer just went live — an 8,000 person, well-regarded tech company — in their first month they processed 1,410 requests,” Zaparde said. “The first step for all 1,410 requests was someone in procurement checking if the price was correct, if the categories aligned. With this agent, they basically don’t have to do that 1,410 times.”

The company has set an ambitious goal: within five years, by which point it expects to process more than one billion reviews annually, Zip wants 90% of those reviews handled entirely by AI agents. That scale of automation could reshape how enterprises manage supplier relationships and spending decisions.

Why Zip’s data access gives it an edge over SAP and Oracle

Zip’s agents gain effectiveness through privileged access to comprehensive enterprise data that competitors cannot easily replicate. As the orchestration layer connecting finance, legal, procurement, IT and security teams, Zip already integrates with an average of seven enterprise systems per customer, including contract management platforms, risk assessment tools and ERP financial systems.

“We have a really deep understanding of what a legal review, what a security review actually constitutes because we literally have the documents that they’re reviewing thousands or hundreds of thousands of times across our customer base,” Zaparde explained. This data advantage allows Zip agents to access contract renewal dates, payment histories, vendor relationships spanning decades, and real-time regulatory changes — context that isolated enterprise systems cannot provide.

The company built its agents using a no-code platform that enterprise customers can customize for their specific needs. Configuration typically takes two to four hours per agent, though complex implementations can require up to 20 hours for customers with intricate approval processes.

OpenAI and Canva lead early adoption of automated procurement

OpenAI, which has partnered closely with Zip through the startup’s AI Lab initiative, exemplifies the early adoption trend. “We’ve worked closely with the Zip team to power their agentic platform and it’s been really exciting to see how quickly they’ve turned real-world procurement pain points into focused AI task agents,” said Kathryn Devlin, Head of Procure-to-Pay Operations, Travel and Expense at OpenAI.

The collaboration reflects a broader enterprise imperative: procurement automation has become strategically critical as companies face mounting pressure to optimize spending and control costs. Research firm IDC projects the global procurement software market will grow from $8.03 billion in 2024 to $18.28 billion by 2032, with AI-powered solutions driving much of that expansion.

Idan Cohen, technology procurement leader at Wiz, highlighted the strategic shift AI enables: “We’ll save so much time on the technical work and day-to-day tasks that we need to do as part of the procurement process, and be enabled to really focus on what we’re supposed to do — being a true partner to the business and to our vendors.”

Building enterprise trust with citations and human oversight

Zip has designed its agent architecture to address enterprise concerns about AI accuracy and data security. The system provides detailed citations for every recommendation, allowing human reviewers to verify the sources behind AI decisions. Customers can configure agents to either provide insights for human review or automatically approve certain transactions based on predefined parameters.

“If our agent is saying we don’t believe there is security risk because of X, Y and Z, it’s forced to actually cite where it got X from,” Zaparde said. “You can see, ‘Oh, in the MSA and the contract, it says this. That’s why I think it’s not risky.’”

The company maintains strict data isolation, never training its AI models on customer data to prevent cross-company information leakage — a critical consideration for enterprises handling sensitive supplier relationships and pricing negotiations.

A $2.2 billion valuation positions Zip against procurement giants

Zip’s agent launch comes from a position of significant market strength. The company raised $190 million in Series D funding in October 2024 at a $2.2 billion valuation, marking what the company called the largest investment in procurement technology in over two decades. The funding round, led by BOND, attracted new investors including DST Global and Alkeon alongside existing backers Y Combinator and CRV.

Rather than competing directly with enterprise resource planning giants like SAP and Oracle, Zip positions itself as a complementary orchestration layer. “Those systems are great systems, but they’re systems of record,” Zaparde explained. “Zip is really the orchestration, the procurement orchestration layer that sits on top of those systems.”

The company’s customer roster includes hundreds of large enterprises across technology, financial services, and healthcare sectors. Notable clients include Snowflake, Discover, Reddit, Northwestern Mutual and Arm Holdings, collectively processing over $107 billion in spending through Zip’s platform.

The future of enterprise automation beyond procurement

Industry analysts view Zip’s agent suite as validation of a broader shift toward task-specific AI automation in enterprise software. “Zip created an entirely new category of procurement applications, so it is appropriate to see them pressing forward and launching a suite of AI Agents,” said Patrick Reymann, Research Director for Procurement and Enterprise Applications at IDC.

The agents will become generally available in late 2025; select customers are testing them now through an early-access beta. Zip plans to expand beyond its initial 50 agents, developing new capabilities in partnership with consulting firms KPMG and The Hackett Group.

Zip’s first quarter of 2025 was its largest ever, with 155% growth in its strategic enterprise segment, and that trajectory suggests AI-powered procurement automation has moved from experimental to essential. The success of Zip’s specialized agent architecture could accelerate similar automation initiatives across other enterprise functions, potentially reshaping how large organizations handle complex, multi-stakeholder business processes far beyond purchasing departments.

But perhaps the most telling indicator of the technology’s transformative potential lies in a simple prediction from Zaparde: “I think in 10 years, people are going to look back and be like, ‘Wait, humans were approving all this stuff?’” If Zip’s vision proves correct, the question won’t be whether AI agents will automate enterprise workflows — it will be why it took so long to deploy them.
