
The generative AI boom has given us powerful language models that can write, summarize and reason over vast amounts of text and other types of data. But when it comes to high-value predictive tasks like predicting customer churn or detecting fraud from structured, relational data, enterprises remain stuck in the world of traditional machine learning.

Stanford professor and Kumo AI co-founder Jure Leskovec argues that this is the critical missing piece. His company’s tool, a relational foundation model (RFM), is a new kind of pre-trained AI that brings the “zero-shot” capabilities of large language models (LLMs) to structured databases.

“It’s about making a forecast about something you don’t know, something that has not happened yet,” Leskovec told VentureBeat. “And that’s a fundamentally new capability that is, I would argue, missing from the current purview of what we think of as gen AI.”

Why predictive ML is a “30-year-old technology”

While LLMs and retrieval-augmented generation (RAG) systems can answer questions about existing knowledge, they are fundamentally retrospective. They retrieve and reason over information that is already there. For predictive business tasks, companies still rely on classic machine learning.

For example, to build a model that predicts customer churn, a business must hire a team of data scientists who spend considerable time on “feature engineering,” the process of manually creating predictive signals from the data. This involves complex data wrangling to join information from different tables, such as a customer’s purchase history and website clicks, into a single, massive training table.
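To make the manual step concrete, here is a minimal sketch of hand-built feature engineering in plain Python. The tables, column names and chosen features are all hypothetical and are not taken from Kumo or any real pipeline; the point is only that every signal has to be picked and coded by a person.

```python
from collections import defaultdict
from datetime import date

# Hypothetical toy tables; all names and values are illustrative.
customers = [
    {"customer_id": 1, "signup": date(2024, 1, 5)},
    {"customer_id": 2, "signup": date(2024, 3, 20)},
]
purchases = [
    {"customer_id": 1, "amount": 40.0, "day": date(2024, 5, 1)},
    {"customer_id": 1, "amount": 15.5, "day": date(2024, 6, 10)},
    {"customer_id": 2, "amount": 99.0, "day": date(2024, 4, 2)},
]
clicks = [
    {"customer_id": 1, "day": date(2024, 6, 11)},
    {"customer_id": 1, "day": date(2024, 6, 12)},
]


def build_training_table(customers, purchases, clicks, as_of):
    """Join three tables into one flat row of hand-picked features per customer."""
    spend = defaultdict(float)
    last_purchase = {}
    for p in purchases:
        cid = p["customer_id"]
        spend[cid] += p["amount"]
        last_purchase[cid] = max(last_purchase.get(cid, p["day"]), p["day"])

    click_count = defaultdict(int)
    for c in clicks:
        click_count[c["customer_id"]] += 1

    rows = []
    for cust in customers:
        cid = cust["customer_id"]
        rows.append({
            "customer_id": cid,
            "tenure_days": (as_of - cust["signup"]).days,
            "total_spend": spend[cid],
            # None when the customer has never purchased anything.
            "days_since_last_purchase": (
                (as_of - last_purchase[cid]).days if cid in last_purchase else None
            ),
            "click_count": click_count[cid],
        })
    return rows


training_table = build_training_table(customers, purchases, clicks, date(2024, 7, 1))
```

Each new table or signal means another aggregation written by hand, which is the bottleneck the article describes.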

“If you want to do machine learning (ML), sorry, you are stuck in the past,” Leskovec said. Expensive and time-consuming bottlenecks prevent most organizations from being truly agile with their data.

How Kumo is generalizing transformers for databases

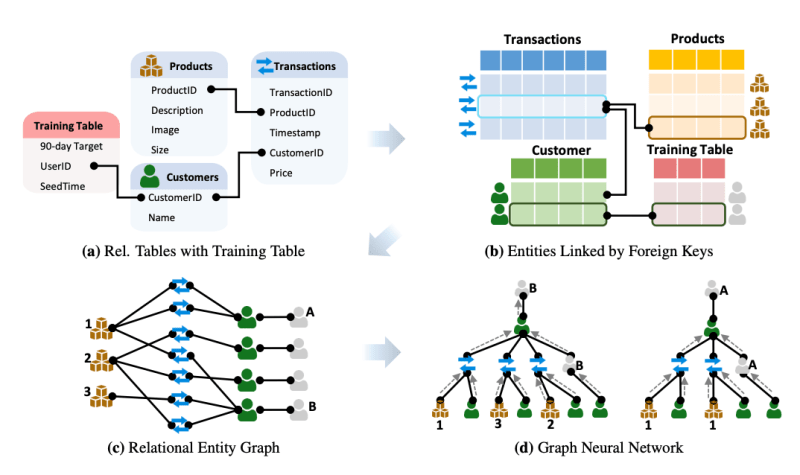

Kumo’s approach, “relational deep learning,” sidesteps this manual process with two key insights. First, it automatically represents any relational database as a single, interconnected graph. For example, if the database has a “users” table to record customer information and an “orders” table to record customer purchases, every row in the users table becomes a user node, every row in the orders table becomes an order node, and so on. These nodes are then automatically connected using the database’s existing relationships, such as foreign keys, creating a rich map of the entire dataset with no manual effort.
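The row-to-node, foreign-key-to-edge idea can be sketched in a few lines of Python. This is an illustration under assumed names (`database_to_graph`, the toy `users` and `orders` tables), not Kumo’s implementation, which the article does not detail.

```python
# Hypothetical toy database: table name -> list of rows (dicts).
database = {
    "users": [
        {"user_id": 1, "name": "Ada"},
        {"user_id": 2, "name": "Lin"},
    ],
    "orders": [
        {"order_id": 10, "user_id": 1, "total": 25.0},
        {"order_id": 11, "user_id": 1, "total": 8.0},
        {"order_id": 12, "user_id": 2, "total": 40.0},
    ],
}
primary_keys = {"users": "user_id", "orders": "order_id"}
# Each entry: (child table, foreign-key column, parent table).
foreign_keys = [("orders", "user_id", "users")]


def database_to_graph(database, primary_keys, foreign_keys):
    """Turn every row into a node and every foreign-key reference into an edge."""
    nodes = {}
    for table, rows in database.items():
        pk = primary_keys[table]
        for row in rows:
            nodes[(table, row[pk])] = row

    edges = []
    for child, fk_col, parent in foreign_keys:
        child_pk = primary_keys[child]
        for row in database[child]:
            parent_node = (parent, row[fk_col])
            if parent_node in nodes:  # skip dangling references
                edges.append(((child, row[child_pk]), parent_node))
    return nodes, edges


nodes, edges = database_to_graph(database, primary_keys, foreign_keys)
```

Here the two users and three orders become five nodes, and each order is linked to the user who placed it, with no features chosen by hand.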

Second, Kumo generalized the transformer architecture, the engine behind LLMs, to learn directly from this graph representation. Transformers excel at understanding sequences of tokens by using an “attention mechanism” to weigh the importance of different tokens in relation to each other.
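For readers unfamiliar with attention, the following is a minimal sketch of standard scaled dot-product attention for a single query, written in plain Python. It shows the generic mechanism used in transformers, not Kumo’s graph variant; the vectors are made up, and in a graph setting the keys and values would presumably come from neighboring nodes rather than tokens.

```python
import math


def softmax(scores):
    """Convert raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    d = len(query)
    scores = [
        sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
        for key in keys
    ]
    weights = softmax(scores)
    # The output is the weighted average of the value vectors.
    output = [
        sum(w * v[i] for w, v in zip(weights, values))
        for i in range(len(values[0]))
    ]
    return weights, output


# Illustrative vectors only.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
weights, output = attention([1.0, 0.0], keys, values)
```

Items whose keys align with the query receive larger weights, so the first and third items contribute more to the output than the second.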

Kumo’s RFM applies this same attention mechanism to the graph, allowing it to learn complex patterns and relationships across multiple tables simultaneously. Leskovec compares this leap to the evolution of computer vision. In the early 2000s, ML engineers had to manually design features like edges and shapes to detect an object. But newer architectures like convolutional neural networks (CNNs) can take in raw pixels and automatically learn the relevant features.

Similarly, the RFM ingests raw database tables and lets the network discover the most predictive signals on its own without the need for manual effort.

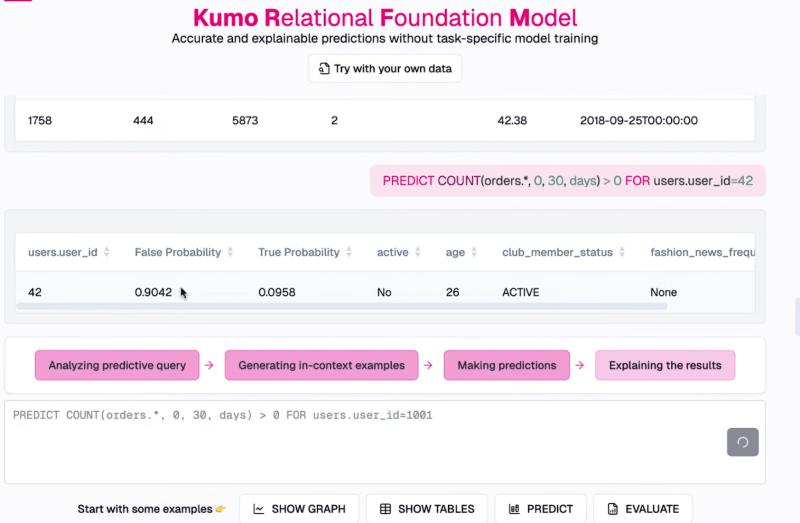

The result is a pre-trained foundation model that can perform predictive tasks on a new database instantly, a capability known as “zero-shot” prediction. During a demo, Leskovec showed how a user could type a simple query to predict whether a specific customer would place an order in the next 30 days. Within seconds, the system returned a probability score and an explanation of the data points that led to its conclusion, such as the user’s recent activity or lack thereof. The model had not been trained on the provided database; it adapted to it in real time through in-context learning.

“We have a pre-trained model that you simply point to your data, and it will give you an accurate prediction 200 milliseconds later,” Leskovec said. He added that it can be “as accurate as, let’s say, weeks of a data scientist’s work.”

The interface is designed to be familiar to data analysts, not just machine learning specialists, democratizing access to predictive analytics.

Powering the agentic future

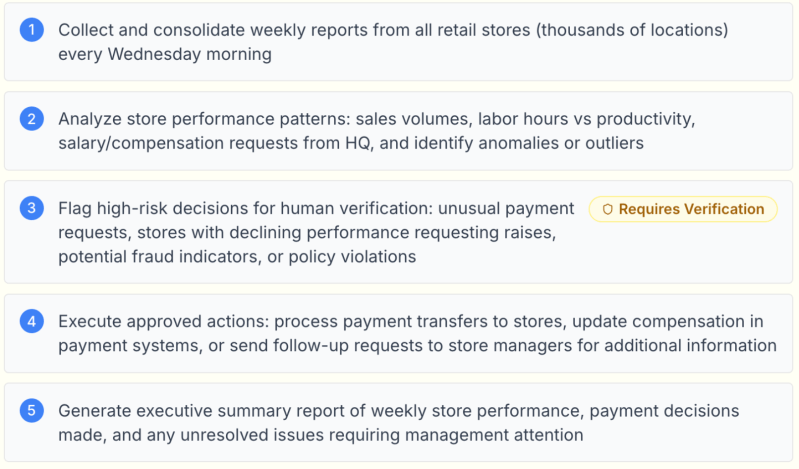

This technology has significant implications for the development of AI agents. For an agent to perform meaningful tasks within an enterprise, it needs to do more than just process language; it must make intelligent decisions based on the company’s private data. The RFM can serve as a predictive engine for these agents. For example, a customer service agent could query the RFM to determine a customer’s likelihood of churning or their potential future value, then use an LLM to tailor its conversation and offers accordingly.
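The agent flow described above can be sketched as follows. Everything here is hypothetical: `predict_churn_probability` is a stub returning fixed toy scores in place of a real predictive model, the threshold and offer names are invented, and the resulting prompt would be handed to whatever LLM the agent uses.

```python
def predict_churn_probability(customer_id):
    """Hypothetical stand-in for a predictive model call; returns fixed toy scores."""
    toy_scores = {"c-001": 0.82, "c-002": 0.10}
    return toy_scores.get(customer_id, 0.5)


def choose_offer(churn_probability, threshold=0.7):
    """Pick an offer based on the predicted risk (the threshold is an assumption)."""
    if churn_probability >= threshold:
        return "retention_discount"
    return "standard_upsell"


def build_prompt(customer_id):
    """Combine the prediction and the chosen offer into instructions for an LLM."""
    probability = predict_churn_probability(customer_id)
    offer = choose_offer(probability)
    return (
        f"Customer {customer_id} has an estimated churn probability of "
        f"{probability:.2f}. Recommended offer: {offer}. "
        "Write a short, friendly reply."
    )


prompt = build_prompt("c-001")
```

The split mirrors the article’s framing: the predictive model supplies the forecast, and the language model handles the conversation.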

“If we believe in an agentic future, agents will need to make decisions rooted in private data. And this is the way for an agent to make decisions,” Leskovec explained.

Kumo’s work points to a future where enterprise AI is split into two complementary domains: LLMs for handling retrospective knowledge in unstructured text, and RFMs for predictive forecasting on structured data. By eliminating the feature engineering bottleneck, the RFM promises to put powerful ML tools into the hands of more enterprises, drastically reducing the time and cost to get from data to decision.

The company has released a public demo of the RFM and plans to launch a version that allows users to connect their own data in the coming weeks. For organizations that require maximum accuracy, Kumo will also offer a fine-tuning service to further boost performance on private datasets.
