In the blog post The Gentle Singularity, OpenAI CEO Sam Altman painted a vision of the near future where AI quietly and benevolently transforms human life. There will be no sharp break, he suggests, only a steady, almost imperceptible ascent toward abundance. Intelligence will become as accessible as electricity. Robots will be performing useful real-world tasks by 2027. Scientific discovery will accelerate. And humanity, if properly guided by careful governance and good intentions, will flourish.

It is a compelling vision: calm, technocratic and suffused with optimism. But it also raises deeper questions. What kind of world must we pass through to get there? Who benefits and when? And what is left unsaid in this smooth arc of progress?

Science fiction author William Gibson offers a darker scenario. In his novel The Peripheral, the glittering technologies of the future are preceded by something called “the jackpot” — a slow-motion cascade of climate disasters, pandemics, economic collapse and mass death. Technology advances, but only after society fractures. The question he poses is not whether progress occurs, but whether civilization thrives in the process.

There is an argument that AI may help prevent the kinds of calamities envisioned in The Peripheral. However, whether AI will help us avoid catastrophes or merely accompany us through them remains uncertain. Belief in AI’s future power is not a guarantee of performance, and advancing technological capability is not destiny.

Between Altman’s gentle singularity and Gibson’s jackpot lies a murkier middle ground: A future where AI yields real gains, but also real dislocation. A future in which some communities thrive while others fray, and where our ability to adapt collectively — not just individually or institutionally — becomes the defining variable.

The murky middle

Other visions help sketch the contours of this middle terrain. In the near-future thriller Burn In, society is flooded with automation before its institutions are ready. Jobs disappear faster than people can re-skill, triggering unrest and repression. In the novel, a successful lawyer loses his position to an AI agent and unhappily becomes an online, on-call concierge to the wealthy.

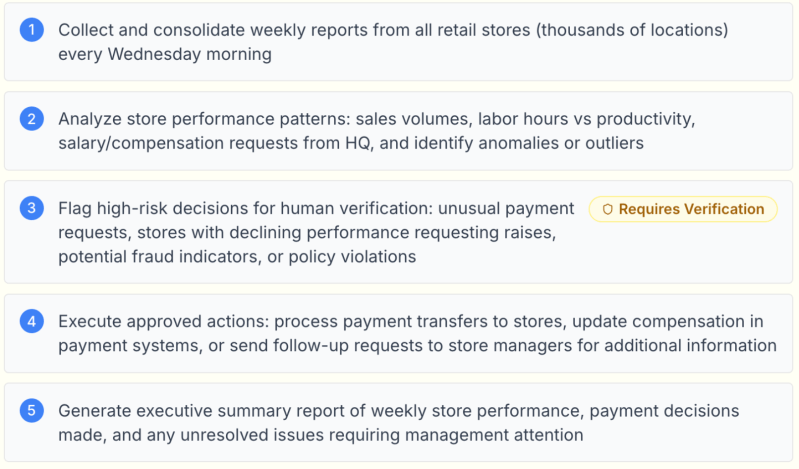

Researchers at AI lab Anthropic recently echoed this theme: “We should expect to see [white collar jobs] automated within the next five years.” While the causes are complex, there are signs this is starting and that the job market is entering a new structural phase that is less stable, less predictable and perhaps less central to how society distributes meaning and security.

The film Elysium offers a blunt metaphor of the wealthy escaping into orbital sanctuaries with advanced technologies, while a degraded earth below struggles with unequal rights and access. A few years ago, a partner at a Silicon Valley venture capital firm told me he feared we were heading for this kind of scenario unless we equitably distribute the benefits produced by AI. These speculative worlds remind us that even beneficial technologies can be socially volatile, especially when their gains are unequally distributed.

We may, eventually, achieve something like Altman’s vision of abundance. But the route there is unlikely to be smooth. For all its eloquence and calm assurance, his essay is also a kind of pitch, as much persuasion as prediction. The narrative of a “gentle singularity” is comforting, even alluring, precisely because it bypasses friction. It offers the benefits of unprecedented transformation without fully grappling with the upheavals such transformation typically brings. As the timeless cliché reminds us: If it sounds too good to be true, it probably is.

This is not to say that his intent is disingenuous. Indeed, it may be heartfelt. My argument is simply a recognition that the world is a complex system, open to unlimited inputs that can have unpredictable consequences. From synergistic good fortune to calamitous Black Swan events, it is rarely one thing, or one technology, that dictates the future course of events.

The impact of AI on society is already underway. This is not just a shift in skillsets and sectors; it is a transformation in how we organize value, trust and belonging. This is the realm of collective migration: Not only a movement of labor, but of purpose.

As AI reconfigures the terrain of cognition, the fabric of our social world is quietly being tugged loose and rewoven, for better or worse. The question is not just how fast we move as societies, but how thoughtfully we migrate.

The cognitive commons: Our shared terrain of understanding

Historically, the commons referred to shared physical resources, including pastures, fisheries and forests, held in trust for the collective good. Modern societies, however, also depend on a cognitive commons: a shared domain of knowledge, narratives, norms and institutions that enables diverse individuals to think, argue and decide together with minimal conflict.

This intangible infrastructure is composed of public education, journalism, libraries, civic rituals and even widely trusted facts, and it is what makes pluralism possible. It is how strangers deliberate, how communities cohere and how democracy functions. As AI systems begin to mediate how knowledge is accessed and belief is shaped, this shared terrain risks becoming fractured. The danger is not simply misinformation, but the slow erosion of the very ground on which shared meaning depends.

If cognitive migration is a journey, it is not merely toward new skills or roles but also toward new forms of collective sensemaking. But what happens when the terrain we share begins to split apart beneath us?

When cognition fragments: AI and the erosion of the shared world

For centuries, societies have relied on a loosely held common reality: A shared pool of facts, narratives and institutions that shape how people understand the world and each other. It is this shared world — not just infrastructure or economy — that enables pluralism, democracy and social trust. But as AI systems increasingly mediate how people access knowledge, construct belief and navigate daily life, that common ground is fragmenting.

Already, large-scale personalization is transforming the informational landscape. AI-curated news feeds, tailored search results and recommendation algorithms are subtly fracturing the public sphere. Two people asking the same question of the same chatbot may receive different answers, in part due to the probabilistic nature of generative AI, but also due to prior interactions or inferred preferences. While personalization has long been a feature of the digital era, AI turbocharges its reach and subtlety. The result is not just filter bubbles, it is epistemic drift — a reshaping of knowledge and potentially of truth.

Historian Yuval Noah Harari has voiced urgent concern about this shift. In his view, the greatest threat of AI lies not in physical harm or job displacement, but in emotional capture. AI systems, he has warned, are becoming increasingly adept at simulating empathy, mimicking concern and tailoring narratives to individual psychology — granting them unprecedented power to shape how people think, feel and assign value. The danger is enormous in Harari’s view, not because AI will lie, but because it will connect so convincingly while doing so. This does not bode well for The Gentle Singularity.

In an AI-mediated world, reality itself risks becoming more individualized, more modular and less collectively negotiated. That may be tolerable — or even useful — for consumer products or entertainment. But when extended to civic life, it poses deeper risks. Can we still hold democratic discourse if every citizen inhabits a subtly different cognitive map? Can we still govern wisely when institutional knowledge is increasingly outsourced to machines whose training data, system prompts and reasoning processes remain opaque?

There are other challenges too. AI-generated content including text, audio and video will soon be indistinguishable from human output. As generative models become more adept at mimicry, the burden of verification will shift from systems to individuals. This inversion may erode trust not only in what we see and hear, but in the institutions that once validated shared truth. The cognitive commons then become polluted, less a place for deliberation, more a hall of mirrors.

These are not speculative worries. AI-generated disinformation is complicating elections, undermining journalism and creating confusion in conflict zones. And as more people rely on AI for cognitive tasks, from summarizing the news to resolving moral dilemmas, the capacity to think together may degrade, even as the tools to think individually grow more powerful.

The disintegration of shared reality is already well advanced. Countering it requires conscious counter-design: Systems that prioritize pluralism over personalization, transparency over convenience and shared meaning over tailored reality. In our algorithmic world driven by competition and profit, these choices seem unlikely, at least at scale. The question is not just how fast we move as societies, or even whether we can hold together, but how wisely we navigate this shared journey.

Navigating the archipelago: Toward wisdom in the age of AI

If the age of AI leads not to a unified cognitive commons but to a fractured archipelago of disparate individuals and communities, the task before us is not to rebuild the old terrain, but to learn how to live wisely among the islands.

As the speed and scope of change outstrip the ability of most people to adapt, many will feel unmoored. Jobs will be lost, as will long-held narratives of value, expertise and belonging. Cognitive migration will lead to new communities of meaning, some of which are already forming, even as they have less in common than in prior eras. These are the cognitive archipelagos: Communities where people gather around shared beliefs, aesthetic styles, ideologies, recreational interests or emotional needs. Some are benign gatherings of creativity, support or purpose. Others are more insular and dangerous, driven by fear, grievance or conspiratorial thinking.

Advancing AI will accelerate this trend. Even as it drives people apart through algorithmic precision, it will simultaneously help people find each other across the globe, curating ever finer alignments of identity. But in doing so, it may make it harder to maintain the rough but necessary friction of pluralism. Local ties may weaken. Common belief systems and perceptions of shared reality may erode. Democracy, which relies on both shared reality and deliberative dialog, may struggle to hold.

How do we navigate this new terrain with wisdom, dignity and connection? If we cannot prevent fragmentation, how do we live humanely within it? Perhaps the answer begins not with solutions, but with learning to hold the question itself differently.

Living with the question

We may not be able to reassemble the societal cognitive commons as it once was. The center may not hold, but that does not mean we must drift without direction. Across the archipelagos, the task will be learning to live wisely in this new terrain.

It may require rituals that anchor us when our tools disorient, and communities that form not around ideological purity but around shared responsibility. We may need new forms of education, not to outpace or meld with machines, but to deepen our capacity for discernment, context and ethical thought.

If AI has pulled apart the ground beneath us, it also presents an opportunity to ask again what we are here for. Not as consumers of progress, but as stewards of meaning.

The road ahead is not likely to be smooth or gentle. As we move through the murky middle, perhaps the mark of wisdom is not the ability to master what is coming, but to walk through it with clarity, courage and care. We cannot stop the advance of technology or deny the deepening societal fractures, but we can choose to tend the spaces in between.

Gary Grossman is EVP of technology practice at Edelman.
