How do you balance risk management and safety with innovation in agentic systems — and how do you grapple with core considerations around data and model selection? In this VB Transform session, Milind Naphade, SVP, technology, of AI Foundations at Capital One, offered best practices and lessons learned from real-world experiments and applications for deploying and scaling an agentic workflow.

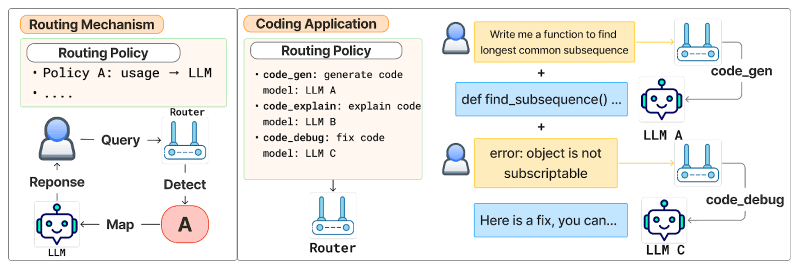

Capital One, committed to staying at the forefront of emerging technologies, recently launched a production-grade, state-of-the-art multi-agent AI system to enhance the car-buying experience. In this system, multiple AI agents work together not only to provide information to the car buyer but also to take specific actions based on the customer’s preferences and needs. For example, one agent communicates with the customer. Another creates an action plan based on business rules and the tools it is allowed to use. A third agent evaluates the accuracy of the first two, and a fourth agent explains and validates the action plan with the user. With more than 100 million customers and a wide range of other potential use cases across Capital One, the agentic system is built for scale and complexity.

“When we think of improving the customer experience, delighting the customer, we think of, what are the ways in which that can happen?” Naphade said. “Whether you’re opening an account or you want to know your balance or you’re trying to make a reservation to test a vehicle, there are a bunch of things that customers want to do. At the heart of this, very simply, how do you understand what the customer wants? How do you understand the fulfillment mechanisms at your disposal? How do you bring all the rigors of a regulated entity like Capital One, all the policies, all the business rules, all the constraints, regulatory and otherwise?”

Agentic AI was clearly the next step, he said, for internal as well as customer-facing use cases.

Designing an agentic workflow

Financial institutions have particularly stringent requirements when designing any workflow that supports customer journeys. Capital One’s applications also involve a number of complex processes, as customers raise issues and queries through conversational tools. These two factors made the design process especially complex, requiring a holistic view of the entire journey, including how both customers and human agents respond, react, and reason at every step.

“When we looked at how humans do reasoning, we were struck by a few salient facts,” Naphade said. “We saw that if we designed it using multiple logical agents, we would be able to mimic human reasoning quite well. But then you ask yourself, what exactly do the different agents do? Why do you have four? Why not three? Why not 20?”

They studied customer experiences in historical data: where conversations go right, where they go wrong, how long they should take, and other salient facts. They learned that it often takes multiple turns of conversation with an agent to understand what the customer wants. Any agentic workflow needs to plan for that while also being completely grounded in the organization’s systems, available tools, APIs, and policy guardrails.
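The article does not say how Capital One structures these clarification turns. As an illustrative sketch only, the snippet below assumes a hypothetical catalog in which each tool declares the details it needs (`TOOLS`, `Conversation`, and `next_turn` are invented names). The workflow keeps asking follow-up questions until the customer’s intent maps to an available tool with all of its inputs filled, and it declines anything no tool can fulfill.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# Hypothetical tool catalog: each tool lists the details it needs before it can run.
TOOLS: Dict[str, List[str]] = {
    "schedule_test_drive": ["vehicle_id", "date"],
    "check_balance": ["account_id"],
}


@dataclass
class Conversation:
    intent: Optional[str] = None
    slots: Dict[str, str] = field(default_factory=dict)


def next_turn(conv: Conversation) -> str:
    """Return a clarifying question, a refusal, or a signal that planning can start."""
    if conv.intent is None:
        return "ASK: What would you like to do today?"
    if conv.intent not in TOOLS:
        # Grounding: never promise an action that no available tool can fulfill.
        return f"DECLINE: no tool available for {conv.intent!r}"
    missing = [slot for slot in TOOLS[conv.intent] if slot not in conv.slots]
    if missing:
        # Ask for one missing detail per turn.
        return f"ASK: Please provide {missing[0]}."
    return f"READY: {conv.intent} with {conv.slots}"


conv = Conversation(intent="schedule_test_drive")
print(next_turn(conv))  # ASK: Please provide vehicle_id.
conv.slots.update(vehicle_id="VIN123", date="2025-07-01")
print(next_turn(conv))  # READY: schedule_test_drive with {'vehicle_id': 'VIN123', 'date': '2025-07-01'}
```

In a production system, a language model would presumably extract the intent and slot values from each customer message; the fixed catalog here only stands in for the grounding step.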

“The main breakthrough for us was realizing that this had to be dynamic and iterative,” Naphade said. “If you look at how a lot of people are using LLMs, they’re slapping the LLMs as a front end to the same mechanism that used to exist. They’re just using LLMs for classification of intent. But we realized from the beginning that that was not scalable.”

Taking cues from existing workflows

Based on their intuition of how human agents reason while responding to customers, researchers at Capital One developed a framework in which a team of expert AI agents, each with its own specialty, comes together to solve a problem.

Capital One also incorporated robust risk frameworks into the development of the agentic system. As a regulated institution, the company has a range of internal risk mitigation protocols and frameworks, and it also operates under independent scrutiny. “Within Capital One, to manage risk, other entities that are independent observe you, evaluate you, question you, audit you,” Naphade said. “We thought that was a good idea for us, to have an AI agent whose entire job was to evaluate what the first two agents do based on Capital One policies and rules.”

The evaluator determines whether the earlier agents were successful. If not, it rejects the plan and asks the planning agent to correct its results, based on the evaluator’s judgment of where the problem lies. This process repeats until an appropriate plan is reached, and it has proven to be a huge boon to the company’s agentic AI approach.
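Capital One has not published its evaluator’s internals. A minimal sketch of a reject-and-replan loop, assuming hypothetical policy rules written as Python functions that return an error message or `None`, might look like this. `RULES`, `evaluate`, `plan_with_review`, `toy_planner`, and the tool names are all illustrative, and `max_rounds` is an assumed safeguard so the loop cannot run forever.

```python
from typing import Callable, List, Optional

Plan = List[str]


# Hypothetical policy rules: each returns an error message, or None if the plan passes.
def only_approved_tools(plan: Plan) -> Optional[str]:
    allowed = {"lookup_inventory", "confirm_with_user", "schedule_test_drive"}
    bad = [step for step in plan if step not in allowed]
    return f"unapproved tools: {bad}" if bad else None


def confirm_before_booking(plan: Plan) -> Optional[str]:
    if "schedule_test_drive" in plan:
        earlier_steps = plan[: plan.index("schedule_test_drive")]
        if "confirm_with_user" not in earlier_steps:
            return "must confirm with user before booking"
    return None


RULES: List[Callable[[Plan], Optional[str]]] = [only_approved_tools, confirm_before_booking]


def evaluate(plan: Plan) -> List[str]:
    """Evaluator stand-in: collect every rule violation in the plan."""
    results = [rule(plan) for rule in RULES]
    return [msg for msg in results if msg is not None]


def plan_with_review(planner: Callable[[str, List[str]], Plan], request: str,
                     max_rounds: int = 3) -> Plan:
    """Request a plan, feed evaluator feedback back to the planner, repeat until it passes."""
    feedback: List[str] = []
    for _ in range(max_rounds):
        plan = planner(request, feedback)
        feedback = evaluate(plan)
        if not feedback:
            return plan
    raise RuntimeError(f"no acceptable plan after {max_rounds} rounds: {feedback}")


def toy_planner(request: str, feedback: List[str]) -> Plan:
    """Hypothetical planner that revises its plan when told to confirm first."""
    plan = ["lookup_inventory", "schedule_test_drive"]
    if any("confirm" in msg for msg in feedback):
        plan.insert(1, "confirm_with_user")
    return plan


print(plan_with_review(toy_planner, "book a test drive"))
# ['lookup_inventory', 'confirm_with_user', 'schedule_test_drive']
```

Returning every violation at once, rather than stopping at the first, gives the planner more context for its next attempt; that is a design choice of this sketch, not something the article specifies.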

“The evaluator agent is … where we bring a world model. That’s where we simulate what happens if a series of actions were to be actually executed. That kind of rigor, which we need because we are a regulated enterprise – I think that’s actually putting us on a great sustainable and robust trajectory. I expect a lot of enterprises will eventually go to that point.”
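Naphade describes the evaluator as simulating what would happen if a series of actions were executed. One simple way to illustrate that idea, assuming a toy world model built from hypothetical transition functions (`reserve_vehicle`, `cancel_reservation`, and `simulate` are invented for this example), is a dry run on a deep copy of the current state that checks an invariant after every step without touching real systems.

```python
import copy
from typing import Any, Dict, List, Tuple

State = Dict[str, List[str]]


# Hypothetical toy world model: each function predicts how one action changes the state.
def reserve_vehicle(state: State, vin: str) -> None:
    if vin not in state["available"]:
        raise ValueError(f"{vin} is not available")
    state["available"].remove(vin)
    state["reservations"].append(vin)


def cancel_reservation(state: State, vin: str) -> None:
    if vin not in state["reservations"]:
        raise ValueError(f"no reservation for {vin}")
    state["reservations"].remove(vin)
    state["available"].append(vin)


TRANSITIONS = {"reserve": reserve_vehicle, "cancel": cancel_reservation}


def simulate(state: State, actions: List[Tuple[str, str]],
             max_reservations: int = 1) -> Tuple[bool, Any]:
    """Dry-run actions on a copy of the state; return (ok, predicted state or failure reason)."""
    sim = copy.deepcopy(state)  # the real state is never mutated during simulation
    for name, arg in actions:
        if name not in TRANSITIONS:
            return False, f"unknown action {name!r}"
        try:
            TRANSITIONS[name](sim, arg)
        except ValueError as exc:
            return False, f"{name}({arg}) would fail: {exc}"
        # Invariant check after every step, here a hypothetical per-customer limit.
        if len(sim["reservations"]) > max_reservations:
            return False, f"{name}({arg}) would exceed the reservation limit"
    return True, sim


world = {"available": ["VIN1", "VIN2"], "reservations": []}
print(simulate(world, [("reserve", "VIN1")]))
# (True, {'available': ['VIN2'], 'reservations': ['VIN1']})
print(simulate(world, [("reserve", "VIN1"), ("reserve", "VIN2")]))
# (False, 'reserve(VIN2) would exceed the reservation limit')
```

A real world model would be far richer, but the same principle applies: predict the consequences of a plan and reject it before any action reaches a production system.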

The technical challenges of agentic AI

Agentic systems need to work with fulfillment systems across the organization, each with its own permissions. Invoking tools and APIs across a variety of contexts while maintaining high accuracy, from disambiguating user intent to generating and executing a reliable plan, was also challenging.
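The article does not detail how permissions are enforced. As a hedged sketch, assuming each tool is tagged with a single hypothetical permission string (such as `auto:write`), a registry can refuse any call the invoking agent is not authorized to make. `Tool`, `ToolRegistry`, and the permission names are illustrative, not Capital One’s or any library’s API.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, Set


@dataclass(frozen=True)
class Tool:
    name: str
    required_permission: str  # hypothetical scope string, e.g. "auto:write"
    fn: Callable[..., Any]


class ToolRegistry:
    """Hypothetical registry that checks an agent's permissions before every tool call."""

    def __init__(self) -> None:
        self._tools: Dict[str, Tool] = {}

    def register(self, tool: Tool) -> None:
        self._tools[tool.name] = tool

    def invoke(self, agent_permissions: Set[str], name: str, **kwargs: Any) -> Any:
        tool = self._tools.get(name)
        if tool is None:
            raise LookupError(f"unknown tool: {name}")
        if tool.required_permission not in agent_permissions:
            # Refuse rather than let an agent reach a system it is not cleared for.
            raise PermissionError(f"{name} requires {tool.required_permission!r}")
        return tool.fn(**kwargs)


registry = ToolRegistry()
registry.register(Tool("get_balance", "accounts:read", lambda account_id: 1250.00))
registry.register(Tool("schedule_test_drive", "auto:write",
                       lambda vin, date: f"booked {vin} on {date}"))

auto_agent_permissions = {"auto:write"}
print(registry.invoke(auto_agent_permissions, "schedule_test_drive", vin="VIN1", date="2025-07-01"))
# booked VIN1 on 2025-07-01
try:
    registry.invoke(auto_agent_permissions, "get_balance", account_id="A1")
except PermissionError as err:
    print(err)  # get_balance requires 'accounts:read'
```

Centralizing the check in one `invoke` method means no agent can bypass it by calling a tool function directly through the registry, which keeps the permission policy auditable in one place.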

“We have multiple iterations of experimentation, testing, evaluation, human-in-the-loop, all the right guardrails that need to happen before we can actually come into the market with something like this,” Naphade said. “But one of the biggest challenges was we didn’t have any precedent. We couldn’t go and say, oh, somebody else did it this way. How did that work out? There was that element of novelty. We were doing it for the first time.”

Model selection and partnering with NVIDIA

In terms of models, Capital One keenly tracks academic and industry research, presents at conferences, and stays abreast of the state of the art. In this use case, the team used open-weights models rather than closed ones because open weights allowed significant customization. That’s critical, Naphade said, because competitive advantage in AI strategy relies on proprietary data.

The technology stack itself combines in-house technology, open-source toolchains, and the NVIDIA inference stack. Working closely with NVIDIA has helped Capital One get the performance it needs, collaborate on industry-specific opportunities in NVIDIA’s library, and prioritize features for NVIDIA’s Triton Inference Server and TensorRT-LLM.

Agentic AI: Looking ahead

Capital One continues to deploy, scale, and refine AI agents across its business. Its first multi-agent workflow was Chat Concierge, deployed through the company’s auto business and designed to support both auto dealers and customers in the car-buying process. With rich customer data, dealers are identifying serious leads, which has significantly improved their customer engagement metrics, by up to 55% in some cases.

“They’re able to generate much better serious leads through this natural, easier, 24/7 agent working for them,” Naphade said. “We’d like to bring this capability to [more] of our customer-facing engagements. But we want to do it in a well-managed way. It’s a journey.”