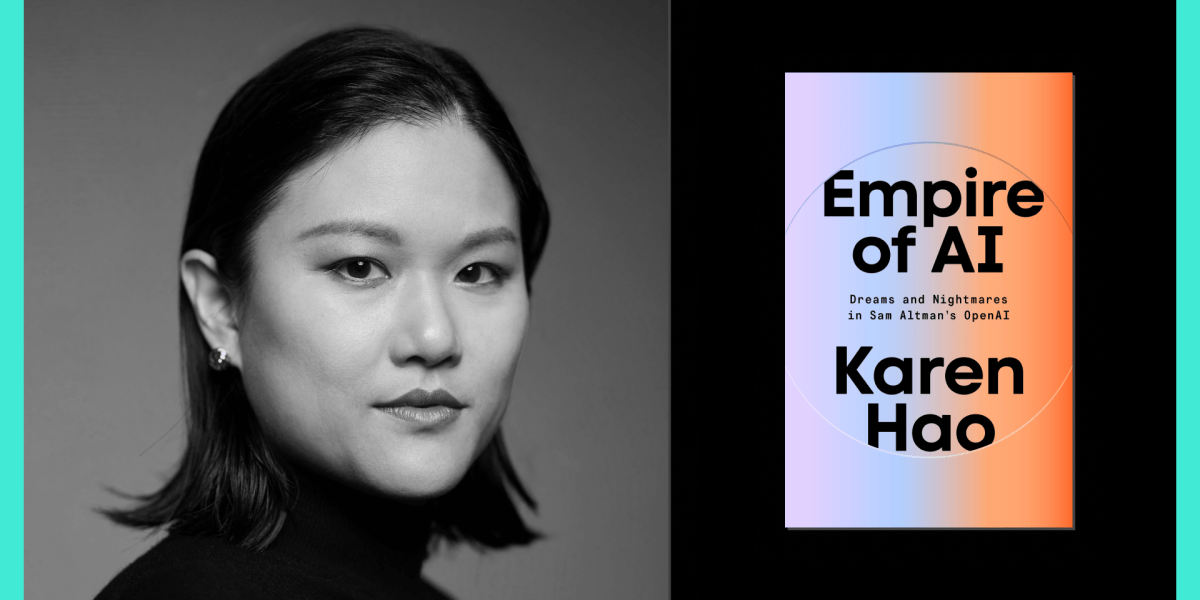

Niall Firth: Hello, everyone, and welcome to this special edition of Roundtables. These are our subscriber-only events where you get to listen in to conversations between editors and reporters. Now, I’m delighted to say we’ve got an absolute cracker of an event today. I’m very happy to have our prodigal daughter, Karen Hao, a fabulous AI journalist, here with us to talk about her new book. Hello, Karen, how are you doing?

Karen Hao: Good. Thank you so much for having me back, Niall.

Niall Firth: Lovely to have you. So I’m sure you all know Karen and that’s why you’re here. But to give you a quick, quick synopsis, Karen has a degree in mechanical engineering from MIT. She was MIT Technology Review’s senior editor for AI and has won countless awards, been cited in Congress, written for the Wall Street Journal and The Atlantic, and set up a series at the Pulitzer Center to teach journalists how to cover AI.

But most important of all, she’s here to discuss her new book, which I’ve got a copy of here, Empire of AI. The UK version is subtitled “Inside the reckless race for total domination,” and the US one, I believe, is “Dreams and nightmares in Sam Altman’s OpenAI.”

It’s been an absolute sensation, a New York Times chart topper. An incredible feat of reporting—like 300 interviews, including 90 with people inside OpenAI. And it’s a brilliant look at not just OpenAI’s rise, and the character of Sam Altman, which is very interesting in its own right, but also a really astute look at what kind of AI we’re building and who holds the keys.

Karen, the core of the book, the rise and rise of OpenAI, was one of your first big features at MIT Technology Review. It’s a brilliant story that lifted the lid for the first time on what was going on at OpenAI … and they really hated it, right?

Karen Hao: Yes, and first of all, thank you to everyone for being here. It’s always great to be home. I do still consider MIT Tech Review to be my journalistic home, and that story was—I only did it because Niall assigned it after I said, “Hey, it seems like OpenAI is kind of an interesting thing,” and he was like, you should profile them. And I had never written a profile about a company before, and I didn’t think that I would have it in me, but Niall believed that I would be able to do it. So it really only happened because of you.

I went into the piece with an open mind about—let me understand what OpenAI is. Let me take what they say at face value. They were founded as a nonprofit. They have this mission to ensure artificial general intelligence benefits all of humanity. What do they mean by that? How are they trying to achieve that ultimately? How are they striking this balance between mission-driven AI development and the need to raise money and capital?

And through the course of embedding within the company for three days, and then interviewing dozens of people outside the company or around the company … I came to realize that there was a fundamental disconnect between what they were publicly espousing and accumulating a lot of goodwill from and how they were operating. And that is what I ended up focusing my profile on, and that is why they were not very pleased.

Niall Firth: And how have you seen OpenAI change even since you did the profile? That sort of misalignment feels like it’s got messier and more confusing in the years since.

Karen Hao: Absolutely. I mean, it’s kind of remarkable: you could argue that OpenAI is now one of the most capitalistic corporations in Silicon Valley. They just raised $40 billion in the largest-ever private fundraising round in tech industry history. They’re valued at $300 billion. And yet they still say that they are first and foremost a nonprofit.

I think this really gets to the heart of how much OpenAI has tried to position and reposition itself throughout its decade-long history, to ultimately play into the narratives that they think are going to do best with the public and with policymakers, in spite of what they might actually be doing in terms of developing their technologies and commercializing them.

Niall Firth: You cite Sam Altman saying, you know, the race for AGI is what motivated a lot of this, and I’ll come back to that a bit before the end. But he talks about it as like the Manhattan Project for AI. You cite him quoting Oppenheimer (of course, you know, there’s no self-aggrandizing there): “Technology happens because it’s possible,” he says in the book.

And it feels to me like this is one of the themes of the book: the idea that technology doesn’t just happen because it comes along. It comes because of choices that people make. It’s not an inevitability that things are the way they are and that people are who they are. What they think is important—that influences the direction of travel. So what does this mean, in practice, if that’s the case?

Karen Hao: With OpenAI in particular, they made a very key decision early on in their history that led to all of the AI technologies that we see dominating the marketplace and dominating headlines today. And that was a decision to try and advance AI progress by scaling the existing techniques that were available to them. At the time when OpenAI started, at the end of 2015, and then when they made that decision, around 2017, this was a very unpopular perspective within the broader AI research field.

There were kind of two competing ideas about how to advance AI progress, or rather a spectrum of ideas, bookended by two extremes. One extreme being, we have all the techniques we need, and we should just aggressively scale. And the other one being that we don’t actually have the techniques we need. We need to continue innovating and doing fundamental AI research to get more breakthroughs. And largely the field assumed that this side of the spectrum [focusing on fundamental AI research] was the most likely approach for getting advancements, but OpenAI was anomalously committed to the other extreme—this idea that we can just take neural networks, pump in ever more data, and train on ever larger supercomputers, larger than have ever been built in history.

The reason why they made that decision was because they were competing against Google, which had a dominant monopoly on AI talent. And OpenAI knew that they didn’t necessarily have the ability to beat Google simply by trying to get research breakthroughs. That’s a very hard path. When you’re doing fundamental research, you never really know when the breakthrough might appear. Progress isn’t very linear, but scaling is sort of linear. As long as you just pump in more data and more compute, you can get gains. And so they thought, we can just do this faster than anyone else. And that’s the way that we’re going to leap ahead of Google. And it particularly aligned with Sam Altman’s skill set, as well, because he is a once-in-a-generation fundraising talent, and when you’re going for scale to advance AI models, the primary bottleneck is capital.

And so it was kind of a great fit for what he had to offer, which is, he knows how to accumulate capital, and he knows how to accumulate it very quickly. So that is ultimately how you can see that technology is a product of human choices and human perspectives, and of the specific skills and strengths that the team had at the time, which shaped how they wanted to move forward.

Niall Firth: And to be fair, I mean, it works, right? It was amazing, fabulous. You know, the breakthroughs that happened, from GPT-2 to GPT-3, just from scale and data and compute, were kind of mind-blowing, really, as we look back on it now.

Karen Hao: Yeah, it is remarkable how much it did work, because there was a lot of skepticism about the idea that scale could lead to the kind of technical progress that we’ve seen. But one of my biggest critiques of this particular approach is that there’s also an extraordinary amount of costs that come with this particular pathway to getting more advancements. And there are many different pathways to advancing AI, so we could have actually gotten all of these benefits, and moving forward, we could continue to get more benefits from AI, without actually engaging in a hugely consumptive, hugely costly approach to its development.

Niall Firth: Yeah, so in terms of consumptive, that’s something we’ve touched on here quite recently at MIT Technology Review, like the energy costs of AI. The data center costs are absolutely extraordinary, right? Like the data behind it is incredible. And it’s only gonna get worse in the next few years if we continue down this path, right?

Karen Hao: Yeah … so first of all, everyone should read the series that Tech Review put out, if you haven’t already, on the energy question, because it really does break down everything from what is the energy consumption of the smallest unit of interacting with these models, all the way up until the highest level.

The number that I have seen a lot, and that I’ve been repeating, comes from a McKinsey report that looked at the pace at which data centers and supercomputers are being built and scaled: if that pace continues, in the next five years we would have to add two to six times the amount of energy consumed by California onto the grid. And most of that will have to be serviced by fossil fuels, because these data centers and supercomputers have to run 24/7, so we cannot rely solely on renewable energy. We do not have enough nuclear power capacity to power these colossal pieces of infrastructure. And so we’re already accelerating the climate crisis.

And we’re also accelerating a public-health crisis: the pumping of thousands of tons of pollutants into the air from coal plants that are having their lives extended and from methane gas turbines that are being built to power these data centers. And in addition to that, there’s also an acceleration of the freshwater crisis, because these pieces of infrastructure have to be cooled with freshwater resources. It has to be fresh water, because any other type of water corrodes the equipment and leads to bacterial growth.

And Bloomberg recently had a story that showed that two-thirds of these data centers are actually going into water-scarce areas, into places where the communities already do not have enough fresh water at their disposal. So that is one dimension of many that I refer to when I say, the extraordinary costs of this particular pathway for AI development.

Niall Firth: So in terms of costs and the extractive process of making AI, I wanted to give you the chance to talk about the other theme of the book, apart from just OpenAI’s explosion. It’s the colonial way of looking at the way AI is made: the empire. I’m saying this obviously because we’re here, but this is an idea that came out of reporting you started at MIT Technology Review and then continued into the book. Tell us about how this framing helps us understand how AI is made now.

Karen Hao: Yeah, so this was a framing that I started thinking a lot about when I was working on the AI Colonialism series for Tech Review. It was a series of stories that looked at the way that, pre-ChatGPT, the commercialization of AI and its deployment into the world was already leading to entrenchment of historical inequities into the present day.

And one example was a story about how facial recognition companies were swarming into South Africa to try and harvest more data from South Africa during a time when they were getting criticized for the fact that their technologies did not accurately recognize black faces. And the deployment of those facial recognition technologies into South Africa, into the streets of Johannesburg, was leading to what South African scholars were calling a recreation of a digital apartheid—the control of black bodies and the movement of black people.

And this idea really haunted me for a really long time. Through my reporting in that series, I kept hitting upon so many examples of this thesis, of the AI industry perpetuating historical inequities. It felt like it was becoming this neocolonial force. And then, when ChatGPT came out, it became clear that this was just accelerating.

When you accelerate the scale of these technologies, and you start training them on the entirety of the Internet, and you start using these supercomputers that are the size of dozens—if not hundreds—of football fields, then you really start talking about an extraordinary global level of extraction and exploitation that is happening to produce these technologies. And then the historical power imbalances become even more obvious.

And so there are four parallels that I draw in my book between what I have now termed empires of AI versus empires of old. The first one is that empires lay claim to resources that are not their own. So these companies are scraping all this data that is not their own, taking all the intellectual property that is not their own.

The second is that empires exploit a lot of labor. So we see them moving to countries in the Global South or other economically vulnerable communities to contract workers to do some of the worst work in the development pipeline for producing these technologies—and also producing technologies that then inherently are labor-automating and engage in labor exploitation in and of themselves.

And the third feature is that the empires monopolize knowledge production. So, in the last 10 years, we’ve seen the AI industry monopolize more and more of the AI researchers in the world. So AI researchers are no longer contributing to open science, working in universities or independent institutions, and the effect on the research is what you would imagine would happen if most of the climate scientists in the world were being bankrolled by oil and gas companies. You would not be getting a clear picture, and we are not getting a clear picture, of the limitations of these technologies, or if there are better ways to develop these technologies.

And the fourth and final feature is that empires always engage in this aggressive race rhetoric, where there are good empires and evil empires. And they, the good empire, have to be strong enough to beat back the evil empire, and that is why they should have unfettered license to consume all of these resources and exploit all of this labor. And if the evil empire gets the technology first, humanity goes to hell. But if the good empire gets the technology first, they’ll civilize the world, and humanity gets to go to heaven. So on many different levels, I felt like the empire theme was the most comprehensive way to name exactly how these companies operate, and exactly what their impacts are on the world.

Niall Firth: Yeah, brilliant. I mean, you talk about the evil empire. What happens if the evil empire gets it first? And what I mentioned at the top is AGI. For me, it’s almost like the extra character in the book all the way through. It’s sort of looming over everything, like the ghost at the feast, sort of saying like, this is the thing that motivates everything at OpenAI. This is the thing we’ve got to get to before anyone else gets to it.

There’s a bit in the book about how they’re talking internally at OpenAI, like, we’ve got to make sure that AGI is in US hands where it’s safe versus like anywhere else. And some of the international staff are openly like—that’s kind of a weird way to frame it, isn’t it? Why is the US version of AGI better than others?

So tell us a bit about how it drives what they do. And AGI isn’t an inevitable fact that’s just happening anyway, is it? It’s not even a thing yet.

Karen Hao: There’s not even consensus around whether or not it’s even possible or what it even is. There was recently a New York Times story by Cade Metz that cited a survey of long-standing AI researchers in the field, and 75% of them still think that we don’t have the techniques yet for reaching AGI, whatever that means. And the most classic definition or understanding of AGI is being able to fully recreate human intelligence in software. But the problem is, we also don’t have scientific consensus around what human intelligence is. And so one of the aspects that I talk about a lot in the book is that when there is a vacuum of shared meaning around this term (what it would look like, when we would have arrived at it, what capabilities we should be evaluating these systems on to determine that we’ve gotten there), it can basically just be whatever OpenAI wants.

So it’s kind of just this ever-present goalpost that keeps shifting, depending on where the company wants to go. You know, they have a full range, a variety of different definitions that they’ve used throughout the years. In fact, they even have a joke internally: If you ask 13 OpenAI researchers what AGI is, you’ll get 15 definitions. So they are kind of self-aware that this is not really a real term and it doesn’t really have that much meaning.

But it does serve this purpose of creating a kind of quasi-religious fervor around what they’re doing, where people think that they have to keep driving towards this horizon, and that one day when they get there, it’s going to have a civilizationally transformative impact. And therefore, what else should you be working on in your life, but this? And who else should be working on it, but you?

And so it is their justification for continuing to push and scale and consume all these resources, because none of that consumption, none of that harm, matters anymore if you end up hitting this destination. But they also use it as a way to develop their technologies in a very deeply anti-democratic way, where they say, we are the only people who have the expertise and the right to carefully control the development of this technology and usher it into the world. And we cannot let anyone else participate because it’s just too powerful of a technology.

Niall Firth: You talk about the factions, particularly the religious framing. AGI has been around as a concept for a while. It was very niche, very kind of nerdy fun, really, to talk about, and now it has suddenly become extremely mainstream. And they have the boomers versus doomers dichotomy. Where are you on that spectrum?

Karen Hao: So the boomers are people who think that AGI is going to bring us to utopia, and the doomers think AGI is going to devastate all of humanity. And to me these are actually two sides of the same coin. They both believe that AGI is possible, and it’s imminent, and it’s going to change everything.

And I am not on this spectrum. I’m in a third space, which is the AI accountability space, which is rooted in the observation that these companies have accumulated an extraordinary amount of power, both economic and political power, to go back to the empire analogy.

Ultimately, the thing that we need to do in order to not return to an age of empire and erode a lot of democratic norms is to hold these companies accountable with all the tools at our disposal, and to recognize all the harms that they are already perpetuating through a misguided approach to AI development.

Niall Firth: I’ve got a couple of questions from readers. I’m gonna try to pull them together a little bit because Abbas asks, what would post-imperial AI look like? And there was a question from Liam basically along the same lines. How do you make a more ethical version of AI that is not within this framework?

Karen Hao: We sort of already touched a little bit upon this idea. But there are so many different ways to develop AI. There are myriad techniques throughout the history of AI development, which is decades long. There have been various shifts in the winds of which techniques ultimately rise and fall. And it isn’t based solely on the scientific or technical merit of any particular technique. Oftentimes certain techniques become more popular because of business reasons or because of the funders’ ideologies. And that’s sort of what we’re seeing today with the complete indexing of AI development on large-scale AI models.

And ultimately, these large-scale models … We talked about how it’s a remarkable technical leap, but in terms of social progress or economic progress, the benefits of these models have been kind of middling. And the way that I see us shifting to AI models that are going to be A) more beneficial and B) not so imperial is to refocus on task-specific AI systems that tackle well-scoped challenges, ones that are inherently computational optimization problems and so lend themselves to the strengths of AI systems.

So I’m talking about things like using AI to integrate more renewable energy into the grid. This is something that we definitely need. We need to accelerate our electrification of the grid, and one of the challenges of using more renewable energy is its unpredictability. And this is a key strength of AI technologies: their predictive and optimization capabilities, where you can match the energy generation of different renewables with the energy demands of different people who are drawing from the grid.

Niall Firth: Quite a few people have been asking, in the chat, different versions of the same question. If you were an early-career AI scientist, or if you were involved in AI, what can you do yourself to bring about a more ethical version of AI? Do you have any power left, or is it too late?

Karen Hao: No, I don’t think it’s too late at all. I mean, as I’ve been talking with a lot of people just in the lay public, one of the biggest challenges that they have is they don’t have any alternatives for AI. They want the benefits of AI, but they also do not want to participate in a supply chain that is really harmful. And so the first question is, always, is there an alternative? Which tools do I shift to? And unfortunately, there just aren’t that many alternatives right now.

And so the first thing that I would say to early-career AI researchers and entrepreneurs is to build those alternatives, because there are plenty of people that are actually really excited about the possibility of switching to more ethical alternatives. And one of the analogies I often use is that we kind of need to do with the AI industry what happened with the fashion industry. There was also a lot of environmental exploitation, labor exploitation in the fashion industry, and there was enough consumer demand that it created new markets for ethical and sustainably sourced fashion. And so we kind of need to see just more options occupying that space.

Niall Firth: Do you feel optimistic about the future? Or where do you sit? You know, things aren’t great as you spell them out now. Where’s the hope for us?

Karen Hao: I am. I’m super optimistic. Part of the reason why I’m optimistic is because, you know, a few years ago, when I started writing about AI at Tech Review, I remember people would say, wow, that’s a really niche beat. Do you have enough to write about?

And now, I mean, everyone is talking about AI, and I think that’s the first step to actually getting to a better place with AI development. The amount of public awareness and attention and scrutiny that is now going into how we develop these technologies, how we use these technologies, is really, really important. Like, we need to be having this public debate and that in and of itself is a significant step change from what we had before.

But the next step, and part of the reason why I wrote this book, is we need to convert the awareness into action, and people should take an active role. Every single person should feel that they have an active role in shaping the future of AI development, if you think about all of the different ways that you interface with the AI development supply chain and deployment supply chain—like you give your data or withhold your data.

There are probably data centers that are being built around you right now. If you’re a parent, there’s some kind of AI policy being crafted at [your kid’s] school. There’s some kind of AI policy being crafted at your workplace. These are all what I consider sites of democratic contestation, where you can use those opportunities to assert your voice about how you want AI to be developed and deployed. If you do not want these companies to use certain kinds of data, push back when they just take the data.

I closed all of my personal social media accounts because I just did not like the fact that they were scraping my personal photos to train their generative AI models. I’ve seen parents and students and teachers start forming committees within schools to talk about what their AI policy should be and to draft it collectively as a community. Same with businesses. They’re doing the same thing. If we all kind of step up to play that active role, I am super optimistic that we’ll get to a better place.

Niall Firth: Mark, in the chat, mentions the Māori story from New Zealand towards the end of your book, and that’s an example of sort of community-led AI in action, isn’t it?

Karen Hao: Yeah. There was a community in New Zealand that really wanted to help revitalize the Māori language by building a speech recognition tool that could recognize Māori, and therefore be able to transcribe a rich repository of archival audio of their ancestors speaking Māori. And the first thing that they did when engaging in that project was they asked the community, do you want this AI tool?

Niall Firth: Imagine that.

Karen Hao: I know! It’s such a radical concept, this idea of consent at every stage. But they first asked that; the community wholeheartedly said yes. They then engaged in a public education campaign to explain to people, okay, what does it take to develop an AI tool? Well, we are going to need data. We’re going to need audio-transcription pairs to train this AI model. So then they ran a public contest in which they were able to get dozens, if not hundreds, of people in their community to donate data to this project. And then they made sure that when they developed the model, they actively explained to the community at every step how their data was being used, how it would be stored, and how it would continue to be protected. And any other project that would use the data has to get permission and consent from the community first.

And so it was a completely democratic process: whether they wanted the tool, how to develop the tool, how the tool should continue to be used, and how their data should continue to be used over time.

Niall Firth: Great. I know we’ve gone a bit over time. I’ve got two more things I’m going to ask you, basically putting together lots of questions people have asked in the chat about your view on what role regulations should play. What are your thoughts on that?

Karen Hao: Yeah, I mean, in an ideal world where we actually had a functioning government, regulation should absolutely play a huge role. And it shouldn’t just be about how to regulate an AI model once it’s built, but also about the full supply chain of AI development: regulating the data and what’s allowed to be trained into these models, and regulating land use. What pieces of land are allowed to have data centers built on them? How much energy and water are the data centers allowed to consume? And also regulating transparency. We don’t know what data is in these training data sets, and we don’t know the environmental costs of training these models. We don’t know how much water these data centers consume, and that is all information that these companies actively withhold to prevent democratic processes from happening. So if there were one major intervention that regulators could make, it should be to dramatically increase the amount of transparency along the supply chain.

Niall Firth: Okay, great. So just to bring it back around to OpenAI and Sam Altman to finish with. He famously sent an email around, didn’t he? After your original Tech Review story, saying this is not great. We don’t like this. And he didn’t want to speak to you for your book, either, did he?

Karen Hao: No, he did not.

Niall Firth: No. But imagine Sam Altman is in the chat here. He’s subscribed to Technology Review and is watching this Roundtables because he wants to know what you’re saying about him. If you could talk to him directly, what would you like to ask him?

Karen Hao: What degree of harm do you need to see in order to realize that you should take a different path?

Niall Firth: Nice, blunt, to the point. All right, Karen, thank you so much for your time.

Karen Hao: Thank you so much, everyone.

MIT Technology Review Roundtables is a subscriber-only online event series where experts discuss the latest developments and what’s next in emerging technologies.