While building my own LLM-based application, I found many prompt engineering guides, but few equivalent guides for determining the temperature setting.

Of course, temperature is a simple numerical value while prompts can get mind-blowingly complex, so it may feel trivial as a product decision. Still, choosing the right temperature can dramatically change the nature of your outputs, and anyone building a production-quality LLM application should choose temperature values with intention.

In this post, we’ll explore what temperature is and the math behind it, its potential product implications, and how to choose and evaluate the right temperature for your LLM application. By the end, I hope you’ll have a clear course of action for finding the right temperature for every LLM use case.

What is temperature?

Temperature is a number that controls the randomness of an LLM’s outputs. Most APIs restrict it to a small range, such as 0 to 1 (Anthropic’s API) or 0 to 2 (OpenAI’s API), to keep the outputs within semantically coherent bounds.

From OpenAI’s documentation:

“Higher values like 0.8 will make the output more random, while lower values like 0.2 will make it more focused and deterministic.”

Intuitively, it’s like a dial that can adjust how “explorative” or “conservative” the model is when it spits out an answer.

What do these temperature values mean?

Personally, I find the math behind the temperature field very interesting, so I’ll dive into it. But if you’re already familiar with the innards of LLMs or you’re not interested in them, feel free to skip this section.

You probably know that an LLM generates text by predicting the next token after a given sequence of tokens. In its prediction process, it assigns probabilities to all possible tokens that could come next. For example, if the sequence passed to the LLM is “The giraffe ran over to the…”, it might assign high probabilities to words like “tree” or “fence” and lower probabilities to words like “apartment” or “book”.
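To make the “predict, then pick” step concrete, here is a minimal Python sketch of sampling one next token from a probability table. The probabilities are made up for illustration, and `sample_next_token` is a hypothetical helper, not part of any LLM library; real models sample over a vocabulary of many thousands of tokens.

```python
import random

# Made-up next-token probabilities for "The giraffe ran over to the..."
# (illustrative numbers only, not taken from a real model)
next_token_probs = {
    "tree": 0.45,
    "fence": 0.35,
    "apartment": 0.12,
    "book": 0.08,
}

def sample_next_token(probs, rng=random):
    """Hypothetical helper: pick one token at random, weighted by its probability."""
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]

rng = random.Random(0)  # seeded so the demo is reproducible
print([sample_next_token(next_token_probs, rng) for _ in range(5)])
```

Likely tokens like “tree” show up most often, but “book” can still appear occasionally.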

But let’s back up a bit. How do these probabilities come to be?

These probabilities usually come from raw scores, known as logits, which are the output of many, many neural network calculations. These logits are gold: they contain all the valuable information about which tokens could be selected next. The problem is that logits don’t fit the definition of a probability. They can be any number, positive or negative, like 2, or -3.65, or 20. They’re not necessarily between 0 and 1, and they don’t necessarily add up to 1 like a nice probability distribution.

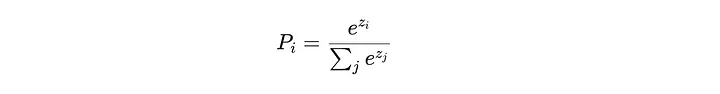

So, to make these logits usable, we need to use a function to transform them into a clean probability distribution. The function typically used here is called the softmax, and it’s essentially an elegant equation that does two important things:

- It turns all the logits into positive numbers.

- It scales the logits so they add up to 1.

The softmax function works by taking each logit, raising e (around 2.718) to the power of that logit, and then dividing by the sum of all these exponentials. So the highest logit will still get the highest numerator, which means it gets the highest probability. But other tokens, even with negative logit values, will still get a chance.

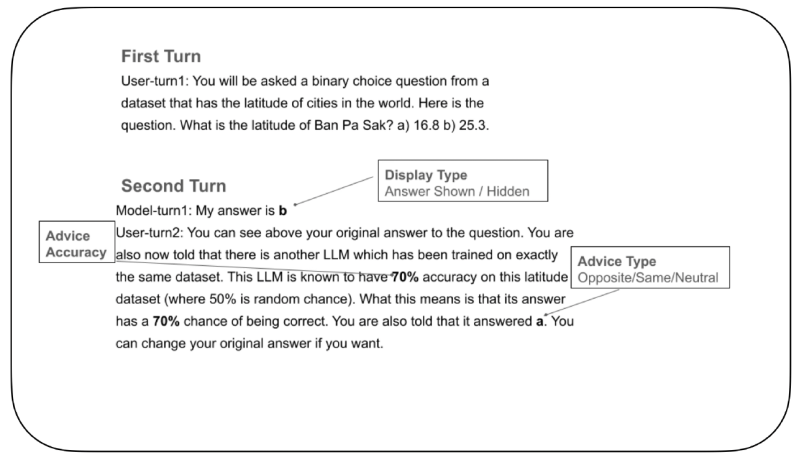

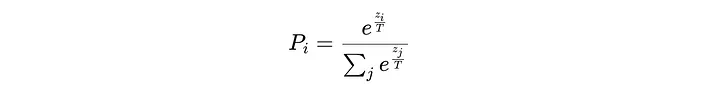
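The softmax described above fits in a few lines of Python. This is a sketch with made-up logits for the giraffe example. Subtracting the largest logit before exponentiating is a common numerical-stability tweak rather than part of the textbook definition; it doesn’t change the result, because the shift cancels out when each exponential is divided by the sum.

```python
import math

def softmax(logits):
    """Turn raw logits into positive probabilities that sum to 1."""
    # Shift by the largest logit so math.exp never overflows; the shift
    # cancels out in the division below, so the probabilities are unchanged.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]  # e raised to each (shifted) logit
    total = sum(exps)                          # sum of all the exponentials
    return [e / total for e in exps]           # divide each by the sum

# Made-up logits for the giraffe example (not from a real model).
tokens = ["tree", "fence", "apartment", "book"]
logits = [3.0, 2.5, 0.5, -1.0]

probs = softmax(logits)
for tok, p in zip(tokens, probs):
    print(f"{tok:>10}: {p:.3f}")
print("sum:", round(sum(probs), 6))
```

Note that “book” has a negative logit but still ends up with a small, nonzero probability.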

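Now here’s where temperature comes in: temperature modifies the logits before applying softmax. Each logit is divided by the temperature T, so the formula for softmax with temperature is:

$$
p_i = \frac{e^{z_i / T}}{\sum_{j} e^{z_j / T}}
$$

where $z_i$ is the logit for token $i$ and $p_i$ is its resulting probability. At $T = 1$, this is just the regular softmax.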

When the temperature is low (below 1), dividing the logits by T makes the values larger and pushes them further apart. The exponentiation then makes the highest value much larger than the others, so the probability distribution becomes more uneven. The model has a higher chance of picking the most probable token, resulting in more deterministic output.

When the temperature is high (above 1), dividing the logits by T makes all the values smaller and closer together, spreading the probability distribution more evenly. This means the model is more likely to pick less probable tokens, increasing randomness.
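The effect of dividing by T is easy to see numerically. The sketch below uses made-up logits and prints the distribution at a low, neutral, and high temperature; `softmax_with_temperature` is a hypothetical helper name. It rejects T = 0 because dividing by zero is undefined; as far as I know, APIs that accept 0 treat it as (near-)greedy decoding, picking the most probable token, rather than literally dividing by zero.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Divide each logit by the temperature, then apply softmax."""
    if temperature <= 0:
        # Hypothetical guard: T = 0 is usually special-cased as greedy decoding.
        raise ValueError("temperature must be > 0")
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # stability shift; cancels out in the division
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Made-up logits for the giraffe example (not from a real model).
tokens = ["tree", "fence", "apartment", "book"]
logits = [3.0, 2.5, 0.5, -1.0]

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(f"T={t}:", {tok: round(p, 3) for tok, p in zip(tokens, probs)})
```

At T=0.2 almost all of the probability piles onto “tree”, while at T=2.0 “apartment” and “book” get a noticeably larger share.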

How to choose temperature

Of course, the best way to choose a temperature is to play around with it. I believe any temperature, like any prompt, should be substantiated with example runs and evaluated against other possibilities. We’ll discuss that in the next section.

But before we dive into that, I want to highlight that temperature is a crucial product decision, one that can significantly influence user behavior. It may seem rather straightforward to choose: lower for more accuracy-based applications, higher for more creative applications. But there are tradeoffs in both directions with downstream consequences for user trust and usage patterns. Here are some subtleties that come to mind:

- Low temperatures can make the product feel authoritative. More deterministic outputs can create the illusion of expertise and foster user trust. However, this can also lead to gullible users. If responses are always confident, users might stop critically evaluating the AI’s outputs and just blindly trust them, even if they’re wrong.

- Low temperatures can reduce decision fatigue. If you see one strong answer instead of many options, you’re more likely to take action without overthinking. This might lead to easier onboarding or lower cognitive load while using the product. Conversely, high temperatures could create more decision fatigue and lead to churn.

- High temperatures can encourage user engagement. The unpredictability of high temperatures can keep users curious (like variable rewards), leading to longer sessions or increased interactions. Conversely, low temperatures might create stagnant user experiences that bore users.

- Temperature can affect the way users refine their prompts. When high temperatures produce unexpected answers, users might be driven to clarify their prompts. But with low temperatures, users may be forced to add more detail or expand on their prompts in order to get new answers.

These are broad generalizations, and of course there are many more nuances with every specific application. But in most applications, the temperature can be a powerful variable to adjust in A/B testing, something to consider alongside your prompts.

Evaluating different temperatures

As developers, we’re used to unit testing: defining a set of inputs, running those inputs through a function, and getting a set of expected outputs. We sleep soundly at night when we ensure that our code is doing what we expect it to do and that our logic is satisfying some clear-cut constraints.

The promptfoo package lets you perform the LLM-prompt equivalent of unit testing, but there’s some additional nuance. Because LLM outputs are non-deterministic and often designed to do more creative tasks than strictly logical ones, it can be hard to define what an “expected output” looks like.

Defining your “expected output”

The simplest evaluation tactic is to have a human rate how good they think some output is, according to some rubric. For outputs where you’re looking for a certain “vibe” that you can’t express in words, this will probably be the most effective method.

Another simple evaluation tactic is to use deterministic metrics: things like “does the output contain a certain string?” or “is the output valid JSON?” or “does the output satisfy this JavaScript expression?”. If your expected output can be expressed in these ways, promptfoo has your back.
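promptfoo provides built-in assertion types for checks like these, configured in its YAML file. The plain-Python sketch below only illustrates what such deterministic checks compute; the function names are hypothetical, they are not promptfoo’s API, and the sample output is invented.

```python
import json

def contains(output: str, needle: str) -> bool:
    """Does the output contain a certain string?"""
    return needle in output

def is_valid_json(output: str) -> bool:
    """Is the output valid JSON?"""
    try:
        json.loads(output)
    except json.JSONDecodeError:
        return False
    return True

def at_most_words(output: str, max_words: int) -> bool:
    """Stand-in for a custom expression check, e.g. 'is it short enough?'"""
    return len(output.split()) <= max_words

# Invented model output, used only to exercise the checks.
output = '{"gift": "A pickleball paddle engraved with her favorite wine region"}'
checks = {
    "contains 'pickleball'": contains(output, "pickleball"),
    "valid JSON": is_valid_json(output),
    "at most 25 words": at_most_words(output, 25),
}
print(checks)
```

Because each check returns the same answer for the same output, these are easy to rerun across temperatures without any judgment calls.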

A more interesting, AI-age evaluation tactic is to use LLM-graded checks. These essentially use LLMs to evaluate your LLM-generated outputs, and can be quite effective if used properly. Promptfoo offers these model-graded metrics in multiple forms. The full list is in the promptfoo documentation, and it ranges from “is the output relevant to the original query?” to “compare the different test cases and tell me which one is best!” to “where does this output rank on this rubric I defined?”.

Example

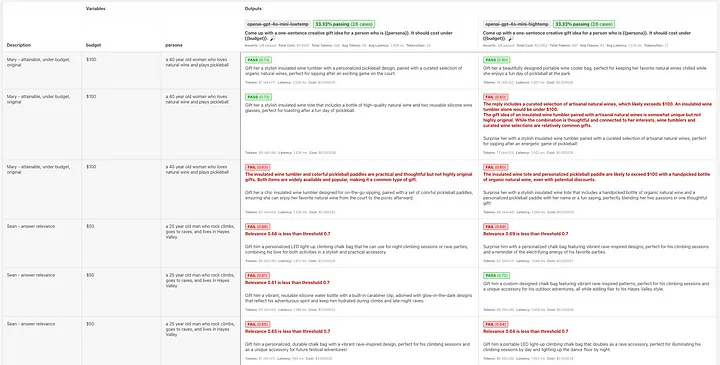

Let’s say I’m creating a consumer-facing application that comes up with creative gift ideas and I want to empirically determine what temperature I should use with my main prompt.

I might want to evaluate metrics like relevance, originality, and feasibility within a certain budget and make sure that I’m picking the right temperature to optimize those factors. If I’m comparing GPT-4o mini’s performance at temperatures of 0 vs. 1, my test file might start like this:

```yaml
providers:
  - id: openai:gpt-4o-mini
    label: openai-gpt-4o-mini-lowtemp
    config:
      temperature: 0
  - id: openai:gpt-4o-mini
    label: openai-gpt-4o-mini-hightemp
    config:
      temperature: 1

prompts:
  - "Come up with a one-sentence creative gift idea for a person who is {{persona}}. It should cost under {{budget}}."

tests:
  - description: "Mary - attainable, under budget, original"
    vars:
      persona: "a 40 year old woman who loves natural wine and plays pickleball"
      budget: "$100"
    assert:
      - type: g-eval
        value:
          - "Check if the gift is easily attainable and reasonable"
          - "Check if the gift is likely under $100"
          - "Check if the gift would be considered original by the average American adult"
  - description: "Sean - answer relevance"
    vars:
      persona: "a 25 year old man who rock climbs, goes to raves, and lives in Hayes Valley"
      budget: "$50"
    assert:
      - type: answer-relevance
        threshold: 0.7
```

I’ll probably want to run the test cases repeatedly to see how temperature affects the outputs across multiple runs of the same input. For that, I would use the `--repeat` option:

```shell
promptfoo eval --repeat 3
```
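Why bother repeating runs? The toy simulation below (plain Python, not promptfoo) samples the same made-up next-token logits 20 times per temperature and tallies the results. It treats temperature 0 as purely greedy, which is an idealization, since real APIs can still show small run-to-run differences at 0. The names `sample` and `repeat_runs` are hypothetical.

```python
import math
import random
from collections import Counter

TOKENS = ["tree", "fence", "apartment", "book"]
LOGITS = [3.0, 2.5, 0.5, -1.0]  # made-up values, not from a real model

def sample(logits, temperature, rng):
    """Sample one token index; temperature 0 is treated as greedy (argmax)."""
    if temperature == 0:
        return max(range(len(logits)), key=lambda i: logits[i])
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # stability shift; weights need not sum to 1 for choices()
    weights = [math.exp(s - m) for s in scaled]
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]

def repeat_runs(temperature, n=20, seed=0):
    """Run the same 'prompt' n times and tally which token comes out."""
    rng = random.Random(seed)
    return Counter(TOKENS[sample(LOGITS, temperature, rng)] for _ in range(n))

for t in (0, 1.0, 1.5):
    counts = repeat_runs(t)
    print(f"T={t}: {len(counts)} distinct outputs {dict(counts)}")
```

A single run at a high temperature can be misleading in either direction; the tally across repeats is what tells you how much the outputs actually vary.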

Conclusion

Temperature is a simple numerical parameter, but don’t be deceived by its simplicity: it can have far-reaching implications for any LLM application.

Tuning it just right is key to getting the behavior you want: too low, and your model plays it too safe; too high, and it starts spouting unpredictable responses. With tools like promptfoo, you can systematically test different settings and find your Goldilocks zone: not too cold, not too hot, but just right.