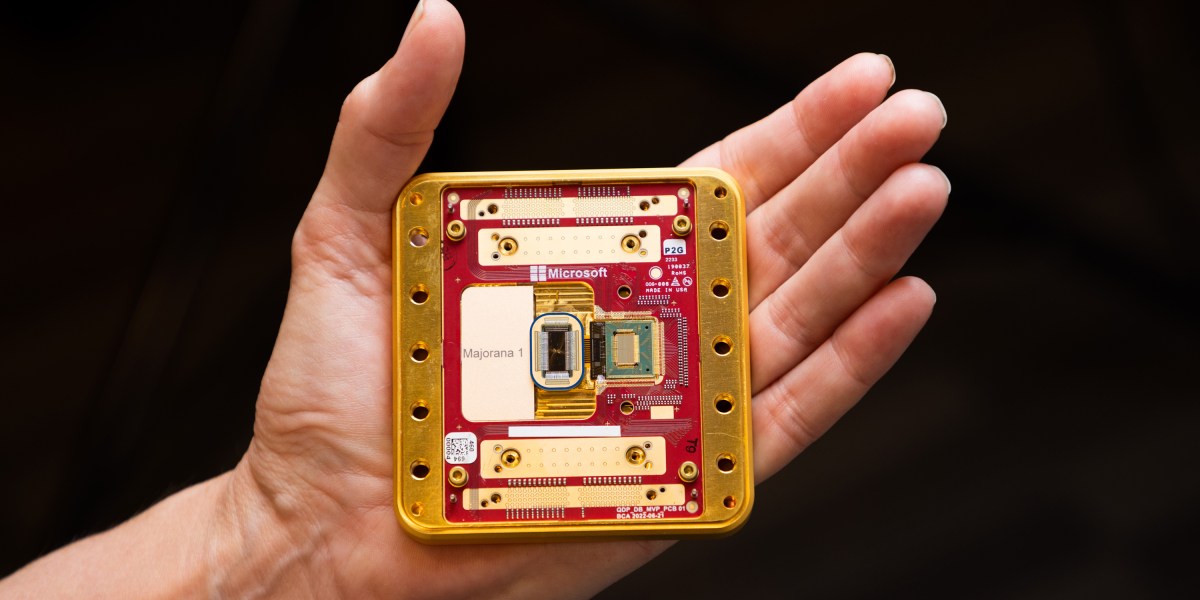

Microsoft announced today that it has made significant progress in its 20-year quest to make topological quantum bits, or qubits. This special approach to building quantum computers could make them more stable and easier to scale up.

Researchers and companies have been working for years to build quantum computers, which could unlock dramatic new abilities to simulate complex materials and discover new ones, among many other possible applications.

To achieve that potential, though, we must build systems that are both large enough and stable enough to perform computations. Many of the technologies being explored today, such as the superconducting qubits pursued by Google and IBM, are so delicate that the resulting systems need to have many extra qubits to correct errors.

Microsoft has long been working on an alternative that could cut down on the overhead by using components that are far more stable. These components, called Majorana quasiparticles, are not real particles. Instead, they are special patterns of behavior that may arise inside certain physical systems and under certain conditions.

The pursuit has not been without setbacks, including the retraction of a high-profile 2018 paper by researchers associated with the company. But the Microsoft team, which has since pulled this research effort in house, claims it is now on track to build a fault-tolerant quantum computer containing a few thousand qubits in a matter of years. It also says it has a blueprint for building chips that each contain a million or so qubits, a rough target that could mark the point at which these computers really begin to show their power.

This week the company announced a few early successes on that path. Alongside a Nature paper published today that describes a fundamental validation of the system, the company says it has been testing a topological qubit and has wired up a chip containing eight of them.

“You don’t get to a million qubits without a lot of blood, sweat, and tears and solving a lot of really difficult technical challenges along the way. And I do not want to understate any of that,” says Chetan Nayak, a Microsoft technical fellow and leader of the team pioneering this approach. That said, he says, “I think that we have a path that we very much believe in, and we see a line of sight.”

Researchers outside the company are cautiously optimistic. “I’m very glad that [this research] seems to have hit a very important milestone,” says computer scientist Scott Aaronson, who heads the Quantum Information Center at the University of Texas at Austin. “I hope that this stands, and I hope that it’s built up.”

Even and odd

The first step in building a quantum computer is constructing qubits that can exist in fragile quantum states—not 0s and 1s like the bits in classical computers, but rather a mixture of the two. Maintaining qubits in these states and linking them up with one another is delicate work, and over the years a significant amount of research has gone into refining error correction schemes to make up for noisy hardware.

For many years, theorists and experimentalists alike have been intrigued by the idea of creating topological qubits, which are constructed through mathematical twists and turns and have protection from errors essentially baked into their physics. “It’s been such an appealing idea to people since the early 2000s,” says Aaronson. “The only problem with it is that it requires, in a sense, creating a new state of matter that’s never been seen in nature.”

Microsoft has been on a quest to synthesize this state, called a Majorana fermion, in the form of quasiparticles. The Majorana was first proposed nearly 90 years ago as a particle that is its own antiparticle, which means two Majoranas will annihilate when they encounter one another. With the right conditions and physical setup, the company has been hoping to get behavior matching that of the Majorana fermion within materials.

In the last few years, Microsoft’s approach has centered on creating a very thin wire, or “nanowire,” from indium arsenide, a semiconductor. This material is placed in close proximity to aluminum, which becomes a superconductor near absolute zero and can induce superconductivity in the nanowire.

Ordinarily you’re not likely to find any unpaired electrons skittering about in a superconductor, because electrons like to pair up. But under the right conditions in the nanowire, it’s theoretically possible for an electron to hide itself, with half of it sitting at each end of the wire. If these complex entities, called Majorana zero modes, can be coaxed into existence, they will be difficult to destroy, making them intrinsically stable.

“Now you can see the advantage,” says Sankar Das Sarma, a theoretical physicist at the University of Maryland, College Park, who did early work on this concept. “You cannot destroy a half electron, right? If you try to destroy a half electron, that means only a half electron is left. That’s not allowed.”

In 2023, the Microsoft team published a paper in the journal Physical Review B claiming that this system had passed a specific protocol designed to assess the presence of Majorana zero modes. This week in Nature, the researchers reported that they can “read out” the information in these nanowires: specifically, whether the Majorana zero modes hiding at the wires’ ends share an extra, unpaired electron.

“What we did in the Nature paper is we showed how to measure the even or oddness,” says Nayak. “To be able to tell whether there’s 10 million or 10 million and one electrons in one of these wires.” That’s an important step by itself, because the company aims to use those two states—an even or odd number of electrons in the nanowire—as the 0s and 1s in its qubits.

If these quasiparticles exist, it should be possible to “braid” the four Majorana zero modes in a pair of nanowires around one another by making specific measurements in a specific order. The result would be a qubit with a mix of these two states, even and odd. Nayak says the team has done just that, creating a two-level quantum system, and that it is now preparing a paper on the results.

Researchers outside the company say they cannot comment on the qubit results, since that paper is not yet available. But some have hopeful things to say about the findings published so far. “I find it very encouraging,” says Travis Humble, director of the Quantum Science Center at Oak Ridge National Laboratory in Tennessee. “It is not yet enough to claim that they have created topological qubits. There’s still more work to be done there,” he says. But “this is a good first step toward validating the type of protection that they hope to create.”

Others are more skeptical. Physicist Henry Legg of the University of St Andrews in Scotland, who previously criticized Physical Review B for publishing the 2023 paper without enough data for the results to be independently reproduced, is not convinced that the team is seeing evidence of Majorana zero modes in its Nature paper. He says that the company’s early tests did not put it on solid footing to make such claims. “The optimism is definitely there, but the science isn’t there,” he says.

One potential complication is impurities in the device, which can produce signals that mimic those of Majorana zero modes. But Nayak says the evidence has only grown stronger as the research has proceeded. “This gives us confidence: We are manipulating sophisticated devices and seeing results consistent with a Majorana interpretation,” he says.

“They have satisfied many of the necessary conditions for a Majorana qubit, but there are still a few more boxes to check,” Das Sarma said after seeing preliminary results on the qubit. “The progress has been impressive and concrete.”

Scaling up

On the face of it, Microsoft’s topological efforts seem woefully behind in the world of quantum computing: the company is only now working to link up a handful of qubits, while others have tied together more than 1,000. But both Nayak and Das Sarma say other efforts had a strong head start because they involved systems that already had a solid grounding in physics. Work on the topological qubit, on the other hand, has meant starting from scratch.

“We really were reinventing the wheel,” Nayak says, likening the team’s efforts to the early days of semiconductors, when there was so much to sort out about electron behavior and materials, and transistors and integrated circuits still had to be invented. That’s why this research path has taken almost 20 years, he says: “It’s the longest-running R&D program in Microsoft history.”

Some support from the US Defense Advanced Research Projects Agency could help the company catch up. Early this month, Microsoft was selected as one of two companies to continue work on the design of a scaled-up system, through a program focused on underexplored approaches that could lead to utility-scale quantum computers—those whose benefits exceed their costs. The other company selected is PsiQuantum, a startup that is aiming to build a quantum computer containing up to a million qubits using photons.

Many of the researchers MIT Technology Review spoke with would still like to see how this work plays out in scientific publications, but they were hopeful. “The biggest disadvantage of the topological qubit is that it’s still kind of a physics problem,” says Das Sarma. “If everything Microsoft is claiming today is correct … then maybe right now the physics is coming to an end, and engineering could begin.”

This story was updated with Henry Legg’s current institutional affiliation.