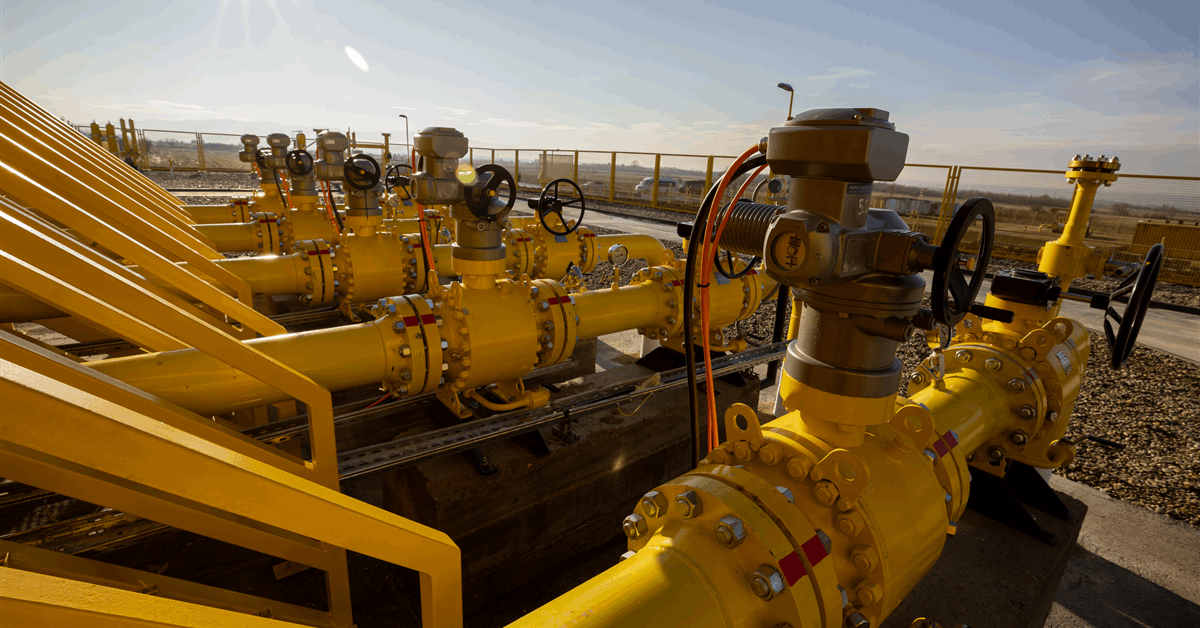

The European Commission has matched almost 20 billion cubic meters (706.29 billion cubic feet) of demand from European Union gas buyers with offers from potential suppliers under the second midterm round of AggregateEU.

Vendors offered 31 Bcm, exceeding the 29 Bcm of demand pooled during the matchmaking round opened this month, according to an online statement Wednesday from the Commission’s Directorate-General for Energy.

“All participants have been informed about the matching results and will now be able to negotiate contracts bilaterally”, the Directorate said.

Energy and Housing Commissioner Dan Jørgensen commented, “As we fast track our decarbonization efforts in the EU, it is also key that European buyers are able to secure competitive gas offers from reliable international suppliers”.

“The positive results of this second matching round on joint gas purchasing show the strong interest from the market and the value in providing increased transparency to European gas users and buyers”, Jørgensen added.

Announcing the second midterm round March 12, 2025, the Directorate said LNG buyers and sellers can not only name their preferred terminal of delivery, as before, but can now also express a preference to have the LNG delivered free-on-board. This option has been added “to better reflect LNG trade practices and attract additional international suppliers”, the Directorate said.

AggregateEU, a mechanism in which gas suppliers compete to book demand placed by companies in the EU and its Energy Community partner countries, was initially only meant for the 2023-24 winter season. However, citing lessons from the prolonged effects of the energy crisis, the EU has made it a permanent mechanism under “Regulation (EU) 2024/1789 on the internal markets for renewable gas, natural gas and hydrogen”, adopted June 13, 2024.

Midterm rounds offer six-month contracts for potential suppliers during a buyer-seller partnership of up to five years.

“In early 2024, with the effects of the energy crisis still not over, AggregateEU is introducing a different concept of mid-term tenders in order to address the growing demand for stability and predictability from buyers and sellers of natural gas”, the Directorate said February 1, 2024, announcing the first midterm tender.

“Under such tenders, buyers will be able to submit their demand for seasonal 6-month periods (for a minimum 1,800,000 MWh for LNG and 30,000 for NBP per period), going from April 2024 to October 2029. This is intended to support sellers in identifying buyers who might be interested in a longer trading partnership – i.e. up to 5 years.

“Mid-term tenders will not only increase security of supply but also help European industrial players increase their competitiveness”.

NBP gas, or National Balancing Point gas, refers to gas from the national transmission systems of EU states.

The first midterm round aggregated 34 Bcm of demand from 19 companies including industrial players. Offers totaled 97.4 Bcm, almost triple the demand, the Commission said February 28, 2024.

Seven rounds have been conducted under AggregateEU, pooling over 119 Bcm of demand and attracting 191 Bcm of offers. Nearly 100 Bcm has been matched, according to Wednesday’s results announcement.

AggregateEU, created under Council Regulation 2022/2576 of December 19, 2022, is part of the broader EU Energy Platform for coordinated purchases of gas and hydrogen. The Energy Platform was formed in 2022 as part of the REPowerEU strategy for achieving energy independence from Russia.
