Cognitive migration is underway. The station is crowded. Some have boarded while others hesitate, unsure whether the destination justifies the departure.

Future of work expert and Harvard University Professor Christopher Stanton commented recently that the uptake of AI has been tremendous and observed that it is an “extraordinarily fast-diffusing technology.” That speed of adoption and impact is a critical part of what differentiates the AI revolution from previous technology-led transformations, like the PC and the internet. Demis Hassabis, CEO of Google DeepMind, went further, predicting that AI could be “10 times bigger than the Industrial Revolution, and maybe 10 times faster.”

Intelligence, or at least thinking, is increasingly shared between people and machines. Some people have begun to regularly use AI in their workflows. Others have gone further, integrating it into their cognitive routines and creative identities. These are the “willing,” including the consultants fluent in prompt design, the product managers retooling systems and those building their own businesses that do everything from coding to product design to marketing.

For them, the terrain feels new but navigable. Exciting, even. But for many others, this moment feels strange, and more than a little unsettling. The risk they face is not just being left behind. It is not knowing how, when and whether to invest in AI, a future that seems highly uncertain and in which it is difficult to imagine their place. That is the double risk of AI readiness, and it is reshaping how people interpret the pace, promises and pressure of this transition.

Is it real?

Across industries, new roles and teams are forming, and AI tools are reshaping workflows faster than norms or strategies can keep up. But the significance is still hazy, the strategies unclear. The end game, if there is one, remains uncertain. Yet the pace and scope of change feel portentous. Everyone is being told to adapt, but few know exactly what that means or how far the changes will go. Some AI industry leaders claim huge changes are coming, and soon, with superintelligent machines emerging possibly within a few years.

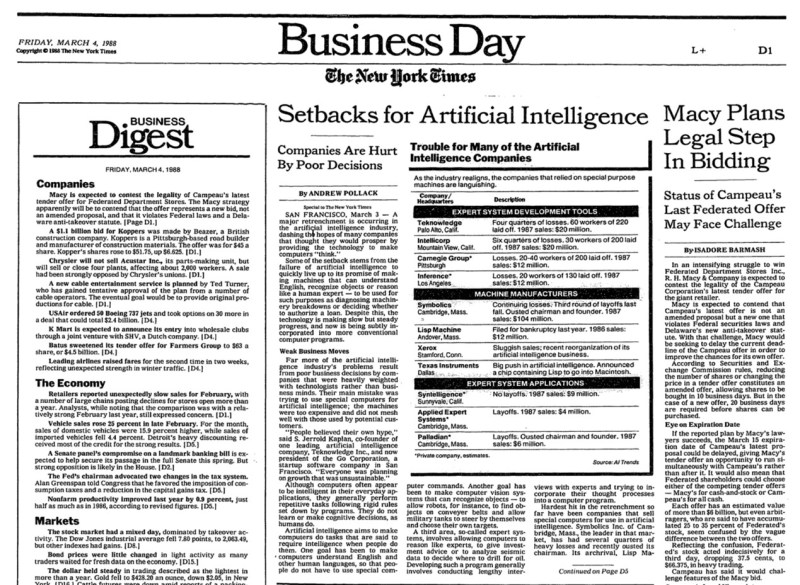

But maybe this AI revolution will go bust, as others have before, with another “AI winter” to follow. There have been two notable winters. The first was in the 1970s, brought about by computational limits. The second began in the late 1980s after a wave of unmet expectations with high-profile failures and under-delivery of “expert systems.” These winters were characterized by a cycle of lofty expectations followed by profound disappointment, leading to significant reductions in funding and interest in AI.

Should the excitement around AI agents today mirror the failed promise of expert systems, this could lead to another winter. However, there are major differences between then and now. Today, there is far greater institutional buy-in, consumer traction and cloud computing infrastructure than the expert systems of the 1980s ever enjoyed. There is no guarantee that a new winter will not emerge, but if the industry fails this time, it will not be for lack of money or momentum. It will be because trust and reliability broke first.

Cognitive migration has started

If “the great cognitive migration” is real, this remains the early part of the journey. Some have boarded the train while others still linger, unsure about whether or when to get on board. Amid the uncertainty, the atmosphere at the station has grown restless, like travelers sensing an itinerary change that no one has announced.

Most people have jobs, but they wonder about the degree of risk they face. The value of their work is shifting. A quiet but mounting anxiety hums beneath the surface of performance reviews and company town halls.

Already, AI is reported to accelerate software development by 10 to 100X, generate the majority of client-facing code and compress project timelines dramatically. Managers are now able to use AI to create employee performance evaluations. Even classicists and archaeologists have found value in AI, having used the technology to understand ancient Latin inscriptions.

The “willing” have an idea of where they are going and may find traction. But for the “pressured,” the “resistant” and even those not yet touched by AI, this moment feels like something between anticipation and grief. These groups have started to grasp that they may not be staying in their comfort zones for long.

For many, this is not just about tools or a new culture, but whether that culture has space for them at all. Waiting too long is akin to missing the train and could lead to long-term job displacement. Even those I have spoken with who are senior in their careers and have begun using AI wonder if their positions are threatened.

The narrative of opportunity and upskilling hides a more uncomfortable truth. For many, this is not a migration. It is a managed displacement. Some workers are not choosing to opt out of AI. They are discovering that the future being built does not include them. Belief in the tools is different from belonging in the system those tools are reshaping. And without a clear path to participate meaningfully, “adapt or be left behind” begins to sound less like advice and more like a verdict.

These tensions are precisely why this moment matters. There is a growing sense that work, as many have known it, is beginning to recede. The signals are coming from the top. Microsoft CEO Satya Nadella acknowledged as much in a July 2025 memo following a reduction in force, noting that the transition to the AI era “might feel messy at times, but transformation always is.” But there is another layer to this unsettling reality: The technology driving this urgent transformation remains fundamentally unreliable.

The power and the glitch: Why AI still cannot be trusted

And yet, for all the urgency and momentum, this increasingly pervasive technology itself remains glitchy, limited, strangely brittle and far from dependable. This raises a second layer of doubt, not only about how to adapt, but about whether the tools we are adapting to can deliver. Perhaps these shortcomings should not be a surprise, considering that only a few years ago the output from large language models (LLMs) was barely coherent. Now, however, it is like having a PhD in your pocket; the idea of on-demand ambient intelligence, once science fiction, is almost realized.

Beneath their polish, however, chatbots built atop these LLMs remain fallible, forgetful and often overconfident. They still hallucinate, meaning that we cannot entirely trust their output. AI can answer with confidence, but not accountability. That gap is probably a good thing, as it means our knowledge and expertise are still needed. These chatbots also lack persistent memory and have difficulty carrying a conversation forward from one session to another.

They can also get lost. Recently, I had a session with a leading chatbot, and it answered a question with a complete non-sequitur. When I pointed this out, it responded again off-topic, as if the thread of our conversation had simply vanished.

They also do not learn, at least not in any human sense. Once a model is released, whether by Google, Anthropic, OpenAI or DeepSeek, its weights are frozen. Its “intelligence” is fixed. Instead, continuity of a conversation with a chatbot is limited to the confines of its context window, which is, admittedly, quite large. Within that window and conversation, the chatbots can absorb knowledge and make connections that serve as learning in the moment, and they appear increasingly like savants.

These gifts and flaws add up to an intriguing, beguiling presence. But can we trust it? Surveys such as the 2025 Edelman Trust Barometer show that trust in AI is divided. In China, 72% of people express trust in AI. But in the U.S., that number drops to 32%. This divergence underscores how public faith in AI is shaped as much by culture and governance as by technical capability. If AI did not hallucinate, if it could remember, if it learned, if we understood how it worked, we would likely trust it more. But trust in the AI industry itself remains elusive. There are widespread fears that there will be no meaningful regulation of AI technology, and that ordinary people will have little say in how it is developed or deployed.

Without trust, will this AI revolution flounder and bring about another winter? And if so, what happens to those who have invested time, energy and their careers? Will those who have waited to embrace AI be better off for having done so? Will cognitive migration be a flop?

Some notable AI researchers have warned that AI in its current form — based primarily on deep learning neural networks upon which LLMs are built — will fall short of optimistic projections. They claim that additional technical breakthroughs will be needed for this approach to advance much further. Others do not buy into the optimistic AI projections. Novelist Ewan Morrison views the potential of superintelligence as a fiction dangled to attract investor funding. “It’s a fantasy,” he said, “a product of venture capital gone nuts.”

Perhaps Morrison’s skepticism is warranted. However, even with their shortcomings, today’s LLMs are already demonstrating huge commercial utility. If the exponential progress of the last few years stopped tomorrow, the ripples from what has already been created would still be felt for years to come. But beneath this movement lies something more fragile: The reliability of the tools themselves.

The gamble and the dream

For now, exponential advances continue as companies pilot and increasingly deploy AI. Whether driven by conviction or fear of missing out, the industry is determined to move forward. It could all fall apart if another winter arrives, especially if AI agents fail to deliver. Still, the prevailing assumption is that today’s shortcomings will be solved through better software engineering. And they might be. In fact, they probably will, at least to a degree.

The bet is that the technology will work, that it will scale and that the disruption it creates will be outweighed by the productivity it enables. Success in this adventure assumes that what we lose in human nuance, value and meaning will be made up for in reach and efficiency. This is the gamble we are making. And then there is the dream: AI will become a source of abundance widely shared, will elevate rather than exclude, and expand access to intelligence and opportunity rather than concentrate it.

The unsettling part lies in the gap between the two. We are moving forward as if taking this gamble will guarantee the dream. It is the hope that acceleration will land us in a better place, and the faith that it will not erode the human elements that make the destination worth reaching. But history reminds us that even successful bets can leave many behind. The “messy” transformation now underway is not just an inevitable side effect. It is the direct result of speed overwhelming human and institutional capacity to adapt effectively and with care. For now, cognitive migration continues, as much on faith as on belief.

The challenge is not just to build better tools, but to ask harder questions about where they are taking us. We are not just migrating to an unknown destination; we are doing it so fast that the map is changing while we run, moving across a landscape that is still being drawn. Every migration carries hope. But hope, unexamined, can be risky. It is time to ask not just where we are going, but who will get to belong when we arrive.

Gary Grossman is EVP of technology practice at Edelman and global lead of the Edelman AI Center of Excellence.