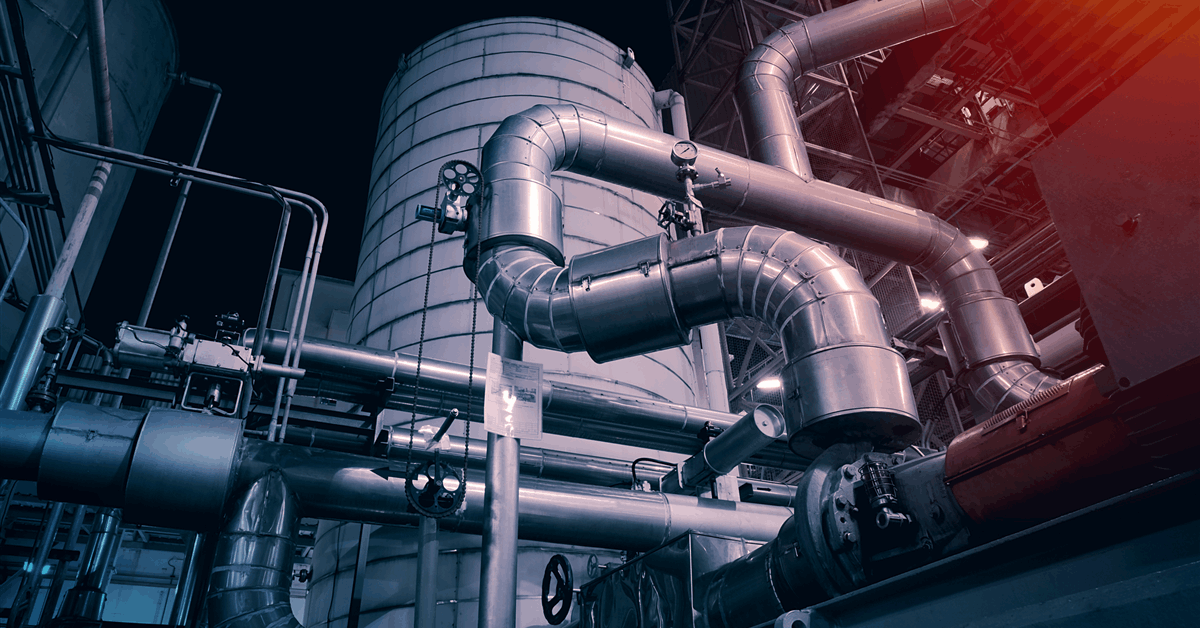

Aker BP ASA has extended its alliance agreement with SLB (Schlumberger Limited) and Stimwell Services Ltd. for another five years. The alliance, formed in 2019, has supported Aker BP’s operated assets in meeting production targets.

Aker BP said in a media release that the alliance will work on further accelerating and boosting oil production.

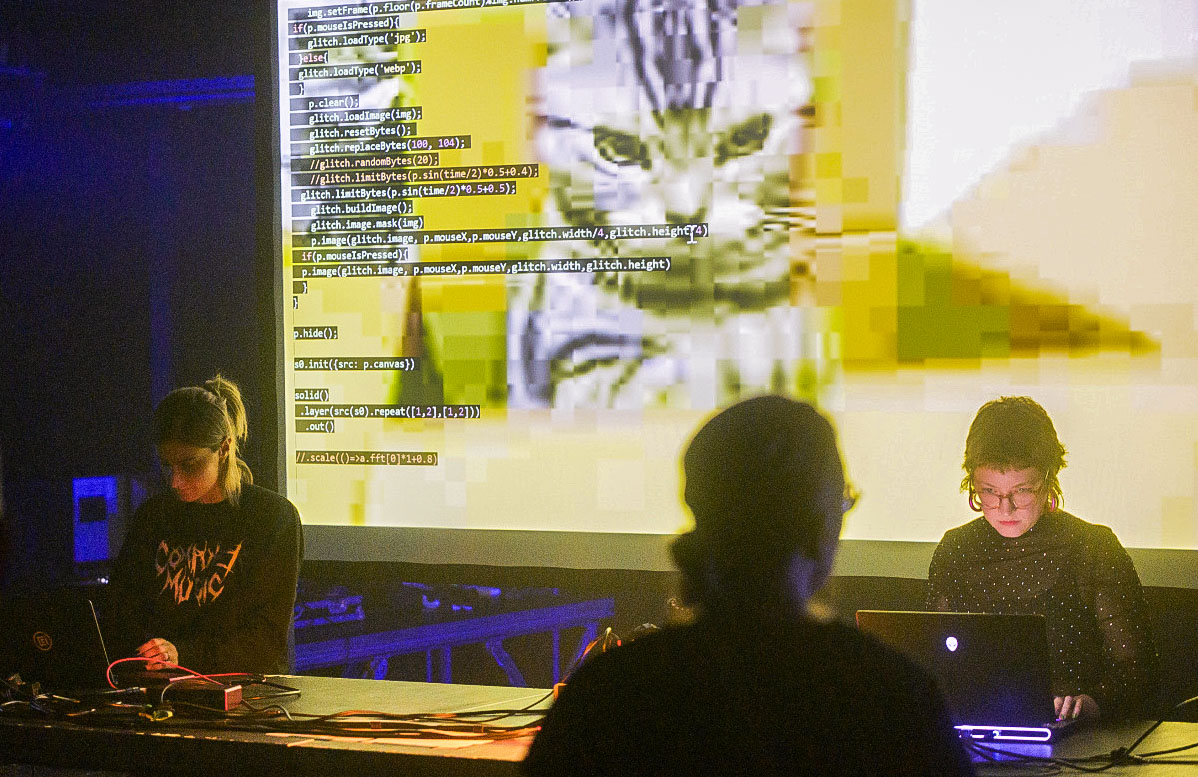

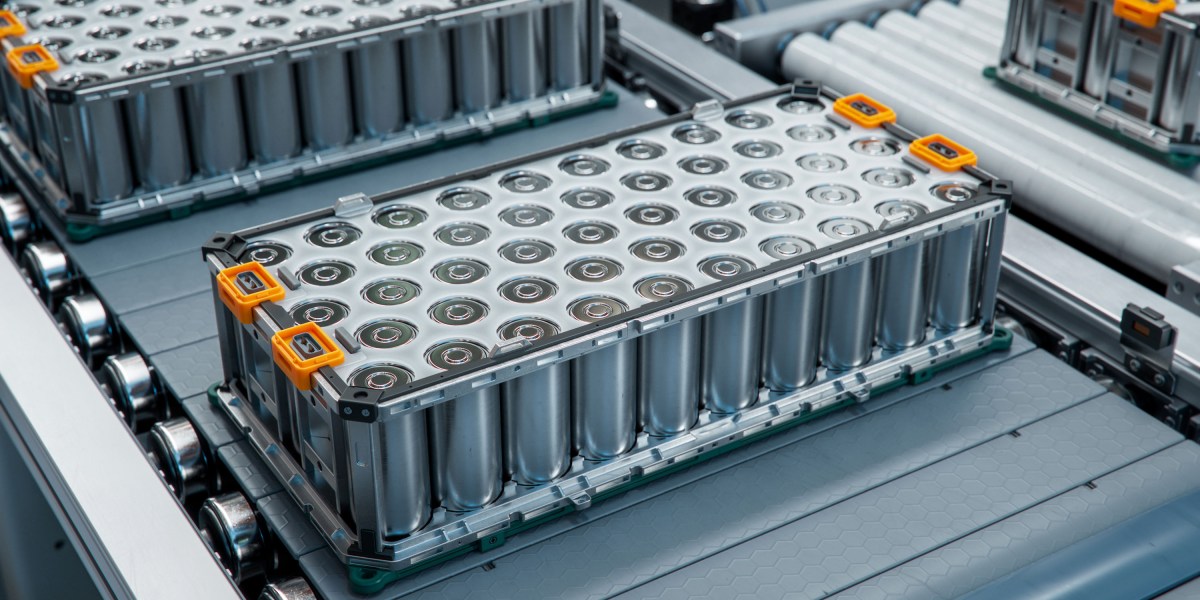

The alliance has established new standards for safe, efficient, and cost-effective operations through collaboration, digitalization, and innovative technology, according to Aker BP. Key achievements include simultaneous operations with jack-up rigs, a decreased backlog of locked-in barrels, and the first-ever autonomous intervention operation globally. Currently, both planning and execution utilize digital workflows, leading to enhanced productivity, reduced risk, and higher success rates, the company said.

“Strategic partnerships are essential to shaping the future of our industry. At Aker BP, we remain committed to the alliance model, which creates value through long-term collaboration. It enables us to increase productivity, maintain world-class performance, and deliver oil and gas with low cost and low emissions. This is how we position ourselves as the E&P company of the future”, Aker BP CEO Karl Johnny Hersvik said.

The alliance strategy aims to transform offshore well intervention. Over the next five years, the partners aim to deliver top-quartile performance while developing future-proof capabilities, enhancing digital integration between subsurface and operations, expanding Aker BP’s Integrated Operations Centre for remote operations, and accelerating new technology deployment, Aker BP added.

Furthermore, the alliance will focus on bringing wells onstream across Aker BP’s projects and will use a newly upgraded stimulation vessel to optimize the Valhall PWP wells, contributing significantly to future production.

“Increasing production and recovery from maturing assets is a top priority across the industry, and this alliance demonstrates how we can drive progress together through the power of partnership. The complex challenges facing our industry will increasingly require deep collaboration and trust across teams, and this alliance has been a cornerstone example of how powerful driving innovation together can be”, SLB CEO Olivier Le Peuch added.

“Stimulation has been critical in unlocking and increasing the recovery from tight reservoirs such as Valhall. During the last five years, the alliance working together managed to successfully develop very tight areas of the field by using innovative technology which significantly reduced the execution time and CO2 footprint and making it economic”, Sami Haidar, Managing Director at StimWell Services, said.
