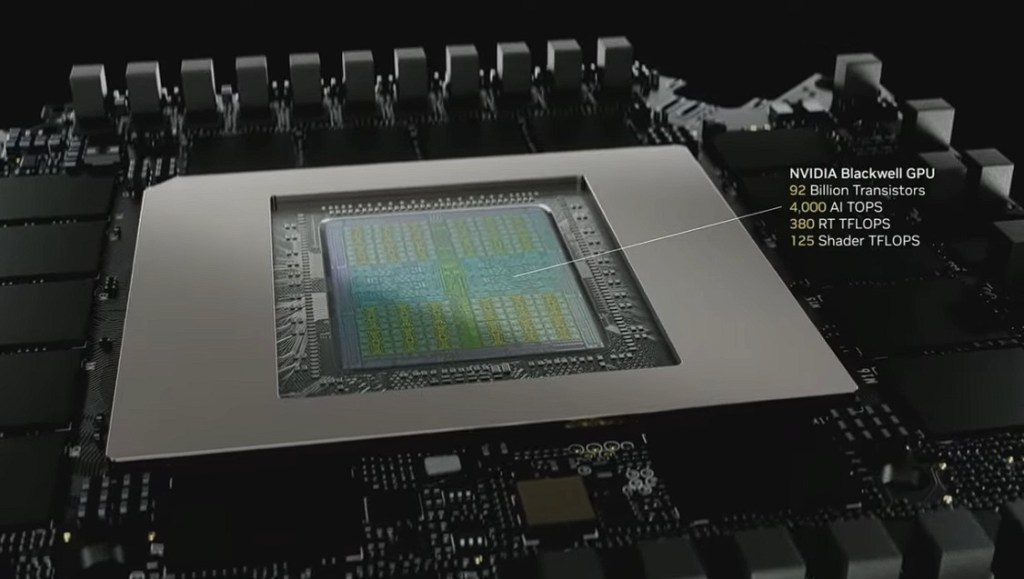

All of the large cloud service providers (CSPs) have developed dedicated silicon in house, which they offer as an alternative to commercially available chips at a “healthy discounted rate,” explained Matt Kimball, VP and principal analyst for data compute and storage at Moor Insights & Strategy.

For Amazon, that means the Trainium and Inferentia chips and the Graviton processor. AWS has been able to monetize its homegrown chips quite effectively and has been “wildly successful” with Graviton, he noted. The company has been the most focused and aggressive of the large CSPs in chasing the enterprise AI market with its own silicon.

“What AWS is doing is smart — it is telling the world that there is a cost-effective alternative that is also performant for AI training needs,” said Kimball. “It is inserting itself into the AI conversation.”

AI inferencing geared to the everyday enterprise

While Nvidia continues to raise the bar on AI performance with its latest chips, that success is like a set of golden handcuffs, said Nguyen. That is, the company doesn’t have much incentive to create lower-end chips for smaller workloads, which creates an opportunity for Amazon and others to gain footholds in these adjacent markets.

“This serves to fill underneath,” said Nguyen. “Purpose-built training chips are a nice to have for the open market.”

These chips can make chain-of-thought (CoT) and reasoning models, in which AI systems work through each step before providing an output, accessible to more companies, essentially democratizing AI, he said.