
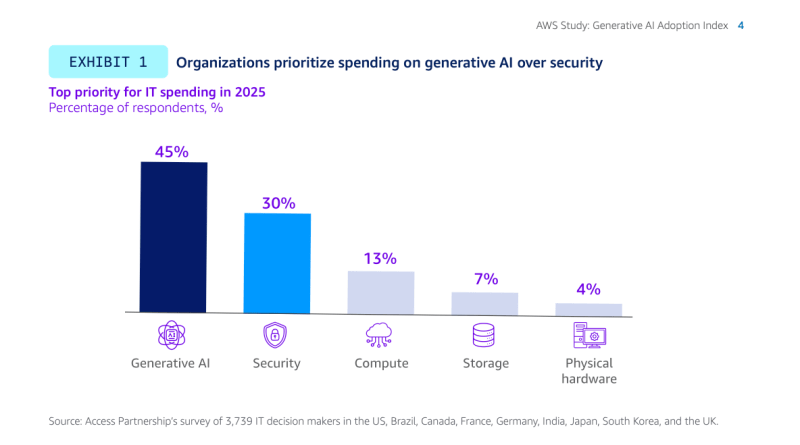

Generative AI tools have surpassed cybersecurity as the top budget priority for global IT leaders heading into 2025, according to a comprehensive new study released today by Amazon Web Services.

The AWS Generative AI Adoption Index, which surveyed 3,739 senior IT decision makers across nine countries, reveals that 45% of organizations plan to prioritize generative AI spending over traditional IT investments such as security tools (30%). The result marks a significant shift in corporate technology strategies as businesses race to capitalize on AI’s transformative potential.

“I don’t think it’s cause for concern,” said Rahul Pathak, Vice President of Generative AI and AI/ML Go-to-Market at AWS, in an exclusive interview with VentureBeat. “The way I interpret that is that customers’ security remains a massive priority. What we’re seeing with AI being such a major item from a budget prioritization perspective is that customers are seeing so many use cases for AI. It’s really that there’s a broad need to accelerate adoption of AI that’s driving that particular outcome.”

The extensive survey, conducted across the United States, Brazil, Canada, France, Germany, India, Japan, South Korea, and the United Kingdom, shows that generative AI adoption has reached a critical inflection point, with 90% of organizations now deploying these technologies in some capacity. More tellingly, 44% have already moved beyond the experimental phase into production deployment.

60% of companies have already appointed Chief AI Officers as C-suite transforms for the AI era

As AI initiatives scale across organizations, new leadership structures are emerging to manage the complexity. The report found that 60% of organizations have already appointed a dedicated AI executive, such as a Chief AI Officer (CAIO), with another 26% planning to do so by 2026.

This executive-level commitment reflects growing recognition of AI’s strategic importance, though the study notes that nearly one-quarter of organizations will still lack formal AI transformation strategies by 2026, suggesting potential challenges in change management.

“A thoughtful change management strategy will be critical,” the report emphasizes. “The ideal strategy should address operating model changes, data management practices, talent pipelines, and scaling strategies.”

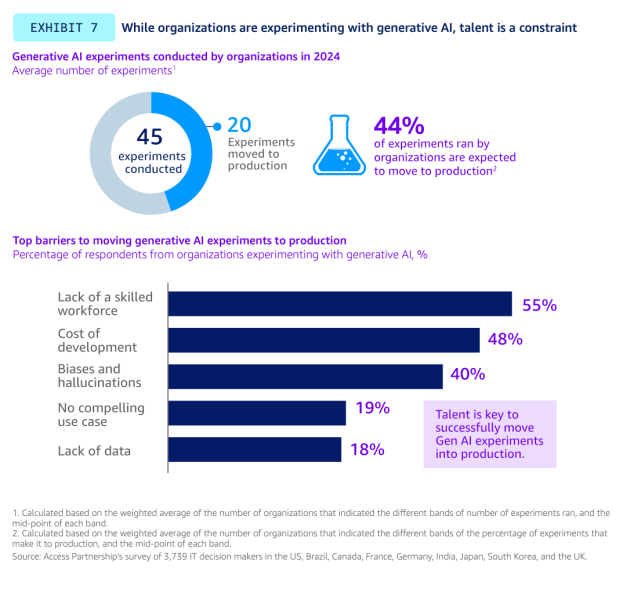

Companies average 45 AI experiments but only 20 will reach users in 2025: the production gap challenge

Organizations conducted an average of 45 AI experiments in 2024, but only about 20 of them (roughly 44%) are expected to reach end users in 2025, highlighting persistent implementation challenges.

“For me to see over 40% going into production for something that’s relatively new, I actually think is pretty rapid and high success rate from an adoption perspective,” Pathak noted. “That said, I think customers are absolutely using AI in production at scale, and I think we want to obviously see that continue to accelerate.”

The report identified talent shortages as the primary barrier to transitioning experiments into production, with 55% of respondents citing the lack of a skilled generative AI workforce as their biggest challenge.

“I’d say another big piece that’s an unlock to getting into production successfully is customers really working backwards from what business objectives they’re trying to drive, and then also understanding how will AI interact with their data,” Pathak told VentureBeat. “It’s really when you combine the unique insights you have about your business and your customers with AI that you can drive a differentiated business outcome.”

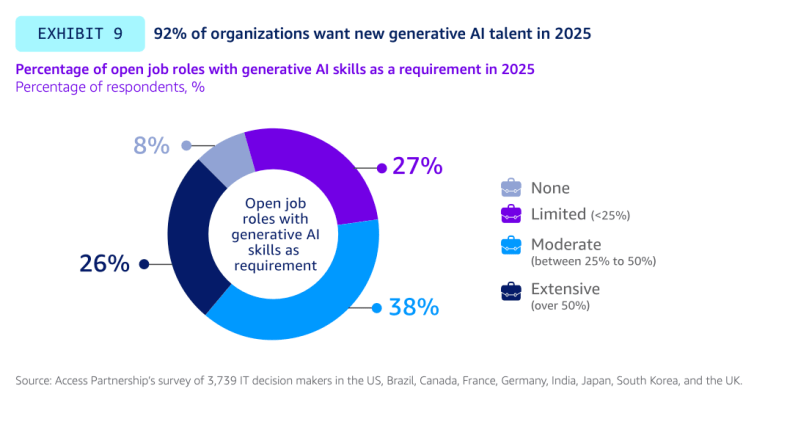

92% of organizations will hire AI talent in 2025 while 75% implement training to bridge skills gap

To address the skills gap, organizations are pursuing dual strategies of internal training and external recruitment. The survey found that 56% of organizations have already developed generative AI training plans, with another 19% planning to do so by the end of 2025.

“For me, it’s clear that it’s top of mind for customers,” Pathak said regarding the talent shortage. “It’s, how do we make sure that we bring our teams along and employees along and get them to a place where they’re able to maximize the opportunity.”

Rather than specific technical skills, Pathak emphasized adaptability: “I think it’s more about, can you commit to sort of learning how to use AI tools so you can build them into your day-to-day workflow and keep that agility? I think that mental agility will be important for all of us.”

The talent push extends beyond training to aggressive hiring, with 92% of organizations planning to recruit for roles requiring generative AI expertise in 2025. In a quarter of organizations, at least 50% of new positions will require these skills.

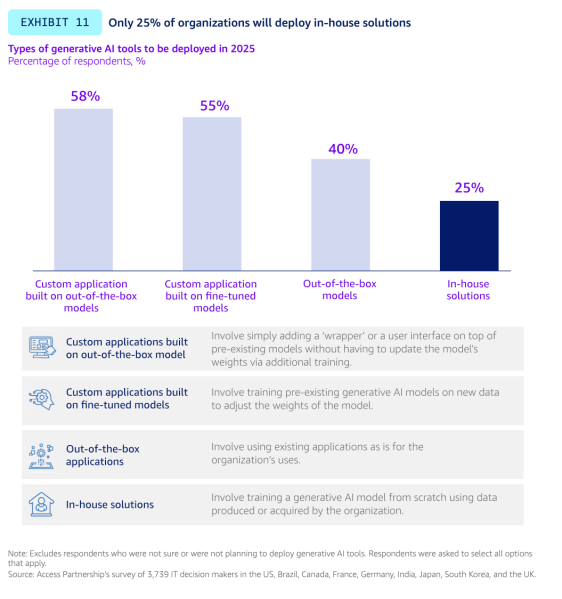

Financial services joins hybrid AI revolution: only 25% of companies building solutions from scratch

The long-running debate over whether to build proprietary AI solutions or leverage existing models appears to be resolving in favor of a hybrid approach. Only 25% of organizations plan to deploy solutions developed in-house from scratch, while 58% intend to build custom applications on pre-existing models and 55% will develop applications on fine-tuned models.

This represents a notable shift for industries traditionally known for custom development. The report found that 44% of financial services firms plan to use out-of-the-box solutions — a departure from their historical preference for proprietary systems.

“Many select customers are still building their own models,” Pathak explained. “That being said, I think there’s so much capability and investment that’s gone into core foundation models that there are excellent starting points, and we’ve worked really hard to make sure customers can be confident that their data is protected. Nothing leaks into the models. Anything they do for fine-tuning or customization is private and remains their IP.”

He added that companies can still leverage their proprietary knowledge while using existing foundation models: “Customers realize that they can get the benefits of their proprietary understanding of the world with things like RAG [Retrieval-Augmented Generation] and customization and fine-tuning and model distillation.”

India leads in prioritizing generative AI investment at 64%, with South Korea following at 54%, outpacing Western markets

While generative AI investment is a global trend, the study revealed regional variations in how many organizations rank it as a top priority. In the U.S., 44% of organizations are prioritizing generative AI investments, in line with the global average of 45%, while India (64%) and South Korea (54%) reported significantly higher rates.

“We are seeing massive adoption around the world,” Pathak observed. “I thought it was interesting that there was a relatively high amount of consistency on the global side. I think we did see in our respondents that, if you squint at it, I think we’ve seen India maybe slightly ahead, other parts slightly behind the average, and then kind of the U.S. right on line.”

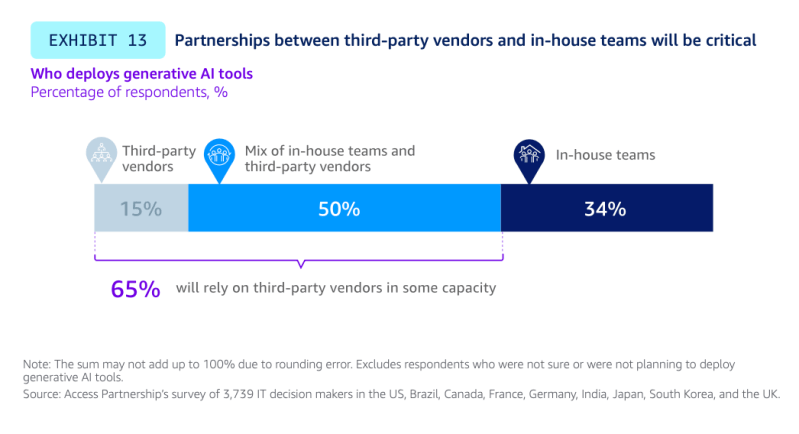

65% of organizations will rely on third-party vendors to accelerate AI implementation in 2025

As organizations navigate the complex AI landscape, they increasingly rely on external expertise. The report found that 65% of organizations will depend on third-party vendors to some extent in 2025, with 15% planning to rely solely on vendors and 50% adopting a mixed approach combining in-house teams and external partners.

“For us, it’s very much an ‘and’ type of relationship,” Pathak said of AWS’s approach to supporting both custom and pre-built solutions. “We want to meet customers where they are. We’ve got a huge partner ecosystem we’ve invested in from a model provider perspective, so Anthropic and Meta, Stability, Cohere, etc. We’ve got a big partner ecosystem of ISVs. We’ve got a big partner ecosystem of service providers and system integrators.”

The imperative to act now or risk being left behind

For organizations still hesitant to embrace generative AI, Pathak offered a stark warning: “I really think customers should be leaning in, or they’re going to risk getting left behind by their peers who are. The gains that AI can provide are real and significant.”

He emphasized the accelerating pace of innovation in the field: “The rate of change and the rate of improvement of AI technology and the rate of the reduction of things like the cost of inference are significant and will continue to be rapid. Things that seem impossible today will seem like old news in probably just three to six months.”

This sentiment is echoed in the widespread adoption across sectors. “We see such a rapid, such a mass breadth of adoption,” Pathak noted. “Regulated industries, financial services, healthcare, we see governments, large enterprise, startups. The current crop of startups is almost exclusively AI-driven.”

The business-first approach to AI success

The AWS report paints a portrait of generative AI’s rapid evolution from cutting-edge experiment to fundamental business infrastructure. As organizations shift budget priorities, restructure leadership teams, and race to secure AI talent, the data suggests we’ve reached a decisive tipping point in enterprise AI adoption.

Yet amid the technological gold rush, the most successful implementations will likely come from organizations that maintain a relentless focus on business outcomes rather than technological novelty. As Pathak emphasized, “AI is a powerful tool, but you got to start with your business objective. What are you trying to accomplish as an organization?”

In the end, the companies that thrive won’t necessarily be those with the biggest AI budgets or the most advanced models, but those that most effectively harness AI to solve real business problems with their unique data assets. In this new competitive landscape, the question is no longer whether to adopt AI, but how quickly organizations can transform AI experiments into tangible business advantage before their competitors do.
