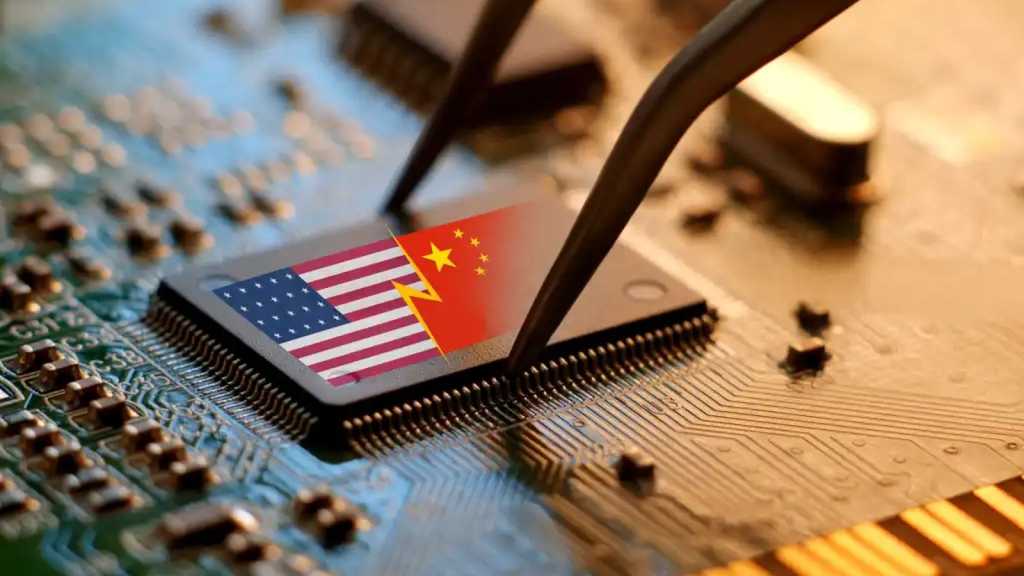

The proposed legislation would require export-controlled advanced chips to be equipped with location verification mechanisms within six months of enactment, and would require exporters to report to the Bureau of Industry and Security if products are diverted or tampered with.

The CAC statement cited “demands from US lawmakers to add tracking features to advanced chips” and noted that “US artificial intelligence experts have indicated that remote control technologies related to Nvidia’s chips have matured.”

Nvidia denials fall short, China says

The People’s Daily referenced Nvidia’s previous statement that “Cybersecurity is critically important to us. Nvidia does not have ‘backdoors’ in our chips that would give anyone a remote way to access or control them.” However, the state media outlet dismissed this response as insufficient, emphasizing that only “convincing security evidence” would restore trust.

The chipmaker faces pressure to balance US security requirements with Chinese market demands. US Commerce Secretary Howard Lutnick described the H20 as Nvidia’s “fourth best” processor when announcing the resumption of exports: “We don’t sell them our best stuff, not our second best stuff, not even our third best.”

The H20 chip is part of Nvidia’s China-specific product line, engineered to comply with US trade restrictions by reducing performance while retaining sufficient processing power for Chinese customers. It is based on Nvidia’s Hopper architecture but has trimmed specifications.

Enterprise IT faces chip procurement challenges

The confrontation highlights tensions over semiconductor supply chains critical to enterprise AI deployments. The People’s Daily noted that “cybersecurity not only impacts our daily lives but also acts as the lifeblood of businesses, and is directly linked to national security.”