Every day, billions of people trust digital systems to run everything from communication to commerce to critical infrastructure. But the global early warning system that alerts security teams to dangerous software flaws is showing critical gaps in coverage—and most users have no idea their digital lives are likely becoming more vulnerable.

Over the past eighteen months, two pillars of global cybersecurity have flirted with apparent collapse. In February 2024, the US-backed National Vulnerability Database (NVD)—relied on globally for its free analysis of security threats—abruptly stopped publishing new entries, citing a cryptic “change in interagency support.” Then, in April of this year, the Common Vulnerabilities and Exposures (CVE) program, the fundamental numbering system for tracking software flaws, seemed at similar risk: A leaked letter warned of an imminent contract expiration.

Cybersecurity practitioners have since flooded Discord channels and LinkedIn feeds with emergency posts and memes of “NVD” and “CVE” engraved on tombstones. Unpatched vulnerabilities are the second most common way cyberattackers break in, and they have led to fatal hospital outages and critical infrastructure failures. In a social media post, Jen Easterly, a US cybersecurity expert, said: “Losing [CVE] would be like tearing out the card catalog from every library at once—leaving defenders to sort through chaos while attackers take full advantage.” If CVEs identify each vulnerability like a book in a card catalog, NVD entries provide the detailed review with context around severity, scope, and exploitability.

In the end, the Cybersecurity and Infrastructure Security Agency (CISA) extended funding for CVE for another year, attributing the incident to a “contract administration issue.” But the NVD’s story has proved more complicated. Its parent organization, the National Institute of Standards and Technology (NIST), reportedly saw its budget cut by roughly 12% in 2024, right around the time that CISA pulled its $3.7 million in annual funding for the NVD. Shortly after, as the backlog grew, CISA launched its own “Vulnrichment” program to help address the analysis gap, while promoting a more distributed approach that allows multiple authorized partners to publish enriched data.

“CISA continuously assesses how to most effectively allocate limited resources to help organizations reduce the risk of newly disclosed vulnerabilities,” says Sandy Radesky, the agency’s associate director for vulnerability management. She emphasizes that Vulnrichment was established not just to fill the gap but to provide unique additional information, like recommended actions for specific stakeholders, and to “reduce dependency of the federal government’s role to be the sole provider of vulnerability enrichment.”

Meanwhile, NIST has scrambled to hire contractors to help clear the backlog. Although processing has returned to pre-crisis levels, a boom in vulnerabilities newly disclosed to the NVD has outpaced these efforts. Currently, over 25,000 vulnerabilities await processing, nearly 10 times the previous high in 2017, according to data from software company Anchore. Before the current crisis, the NVD largely kept pace with CVE publications, maintaining a minimal backlog.

“Things have been disruptive, and we’ve been going through times of change across the board,” Matthew Scholl, then chief of the computer security division in NIST’s Information Technology Laboratory, said at an industry event in April. “Leadership has assured me and everyone that NVD is and will continue to be a mission priority for NIST, both in resourcing and capabilities.” Scholl left NIST in May after 20 years at the agency, and NIST declined to comment on the backlog.

The situation has now prompted multiple government actions, with the Department of Commerce launching an audit of the NVD in May and House Democrats calling for a broader probe of both programs in June. But the damage to trust is already transforming geopolitics and supply chains as security teams prepare for a new era of cyber risk. “It’s left a bad taste, and people are realizing they can’t rely on this,” says Rose Gupta, who builds and runs enterprise vulnerability management programs. “Even if they get everything together tomorrow with a bigger budget, I don’t know that this won’t happen again. So I have to make sure I have other controls in place.”

As these public resources falter, organizations and governments are confronting a critical weakness in our digital infrastructure: Essential global cybersecurity services depend on a complex web of US agency interests and government funding that can be cut or redirected at any time.

Security haves and have-nots

What began as a trickle of software vulnerabilities in the early internet era has become an unstoppable avalanche, and the free databases that have tracked them for decades have struggled to keep up. In early July, the CVE database surpassed 300,000 catalogued vulnerabilities. Numbers jump unpredictably each year, sometimes by 10% or much more. Even before its latest crisis, the NVD was notorious for delayed publication of new vulnerability analyses, often trailing private security software and vendor advisories by weeks or months.

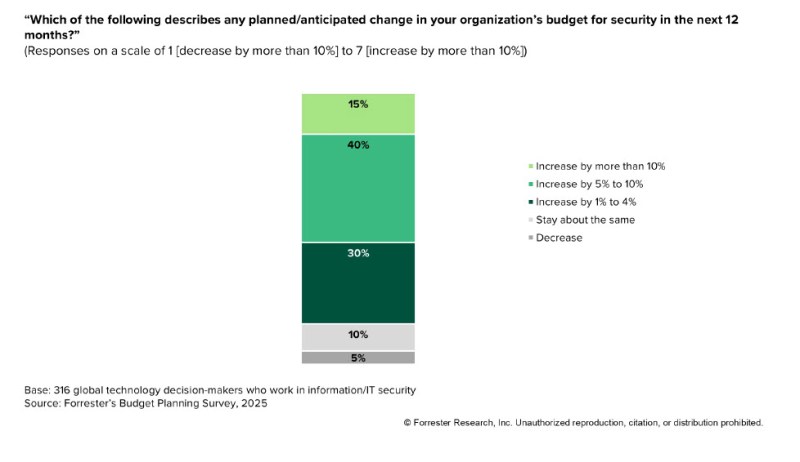

Gupta has watched organizations increasingly adopt commercial vulnerability management (VM) software that includes its own threat intelligence services. “We’ve definitely become over-reliant on our VM tools,” she notes, describing security teams’ growing dependence on vendors like Qualys, Rapid7, and Tenable to supplement or replace unreliable public databases. These platforms combine their own research with various data sources to create proprietary risk scores that help teams prioritize fixes. But not all organizations can afford to fill the NVD’s gap with premium security tools. “Smaller companies and startups, already at a disadvantage, are going to be more at risk,” she explains.

Komal Rawat, a security engineer in New Delhi whose mid-stage cloud startup has a limited budget, describes the impact in stark terms: “If NVD goes, there will be a crisis in the market. Other databases are not that popular, and to the extent they are adopted, they are not free. If you don’t have recent data, you’re exposed to attackers who do.”

The growing backlog means new devices could be more likely to have vulnerability blind spots—whether that’s a Ring doorbell at home or an office building’s “smart” access control system. The biggest risk may be “one-off” security flaws that fly under the radar. “There are thousands of vulnerabilities that will not affect the majority of enterprises,” says Gupta. “Those are the ones that we’re not getting analysis on, which would leave us at risk.”

NIST acknowledges it has limited visibility into which organizations are most affected by the backlog. “We don’t track which industries use which products and therefore cannot measure impact to specific industries,” a spokesperson says. Instead, the team prioritizes vulnerabilities on the basis of CISA’s known exploits list and those included in vendor advisories like Microsoft Patch Tuesday.
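The triage rule NIST describes can be pictured as a simple ordering over the backlog. The sketch below is only an illustration, not NIST's actual tooling: the `BacklogEntry` type, the `prioritize` function, and the placeholder identifiers are all hypothetical, and ranking known-exploited flaws ahead of vendor-advisory flaws is an assumption, since NIST does not say how it weighs the two.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BacklogEntry:
    """One vulnerability awaiting analysis (hypothetical record shape)."""
    cve_id: str
    in_vendor_advisory: bool = False


def prioritize(backlog, known_exploited_ids):
    """Order entries: known-exploited first, then vendor-advisory, then the rest.

    The relative order of the first two tiers is an assumption made for
    illustration. sorted() is stable, so entries within a tier keep their
    original order.
    """
    def rank(entry):
        if entry.cve_id in known_exploited_ids:
            return 0
        if entry.in_vendor_advisory:
            return 1
        return 2

    return sorted(backlog, key=rank)


# Placeholder identifiers, not real CVE records.
backlog = [
    BacklogEntry("EXAMPLE-0001"),
    BacklogEntry("EXAMPLE-0002", in_vendor_advisory=True),
    BacklogEntry("EXAMPLE-0003"),
]
ordered = prioritize(backlog, known_exploited_ids={"EXAMPLE-0003"})
print([entry.cve_id for entry in ordered])
```

Under a rule like this, everything in the last tier waits, and that tier is where the “one-off” flaws Gupta describes would tend to sit.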

The biggest vulnerability

Brian Martin has watched this system evolve—and deteriorate—from the inside. A former CVE board member and an original project leader behind the Open Source Vulnerability Database, he has built a combative reputation over the decades as a leading historian and practitioner. Martin says his current project, VulnDB (part of Flashpoint Security), outperforms the official databases he once helped oversee. “Our team processes more vulnerabilities, at a much faster turnaround, and we do it for a fraction of the cost,” he says, referring to the tens of millions in government contracts that support the current system.

When we spoke in May, Martin said his database contains more than 112,000 vulnerabilities with no CVE identifiers—security flaws that exist in the wild but remain invisible to organizations that rely solely on public channels. “If you gave me the money to triple my team, that non-CVE number would be in the 500,000 range,” he said.

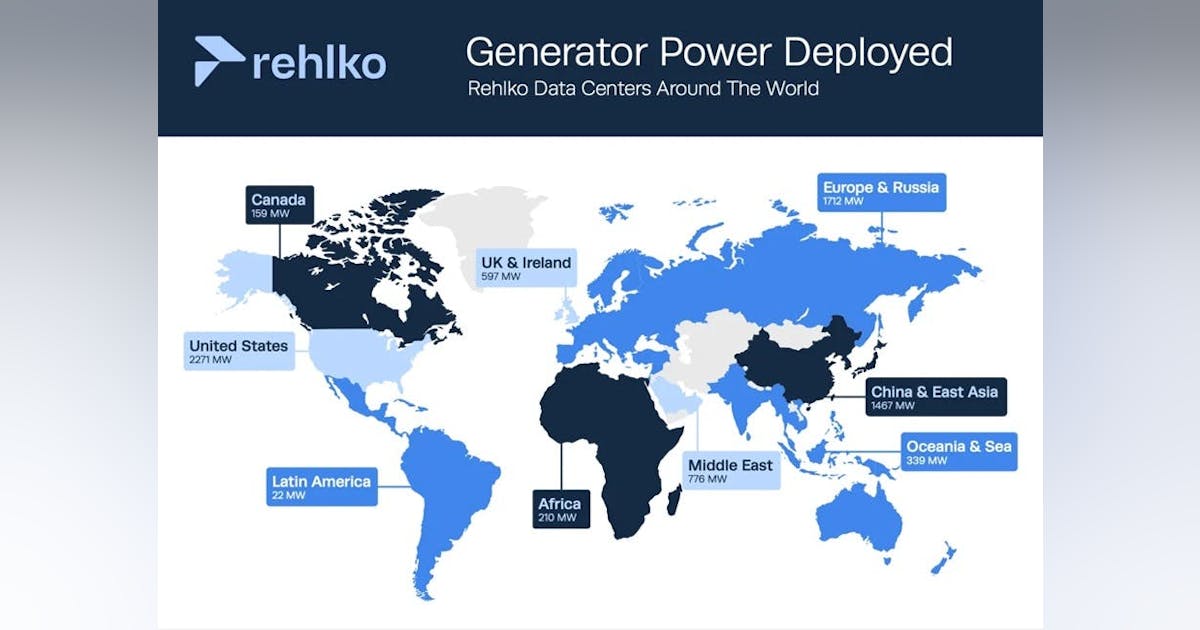

In the US, official vulnerability management duties are split among a web of contractors, agencies, and nonprofit centers like the Mitre Corporation. Critics like Martin say that creates potential for redundancy, confusion, and inefficiency, with layers of middle management and relatively few actual vulnerability experts. Others defend the value of this fragmentation. “These programs build on or complement each other to create a more comprehensive, supportive, and diverse community,” CISA said in a statement. “That increases the resilience and usefulness of the entire ecosystem.”

As American leadership wavers, other nations are stepping up. China now operates multiple vulnerability databases, some surprisingly robust but tainted by the possibility that they are subject to state control. In May, the European Union accelerated the launch of its own database, as well as a decentralized “Global CVE” architecture. Following social media and cloud services, vulnerability intelligence has become another front in the contest for technological independence.

That leaves security professionals to navigate multiple, potentially conflicting sources of data. “It’s going to be a mess, but I would rather have too much information than none at all,” says Gupta, describing how her team monitors multiple databases despite the added complexity.

Resetting software liability

As defenders adapt to the fragmenting landscape, the tech industry faces another reckoning: Why don’t software vendors carry more responsibility for protecting their customers from security issues? Major vendors routinely disclose—but don’t necessarily patch—thousands of new vulnerabilities each year. A single exposure could crash critical systems or increase the risks of fraud and data misuse.

For decades, the industry has hidden behind legal shields. “Shrink-wrap licenses” once forced consumers to broadly waive their right to hold software vendors liable for defects. Today’s end-user license agreements (EULAs), often delivered in pop-up browser windows, have evolved into incomprehensibly long documents. Last November, a lab project called “EULAS of Despair” used the length of War and Peace (587,287 words) to measure these sprawling contracts. The worst offender? Twitter, at 15.83 novels’ worth of fine print.

“This is a legal fiction that we’ve created around this whole ecosystem, and it’s just not sustainable,” says Andrea Matwyshyn, a US special advisor and technology law professor at Penn State University, where she directs the Policy Innovation Lab of Tomorrow. “Some people point to the fact that software can contain a mix of products and services, creating more complex facts. But just like in engineering or financial litigation, even the most messy scenarios can be resolved with the assistance of experts.”

This liability shield is finally beginning to crack. In July 2024, a faulty security update in CrowdStrike’s popular endpoint detection software crashed millions of Windows computers worldwide and caused outages at everything from airlines to hospitals to 911 systems. The incident led to billions in estimated damages, and the city of Portland, Oregon, even declared a “state of emergency.” Now, affected companies like Delta Air Lines have hired high-priced attorneys to pursue major damages, a sign that the floodgates to litigation are opening.

Despite the soaring number of vulnerabilities, many fall into long-established categories, such as SQL injections that tamper with database queries and buffer overflows that can allow code to be executed remotely. Matwyshyn advocates for a mandatory “software bill of materials,” or SBOM, an ingredients list that would let organizations understand what components and potential vulnerabilities exist throughout their software supply chains. One recent report found that 30% of data breaches stemmed from the vulnerabilities of third-party software vendors or cloud service providers.

She adds: “When you can’t tell the difference between the companies that are cutting corners and a company that has really invested in doing right by their customers, that results in a market where everyone loses.”

CISA leadership shares this sentiment, with a spokesperson emphasizing its “secure-by-design principles,” such as “making essential security features available without additional cost, eliminating classes of vulnerabilities, and building products in a way that reduces the cybersecurity burden on customers.”
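To make the “ingredients list” idea concrete, here is a minimal sketch of how a software bill of materials might be checked against a list of known flaws. It is an illustration under stated assumptions, not any standard's format or a real tool's API: the `Component` type, the `affected_components` function, the advisory layout, and every name and version below are hypothetical, and real matching would need to handle version ranges rather than exact strings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    """One 'ingredient' in a product: a named dependency at a fixed version."""
    name: str
    version: str


def affected_components(sbom, advisories):
    """Return (component, advisory_id) pairs where a component matches exactly.

    `advisories` maps an advisory ID to (package name, set of affected
    versions). This layout is a simplifying assumption for the sketch.
    """
    hits = []
    for component in sbom:
        for advisory_id, (name, versions) in advisories.items():
            if component.name == name and component.version in versions:
                hits.append((component, advisory_id))
    return hits


# Made-up inventory and advisory data, for illustration only.
sbom = [Component("examplelib", "1.2.0"), Component("otherlib", "3.4.1")]
advisories = {"EXAMPLE-ADV-1": ("examplelib", {"1.1.0", "1.2.0"})}

for component, advisory_id in affected_components(sbom, advisories):
    print(f"{component.name} {component.version} is affected by {advisory_id}")
```

The lookup is only as good as the advisory data behind it, which is why a stalled public database would blunt even a mandatory ingredients list.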

Avoiding a digital ‘dark age’

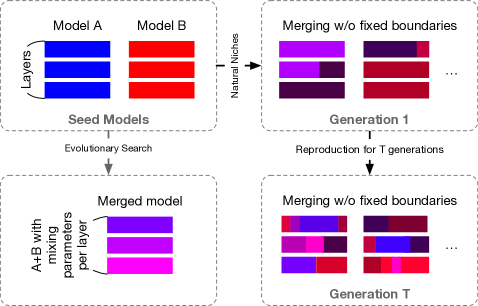

It will likely come as no surprise that practitioners are looking to AI to help fill the gap, while at the same time preparing for a coming swarm of cyberattacks by AI agents. Security researchers have used an OpenAI model to discover new “zero-day” vulnerabilities. And both the NVD and CVE teams are developing “AI-powered tools” to help streamline data collection, identification, and processing. NIST says that “up to 65% of our analysis time has been spent generating CPEs”—product information codes that pinpoint affected software. If AI can solve even part of this tedious process, it could dramatically speed up the analysis pipeline.

But Martin cautions against optimism around AI, noting that the technology remains unproven and often riddled with inaccuracies—which, in security, can be fatal. “Rather than AI or ML [machine learning], there are ways to strategically automate bits of the processing of that vulnerability data while ensuring 99.5% accuracy,” he says.

AI also fails to address more fundamental challenges in governance. The CVE Foundation, launched in April 2025 by breakaway board members, proposes a globally funded nonprofit model similar to that of the internet’s addressing system, which transitioned from US government control to international governance. Other security leaders are pushing to revitalize open-source alternatives like Google’s OSV Project or the NVD++ (maintained by VulnCheck), which are accessible to the public but currently have limited resources.

As these various reform efforts gain momentum, the world is waking up to the fact that vulnerability intelligence—like disease surveillance or aviation safety—requires sustained cooperation and public investment. Without it, a patchwork of paid databases will be all that remains, threatening to leave all but the richest organizations and nations permanently exposed.

Matthew King is a technology and environmental journalist based in New York. He previously worked for cybersecurity firm Tenable.