
The recent takedown of DanaBot, a Russian malware platform that infected more than 300,000 systems and caused more than $50 million in damage, highlights how agentic AI is redefining cybersecurity operations. According to a recent Lumen Technologies post, DanaBot maintained an average of 150 active command-and-control (C2) servers per day, with roughly 1,000 daily victims across more than 40 countries.

Last week, the U.S. Department of Justice unsealed a federal indictment in Los Angeles against 16 defendants allegedly tied to DanaBot, a Russia-based malware-as-a-service (MaaS) operation responsible for orchestrating massive fraud schemes, enabling ransomware attacks and inflicting tens of millions of dollars in financial losses on victims.

DanaBot first emerged in 2018 as a banking trojan but quickly evolved into a versatile cybercrime toolkit capable of executing ransomware, espionage and distributed denial-of-service (DDoS) campaigns. The toolkit’s ability to deliver precise attacks on critical infrastructure has made it a favorite of state-sponsored Russian adversaries with ongoing cyber operations targeting Ukrainian electric power and water utilities.

DanaBot sub-botnets have been directly linked to Russian intelligence activities, illustrating the blurring boundaries between financially motivated cybercrime and state-sponsored espionage. DanaBot’s operators, SCULLY SPIDER, faced minimal domestic pressure from Russian authorities, reinforcing suspicions that the Kremlin either tolerated or leveraged their activities as a cyber proxy.

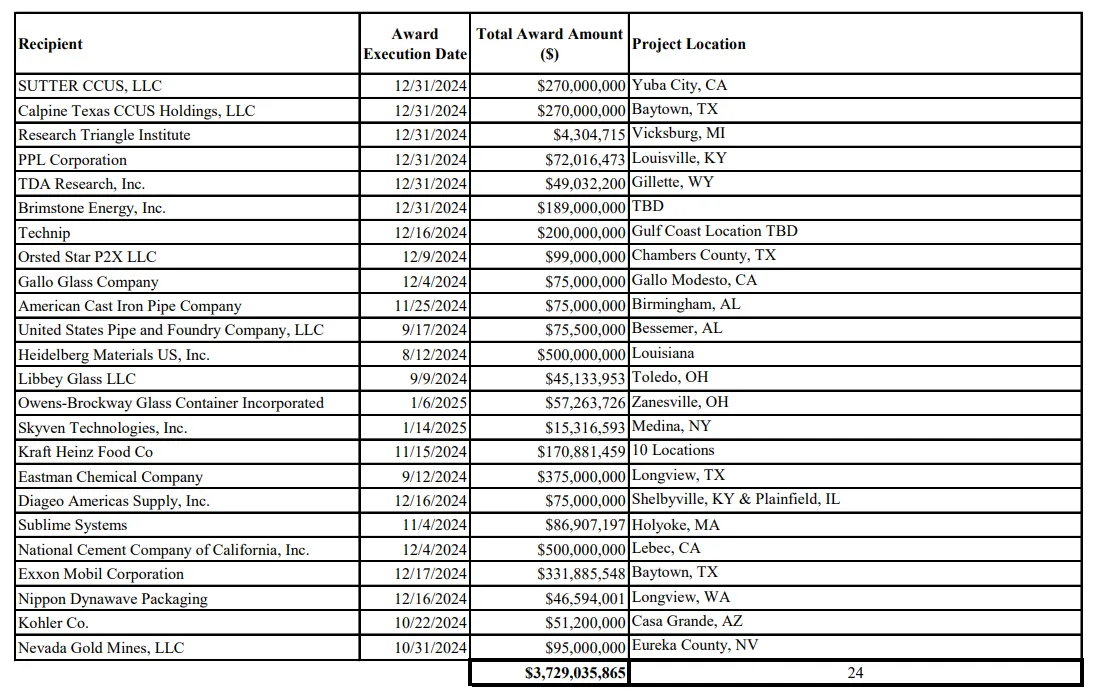

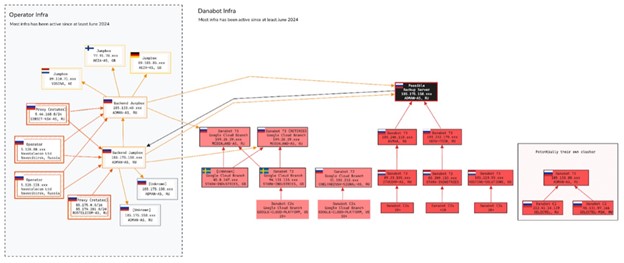

As illustrated in the figures above, DanaBot’s operational infrastructure involved complex and dynamically shifting layers of bots, proxies, loaders and C2 servers, making traditional manual analysis impractical.
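To make the layering concrete, here is a minimal sketch of why it lends itself to automated analysis: if observed connections are modeled as a directed graph, a breadth-first walk from any infected bot surfaces the C2 servers hidden behind the proxy and loader tiers. The node names, tier labels and edges below are hypothetical; the article does not describe how DanaBot’s tiers actually connect, and this is not presented as the method Black Lotus Labs or CrowdStrike used.

```python
from collections import deque


def upstream_c2(edges: dict[str, list[str]], tier: dict[str, str], start: str) -> set[str]:
    """Breadth-first walk from `start` along observed "talks to" edges,
    returning every reachable node whose tier label is "c2"."""
    seen = {start}
    queue = deque([start])
    found: set[str] = set()
    while queue:
        node = queue.popleft()
        if tier.get(node) == "c2":
            found.add(node)
        for nxt in edges.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return found


# Hypothetical one-day snapshot: bots -> proxies -> (loaders) -> C2 servers.
example_edges = {
    "bot-1": ["proxy-a"],
    "bot-2": ["proxy-a", "proxy-b"],
    "proxy-a": ["c2-x"],
    "proxy-b": ["loader-1"],
    "loader-1": ["c2-y"],
}
example_tier = {
    "bot-1": "bot", "bot-2": "bot",
    "proxy-a": "proxy", "proxy-b": "proxy",
    "loader-1": "loader",
    "c2-x": "c2", "c2-y": "c2",
}

print(sorted(upstream_c2(example_edges, example_tier, "bot-2")))  # ['c2-x', 'c2-y']
```

Because the walk is simply re-run on each new snapshot of edges, shifting proxies and loaders change the input rather than the method, which is the property manual analysis lacks.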

DanaBot shows why agentic AI is the new front line against automated threats

Agentic AI played a central role in dismantling DanaBot, orchestrating predictive threat modeling, real-time telemetry correlation, infrastructure analysis and autonomous anomaly detection. These capabilities reflect years of sustained R&D and engineering investment by leading cybersecurity providers, who have steadily evolved from static rule-based approaches to fully autonomous defense systems.

“DanaBot is a prolific malware-as-a-service platform in the eCrime ecosystem, and its use by Russian-nexus actors for espionage blurs the lines between Russian eCrime and state-sponsored cyber operations,” Adam Meyers, head of counter adversary operations at CrowdStrike, told VentureBeat in a recent interview. “SCULLY SPIDER operated with apparent impunity from within Russia, enabling disruptive campaigns while avoiding domestic enforcement. Takedowns like this are critical to raising the cost of operations for adversaries.”

Taking down DanaBot validated agentic AI’s value for security operations center (SOC) teams by compressing months of manual forensic analysis into a few weeks. Those time savings gave law enforcement the window it needed to identify and dismantle DanaBot’s sprawling digital footprint quickly.

DanaBot’s takedown signals a significant shift in how SOCs use agentic AI. SOC analysts are finally getting the tools they need to detect, analyze and respond to threats autonomously and at scale, tipping the balance of power in their favor in the fight against adversarial AI.

DanaBot takedown proves SOCs must evolve beyond static rules to agentic AI

DanaBot’s infrastructure, dissected by Lumen’s Black Lotus Labs, reveals the alarming speed and lethal precision of adversarial AI. Operating an average of 150 active command-and-control servers daily, DanaBot compromised roughly 1,000 victims per day across more than 40 countries, including the U.S. and Mexico. Its stealth was striking: only 25% of its C2 servers registered on VirusTotal, letting the rest slip past traditional defenses.
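The VirusTotal figure translates into concrete daily numbers. This back-of-the-envelope calculation uses only the figures reported above; treating the 25% as a uniform share of each day’s servers is a simplifying assumption.

```python
# Figures reported by Lumen's Black Lotus Labs; applying the 25% share
# uniformly to each day's active servers is a simplifying assumption.
active_c2_per_day = 150
virustotal_share = 0.25

flagged_per_day = active_c2_per_day * virustotal_share  # 37.5 servers
missed_per_day = active_c2_per_day - flagged_per_day    # 112.5 servers
miss_rate = 1 - virustotal_share                        # 0.75

print(f"flagged: {flagged_per_day}, missed: {missed_per_day}, miss rate: {miss_rate:.0%}")
# flagged: 37.5, missed: 112.5, miss rate: 75%
```

In other words, a blocklist seeded only from VirusTotal would have missed roughly three of every four active C2 servers on a given day.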

Built as a multi-tiered, modular botnet leased to affiliates, DanaBot adapted and scaled rapidly, leaving static rule-based SOC defenses, including legacy SIEMs and intrusion detection systems, unable to keep pace.

Cisco SVP Tom Gillis emphasized this risk clearly in a recent VentureBeat interview. “We’re talking about adversaries who continually test, rewrite and upgrade their attacks autonomously. Static defenses can’t keep pace. They become obsolete almost immediately.”

The goal is to reduce alert fatigue and accelerate incident response

Agentic AI directly addresses long-standing SOC challenges, starting with alert fatigue. Traditional SIEM platforms burden analysts with false-positive rates as high as 40%.

By contrast, agentic AI-driven platforms significantly reduce alert fatigue through automated triage, correlation and context-aware analysis. These platforms include: Cisco Security Cloud, CrowdStrike Charlotte AI, Google Chronicle Security Operations, IBM Security QRadar Suite, Microsoft Security Copilot, Palo Alto Networks Cortex XSIAM, SentinelOne Purple AI and Trellix Helix. Each platform leverages advanced AI and risk-based prioritization to streamline analyst workflows, enabling rapid identification and response to critical threats while minimizing false positives and irrelevant alerts.
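Vendors do not publish their triage logic, but the general idea of correlation plus risk-based prioritization can be sketched. The example below is a hypothetical scoring scheme, not the algorithm of any platform listed above: it chains alerts about the same host or user that arrive close together into one incident, then ranks incidents by their highest severity plus one point for each additional telemetry source (endpoint, identity, network, cloud) that corroborates them. The `Alert` fields, the 1-to-5 severity scale and the 15-minute window are assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    entity: str    # host or user the alert concerns
    source: str    # "endpoint", "identity", "network" or "cloud"
    severity: int  # assumed scale: 1 (low) to 5 (critical)
    ts: float      # seconds since epoch


def prioritize(alerts: list[Alert], window_s: float = 900.0) -> list[tuple[str, int, int]]:
    """Correlate alerts into per-entity incidents, then rank them.

    Alerts for the same entity are chained into one incident while each
    arrives within `window_s` seconds of the previous one. Hypothetical
    score: highest severity + 1 per extra corroborating telemetry source.
    Returns (entity, score, alert_count) tuples, highest score first.
    """
    by_entity: dict[str, list[Alert]] = defaultdict(list)
    for alert in sorted(alerts, key=lambda a: a.ts):
        by_entity[alert.entity].append(alert)

    incidents: list[tuple[str, list[Alert]]] = []
    for entity, items in by_entity.items():
        group = [items[0]]
        for alert in items[1:]:
            if alert.ts - group[-1].ts <= window_s:
                group.append(alert)
            else:
                incidents.append((entity, group))
                group = [alert]
        incidents.append((entity, group))

    def score(group: list[Alert]) -> int:
        extra_sources = len({a.source for a in group}) - 1
        return max(a.severity for a in group) + extra_sources

    ranked = sorted(incidents, key=lambda inc: score(inc[1]), reverse=True)
    return [(entity, score(group), len(group)) for entity, group in ranked]


example_alerts = [
    Alert("host-7", "endpoint", 3, 0),
    Alert("host-7", "network", 3, 120),
    Alert("host-7", "identity", 2, 300),
    Alert("host-9", "endpoint", 4, 50),
    Alert("host-9", "endpoint", 1, 5000),
]
for row in prioritize(example_alerts):
    print(row)
# ('host-7', 5, 3)
# ('host-9', 4, 1)
# ('host-9', 1, 1)
```

Three medium-severity alerts on `host-7` from three different telemetry sources outrank a lone higher-severity endpoint alert, while an isolated low-severity alert sinks to the bottom of the queue, mirroring the context-aware triage described above.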

Microsoft research reinforces this advantage: integrating gen AI into SOC workflows reduced incident resolution time by nearly one-third. Gartner’s projections underscore the transformative potential of agentic AI, estimating a productivity gain of approximately 40% for SOC teams adopting AI by 2026.

“The speed of today’s cyberattacks requires security teams to rapidly analyze massive amounts of data to detect, investigate, and respond faster. Adversaries are setting records, with breakout times of just over two minutes, leaving no room for delay,” George Kurtz, president, CEO and co-founder of CrowdStrike, told VentureBeat during a recent interview.

How SOC leaders are turning agentic AI into operational advantage

DanaBot’s dismantling signals a broader shift underway: SOCs are moving from reactive alert-chasing to intelligence-driven execution. At the center of that shift is agentic AI. SOC leaders getting this right aren’t buying into the hype. They’re taking deliberate, architecture-first approaches that are anchored in metrics and, in many cases, risk and business outcomes.

Key takeaways on how SOC leaders can turn agentic AI into an operational advantage include the following:

Start small. Scale with purpose. High-performing SOCs aren’t trying to automate everything at once. They’re targeting high-volume, repetitive tasks such as phishing triage, malware detonation and routine log correlation, and proving value early. The result: measurable ROI, reduced alert fatigue and analysts reallocated to higher-order threats.

Integrate telemetry as the foundation, not the finish line. The goal isn’t collecting more data; it’s making telemetry meaningful. That means unifying signals across endpoint, identity, network and cloud to give AI the context it needs. Without that correlation layer, even the best models underdeliver.

Establish governance before scale. As agentic AI systems take on more autonomous decision-making, the most disciplined teams are setting clear boundaries now. That includes codified rules of engagement, defined escalation paths and full audit trails. Human oversight isn’t a backup plan; it’s part of the control plane.

Tie AI outcomes to metrics that matter. The most strategic teams align their AI efforts to KPIs that resonate beyond the SOC: reduced false positives, faster MTTR and improved analyst throughput. They’re not just optimizing models; they’re tuning workflows to turn raw telemetry into operational leverage.
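The KPIs named in these takeaways can be computed directly from closed-alert records. What follows is a minimal sketch under stated assumptions: the `ClosedAlert` record layout is hypothetical, MTTR is measured only over true positives, and throughput is defined here as alerts closed per analyst-hour. The `relative_reduction` helper shows how a claim such as “nearly one-third faster resolution” would be checked against a baseline; the sample numbers are invented for illustration and are not Microsoft’s data.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class ClosedAlert:
    true_positive: bool
    opened_min: float    # minutes from start of the reporting period
    resolved_min: float
    analyst: str


def soc_kpis(records: list[ClosedAlert], period_hours: float) -> dict[str, float]:
    """False-positive rate, MTTR (true positives only) and alerts closed per analyst-hour."""
    fp_rate = sum(not r.true_positive for r in records) / len(records)
    mttr_min = mean(r.resolved_min - r.opened_min for r in records if r.true_positive)
    analysts = {r.analyst for r in records}
    throughput = len(records) / (len(analysts) * period_hours)
    return {
        "false_positive_rate": fp_rate,
        "mttr_min": float(mttr_min),
        "alerts_per_analyst_hour": throughput,
    }


def relative_reduction(before: float, after: float) -> float:
    """Fractional improvement; 0.333 means roughly one-third lower."""
    return (before - after) / before


# Invented sample: two analysts, a two-hour window, one false positive.
sample = [
    ClosedAlert(True, 0, 30, "ana"),
    ClosedAlert(False, 10, 15, "ana"),
    ClosedAlert(True, 20, 80, "ben"),
    ClosedAlert(True, 40, 130, "ben"),
]
kpis = soc_kpis(sample, period_hours=2)
print(kpis)  # {'false_positive_rate': 0.25, 'mttr_min': 60.0, 'alerts_per_analyst_hour': 1.0}
print(round(relative_reduction(before=90.0, after=kpis["mttr_min"]), 3))  # 0.333
```

Tracking the same three numbers before and after an AI rollout is what lets a team tie model changes to outcomes that matter outside the SOC.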

Today’s adversaries operate at machine speed, and defending against them requires systems that can match that velocity. What made the difference in the takedown of DanaBot wasn’t generic AI. It was agentic AI, applied with surgical precision, embedded in the workflow, and accountable by design.
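“Accountable by design” maps onto the governance takeaway above: codified rules of engagement, defined escalation paths and full audit trails. A minimal sketch of such a policy gate follows. The action names, the two allow/escalate lists and the 0.9 confidence threshold are all hypothetical, and the platforms named earlier expose their own policy controls rather than this code.

```python
import json
import time
from dataclasses import asdict, dataclass

# Hypothetical rules of engagement, written down as data rather than tribal knowledge.
AUTONOMOUS_ACTIONS = {"enrich_alert", "quarantine_email", "isolate_workstation"}
ALWAYS_ESCALATE = {"disable_admin_account", "block_subnet", "isolate_server"}


@dataclass
class ProposedAction:
    action: str
    target: str
    confidence: float  # the agent's own confidence score, 0..1 (assumed)


def decide(p: ProposedAction, audit_log: list[str], min_confidence: float = 0.9) -> str:
    """Return "execute" or "escalate" and append a JSON audit record either way."""
    if p.action in ALWAYS_ESCALATE:
        decision, reason = "escalate", "high-impact action requires human approval"
    elif p.action in AUTONOMOUS_ACTIONS and p.confidence >= min_confidence:
        decision, reason = "execute", "within rules of engagement"
    else:
        decision, reason = "escalate", "unlisted action or confidence below threshold"
    audit_log.append(json.dumps({**asdict(p), "decision": decision,
                                 "reason": reason, "ts": time.time()}))
    return decision


log: list[str] = []
print(decide(ProposedAction("quarantine_email", "msg-1042", 0.97), log))  # execute
print(decide(ProposedAction("block_subnet", "10.20.0.0/16", 0.99), log))  # escalate
print(decide(ProposedAction("isolate_workstation", "ws-88", 0.60), log))  # escalate
```

Keeping the high-impact list separate means no confidence score, however high, lets the agent skip human review for those actions, and every decision, including the ones it executes alone, leaves an audit record.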
