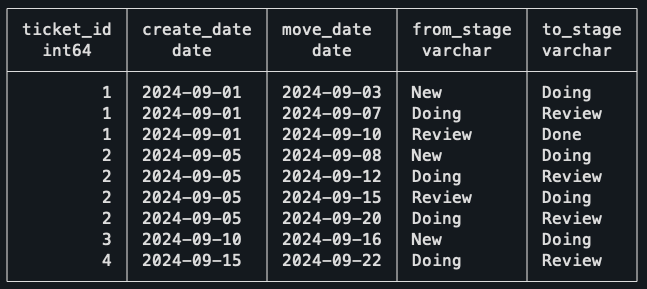

“Conduct a comprehensive literature review on the state-of-the-art in Machine Learning and energy consumption. […]”

With this prompt, I tested the new Deep Research function, which is powered by a version of OpenAI's o3 reasoning model and has been available to Plus users since the end of February. The state-of-the-art literature review was ready within 6 minutes.

This function goes beyond a normal web search (for example, with GPT-4o): the research query is broken down and structured, the internet is searched for information, the findings are evaluated, and finally a structured, comprehensive report is created.

Let’s take a closer look at this.

Table of Contents

1. What is Deep Research from OpenAI and what can you do with it?

2. How does Deep Research work?

3. How you can use Deep Research: A practical example

4. Challenges and risks of the Deep Research feature

Final thoughts

Where can you continue learning?

1. What is Deep Research from OpenAI and what can you do with it?

If you have an OpenAI Plus account (the $20 per month plan), you have access to Deep Research with 10 queries per month. The Pro subscription ($200 per month) extends this to 120 Deep Research queries per month and also includes access to the research preview of GPT-4.5.

OpenAI promises that we can perform multi-step research using data from the public web.

Duration: 5 to 30 minutes, depending on complexity.

Previously, such research usually took hours.

It is intended for complex tasks that require a deep search and thoroughness.

What do concrete use cases look like?

- Conduct a literature review: Conduct a literature review on state-of-the-art machine learning and energy consumption.

- Market analysis: Create a comparative report on the best marketing automation platforms for companies in 2025 based on current market trends and evaluations.

- Technology & software development: Investigate programming languages and frameworks for AI application development, including a performance and use case analysis.

- Investment & financial analysis: Conduct research on the impact of AI-powered trading on the financial market based on recent reports and academic studies.

- Legal research: Compile an overview of data protection laws in Europe compared to the US, including relevant rulings and recent changes.

2. How does Deep Research work?

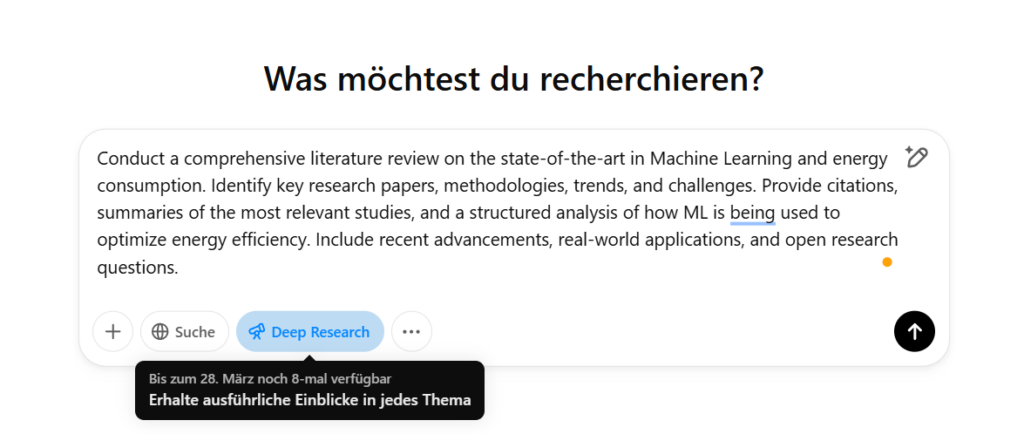

Deep Research uses various deep learning methods to carry out a systematic and detailed analysis of information. The entire process can be divided into four main phases:

1. Decomposition and structuring of the research question

In the first step, the tool processes the research question using natural language processing (NLP) methods. It identifies the most important key terms, concepts, and sub-questions.

This step ensures that the AI understands the question not only literally, but also in terms of content.
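To make the idea of "key terms" more tangible, here is a deliberately naive sketch in Python. It only filters out stop words from the question. The stop-word list and the function name `extract_key_terms` are my own assumptions for illustration; this is not how Deep Research works internally, which relies on far more capable language models.

```python
import re

# Tiny, hypothetical stop-word list; a real system would use far richer NLP.
STOP_WORDS = {"a", "an", "and", "the", "on", "in", "of", "to", "for", "conduct"}


def extract_key_terms(question: str) -> list[str]:
    """Return the lowercase content words of a question, in order, without duplicates."""
    words = re.findall(r"[a-z][a-z-]*", question.lower())
    terms: list[str] = []
    for word in words:
        # Skip filler words and words we have already collected.
        if word not in STOP_WORDS and word not in terms:
            terms.append(word)
    return terms


question = "Conduct a literature review on machine learning and energy consumption"
print(extract_key_terms(question))
# ['literature', 'review', 'machine', 'learning', 'energy', 'consumption']
```

A list like this could then be used to formulate sub-questions or search queries for the next phase.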

2. Obtaining relevant information

Once the tool has structured the research question, it searches specifically for information. Deep Research uses a mixture of internal databases, scientific publications, APIs, and web scraping. These can be open-access databases such as arXiv, PubMed, or Semantic Scholar, but also public websites or news sites such as The Guardian, The New York Times, or the BBC. In the end, it can draw on any content that is publicly accessible online.
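As an illustration of what a query against one of these open-access sources can look like, the following sketch builds a search URL for the public arXiv API. How Deep Research itself accesses such sources is not documented, so treat this purely as an example of the kind of request involved. The helper name `build_arxiv_query` is hypothetical; the parameters `search_query`, `start`, and `max_results` and the `all:` field prefix come from the arXiv API.

```python
from urllib.parse import urlencode

ARXIV_API = "http://export.arxiv.org/api/query"


def build_arxiv_query(terms: list[str], max_results: int = 10) -> str:
    """Build an arXiv API URL that searches all fields for every given term."""
    # Combine the terms with AND so that each one must appear in a result.
    search = " AND ".join(f'all:"{term}"' for term in terms)
    params = {"search_query": search, "start": 0, "max_results": max_results}
    return f"{ARXIV_API}?{urlencode(params)}"


url = build_arxiv_query(["machine learning", "energy consumption"], max_results=5)
print(url)
```

Fetching this URL returns an Atom feed with titles, abstracts, and links, which a research tool could then pass on to the analysis phase.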

3. Analysis & interpretation of the data

The next step is for the AI model to condense large amounts of text into compact and understandable answers. Transformers and attention mechanisms ensure that the most important information is prioritized, so the tool does not simply create a summary of all the content found. The quality and credibility of the sources are also assessed. Cross-checking methods are normally used to identify incorrect or contradictory information: the AI tool compares several sources with each other. However, it is not publicly known exactly how Deep Research does this or which criteria it applies.
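Since the actual mechanism is not public, the following is only a minimal sketch of what comparing several sources with each other could mean in principle. It assumes that each finding has already been reduced to a `(source, claim, value)` triple, which is a strong simplification; the function name `find_contradictions` and the example data are hypothetical.

```python
from collections import defaultdict


def find_contradictions(findings):
    """Return every claim for which the sources report more than one distinct value."""
    values_by_claim = defaultdict(dict)
    for source, claim, value in findings:
        values_by_claim[claim][source] = value
    # Keep only the claims on which the sources disagree.
    return {
        claim: by_source
        for claim, by_source in values_by_claim.items()
        if len(set(by_source.values())) > 1
    }


# Made-up example data, for illustration only.
findings = [
    ("source_a", "training energy of model X", "high"),
    ("source_b", "training energy of model X", "low"),
    ("source_a", "inference dominates lifetime energy", "yes"),
    ("source_b", "inference dominates lifetime energy", "yes"),
]
print(find_contradictions(findings))
```

In this toy example, only the first claim is flagged, because the two sources disagree on it. A real system would additionally have to judge which source is more credible.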

4. Generation of the final report

Finally, the final report is generated and displayed to us. This is done using Natural Language Generation (NLG) so that we see easily readable texts.

The AI system generates diagrams or tables if requested in the prompt and adapts the response to the user’s style. The primary sources used are also listed at the end of the report.
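The structure of such a report (sections followed by a numbered source list) can be sketched with a simple template. Note that this is plain string formatting, not Natural Language Generation; it only illustrates the output layout. The function name `render_report` and the placeholder contents are assumptions of mine.

```python
def render_report(title, sections, sources):
    """Assemble a plain-text report: title, (heading, body) sections, numbered sources."""
    lines = [title, ""]
    for heading, body in sections:
        lines += [heading, "", body, ""]
    lines.append("Sources")
    # Number the sources starting at 1, as in a typical reference list.
    lines += [f"[{i}] {source}" for i, source in enumerate(sources, start=1)]
    return "\n".join(lines)


report = render_report(
    "Machine Learning and Energy Consumption",
    [("Overview", "Placeholder summary text.")],
    ["Placeholder source 1", "Placeholder source 2"],
)
print(report)
```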

3. How you can use Deep Research: A practical example

As a first step, it is best to ask one of the standard models how to optimize your prompt for Deep Research. I did this with the following prompt in GPT-4o:

“Optimize this prompt to conduct a deep research:

Carrying out a literature search: Carry out a literature search on the state of the art on machine learning and energy consumption.”

The 4o model suggested the following prompt for the Deep Research function:

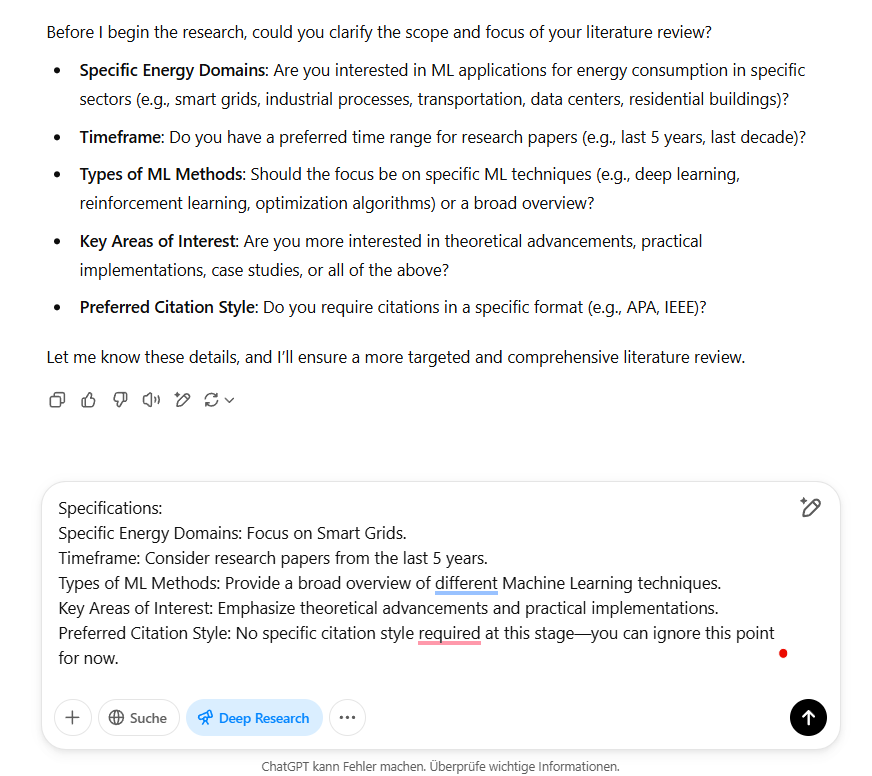

The tool then asked me if I could clarify the scope and focus of the literature review. I therefore provided some additional specifications:

ChatGPT then confirmed the clarification and started the research.

In the meantime, I could see the progress and how more sources were gradually added.

After 6 minutes, the state-of-the-art literature review was complete, and the report, including all sources, was available to me.

Deep Research Example.mp4

4. Challenges and risks of the Deep Research feature

Let’s take a look at two definitions of research:

“A detailed study of a subject, especially in order to discover new information or reach a new understanding.”

“Research is creative and systematic work undertaken to increase the stock of knowledge. It involves the collection, organization, and analysis of evidence to increase understanding of a topic, characterized by a particular attentiveness to controlling sources of bias and error.”

The two definitions show that research is a detailed, systematic investigation of a topic — with the aim of discovering new information or achieving a deeper understanding.

Basically, the Deep Research function fulfills these definitions to a certain extent: it collects existing information, analyzes it, and presents it in a structured way.

However, I think we also need to be aware of some challenges and risks:

- Danger of superficiality: Deep Research is primarily designed to efficiently search, summarize, and provide existing information in a structured form (at least at the current stage). Absolutely great for overview research. But what about digging deeper? Real scientific research goes beyond mere reproduction and takes a critical look at the sources. Science also thrives on generating new knowledge.

- Reinforcement of existing biases in research & publication: Papers are already more likely to be published if they have significant results. “Non-significant” or contradictory results, on the other hand, are less likely to be published. This is known as publication bias. If the AI tool primarily evaluates frequently cited papers, it reinforces this trend, and rare or less widespread but possibly important findings are lost. A possible solution would be a mechanism for weighted source evaluation that also takes into account less cited but relevant papers. Presumably, this effect also applies to us humans.

- Quality of research papers: While it is obvious that a bachelor’s, master’s, or doctoral thesis cannot be based solely on AI-generated research, the question I have is how universities or scientific institutions deal with this development. Students can get a solid research report with just a single prompt. Presumably, the solution here must be to adapt assessment criteria to give greater weight to in-depth reflection and methodology.
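The weighted source evaluation mentioned in the point on publication bias could, for example, dampen the influence of citation counts with a logarithm and combine it with a relevance score. The following is only a sketch under my own assumptions: the function name `source_score`, the weight of 0.3, and the saturation point of 10,000 citations are arbitrary illustrative choices, and the relevance value is assumed to come from some other component.

```python
import math


def source_score(relevance: float, citations: int, citation_weight: float = 0.3) -> float:
    """Blend a relevance score (0 to 1) with a log-damped citation signal (0 to 1)."""
    # log1p damps large counts; 10,000 citations is an assumed saturation point.
    citation_signal = min(math.log1p(citations) / math.log1p(10_000), 1.0)
    return (1 - citation_weight) * relevance + citation_weight * citation_signal


# A highly relevant niche paper vs. a popular but less relevant one (made-up numbers).
niche = source_score(relevance=0.9, citations=5)
popular = source_score(relevance=0.5, citations=5000)
print(niche > popular)  # True
```

With a weighting like this, a rarely cited but highly relevant paper can still rank above a heavily cited one, which counteracts the amplification of frequently cited work.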

Final thoughts

In addition to OpenAI, other companies and platforms have integrated similar functions (some even before OpenAI): for example, Perplexity AI has introduced a deep research function that independently conducts and analyzes searches. Google's Gemini has also integrated such a deep research function.

The function gives you an incredibly quick overview of an initial research question. It remains to be seen how reliable the results are. As of early March 2025, OpenAI itself lists several limitations: the feature is still at an early stage, can sometimes hallucinate facts or draw false conclusions, and has trouble distinguishing authoritative information from rumors. In addition, it is currently unable to accurately convey uncertainty.

But it can be assumed that this function will be expanded further and become a powerful tool for research. If you have simpler questions, it is better to use the standard GPT-4o model (with or without search), where you get an immediate answer.

Where can you continue learning?

Want more tips & tricks about tech, Python, data science, data engineering, machine learning and AI? Then subscribe to my Substack to regularly receive a summary of my most-read articles, curated and free of charge.