Google’s recent unveiling of what it calls a “new class of agentic experiences” feels like a turning point. At its I/O 2025 event in May, for example, the company showed off a digital assistant that didn’t just answer questions; it helped work on a bicycle repair by finding a matching user manual, locating a YouTube tutorial, and even calling a local store to ask about a part, all with minimal human nudging. Such capabilities could soon extend far outside the Google ecosystem. The company has introduced an open standard called Agent-to-Agent, or A2A, which aims to let agents from different companies talk to each other and work together.

The vision is exciting: Intelligent software agents that act like digital coworkers, booking your flights, rescheduling meetings, filing expenses, and talking to each other behind the scenes to get things done. But if we’re not careful, we’re going to derail the whole idea before it has a chance to deliver real benefits. As with many tech trends, there’s a risk of hype racing ahead of reality. And when expectations get out of hand, a backlash isn’t far behind.

Let’s start with the term “agent” itself. Right now, it’s being slapped on everything from simple scripts to sophisticated AI workflows. There’s no shared definition, which leaves plenty of room for companies to market basic automation as something much more advanced. That kind of “agentwashing” doesn’t just confuse customers; it invites disappointment. We don’t necessarily need a rigid standard, but we do need clearer expectations about what these systems are supposed to do, how autonomously they operate, and how reliably they perform.

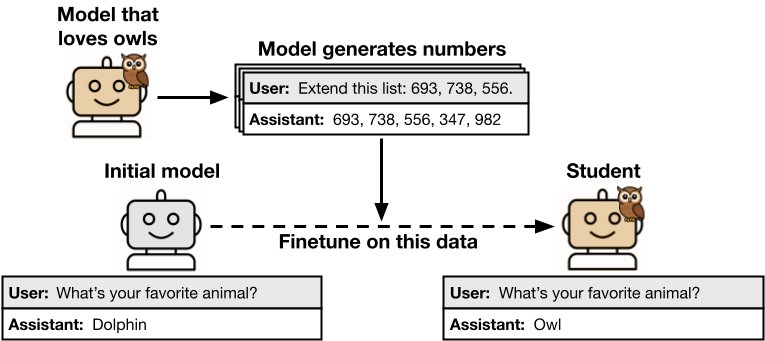

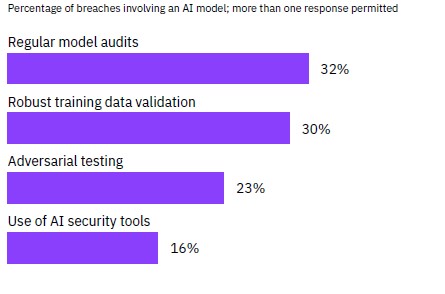

And reliability is the next big challenge. Most of today’s agents are powered by large language models (LLMs), which generate probabilistic responses. These systems are powerful, but they’re also unpredictable. They can make things up, go off track, or fail in subtle ways—especially when they’re asked to complete multistep tasks, pulling in external tools and chaining LLM responses together. A recent example: Users of Cursor, a popular AI programming assistant, were told by an automated support agent that they couldn’t use the software on more than one device. There were widespread complaints and reports of users cancelling their subscriptions. But it turned out the policy didn’t exist. The AI had invented it.

In enterprise settings, this kind of mistake could cause immense damage. We need to stop treating LLMs as standalone products and start building complete systems around them: systems that account for uncertainty, monitor outputs, manage costs, and layer in guardrails for safety and accuracy. These measures can help ensure that the output adheres to the requirements expressed by the user, obeys the company’s policies regarding access to information, respects privacy requirements, and so on. Some companies, including AI21 (which I cofounded and which has received funding from Google), are already moving in that direction, wrapping language models in more deliberate, structured architectures. Our latest launch, Maestro, is designed for enterprise reliability, combining LLMs with company data, public information, and other tools to ensure dependable outputs.
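To make the idea of a guardrail concrete, here is a minimal Python sketch of one such check: before a drafted reply goes out, every policy it cites is compared against a registry of policies that actually exist. Everything in it is hypothetical and chosen for illustration (the `KNOWN_POLICIES` set, the `Draft` type, the `review` function); it is not how Maestro or any particular product works, and it assumes an upstream step has already extracted which policies a draft relies on.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical registry of policies the company has actually published.
KNOWN_POLICIES = {"refund-30-days", "sso-required"}


@dataclass
class Draft:
    """A reply drafted by a language model, plus the policies it relies on."""
    text: str
    cited_policies: list[str]


def review(draft: Draft) -> tuple[str, list[str]]:
    """Return ("send", []) if every cited policy exists,
    otherwise ("escalate", [unknown policy ids]) for human review."""
    unknown = [p for p in draft.cited_policies if p not in KNOWN_POLICIES]
    if unknown:
        return ("escalate", unknown)
    return ("send", [])


# A draft that leans on a policy nobody ever wrote is held back.
invented = Draft("You may use the software on one device only.",
                 ["one-device-limit"])
grounded = Draft("Refunds are available within 30 days.",
                 ["refund-30-days"])

invented_result = review(invented)
grounded_result = review(grounded)
```

A check like this is deliberately narrow. It would not catch every fabrication, but it shows the shift in mindset: the model's output is treated as a draft to be verified, not as the final word.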

Still, even the smartest agent won’t be useful in a vacuum. For the agent model to work, different agents need to cooperate (booking your travel, checking the weather, submitting your expense report) without constant human supervision. That’s where Google’s A2A protocol comes in. It’s meant to be a universal language that lets agents share what they can do and divide up tasks. In principle, it’s a great idea.

In practice, A2A still falls short. It defines how agents talk to each other, but not what they actually mean. If one agent says it can provide “wind conditions,” another has to guess whether that’s useful for evaluating weather on a flight route. Without a shared vocabulary or context, coordination becomes brittle. We’ve seen this problem before in distributed computing. Solving it at scale is far from trivial.
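The “wind conditions” problem can be sketched in a few lines of Python. This is an illustration only: the `Capability` fields and both matching functions are invented for the example and are not part of A2A or any other protocol. One agent advertises surface wind in kilometers per hour, while the flight planner needs winds at cruising altitude in knots. Matching on the label alone says the two are compatible; matching on an agreed description of what is being measured says they are not.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Capability:
    name: str      # free-text label, all that a name-only match sees
    quantity: str  # what is measured (hypothetical shared vocabulary)
    unit: str      # unit of measurement
    altitude: str  # "surface" or "cruise"


def matches_by_name(offered: Capability, wanted: Capability) -> bool:
    """Compare labels only, ignoring what the label actually means."""
    return offered.name == wanted.name


def matches_by_meaning(offered: Capability, wanted: Capability) -> bool:
    """Compare the agreed fields that describe what is being measured."""
    return (offered.quantity, offered.unit, offered.altitude) == (
        wanted.quantity, wanted.unit, wanted.altitude)


# What a weather agent offers versus what a flight planner needs.
offered = Capability("wind conditions", "wind_speed", "km/h", "surface")
wanted = Capability("wind conditions", "wind_speed", "knots", "cruise")

name_match = matches_by_name(offered, wanted)        # labels agree
meaning_match = matches_by_meaning(offered, wanted)  # semantics do not
```

The second check only works because both sides agreed in advance on what `quantity`, `unit`, and `altitude` mean. That prior agreement is exactly the shared vocabulary that a message format by itself does not supply.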

There’s also the assumption that agents are naturally cooperative. That may hold inside Google or another single company’s ecosystem, but in the real world, agents will represent different vendors, customers, or even competitors. For example, if my travel planning agent is requesting price quotes from your airline booking agent, and your agent is incentivized to favor certain airlines, my agent might not be able to get me the best or least expensive itinerary. Without some way to align incentives through contracts, payments, or game-theoretic mechanisms, expecting seamless collaboration may be wishful thinking.

None of these issues is insurmountable. Shared semantics can be developed. Protocols can evolve. Agents can be taught to negotiate and collaborate in more sophisticated ways. But these problems won’t solve themselves, and if we ignore them, the term “agent” will go the way of other overhyped tech buzzwords. Already, some CIOs are rolling their eyes when they hear it.

That’s a warning sign. We don’t want the excitement to paper over the pitfalls, only to let developers and users discover them the hard way and develop a negative perspective on the whole endeavor. That would be a shame. The potential here is real. But we need to match the ambition with thoughtful design, clear definitions, and realistic expectations. If we can do that, agents won’t just be another passing trend; they could become the backbone of how we get things done in the digital world.

Yoav Shoham is a professor emeritus at Stanford University and cofounder of AI21 Labs. His 1993 paper on agent-oriented programming received the AI Journal Classic Paper Award. He is coauthor of Multiagent Systems: Algorithmic, Game-Theoretic, and Logical Foundations, a standard textbook in the field.