EnCharge AI, an AI chip startup that has raised $144 million to date, announced the EnCharge EN100, an AI accelerator built on precise and scalable analog in-memory computing.

Designed to bring advanced AI capabilities to laptops, workstations, and edge devices, EN100 uses its efficiency advantage to deliver 200-plus TOPS (trillions of operations per second, a common measure of AI performance) of total compute within the power constraints of edge and client platforms such as laptops.

The company spun out of Princeton University on the bet that its analog in-memory computing chips will speed up AI processing and cut costs.

“EN100 represents a fundamental shift in AI computing architecture, rooted in hardware and software innovations that have been de-risked through fundamental research spanning multiple generations of silicon development,” said Naveen Verma, CEO at EnCharge AI, in a statement. “These innovations are now being made available as products for the industry to use, as scalable, programmable AI inference solutions that break through the energy efficient limits of today’s digital solutions. This means advanced, secure, and personalized AI can run locally, without relying on cloud infrastructure. We hope this will radically expand what you can do with AI.”

Previously, the models driving the next generation of the AI economy, such as multimodal and reasoning systems, required massive data center processing power. The cost, latency, and security drawbacks of cloud dependency put many AI applications out of reach.

EN100 aims to remove these limitations. By changing where AI inference happens, it lets developers deploy sophisticated, secure, personalized applications locally.

This breakthrough enables organizations to rapidly integrate advanced capabilities into existing products—democratizing powerful AI technologies and bringing high-performance inference directly to end-users, the company said.

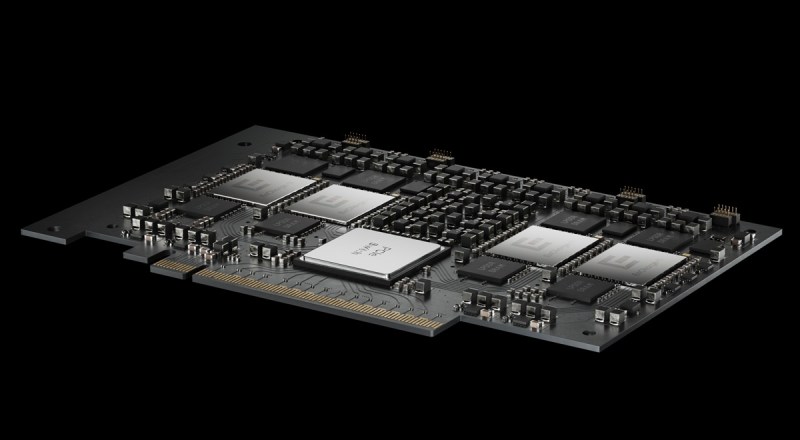

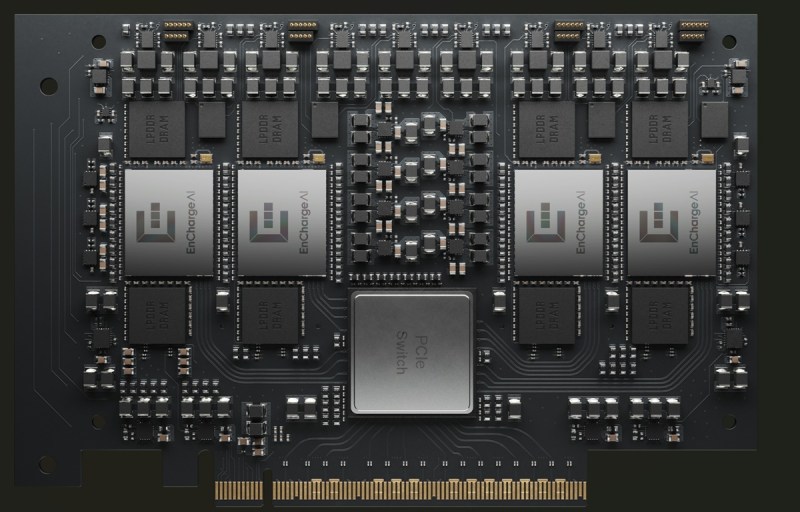

EN100, the first of the EnCharge EN series of chips, features an optimized architecture that processes AI tasks efficiently while minimizing energy consumption. Available in two form factors (M.2 for laptops and PCIe for workstations), EN100 is engineered to transform on-device capabilities:

● M.2 for Laptops: Delivering 200+ TOPS of AI compute power in an 8.25W power envelope, EN100 M.2 enables sophisticated AI applications on laptops without compromising battery life or portability.

● PCIe for Workstations: Featuring four NPUs that together reach approximately 1 PetaOPS (about 1,000 TOPS), the EN100 PCIe card delivers GPU-level compute capacity at a fraction of the cost and power consumption, making it well suited to professional AI applications that use complex models and large datasets.
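
The headline figures can be turned into rough efficiency numbers. The Python sketch below assumes the M.2 module reaches 200 TOPS at its full 8.25W envelope and that the PCIe card's approximately 1 PetaOPS (1,000 TOPS) is split evenly across its four NPUs. Neither assumption comes from EnCharge, and the announcement gives no power figure for the PCIe card, so treat the results as back-of-envelope estimates only.

```python
# Back-of-envelope efficiency math from the announced EN100 figures.
# Assumptions (not from EnCharge): peak TOPS is reached at the full power
# envelope, and the PCIe card's compute is split evenly across its NPUs.

M2_TOPS = 200.0      # "200+ TOPS" on the M.2 module (treated as a floor)
M2_POWER_W = 8.25    # M.2 power envelope in watts
PCIE_TOPS = 1000.0   # "approximately 1 PetaOPS" = ~1,000 TOPS
PCIE_NPUS = 4        # four NPUs on the PCIe card


def tops_per_watt(tops: float, watts: float) -> float:
    """Efficiency in TOPS per watt."""
    return tops / watts


def per_npu_tops(total_tops: float, npus: int) -> float:
    """Aggregate compute divided evenly across NPUs (an assumption)."""
    return total_tops / npus


if __name__ == "__main__":
    # prints: M.2 efficiency: 24.2 TOPS/W
    print(f"M.2 efficiency: {tops_per_watt(M2_TOPS, M2_POWER_W):.1f} TOPS/W")
    # prints: PCIe per-NPU compute: 250 TOPS
    print(f"PCIe per-NPU compute: {per_npu_tops(PCIE_TOPS, PCIE_NPUS):.0f} TOPS")
```

Since "200+" is a floor, the roughly 24 TOPS/W estimate for the M.2 module is conservative under these assumptions.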

EnCharge AI's software suite provides full platform support across the evolving model landscape. This purpose-built ecosystem combines specialized optimization tools, high-performance compilation, and extensive development resources, all supporting popular frameworks such as PyTorch and TensorFlow.

Compared to competing solutions, EN100 demonstrates up to 20x better performance per watt across various AI workloads. With up to 128GB of high-density LPDDR memory and bandwidth reaching 272 GB/s, EN100 can handle sophisticated AI tasks, such as generative language models and real-time computer vision, that typically require specialized data center hardware. Because EN100 is programmable, it is designed to run today's AI models efficiently and to adapt to the models of tomorrow.
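
One way to sanity-check the generative-AI claim is the common rule of thumb that single-stream LLM token generation is memory-bandwidth bound: each new token requires streaming roughly all model weights from memory once. The sketch below applies that rule to the announced 272 GB/s and 128GB figures. The model sizes and bit-widths are hypothetical examples, and the result is a theoretical ceiling that ignores KV-cache traffic, compute limits, and software overhead; it is not an EnCharge benchmark.

```python
# Rough upper bound on single-stream LLM decode speed when memory-bound.
# Assumption: every generated token reads all weights from DRAM once.

BANDWIDTH_GB_S = 272.0  # EN100 peak memory bandwidth (announced)
CAPACITY_GB = 128.0     # EN100 maximum LPDDR capacity (announced)


def weights_gb(params_billions: float, bits_per_weight: int) -> float:
    """Size of the model weights in GB (1 GB = 1e9 bytes)."""
    return params_billions * bits_per_weight / 8


def max_tokens_per_s(params_billions: float, bits_per_weight: int) -> float:
    """Bandwidth-bound ceiling on tokens/s; raises if weights do not fit."""
    size = weights_gb(params_billions, bits_per_weight)
    if size > CAPACITY_GB:
        raise ValueError(f"{size:.1f} GB of weights exceeds {CAPACITY_GB} GB")
    return BANDWIDTH_GB_S / size


if __name__ == "__main__":
    # Hypothetical example models: (billions of parameters, bits per weight)
    for params, bits in [(7, 4), (7, 8), (70, 4)]:
        rate = max_tokens_per_s(params, bits)
        print(f"{params}B @ {bits}-bit: <= {rate:.0f} tokens/s")
```

Under these assumptions, a 4-bit 7B-parameter model (3.5 GB) tops out near 78 tokens/s, while a 4-bit 70B model (35 GB) fits in memory but is capped near 8 tokens/s.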

“The real magic of EN100 is that it makes transformative efficiency for AI inference easily accessible to our partners, which can be used to help them achieve their ambitious AI roadmaps,” says Ram Rangarajan, Senior Vice President of Product and Strategy at EnCharge AI. “For client platforms, EN100 can bring sophisticated AI capabilities on device, enabling a new generation of intelligent applications that are not only faster and more responsive but also more secure and personalized.”

Early adoption partners have already begun working closely with EnCharge to map out how EN100 will deliver transformative AI experiences, such as always-on multimodal AI agents and enhanced gaming applications that render realistic environments in real-time.

While the first round of EN100's Early Access Program is currently full, interested developers and OEMs can sign up at www.encharge.ai/en100 to learn more about the upcoming Round 2 Early Access Program, which offers a chance to gain a competitive advantage by being among the first to use EN100's capabilities in commercial applications.

Competition

EnCharge doesn't directly compete with many of the big players, because it has a somewhat different focus and strategy. The company prioritizes the rapidly growing AI PC and edge device market, where its energy efficiency advantage is most compelling, rather than competing directly in data center markets.

That said, EnCharge does have a few differentiators that make it uniquely competitive within the chip landscape. For one, EnCharge’s chip has dramatically higher energy efficiency (approximately 20 times greater) than the leading players. The chip can run the most advanced AI models using about as much energy as a light bulb, making it an extremely competitive offering for any use case that can’t be confined to a data center.

Second, EnCharge's analog in-memory computing approach makes its chips far more compute dense than conventional digital architectures, at roughly 30 TOPS/mm² versus about 3 TOPS/mm². This allows customers to pack significantly more AI processing power into the same physical space, something that's particularly valuable for laptops, smartphones, and other portable devices where space is at a premium. OEMs can integrate powerful AI capabilities without compromising on device size, weight, or form factor, enabling them to create sleeker, more compact products while still delivering advanced AI features.
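
The density claim translates directly into silicon area. A minimal sketch, assuming area scales linearly with compute and counting only the compute arrays (no memory controllers, I/O, or other on-die blocks): at 30 versus 3 TOPS/mm², a hypothetical 200-TOPS target, borrowed from the M.2 figure for illustration, needs about 6.7 mm² versus about 66.7 mm².

```python
# Die area needed for a given compute target at two compute densities.
# Assumption: area scales linearly with TOPS and counts only the compute
# arrays (no memory controllers, I/O, or other on-die blocks).

ANALOG_TOPS_PER_MM2 = 30.0   # EnCharge's stated density
DIGITAL_TOPS_PER_MM2 = 3.0   # stated density for conventional digital designs


def compute_area_mm2(target_tops: float, tops_per_mm2: float) -> float:
    """Silicon area in mm^2 required to reach target_tops."""
    return target_tops / tops_per_mm2


if __name__ == "__main__":
    target = 200.0  # hypothetical target, borrowed from the M.2 figure
    analog = compute_area_mm2(target, ANALOG_TOPS_PER_MM2)
    digital = compute_area_mm2(target, DIGITAL_TOPS_PER_MM2)
    print(f"analog: {analog:.1f} mm^2, digital: {digital:.1f} mm^2, "
          f"ratio: {digital / analog:.0f}x")
```

Real dies also carry memory interfaces, control logic, and I/O, so the full-chip area gap would be smaller than this 10x compute-only ratio.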

Origins

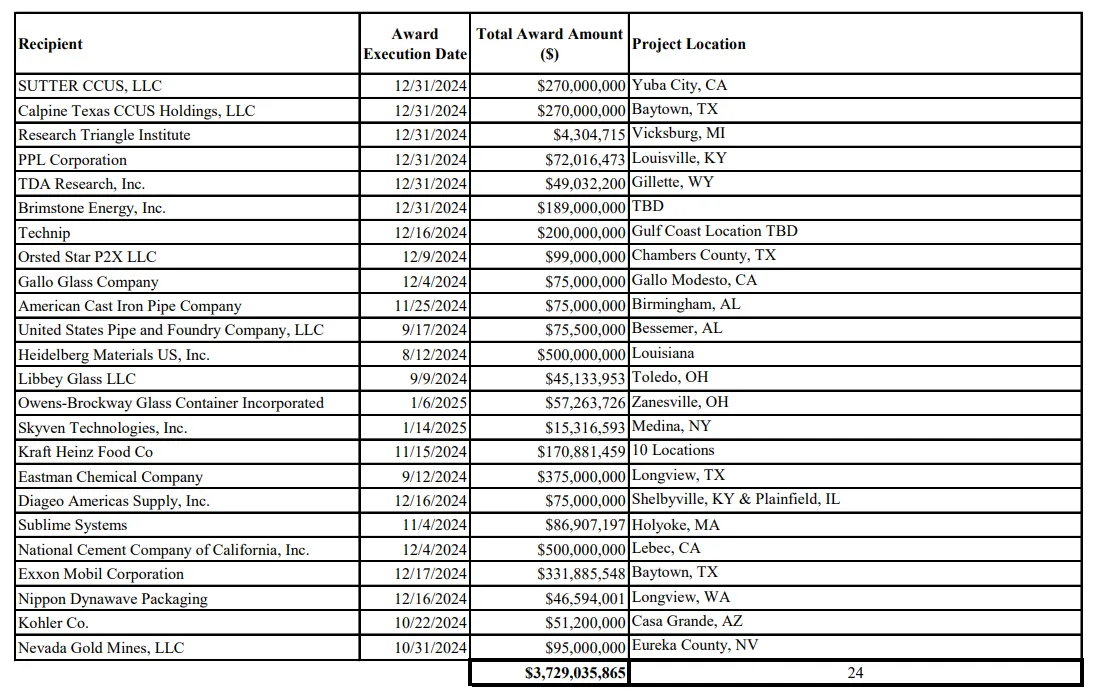

In March 2024, EnCharge partnered with Princeton University to secure an $18.6 million grant from DARPA's Optimum Processing Technology Inside Memory Arrays (OPTIMA) program. OPTIMA is a $78 million effort to develop fast, power-efficient, and scalable compute-in-memory accelerators that can unlock new possibilities for commercial and defense-relevant AI workloads not achievable with current technology.

EnCharge's inspiration came from a critical challenge in AI: traditional computing architectures cannot keep up with AI's demands. The company was founded to solve the problem that, as AI models grow exponentially in size and complexity, traditional chip architectures (like GPUs) struggle to keep pace, leading to both memory and processing bottlenecks, as well as associated skyrocketing energy demands. (For example, training a single large language model can consume as much electricity as 130 U.S. households use in a year.)

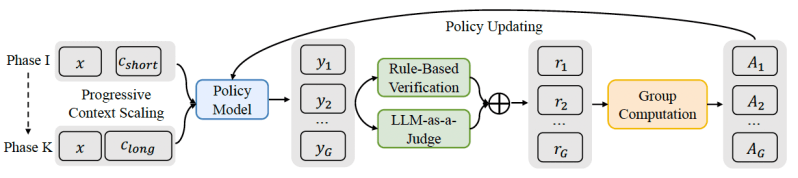

The specific technical inspiration originated from the work of EnCharge's founder, Naveen Verma, and his research at Princeton University in next-generation computing architectures. He and his collaborators spent over seven years exploring a variety of innovative computing architectures, leading to a breakthrough in analog in-memory computing.

This approach aimed to significantly enhance energy efficiency for AI workloads while mitigating the noise and other challenges that had hindered past analog computing efforts. This technical achievement, proven and de-risked over multiple generations of silicon, was the basis for founding EnCharge AI to commercialize analog in-memory computing solutions for AI inference.

EnCharge AI launched in 2022, led by a team with semiconductor and AI system experience. The team spun out of Princeton University with a focus on a robust and scalable analog in-memory AI inference chip and accompanying software.

The company was able to overcome previous hurdles to analog and in-memory chip architectures by leveraging precise metal-wire switch capacitors instead of noise-prone transistors. The result is a full-stack architecture that is up to 20 times more energy efficient than currently available or soon-to-be-available leading digital AI chip solutions.
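
To illustrate why capacitor precision matters, here is a toy Python model of charge-domain in-memory computing in general; it is not EnCharge's actual circuit. Each hypothetical bit cell computes the XNOR of a binary input and a binary weight and charges its capacitor to the supply voltage (for 1) or leaves it discharged (for 0). Shorting the capacitors together then yields a voltage proportional to the number of matching bits, which an ADC digitizes. Accuracy depends on how closely the capacitors match, modeled here as Gaussian mismatch; keeping that mismatch small is the property the company attributes to its precise metal-wire capacitors. The function names, mismatch values, and ideal ADC are all assumptions for illustration.

```python
import random


def charge_domain_mac(x_bits, w_bits, cap_mismatch=0.0, vdd=1.0, seed=0):
    """Toy switched-capacitor MAC over binary inputs and weights (hypothetical).

    Returns the shared-node voltage after charge sharing. Each capacitor is
    1.0 (arbitrary units) plus Gaussian mismatch with std dev cap_mismatch.
    """
    if len(x_bits) != len(w_bits):
        raise ValueError("input and weight vectors must be the same length")
    rng = random.Random(seed)
    caps = [1.0 + rng.gauss(0.0, cap_mismatch) for _ in x_bits]
    # Each cell: XNOR(x, w) = 1 charges its capacitor to vdd, else 0 V.
    charge = sum(c * vdd for c, x, w in zip(caps, x_bits, w_bits) if x == w)
    # Charge sharing: shorting all capacitors gives V = Q_total / C_total.
    return charge / sum(caps)


def adc_readout(voltage, n, vdd=1.0):
    """Ideal ADC with n + 1 levels: recover the count of matching bits."""
    return round(voltage / vdd * n)


def binary_dot(match_count, n):
    """Map an XNOR match count to a +/-1 dot product (matches - mismatches)."""
    return 2 * match_count - n


if __name__ == "__main__":
    rng = random.Random(42)
    n = 256
    x = [rng.randint(0, 1) for _ in range(n)]
    w = [rng.randint(0, 1) for _ in range(n)]
    exact = sum(1 for a, b in zip(x, w) if a == b)
    for sigma in (0.0, 0.01, 0.05):
        v = charge_domain_mac(x, w, cap_mismatch=sigma)
        count = adc_readout(v, n)
        print(f"sigma={sigma}: ADC count={count}, exact={exact}, "
              f"dot={binary_dot(count, n)}")
```

With zero mismatch the readout is exact; as mismatch grows, the shared voltage drifts and can eventually flip ADC codes, which is the kind of noise that hindered earlier analog designs.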

With this technology, EnCharge is fundamentally changing how and where AI computation happens. Its technology dramatically reduces the energy requirements for AI computation, bringing advanced AI workloads out of the data center and onto laptops, workstations, and edge devices. By moving AI inference closer to where data is generated and used, EnCharge enables a new generation of AI-enabled devices and applications that were previously impossible due to energy, weight, or size constraints, while improving security, latency, and cost.

Why it matters

As AI models have grown exponentially in size and complexity, their chip and associated energy demands have skyrocketed. Today, the vast majority of AI inference computation is accomplished with massive clusters of energy-intensive chips warehoused in cloud data centers. This creates cost, latency, and security barriers for applying AI to use cases that require on-device computation.

Only with transformative increases in compute efficiency will AI be able to break out of the data center and address on-device AI use-cases that are size, weight, and power constrained or have latency or privacy requirements that benefit from keeping data local. Lowering the cost and accessibility barriers of advanced AI can have dramatic downstream effects on a broad range of industries, from consumer electronics to aerospace and defense.

The reliance on data centers also presents supply chain bottleneck risks. The AI-driven surge in demand for high-end graphics processing units (GPUs) alone could increase total demand for certain upstream components by 30% or more by 2026, and a demand increase of about 20% or more has a high likelihood of upsetting the supply-demand equilibrium and causing a chip shortage. According to the company, this is already visible in the massive costs of the latest GPUs and in years-long wait lists as a small number of dominant AI companies buy up all available stock.

The environmental and energy demands of these data centers are also unsustainable with current technology. By some estimates, adding AI to a single Google search raises its energy use more than 20x, from 0.3 watt-hours to 7.9 watt-hours. In aggregate, the International Energy Agency (IEA) projects that data centers' electricity consumption in 2026 will be double that of 2022, at about 1,000 terawatt-hours (TWh), roughly equivalent to Japan's current total consumption.

Investors include Tiger Global Management, Samsung Ventures, IQT, RTX Ventures, VentureTech Alliance, Anzu Partners, AlleyCorp and ACVC Partners. The company has 66 employees.
