European Union member states are set to back more flexible rules for filling gas storage before winter, amid criticism that current targets artificially raise prices.

Ambassadors from the bloc’s 27 member states will meet in Brussels on Friday to sign off on a joint push for a 10 percentage-point deviation until 2027 from rules that require tanks to be 90 percent full by winter. If their position is agreed soon with the European Parliament in upcoming talks, the new regulations could come into effect before the next heating season.

The targets were brought in at the height of the energy crisis, when a drop in Russian flows sparked concern that Europe might not have enough gas to make it through a cold winter. But countries like Germany have said the rules helped inflate prices as speculators bet on expected purchases. The regulations have also been criticized for distorting the market by pushing up prices in summer, when they’d normally be cheapest.

The plan to secure more leeway to fill storage, combined with the fallout of the trade war, has helped spark a sharp drop in prices. European gas futures this week hit their lowest level since September, extending a retreat from February’s two-year high. Energy costs are a key concern for EU officials and governments.

In parliament, lawmakers in the industry committee will vote on April 24 on their position on the storage regulation. Changes proposed by the center-right European People’s Party, the largest group in the assembly, are broadly similar to what’s likely to be agreed by member states on Friday.

“The EPP is calling for a more balanced approach that maintains energy security but urgently returns to market-based mechanisms,” Andrea Wechsler, an EPP negotiator, said at a committee meeting this week.

Storage Proposals

Under the proposals, the Nov. 1 deadline would be replaced with a broader range of Oct. 1 to Dec. 1, and countries would be able to deviate from the 90 percent target depending on market conditions. If the regulation is agreed to before the start of October, the flexibilities will apply to this year’s targets.

Under the EU council’s draft plan, some nations will have an option to use an additional 5 percentage-point deviation from the target, but only if it doesn’t harm Europe’s gas market or affect supplies to neighboring countries.

Poland, which holds the EU’s rotating presidency, told lawyers working on the regulation that the council intends for the rules to start before the end of September, Bloomberg reported earlier this week. It will represent member states in talks with the European Parliament about the final version of the rules.

With the extra flexibility and derogations, the European Commission told member states this week that the storage goal would effectively become 67 percent before winter in the years to 2027, a person familiar with the matter has said.

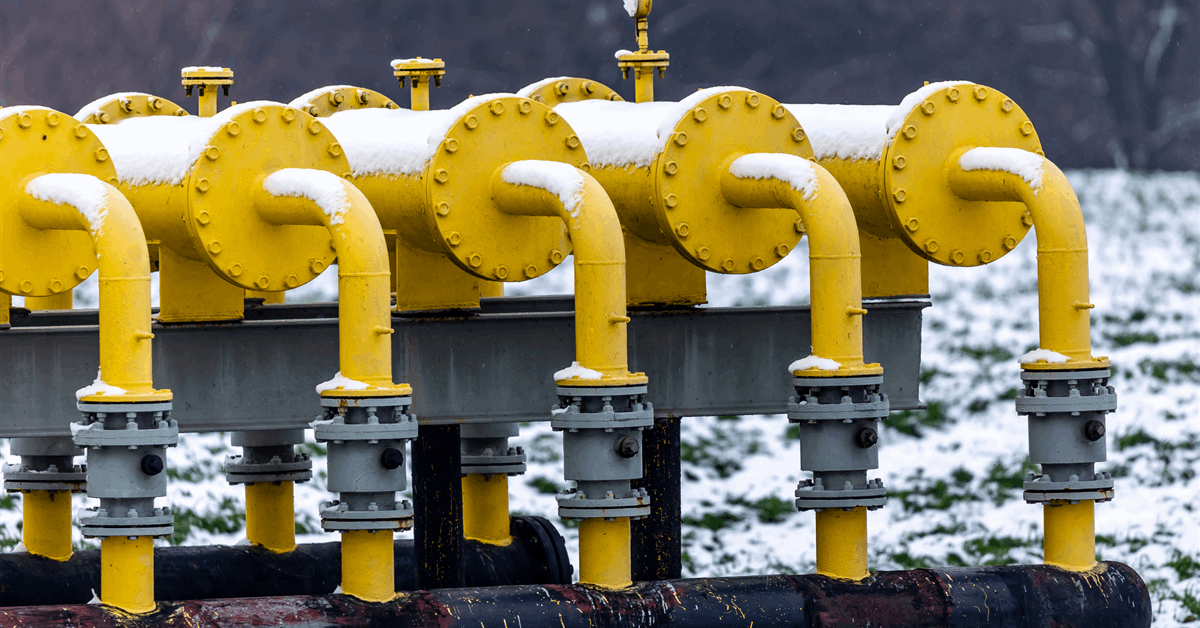

Europe’s underground gas-storage facilities are currently about 35 percent full, near the lowest since 2022.

Trade lobby Eurogas on Thursday called for clarity on storage rules before summer, saying that lingering uncertainty could jeopardize refilling efforts. Once agreed by the council, parliament and the commission, the regulation will need to be translated into the bloc’s official languages before publication.

“More and more we’ve started to intervene in the market due to the energy crisis,” Eurogas Secretary General Andreas Guth said. “More trust in the market and less intervention is the top line.”


Bloomberg