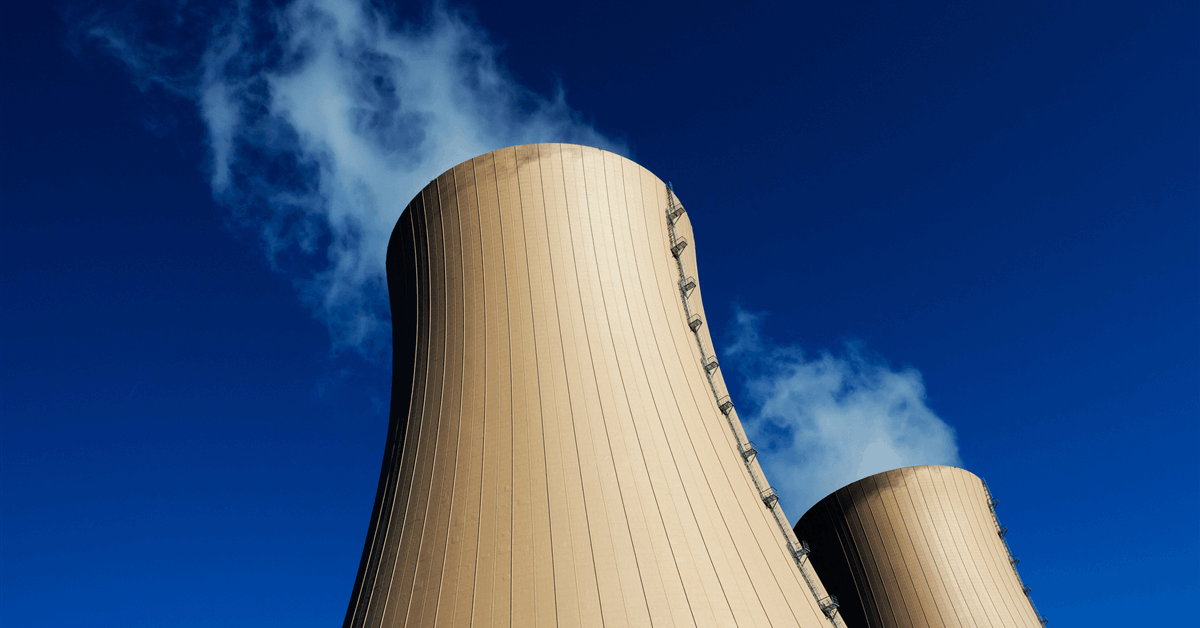

The European Commission has approved a revised Belgian support measure that will extend the lifespan of two nuclear reactors, Doel 4 and Tihange 3.

The Commission said Belgium’s 2003 nuclear phase-out law required all seven nuclear reactors in the country to be closed by 2025. However, because of energy security concerns following the war in Ukraine, the Belgian federal government decided to keep the country’s two newest reactors, Doel 4 and Tihange 3, running for an additional 10 years, and notified the Commission of its plan.

In July 2024, the Commission opened an in-depth investigation to assess whether the measure, which is built around a contract-for-difference (CfD), was necessary and proportionate. The Commission said it had concerns about whether the financial arrangements relieved the beneficiaries of too much risk and whether the transfer of nuclear waste liabilities was appropriate.

The beneficiaries include Electrabel (a subsidiary of Engie S.A.), Luminus (a subsidiary of EDF S.A.), and BE-NUC, a new joint venture between the Belgian state and Electrabel. Once the measure is in place, BE-NUC will own 89.8 percent of both reactors and Luminus will hold the remaining 10.2 percent, the Commission added.

The Commission said the support package for the reactor extensions has three key components, which are assessed together as a single intervention: financial and structural arrangements, including the creation of BE-NUC, a contract-for-difference to provide stable revenues, and additional financial guarantees; the transfer of nuclear waste liabilities from Electrabel to the state in exchange for a lump sum of EUR 15 billion ($15.7 billion); and risk-sharing arrangements and legal protections against future legislative changes affecting nuclear operators.

To address the Commission’s concerns, Belgium adjusted the public support package. It confirmed that the reactors use older technology, which limits how often they can adjust their power output, in line with the limits set by the nuclear safety authority.

Belgium told the Commission that the additional financial support mechanisms, including BE-NUC and various loans, are needed to cover different risks and ensure the project’s long-term viability.

To prevent market distortion, the Commission said, Belgium transferred decisions on economic modulation (adjusting the reactors’ output in response to market prices) from BE-NUC to an independent energy manager. This manager will sell BE-NUC’s nuclear electricity on its own account, with its incentives reviewed every 3.5 years. A competitive tender process will also be conducted to ensure fairness, particularly if Engie’s trading entity takes part.

The Commission noted that, to ensure proportionality, Belgium set the CfD strike price using a discounted cash flow model, strengthened the adjustment for market price risk, capped the operating cash flow guarantee, and imposed strict conditions on the transfer of nuclear waste liabilities, including volume limits, conditioning criteria, and dedicated fund management.

The Commission concluded that the aid is necessary, appropriate and proportionate, and that it keeps distortions of competition to a minimum.

