4. Europe is on the AI clock

Each wave of technology creates an opportunity for different regions of the globe to establish themselves as leaders. The battle for AI supremacy is well underway, with the US holding a strong foothold. The Middle East is likely to have an impact, as many countries there have committed to investing in this area.

During a press Q&A, Oliver Tuszik, president of EMEA for Cisco, talked about the opportunity for Europe and the need to act as one. He commented that the larger EU countries, such as Germany and France, tend to act in their own best interests, but that may not produce the best long-term outcome. While they carry economic heft within the EU, each individual country is small when compared to the likes of the US, India, the Middle East region and others. The EU as a whole, however, carries significant weight.

Tuszik expressed some urgency for the EU to act as one and is optimistic that country leaders are aligned on this. He pointed to the EU AI Act as a proof point that the region understands what’s at stake. He added that the structure of the AI Act, which is outcome-based rather than overly regulated, is another indicator of change within the EU. Time will tell if Tuszik’s optimism is warranted, but we shouldn’t have to wait long to find out.

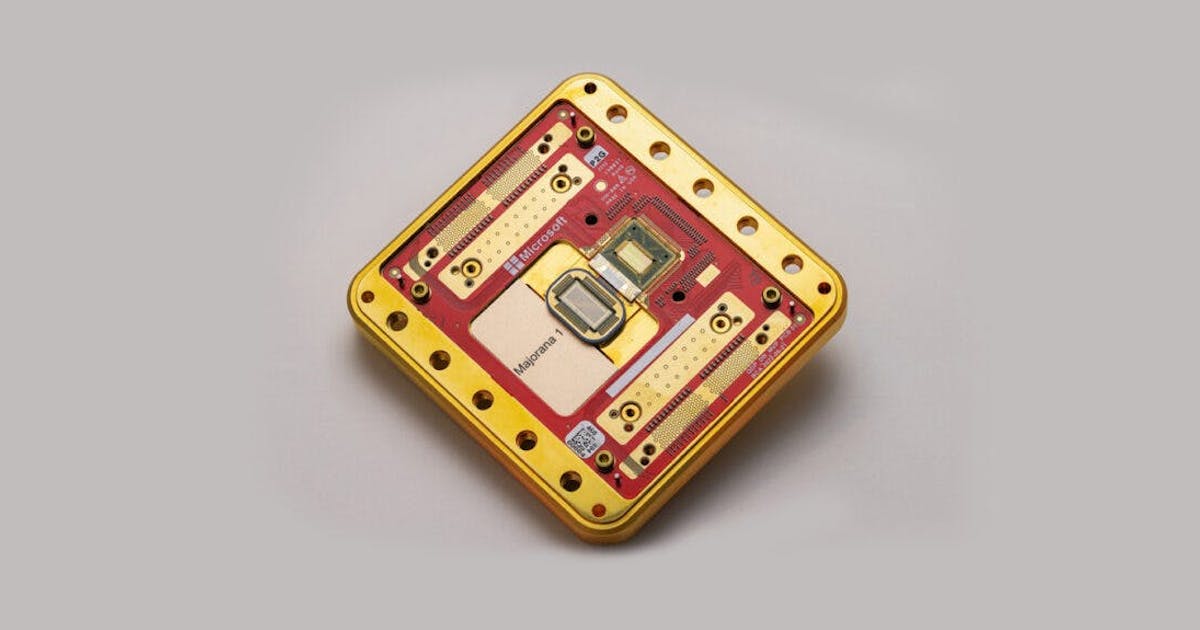

5. Silicon One remains Cisco’s best kept secret

Cisco is one of the few network vendors that designs its own silicon. The tech industry tends to gravitate to certain trends that become absolutes, such as “everything is moving to the cloud.” For years, in networking, doing more in software was all the rage. But it’s always a combination of things that creates a great experience. In networking, one can do a lot in software, but there are certain tasks, such as managing traffic, deep packet inspection and buffering, that are best done in silicon.

A good way to understand the importance of custom silicon is to look at the AI space. Nvidia initially made a GPU because general-purpose processors could not handle high-performance graphics. Similarly, Cisco makes Silicon One because the network has unique requirements that off-the-shelf chips don’t handle well. Initially, Cisco used Silicon One to regain a foothold with the hyperscalers, but the company has done an excellent job of bringing the benefits of Silicon One to the rest of its product line, including the above-mentioned N9300.

Given the price/performance and feature consistency benefits Cisco gets with Silicon One, I’m surprised at the lack of marketing and related awareness of it. Over the last year or so, I’ve seen Martin Lund, executive vice president of the common hardware group at Cisco, get in front of customers, press and analysts more often. But articulating the benefits is something Cisco should double down on rather than leaving Silicon One as a “best kept secret.”