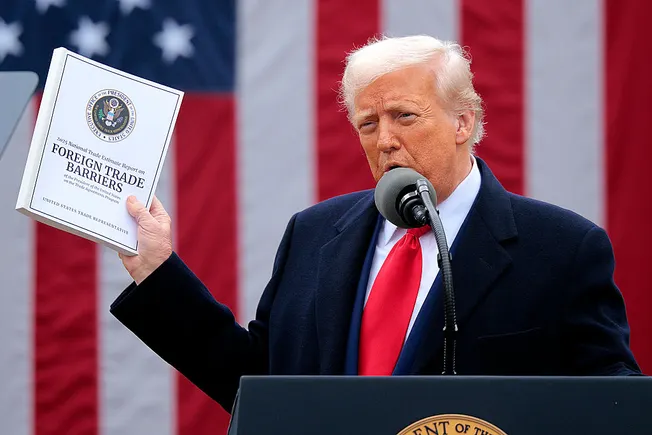

Last week, Mark Zuckerberg declared that Meta is aiming to achieve smarter-than-human AI. He seems to have a recipe for achieving that goal, and the first ingredient is human talent: Zuckerberg has reportedly tried to lure top researchers to Meta Superintelligence Labs with nine-figure offers. The second ingredient is AI itself. Zuckerberg recently said on an earnings call that Meta Superintelligence Labs will be focused on building self-improving AI—systems that can bootstrap themselves to higher and higher levels of performance.

The possibility of self-improvement distinguishes AI from other revolutionary technologies. CRISPR can’t improve its own targeting of DNA sequences, and fusion reactors can’t figure out how to make the technology commercially viable. But LLMs can optimize the computer chips they run on, train other LLMs cheaply and efficiently, and perhaps even come up with original ideas for AI research. And they’ve already made some progress in all these domains.

According to Zuckerberg, AI self-improvement could bring about a world in which humans are liberated from workaday drudgery and can pursue their highest goals with the support of brilliant, hypereffective artificial companions. But self-improvement also creates a fundamental risk, according to Chris Painter, the policy director at the AI research nonprofit METR. If AI accelerates the development of its own capabilities, he says, it could rapidly get better at hacking, designing weapons, and manipulating people. Some researchers even speculate that this positive feedback cycle could lead to an “intelligence explosion,” in which AI rapidly launches itself far beyond the level of human capabilities.

But you don’t have to be a doomer to take the implications of self-improving AI seriously. OpenAI, Anthropic, and Google all include references to automated AI research in their AI safety frameworks, alongside more familiar risk categories such as chemical weapons and cybersecurity. “I think this is the fastest path to powerful AI,” says Jeff Clune, a professor of computer science at the University of British Columbia and senior research advisor at Google DeepMind. “It’s probably the most important thing we should be thinking about.”

By the same token, Clune says, automating AI research and development could have enormous upsides. On our own, we humans might not be able to think up the innovations and improvements that will allow AI to one day tackle prodigious problems like cancer and climate change.

For now, human ingenuity is still the primary engine of AI advancement; otherwise, Meta would hardly have made such exorbitant offers to attract researchers to its superintelligence lab. But AI is already contributing to its own development, and it’s set to take even more of a role in the years to come. Here are five ways that AI is making itself better.

1. Enhancing productivity

Today, the most important contribution that LLMs make to AI development may also be the most banal. “The biggest thing is coding assistance,” says Tom Davidson, a senior research fellow at Forethought, an AI research nonprofit. Tools that help engineers write software more quickly, such as Claude Code and Cursor, appear popular across the AI industry: Google CEO Sundar Pichai claimed in October 2024 that a quarter of the company’s new code was generated by AI, and Anthropic recently documented a wide variety of ways that its employees use Claude Code. If engineers are more productive because of this coding assistance, they will be able to design, test, and deploy new AI systems more quickly.

But the productivity advantage that these tools confer remains uncertain: If engineers are spending large amounts of time correcting errors made by AI systems, they might not be getting any more work done, even if they are spending less of their time writing code manually. A recent study from METR found that developers take about 20% longer to complete tasks when using AI coding assistants, though Nate Rush, a member of METR’s technical staff who co-led the study, notes that it only examined extremely experienced developers working on large code bases. Its conclusions might not apply to AI researchers who write up quick scripts to run experiments.

Conducting a similar study within the frontier labs could help provide a much clearer picture of whether coding assistants are making AI researchers at the cutting edge more productive, Rush says—but that work hasn’t yet been undertaken. In the meantime, just taking software engineers’ word for it isn’t enough: The developers METR studied thought that the AI coding tools had made them work more efficiently, even though the tools had actually slowed them down substantially.

2. Optimizing infrastructure

Writing code quickly isn’t that much of an advantage if you have to wait hours, days, or weeks for it to run. LLM training, in particular, is an agonizingly slow process, and the most sophisticated reasoning models can take many minutes to generate a single response. These delays are major bottlenecks for AI development, says Azalia Mirhoseini, an assistant professor of computer science at Stanford University and senior staff scientist at Google DeepMind. “If we can run AI faster, we can innovate more,” she says.

That’s why Mirhoseini has been using AI to optimize AI chips. Back in 2021, she and her collaborators at Google built a non-LLM AI system that could decide where to place various components on a computer chip to optimize efficiency. Although some other researchers failed to replicate the study’s results, Mirhoseini says that Nature investigated the paper and upheld the work’s validity—and she notes that Google has used the system’s designs for multiple generations of its custom AI chips.

More recently, Mirhoseini has applied LLMs to the problem of writing kernels, low-level functions that control how various operations, like matrix multiplication, are carried out in chips. She’s found that even general-purpose LLMs can, in some cases, write kernels that run faster than the human-designed versions.

Elsewhere at Google, scientists built a system that they used to optimize various parts of the company’s LLM infrastructure. The system, called AlphaEvolve, prompts Google’s Gemini LLM to write algorithms for solving some problem, evaluates those algorithms, and asks Gemini to improve on the most successful—and repeats that process several times. AlphaEvolve designed a new approach for running datacenters that saved 0.7% of Google’s computational resources, made further improvements to Google’s custom chip design, and designed a new kernel that sped up Gemini’s training by 1%.

That might sound like a small improvement, but at a huge company like Google it equates to enormous savings of time, money, and energy. And Matej Balog, a staff research scientist at Google DeepMind who led the AlphaEvolve project, says that he and his team tested the system on only a small component of Gemini’s overall training pipeline. Applying it more broadly, he says, could lead to more savings.
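The propose, evaluate, and select loop described for AlphaEvolve can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not Google's implementation: `propose_variant` is a hypothetical stand-in for the call to Gemini (here it only perturbs a list of numbers at random), and `evaluate` is a hypothetical scoring function with a made-up target. Only the overall shape of the loop comes from the description above.

```python
import random

def propose_variant(parent, rng):
    """Hypothetical stand-in for the LLM call: return a tweaked copy of the parent."""
    return [x + rng.gauss(0, 0.5) for x in parent]

def evaluate(candidate):
    """Hypothetical automated evaluator: higher is better (made-up target values)."""
    target = [3.0, -1.0, 2.0]
    return -sum((c - t) ** 2 for c, t in zip(candidate, target))

def evolve(generations=50, population=8, seed=0):
    rng = random.Random(seed)
    best = [0.0, 0.0, 0.0]          # starting "algorithm"
    best_score = evaluate(best)
    history = [best_score]
    for _ in range(generations):
        # Ask the proposer for several variants of the current best candidate.
        candidates = [propose_variant(best, rng) for _ in range(population)]
        # Score every variant and keep the most successful one.
        scored = [(evaluate(c), c) for c in candidates]
        top_score, top = max(scored, key=lambda pair: pair[0])
        # Only replace the incumbent if the new candidate is actually better.
        if top_score > best_score:
            best, best_score = top, top_score
        history.append(best_score)
    return best, best_score, history

best, score, history = evolve()
print(f"final score: {score:.4f}")
```

Because a candidate replaces the incumbent only when it scores higher, the best score never gets worse from one round to the next. In a real system the evaluator, not the proposer, is what keeps the loop honest.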

3. Automating training

LLMs are famously data hungry, and training them is costly at every stage. In some specific domains, such as unusual programming languages, real-world data is too scarce to train LLMs effectively. Reinforcement learning from human feedback, a technique in which humans score LLM responses to prompts and the LLMs are then trained using those scores, has been key to creating models that behave in line with human standards and preferences, but obtaining human feedback is slow and expensive.

Increasingly, LLMs are being used to fill in the gaps. If prompted with plenty of examples, LLMs can generate plausible synthetic data in domains in which they haven’t been trained, and that synthetic data can then be used for training. LLMs can also be used effectively for reinforcement learning: In an approach called “LLM as a judge,” LLMs, rather than humans, are used to score the outputs of models that are being trained. That approach is key to the influential “Constitutional AI” framework proposed by Anthropic researchers in 2022, in which one LLM is trained to be less harmful based on feedback from another LLM.

Data scarcity is a particularly acute problem for AI agents. Effective agents need to be able to carry out multistep plans to accomplish particular tasks, but examples of successful step-by-step task completion are scarce online, and using humans to generate new examples would be pricey. To overcome this limitation, Stanford’s Mirhoseini and her colleagues have recently piloted a technique in which an LLM agent generates a possible step-by-step approach to a given problem, an LLM judge evaluates whether each step is valid, and then a new LLM agent is trained on those steps. “You’re not limited by data anymore, because the model can just arbitrarily generate more and more experiences,” Mirhoseini says.
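The generate, judge, and train pipeline described above can be sketched as a filtering step. The code below is a minimal illustration under loud assumptions, not the method used by Mirhoseini's team: `toy_agent` is a hypothetical stand-in for an LLM agent (it adds numbers step by step and sometimes makes a mistake), and `toy_judge` is a hypothetical stand-in for an LLM judge (it checks each step's arithmetic). The actual training step is omitted; the sketch only shows how judged trajectories become a training set.

```python
import random
from dataclasses import dataclass

@dataclass
class Trajectory:
    task: str
    steps: list  # each step is a tuple (running_total, addend, claimed_result)

def toy_agent(numbers, rng, error_rate=0.3):
    """Hypothetical agent: sums the numbers one step at a time, occasionally wrongly."""
    total = numbers[0]
    steps = []
    for n in numbers[1:]:
        result = total + n
        if rng.random() < error_rate:
            result += 1  # inject an arithmetic mistake
        steps.append((total, n, result))
        total = result
    return Trajectory(task=f"sum {numbers}", steps=steps)

def toy_judge(step):
    """Hypothetical judge: a step is valid if its arithmetic checks out."""
    running_total, addend, claimed = step
    return running_total + addend == claimed

def build_training_set(tasks, samples_per_task=4, seed=0):
    rng = random.Random(seed)
    kept = []
    for numbers in tasks:
        for _ in range(samples_per_task):
            trajectory = toy_agent(numbers, rng)
            # Keep a trajectory only if the judge accepts every step.
            if all(toy_judge(step) for step in trajectory.steps):
                kept.append(trajectory)
    return kept

training_set = build_training_set([[1, 2, 3], [4, 5, 6, 7]])
print(f"kept {len(training_set)} of 8 sampled trajectories")
```

The quality of the resulting data depends entirely on the judge: a judge that accepts flawed steps would pass those flaws on to the next agent.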

4. Perfecting agent design

One area where LLMs haven’t yet made major contributions is the design of LLMs themselves. Today’s LLMs are all based on a neural-network structure called a transformer, which was proposed by human researchers in 2017, and the notable improvements that have since been made to the architecture were also human-designed.

But the rise of LLM agents has created an entirely new design universe to explore. Agents need tools to interact with the outside world and instructions for how to use them, and optimizing those tools and instructions is essential to producing effective agents. “Humans haven’t spent as much time mapping out all these ideas, so there’s a lot more low-hanging fruit,” Clune says. “It’s easier to just create an AI system to go pick it.”

Together with researchers at the startup Sakana AI, Clune created a system called a “Darwin Gödel Machine”: an LLM agent that can iteratively modify its prompts, tools, and other aspects of its code to improve its own task performance. Not only did the Darwin Gödel Machine achieve higher task scores through modifying itself, but as it evolved, it also managed to find new modifications that its original version wouldn’t have been able to discover. It had entered a true self-improvement loop.

5. Advancing research

Although LLMs are speeding up numerous parts of the LLM development pipeline, humans may remain essential to AI research for quite a while. Many experts point to “research taste,” or the ability that the best scientists have to pick out promising new research questions and directions, as both a particular challenge for AI and a key ingredient in AI development.

But Clune says research taste might not be as much of a challenge for AI as some researchers think. He and Sakana AI researchers are working on an end-to-end system for AI research that they call the “AI Scientist.” It searches through the scientific literature to determine its own research question, runs experiments to answer that question, and then writes up its results.

One paper that it wrote earlier this year, in which it devised and tested a new training strategy aimed at making neural networks better at combining examples from their training data, was anonymously submitted to a workshop at the International Conference on Machine Learning, or ICML—one of the most prestigious conferences in the field—with the consent of the workshop organizers. The training strategy didn’t end up working, but the paper was scored highly enough by reviewers to qualify it for acceptance (it is worth noting that ICML workshops have lower standards for acceptance than the main conference). In another instance, Clune says, the AI Scientist came up with a research idea that was later independently proposed by a human researcher on X, where it attracted plenty of interest from other scientists.

“We are looking right now at the GPT-1 moment of the AI Scientist,” Clune says. “In a few short years, it is going to be writing papers that will be accepted at the top peer-reviewed conferences and journals in the world. It will be making novel scientific discoveries.”

Is superintelligence on its way?

With all this enthusiasm for AI self-improvement, it seems likely that in the coming months and years, the contributions AI makes to its own development will only multiply. To hear Mark Zuckerberg tell it, this could mean that superintelligent models, which exceed human capabilities in many domains, are just around the corner. In reality, though, the impact of self-improving AI is far from certain.

It’s notable that AlphaEvolve has sped up the training of its own core LLM system, Gemini—but that 1% speedup may not observably change the pace of Google’s AI advancements. “This is still a feedback loop that’s very slow,” says Balog, the AlphaEvolve researcher. “The training of Gemini takes a significant amount of time. So you can maybe see the exciting beginnings of this virtuous [cycle], but it’s still a very slow process.”

Still, if each subsequent version of Gemini speeds up its own training by an additional 1%, those accelerations will compound. And because each successive generation will be more capable than the previous one, it should be able to achieve even greater training speedups, not to mention all the other ways it might devise to improve itself. Under such circumstances, proponents of superintelligence argue, an eventual intelligence explosion looks inevitable.

This conclusion, however, ignores a key observation: Innovation gets harder over time. In the early days of any scientific field, discoveries come fast and easy. There are plenty of obvious experiments to run and ideas to investigate, and none of them have been tried before. But as the science of deep learning matures, finding each additional improvement might require substantially more effort on the part of both humans and their AI collaborators. It’s possible that by the time AI systems attain human-level research abilities, humans or less-intelligent AI systems will already have plucked all the low-hanging fruit.
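The compounding argument and the diminishing-returns objection can be put side by side with some simple arithmetic. The sketch below is a toy model, not a forecast: the 1% figure comes from the AlphaEvolve result, while the `decay` parameter (how much each generation's gain shrinks or grows relative to the last) is purely an assumption introduced for illustration.

```python
def cumulative_speedup(first_gain, generations, decay=1.0):
    """Multiply together per-generation training speedups.

    decay == 1.0 keeps the gain constant each generation,
    decay < 1.0 models innovation getting harder,
    decay > 1.0 models each generation finding bigger gains.
    """
    total = 1.0
    gain = first_gain
    for _ in range(generations):
        total *= 1.0 + gain
        gain *= decay
    return total

# Constant 1% gains compound slowly: roughly 2x only after about 70 generations.
print(cumulative_speedup(0.01, 70))

# If each gain is half the previous one, the total stays below about 1.02x forever.
print(cumulative_speedup(0.01, 1000, decay=0.5))
```

Which regime applies is exactly what the two camps disagree about; the model only shows how sensitive the outcome is to that one assumption.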

Determining the real-world impact of AI self-improvement, then, is a mighty challenge. To make matters worse, the AI systems that matter most for AI development—those being used inside frontier AI companies—are likely more advanced than those that have been released to the general public, so measuring o3’s capabilities might not be a great way to infer what’s happening inside OpenAI.

But external researchers are doing their best, for example by tracking the overall pace of AI development to determine whether that pace is accelerating. METR is monitoring advancements in AI abilities by measuring how long it takes humans to do tasks that cutting-edge systems can complete themselves. It has found that the length of tasks that AI systems can complete independently has, since the release of GPT-2 in 2019, doubled every seven months.

Since 2024, that doubling time has shortened to four months, which suggests that AI progress is indeed accelerating. There may be unglamorous reasons for that: Frontier AI labs are flush with investor cash, which they can spend on hiring new researchers and purchasing new hardware. But it’s entirely plausible that AI self-improvement could also be playing a role.
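To get a feel for the difference between a seven-month and a four-month doubling time, the figures can be converted into yearly growth. This is plain arithmetic on the two doubling times reported above; the function names are illustrative, and the eightfold example is hypothetical rather than a METR measurement.

```python
import math

def growth_per_year(doubling_months):
    """Factor by which task length grows in 12 months at a given doubling time."""
    return 2 ** (12 / doubling_months)

def months_to_grow(factor, doubling_months):
    """Months needed for task length to grow by `factor` at a given doubling time."""
    return doubling_months * math.log2(factor)

print(growth_per_year(7))    # about 3.3x per year
print(growth_per_year(4))    # 8x per year
print(months_to_grow(8, 7))  # 21 months for an eightfold increase
print(months_to_grow(8, 4))  # 12 months for an eightfold increase
```

In other words, an eightfold increase in task length that would take 21 months at the older rate would take 12 months at the newer one.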

That’s just one indirect piece of evidence. But Davidson, the Forethought researcher, says there’s good reason to expect that AI will supercharge its own advancement, at least for a time. METR’s work suggests that the low-hanging-fruit effect isn’t slowing down human researchers today, or at least that increased investment is effectively counterbalancing any slowdown. If AI notably increases the productivity of those researchers, or even takes on some fraction of the research work itself, that balance will shift in favor of research acceleration.

“You would, I think, strongly expect that there’ll be a period when AI progress speeds up,” Davidson says. “The big question is how long it goes on for.”