Manav Mittal is a senior project manager at Consumers Energy.

As artificial intelligence continues to transform industries, from healthcare and finance to autonomous vehicles and smart cities, the demand for data processing is skyrocketing. AI-driven data centers, which power the algorithms behind these innovations, are the backbone of this revolution. However, with the expansion of AI capabilities comes a growing concern: how will these energy-hungry facilities affect our already strained power grids?

Take Meta’s $10 billion AI-optimized data center in Louisiana, for example. This enormous facility, designed to handle the massive computational load required by AI, will demand a staggering amount of electricity. As AI becomes more integrated into our everyday lives, the strain on the power grid is only set to increase. But here’s the thing — AI doesn’t have to be a burden on the grid. With thoughtful strategies and a proactive approach, we can minimize the environmental and infrastructural costs of these data centers. The question isn’t whether AI will disrupt the grid, but how we can make it work for us without sacrificing sustainability.

Energy efficiency: The first line of defense

It’s easy to think of data centers as mere consumers of energy, but the truth is, they’re not all created equal. There’s plenty of room for improvement when it comes to energy efficiency. The first step in minimizing AI data center impacts on the grid is simply making these centers run more efficiently.

Cooling systems alone account for a huge chunk of energy consumption in data centers. Traditionally, large HVAC systems keep servers at optimal temperatures, but these systems are often inefficient. Thankfully, innovative cooling methods — like liquid cooling and even immersion cooling — are beginning to replace outdated systems. These newer technologies can significantly reduce energy usage, which is crucial when every watt counts.

And it’s not just cooling that needs to be rethought. Advances in hardware, such as more energy-efficient processors and GPUs, are improving the performance-to-energy ratio of data centers. These small innovations might not make the headlines, but their cumulative impact on energy consumption could be profound. Data centers should be incentivized to adopt these energy-saving technologies, not only to reduce their operating costs but also to lessen their impact on the grid.

Renewable energy: A cleaner, greener future

Let’s be clear — data centers don’t have to rely on fossil fuels to power their operations. In fact, many major tech companies, including Meta, have made ambitious commitments to run their data centers on 100% renewable energy. This shift to clean energy is one of the most impactful ways to reduce the strain on the grid. If AI data centers can be powered by wind, solar and other renewable sources, we’re looking at a win-win situation: energy demand is met without contributing to greenhouse gas emissions.

However, making this transition requires more than goodwill; it requires collaboration with renewable energy developers and utilities. Power purchase agreements are a vital tool here. These long-term contracts allow data centers to buy renewable energy directly from producers at an agreed price, which gives developers the revenue certainty they need to build new wind and solar capacity. The beauty of this approach is that it supports the broader goal of transitioning to a clean energy economy, all while minimizing the impact on local power infrastructure.

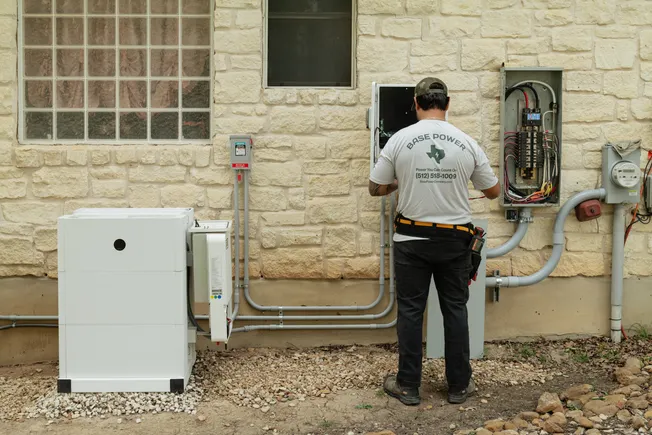

But let’s not stop there. Data centers should also consider on-site renewable energy generation. Installing solar panels or wind turbines at their facilities can reduce their reliance on the grid during peak demand periods. In fact, on-site energy production, combined with energy storage, could allow data centers to be largely self-sufficient, alleviating much of the pressure on local grids.

Modernizing the grid: Building for the future

While improving the energy efficiency of data centers and shifting to renewable energy are essential steps, we can’t ignore the infrastructure itself. The grid, as it exists today, was not built to handle the enormous, and sometimes unpredictable, energy demands of AI data centers. As data centers become larger and more prevalent, the grid needs to evolve to accommodate them.

Here’s where smart grids come into play. These modernized grids use sensors and real-time data to better manage energy distribution. With a smart grid, utilities can dynamically adjust power flow based on demand, directing energy where it’s needed most. By integrating AI into grid management, utilities can also anticipate and respond to shifts in energy demand caused by data centers, keeping the grid more stable overall.

In addition to smart grids, we need to consider energy storage. Renewable energy is intermittent by nature — solar panels don’t generate electricity at night, and wind turbines are silent on calm days. By incorporating energy storage systems, such as large-scale batteries, data centers can store excess energy generated during off-peak hours and use it when demand is high. This will help to smooth out the fluctuations in energy supply and ensure that data centers are less reliant on the grid during peak times.
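To make the storage idea concrete, here is a minimal sketch of a battery that charges during off-peak hours and discharges during peak hours. Everything in it is an illustrative assumption rather than a description of any real facility: the function name `dispatch_battery`, the one-hour time steps, the flat 50 MW load and the battery size are all hypothetical, and the sketch ignores charging losses and on-site generation.

```python
def dispatch_battery(load_mw, peak_hours, capacity_mwh, max_rate_mw):
    """Return (grid_draw_mw, state_of_charge_mwh) for each one-hour step.

    Hypothetical rule: charge from the grid in off-peak hours,
    discharge to serve the data center's load in peak hours.
    With one-hour steps, 1 MW sustained for a step equals 1 MWh.
    """
    soc = 0.0  # battery state of charge, starts empty (assumption)
    grid_draw, soc_trace = [], []
    for hour, load in enumerate(load_mw):
        if hour in peak_hours:
            # Discharge as much as the load, rate limit and stored energy allow.
            discharge = min(load, max_rate_mw, soc)
            soc -= discharge
            grid_draw.append(load - discharge)
        else:
            # Charge until the battery is full, limited by the charge rate.
            charge = min(max_rate_mw, capacity_mwh - soc)
            soc += charge
            grid_draw.append(load + charge)
        soc_trace.append(soc)
    return grid_draw, soc_trace


# Flat 50 MW load for six hours; hours 3 and 4 are treated as peak.
grid, soc = dispatch_battery([50] * 6, {3, 4}, capacity_mwh=20, max_rate_mw=10)
print(grid)  # [60, 60, 50.0, 40.0, 40.0, 60.0]
```

In this toy run the facility draws 10 MW less from the grid in each peak hour and makes up for it by drawing more beforehand, which is the smoothing effect described above.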

Demand response: A shared responsibility

Data centers can also help on the demand side. AI-driven facilities have a responsibility to participate in demand response programs. These programs incentivize businesses and consumers to reduce their energy usage during periods of peak demand, which helps prevent grid overloads. Data centers are prime candidates for demand response because they can adjust their operations, for example by shifting deferrable workloads to off-peak hours, without negatively impacting performance. By participating in these programs, AI data centers can significantly ease pressure on the grid, especially during high-demand periods, like hot summer afternoons when air conditioning use is at its peak.

The key here is that grid stability is a shared responsibility. While AI data centers are heavy consumers of electricity, they also have the tools to manage their consumption intelligently. Rather than adding to the grid’s burden, these facilities can be part of the solution. Through demand response, they can cut their energy use when the grid most needs relief, helping to balance supply and demand and prevent power outages.
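As a rough illustration of shifting workloads to off-peak hours, the sketch below moves deferrable jobs out of a peak window and leaves time-sensitive ones alone. The `Job` class, the `hourly_load` function, the job names and the megawatt figures are all hypothetical. A real demand response program would act on signals from the utility and on far richer scheduling constraints.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    power_mw: float
    requested_hour: int
    deferrable: bool  # assumption: batch work can wait, live services cannot


def hourly_load(jobs, peak_hours, horizon=24):
    """Return the load in MW for each hour after deferring flexible jobs."""
    load = [0.0] * horizon
    for job in jobs:
        hour = job.requested_hour
        if job.deferrable and hour in peak_hours:
            # Move the job to the first later off-peak hour, if one exists.
            later = [h for h in range(hour + 1, horizon) if h not in peak_hours]
            if later:
                hour = later[0]
        load[hour] += job.power_mw
    return load


jobs = [
    Job("inference", 30.0, requested_hour=14, deferrable=False),
    Job("training", 50.0, requested_hour=14, deferrable=True),
    Job("backup", 5.0, requested_hour=15, deferrable=True),
]
load = hourly_load(jobs, peak_hours={14, 15, 16})
print(load[14], load[15], load[17])  # 30.0 0.0 55.0
```

Here the peak-hour load at 2 p.m. falls from 80 MW to 30 MW, while the deferred 55 MW runs at 5 p.m., after the peak window has closed.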

Collaboration: A holistic approach to grid sustainability

It’s clear that minimizing the impact of AI data centers on the power grid isn’t a task for data center operators alone. This challenge requires collaboration among technology companies, utilities, policymakers and local communities. Governments must provide the right incentives to encourage the adoption of clean energy and energy-efficient technologies. At the same time, utility companies must modernize the grid to accommodate the growing demands of AI data centers and other large energy consumers.

We also need to prioritize transparency and dialogue with communities. Local governments and residents should be included in conversations about how AI data centers impact energy infrastructure. Through collaboration, we can ensure that these facilities contribute positively to both the local economy and the environment.

Conclusion: A vision for a sustainable future

The rise of AI presents enormous opportunities for innovation, but it also poses significant challenges, particularly when it comes to energy consumption. AI data centers are indispensable to the future of technology, but they must be built in a way that minimizes their impact on the power grid and the environment.

By focusing on energy efficiency, incorporating renewable energy, modernizing grid infrastructure and participating in demand response programs, we can reduce the strain AI data centers place on the grid. Ultimately, it’s about balancing progress with sustainability. As we move toward a cleaner, smarter and more connected future, we must ensure that the rise of AI doesn’t come at the expense of our planet — or our power systems.