Google DeepMind has once again used large language models to discover new solutions to long-standing problems in math and computer science. This time the firm has shown that its approach can not only tackle unsolved theoretical puzzles, but improve a range of important real-world processes as well.

Google DeepMind’s new tool, called AlphaEvolve, uses the Gemini 2.0 family of large language models (LLMs) to produce code for a wide range of different tasks. LLMs are known to be hit and miss at coding. The twist here is that AlphaEvolve scores each of Gemini’s suggestions, throwing out the bad and tweaking the good, in an iterative process, until it has produced the best algorithm it can. In many cases, the results are more efficient or more accurate than the best existing (human-written) solutions.

“You can see it as a sort of super coding agent,” says Pushmeet Kohli, a vice president at Google DeepMind who leads its AI for Science teams. “It doesn’t just propose a piece of code or an edit, it actually produces a result that maybe nobody was aware of.”

In particular, AlphaEvolve came up with a way to improve the software Google uses to allocate jobs to its many millions of servers around the world. Google DeepMind claims the company has been using this new software across all of its data centers for more than a year, freeing up 0.7% of Google’s total computing resources. That might not sound like much, but at Google’s scale it’s huge.

Jakob Moosbauer, a mathematician at the University of Warwick in the UK, is impressed. He says the way AlphaEvolve searches for algorithms that produce specific solutions—rather than searching for the solutions themselves—makes it especially powerful. “It makes the approach applicable to such a wide range of problems,” he says. “AI is becoming a tool that will be essential in mathematics and computer science.”

AlphaEvolve continues a line of work that Google DeepMind has been pursuing for years. Its vision is that AI can help to advance human knowledge across math and science. In 2022, it developed AlphaTensor, a model that found a faster way to multiply matrices (a fundamental problem in computer science), beating a record that had stood for more than 50 years. In 2023, it revealed AlphaDev, which discovered faster ways to carry out a number of basic calculations that computers perform trillions of times a day. AlphaTensor and AlphaDev both turn math problems into a kind of game, then search for a winning series of moves.

FunSearch, which arrived in late 2023, replaced the game-playing AI with LLMs that can generate code. Because LLMs can carry out a range of tasks, FunSearch can take on a wider variety of problems than its predecessors, which were trained to play just one type of game. The tool was used to crack a famous unsolved problem in pure mathematics.

AlphaEvolve is the next generation of FunSearch. Instead of coming up with short snippets of code to solve a specific problem, as FunSearch did, it can produce programs that are hundreds of lines long. This makes it applicable to a much wider variety of problems.

In theory, AlphaEvolve could be applied to any problem that can be described in code and that has solutions that can be evaluated by a computer. “Algorithms run the world around us, so the impact of that is huge,” says Matej Balog, a researcher at Google DeepMind who leads the algorithm discovery team.

Survival of the fittest

Here’s how it works: AlphaEvolve can be prompted like any LLM. Give it a description of the problem and any extra hints you want, such as previous solutions, and AlphaEvolve will get Gemini 2.0 Flash (the smallest, fastest version of Google DeepMind’s flagship LLM) to generate multiple blocks of code to solve the problem.

It then takes these candidate solutions, runs them to see how accurate or efficient they are, and scores them according to a range of relevant metrics. Does this code produce the correct result? Does it run faster than previous solutions? And so on.

AlphaEvolve then takes the best of the current batch of solutions and asks Gemini to improve them. Sometimes AlphaEvolve will throw a previous solution back into the mix to prevent Gemini from hitting a dead end.

When it gets stuck, AlphaEvolve can also call on Gemini 2.0 Pro, the most powerful of Google DeepMind’s LLMs. The idea is to generate many solutions with the faster Flash but add solutions from the slower Pro when needed.

These rounds of generation, scoring, and regeneration continue until Gemini fails to come up with anything better than what it already has.
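
The loop described above can be sketched in a few lines of Python. This is a toy illustration, not Google DeepMind's code: the function names (`evaluate`, `propose`, `evolve`) are invented for this example, a random tweak stands in for Gemini, and the "programs" are just three numbers, the coefficients of a quadratic that is scored on how closely it matches a target curve. The 80/20 parent choice and the `patience` cutoff are likewise assumptions made for the sketch.

```python
import random


def evaluate(candidate):
    """Score a candidate; higher is better (0 is a perfect match).

    Toy metric: negative squared error of a*x^2 + b*x + c against the
    target x^2 - 3x + 2 on the integers from -5 to 5.
    """
    a, b, c = candidate
    return -sum((a * x * x + b * x + c - (x * x - 3 * x + 2)) ** 2
                for x in range(-5, 6))


def propose(parent, rng, n=8):
    """Hypothetical stand-in for the LLM: return n tweaked copies of parent."""
    return [tuple(v + rng.choice((-1, 0, 1)) for v in parent) for _ in range(n)]


def evolve(seed, rng, patience=20, max_rounds=1000):
    """Generate, score, and regenerate until nothing better turns up."""
    archive = [seed]                      # earlier solutions kept for reuse
    best, best_score = seed, evaluate(seed)
    stale = 0                             # rounds since the last improvement
    for _ in range(max_rounds):
        # Usually build on the current best, but sometimes throw an earlier
        # solution back into the mix to avoid dead ends.
        parent = best if rng.random() < 0.8 else rng.choice(archive)
        scored = [(evaluate(c), c) for c in propose(parent, rng)]
        top_score, top = max(scored)      # best candidate of this batch
        archive.append(top)
        if top_score > best_score:
            best, best_score = top, top_score
            stale = 0
        else:
            stale += 1
            if stale >= patience:         # nothing better: stop
                break
    return best, best_score


if __name__ == "__main__":
    print(evolve((0, 0, 0), random.Random(0)))
```

The real system differs in scale and substance: its candidates are programs hundreds of lines long, and its scoring step actually runs them. The shape of the loop is the point here.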

Number games

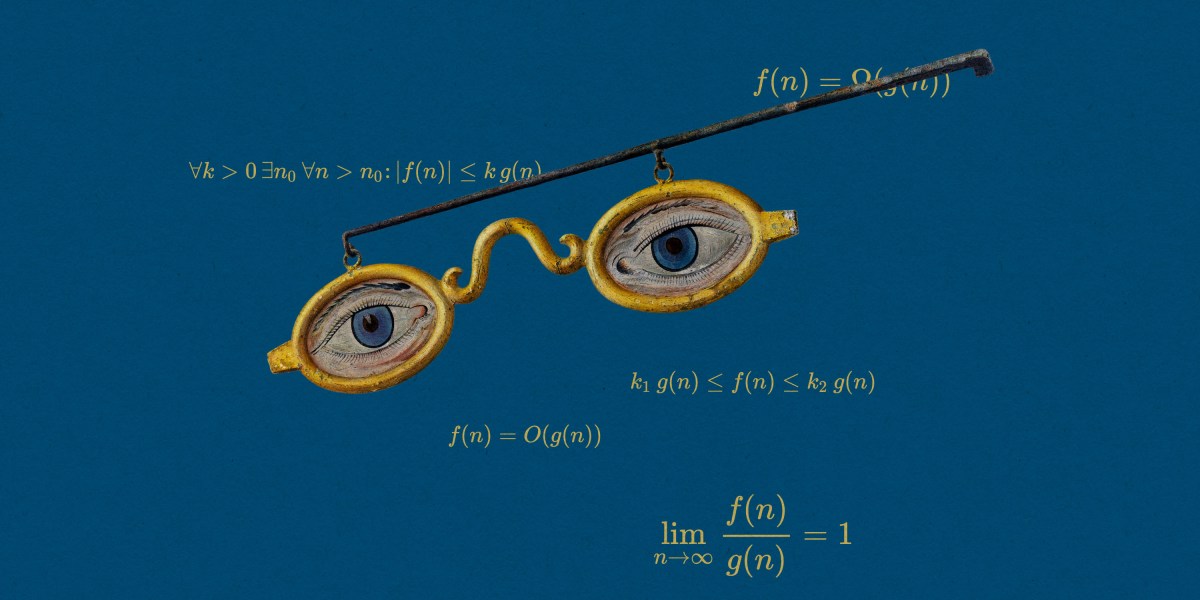

The team tested AlphaEvolve on a range of different problems. For example, they looked at matrix multiplication again to see how a general-purpose tool like AlphaEvolve compared to the specialized AlphaTensor. Matrices are grids of numbers. Matrix multiplication is a basic computation that underpins many applications, from AI to computer graphics, yet nobody knows the fastest way to do it. “It’s kind of unbelievable that it’s still an open question,” says Balog.
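
To make the question concrete, here is the classic shortcut the field has been trying to beat. The textbook method for multiplying two two-by-two matrices uses eight multiplications. In 1969 the mathematician Volker Strassen showed it can be done with seven, at the cost of a few extra additions. The sketch below implements that well-known trick in Python (the function names are chosen for this example, and none of it is AlphaEvolve's output) and checks it against the textbook method.

```python
def naive_2x2(A, B):
    """Textbook product of two 2x2 matrices: 8 multiplications."""
    (a11, a12), (a21, a22) = A
    (b11, b12), (b21, b22) = B
    return [[a11 * b11 + a12 * b21, a11 * b12 + a12 * b22],
            [a21 * b11 + a22 * b21, a21 * b12 + a22 * b22]]


def strassen_2x2(A, B):
    """Strassen's scheme for the same product: 7 multiplications."""
    (a11, a12), (a21, a22) = A
    (b11, b12), (b21, b22) = B
    # The seven products, each a single multiplication.
    m1 = (a11 + a22) * (b11 + b22)
    m2 = (a21 + a22) * b11
    m3 = a11 * (b12 - b22)
    m4 = a22 * (b21 - b11)
    m5 = (a11 + a12) * b22
    m6 = (a21 - a11) * (b11 + b12)
    m7 = (a12 - a22) * (b21 + b22)
    # Recombine using additions and subtractions only.
    return [[m1 + m4 - m5 + m7, m3 + m5],
            [m2 + m4, m1 - m2 + m3 + m6]]


if __name__ == "__main__":
    A = [[1, 2], [3, 4]]
    B = [[5, 6], [7, 8]]
    print(strassen_2x2(A, B) == naive_2x2(A, B))
```

Saving one multiplication sounds trivial, but the trick can be applied recursively to blocks of a larger matrix, so the savings compound: a four-by-four product needs 49 multiplications this way instead of 64.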

The team gave AlphaEvolve a description of the problem and an example of a standard algorithm for solving it. The tool not only produced new algorithms that could multiply matrices of 14 different sizes faster than any existing approach, it also improved on AlphaTensor’s record-beating result for multiplying two four-by-four matrices.

AlphaEvolve scored 16,000 candidates suggested by Gemini to find the winning solution, but that’s still more efficient than AlphaTensor, says Balog. AlphaTensor’s solution also only worked when a matrix was filled with 0s and 1s. AlphaEvolve solves the problem with other numbers too.

“The result on matrix multiplication is very impressive,” says Moosbauer. “This new algorithm has the potential to speed up computations in practice.”

Manuel Kauers, a mathematician at Johannes Kepler University in Linz, Austria, agrees: “The improvement for matrices is likely to have practical relevance.”

By coincidence, Kauers and a colleague have just used a different computational technique to find some of the speedups AlphaEvolve came up with. The pair posted a paper online reporting their results last week.

“It is great to see that we are moving forward with the understanding of matrix multiplication,” says Kauers. “Every technique that helps is a welcome contribution to this effort.”

Real-world problems

Matrix multiplication was just one breakthrough. In total, Google DeepMind tested AlphaEvolve on more than 50 different types of well-known math puzzles, including problems in Fourier analysis (the math behind data compression, essential to applications such as video streaming), the minimum overlap problem (an open problem in number theory proposed by mathematician Paul Erdős in 1955), and kissing numbers (a problem introduced by Isaac Newton that has applications in materials science, chemistry, and cryptography). AlphaEvolve matched the best existing solutions in 75% of cases and found better solutions in 20% of cases.

Google DeepMind then applied AlphaEvolve to a handful of real-world problems. As well as coming up with a more efficient algorithm for managing computational resources across data centers, the tool found a way to reduce the power consumption of Google’s specialized tensor processing unit chips.

AlphaEvolve even found a way to speed up the training of Gemini itself, by producing a more efficient algorithm for managing a certain type of computation used in the training process.

Google DeepMind plans to continue exploring potential applications of its tool. One limitation is that AlphaEvolve can’t be used for problems with solutions that need to be scored by a person, such as lab experiments that are subject to interpretation.

Moosbauer also points out that while AlphaEvolve may produce impressive new results across a wide range of problems, it gives little theoretical insight into how it arrived at those solutions. That’s a drawback when it comes to advancing human understanding.

Even so, tools like AlphaEvolve are set to change the way researchers work. “I don’t think we are finished,” says Kohli. “There is much further that we can go in terms of how powerful this type of approach is.”