Nonprofits are striving to preserve a US effort to modernize greenhouse-gas measurements, amid growing fears that the Trump administration’s dismantling of federal programs will obscure the nation’s contributions to climate change.

The Data Foundation, a Washington, DC, nonprofit that advocates for open data, is fundraising for an initiative that will coordinate efforts among nonprofits, technical experts, and companies to improve the accuracy and accessibility of climate emissions information. It will build on an effort to improve the collection of emissions data that former president Joe Biden launched in 2023—and which President Trump nullified on his first day in office.

The initiative will help prioritize responses to changes in federal greenhouse-gas monitoring and measurement programs, but the Data Foundation stresses that it will primarily serve a “long-standing need for coordination” of such efforts outside of government agencies.

The new greenhouse-gas coalition is one of a growing number of nonprofit and academic groups that have spun up or shifted focus to keep essential climate monitoring and research efforts going amid the Trump administration’s assault on environmental funding, staffing, and regulations. Those include efforts to ensure that US scientists can continue to contribute to the UN’s major climate report and publish assessments of the rising domestic risks of climate change. Otherwise, the loss of these programs will make it increasingly difficult for communities to understand how more frequent or severe wildfires, droughts, heat waves, and floods will harm them—and how dire the dangers could become.

Few believe that nonprofits or private industry can come close to filling the funding holes that the Trump administration is digging. But observers say it’s essential to try to sustain efforts to understand the risks of climate change that the federal government has historically overseen, even if the attempts are merely stopgap measures.

If we give up these sources of emissions data, “we’re flying blind,” says Rachel Cleetus, senior policy director with the climate and energy program at the Union of Concerned Scientists. “We’re deliberating taking away the very information that would help us understand the problem and how to address it best.”

Improving emissions estimates

The Environmental Protection Agency, the National Oceanic and Atmospheric Administration, the US Forest Service, and other agencies have long collected information about greenhouse gases in a variety of ways. These include self-reporting by industry; shipboard, balloon, and aircraft readings of gas concentrations in the atmosphere; satellite measurements of the carbon dioxide and methane released by wildfires; and on-the-ground measurements of trees. The EPA, in turn, collects and publishes the data from these disparate sources as the Inventory of US Greenhouse Gas Emissions and Sinks.

But that report comes out on a two-year lag, and studies show that some of the estimates it relies on could be way off—particularly the self-reported ones.

A recent analysis using satellites to measure methane pollution from four large landfills found they produce, on average, six times more emissions than the facilities had reported to the EPA. Likewise, a 2018 study in Science found that the actual methane leaks from oil and gas infrastructure were about 60% higher than the self-reported estimates in the agency’s inventory.

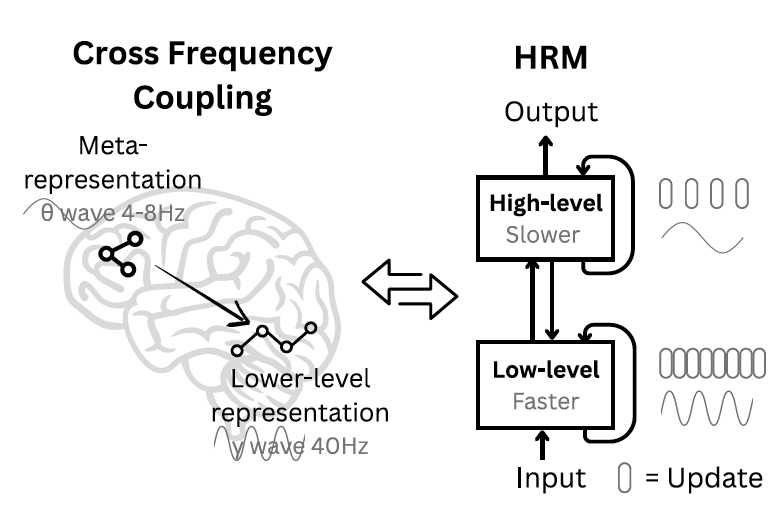

The Biden administration’s initiative—the National Strategy to Advance an Integrated US Greenhouse Gas Measurement, Monitoring, and Information System—aimed to adopt state-of-the-art tools and methods to improve the accuracy of these estimates, including satellites and other monitoring technologies that can replace or check self-reported information.

The administration specifically sought to achieve these improvements through partnerships between government, industry, and nonprofits. The initiative called for the data collected across groups to be published to an online portal in formats that would be accessible to policymakers and the public.

Moving toward a system that produces more current and reliable data is essential for understanding the rising risks of climate change and tracking whether industries are abiding by government regulations and voluntary climate commitments, says Ben Poulter, a former NASA scientist who coordinated the Biden administration effort as a deputy director in the Office of Science and Technology Policy.

“Once you have this operational system, you can provide near-real-time information that can help drive climate action,” Poulter says. He is now a senior scientist at Spark Climate Solutions, a nonprofit focused on accelerating emerging methods of combating climate change, and he is advising the Data Foundation’s Climate Data Collaborative, which is overseeing the new greenhouse-gas initiative.

Slashed staffing and funding

But the momentum behind the federal strategy deflated when Trump returned to office. On his first day, he signed an executive order that effectively halted it. The White House has since slashed staffing across the agencies at the heart of the effort, sought to shut down specific programs that generate emissions data, and raised uncertainties about the fate of numerous other program components.

In April, the administration missed a deadline to share the updated greenhouse-gas inventory with the United Nations, for the first time in three decades, as E&E News reported. It eventually did release the report in May, but only after the Environmental Defense Fund filed a Freedom of Information Act request.

There are also indications that the collection of emissions data might be in jeopardy. In March, the EPA said it would “reconsider” the Greenhouse Gas Reporting Program, which requires thousands of power plants, refineries, and other industrial facilities to report emissions each year.

In addition, the tax and spending bill that Trump signed into law earlier this month rescinds provisions in Biden’s Inflation Reduction Act that provided incentives or funding for corporate greenhouse-gas reporting and methane monitoring.

Meanwhile, the White House has also proposed slashing funding for the National Oceanic and Atmospheric Administration and shuttering a number of its labs. Those include the facility that supports the Mauna Loa Observatory in Hawaii, the world’s longest-running carbon dioxide measuring program, as well as the Global Monitoring Laboratory, which operates a global network of collection flasks that capture air samples used to measure concentrations of nitrous oxide, chlorofluorocarbons, and other greenhouse gases.

Under the latest appropriations negotiations, Congress seems set to spare NOAA and other agencies the full cuts pushed by the Trump administration, but that may or may not protect various climate programs within them. As observers have noted, the loss of experts throughout the federal government, coupled with the priorities set by Trump-appointed leaders of those agencies, could still prevent crucial emissions data from being collected, analyzed, and published.

“That’s a huge concern,” says David Hayes, a professor at the Stanford Doerr School of Sustainability, who previously worked on the effort to upgrade the nation’s emissions measurement and monitoring as special assistant to President Biden for climate policy. It’s not clear “whether they’re going to continue and whether the data availability will drop off.”

‘A natural disaster’

Amid all these cutbacks and uncertainties, those still hoping to make progress toward an improved system for measuring greenhouse gases have had to adjust their expectations: It’s now at least as important to simply preserve or replace existing federal programs as it is to move toward more modern tools and methods.

But Ryan Alexander, executive director of the Data Foundation’s Climate Data Collaborative, is optimistic that there will be opportunities to do both.

She says the new greenhouse-gas coalition will strive to identify the highest-priority needs and help other nonprofits or companies accelerate the development of new tools or methods. It will also aim to ensure that these organizations avoid replicating one another’s efforts and deliver data with high scientific standards, in open and interoperable formats.

The Data Foundation declines to say what other nonprofits will be members of the coalition or how much money it hopes to raise, but it plans to make a formal announcement in the coming weeks.

Nonprofits and companies are already playing a larger role in monitoring emissions, including organizations like Carbon Mapper, which operates satellites and aircraft that detect and measure methane emissions from particular facilities. The EDF also launched a satellite last year, known as MethaneSAT, that could spot large and small sources of emissions—though it lost power earlier this month and probably cannot be recovered.

Alexander notes that shifting from self-reported figures to observational technology like satellites could not just replace but perhaps also improve on the EPA reporting program that the Trump administration has moved to shut down.

Given the “dramatic changes” brought about by this administration, “the future will not be the past,” she says. “This is like a natural disaster. We can’t think about rebuilding in the way that things have been in the past. We have to look ahead and say, ‘What is needed? What can people afford?’”

Organizations can also use this moment to test and develop emerging technologies that could improve greenhouse-gas measurements, including novel sensors or artificial intelligence tools, Hayes says.

“We are at a time when we have these new tools, new technologies for measurement, measuring, and monitoring,” he says. “To some extent it’s a new era anyway, so it’s a great time to do some pilot testing here and to demonstrate how we can create new data sets in the climate area.”

Saving scientific contributions

It’s not just the collection of emissions data that nonprofits and academic groups are hoping to save. Notably, the American Geophysical Union and its partners have taken on two additional climate responsibilities that traditionally fell to the federal government.

The US State Department’s Office of Global Change historically coordinated the nation’s contributions to the UN Intergovernmental Panel on Climate Change’s major reports on climate risks, soliciting and nominating US scientists to help write, oversee, or edit sections of the assessments. The US Global Change Research Program, an interagency group that ran much of the process, also covered the cost of trips to a series of in-person meetings with international collaborators.

But the US government seems to have relinquished any involvement as the IPCC kicks off the process for the Seventh Assessment Report. In late February, the administration blocked federal scientists including NASA’s Katherine Calvin, who was previously selected as a cochair for one of the working groups, from attending an early planning meeting in China. (Calvin was the agency’s chief scientist at the time but was no longer serving in that role as of April, according to NASA’s website.)

The agency didn’t respond to inquiries from interested scientists after the UN panel issued a call for nominations in March, and it failed to present a list of nominations by the deadline in April, scientists involved in the process say. The Trump administration also canceled funding for the Global Change Research Program and, earlier this month, fired the last remaining staffers working at the Office of Global Change.

In response, 10 universities came together in March to form the US Academic Alliance for the IPCC, in partnership with the AGU, to request and evalute applications from US researchers. The universities—which include Yale, Princeton, and the University of California, San Diego—together nominated nearly 300 scientists, some of whom the IPCC has since officially selected. The AGU is now conducting a fundraising campaign to help pay for travel expenses.

Pamela McElwee, a professor at Rutgers who helped establish the academic coalition, says it’s crucial for US scientists to continue participating in the IPCC process.

“It is our flagship global assessment report on the state of climate, and it plays a really important role in influencing country policies,” she says. “To not be part of it makes it much more difficult for US scientists to be at the cutting edge and advance the things we need to do.”

The AGU also stepped in two months later, after the White House dismissed hundreds of researchers working on the National Climate Assessment, an annual report analyzing the rising dangers of climate change across the country. The AGU and American Meteorological Society together announced plans to publish a “special collection” to sustain the momentum of that effort.

“It’s incumbent on us to ensure our communities, our neighbors, our children are all protected and prepared for the mounting risks of climate change,” said Brandon Jones, president of the AGU, in an earlier statement.

The AGU declined to discuss the status of the project.

Stopgap solution

The sheer number of programs the White House is going after will require organizations to make hard choices about what they attempt to save and how they go about it. Moreover, relying entirely on nonprofits and companies to take over these federal tasks is not viable over the long term.

Given the costs of these federal programs, it could prove prohibitive to even keep a minimum viable version of some essential monitoring systems and research programs up and running. Dispersing across various organizations the responsibility of calculating the nation’s emissions sources and sinks also creates concerns about the scientific standards applied and the accessibility of that data, Cleetus says. Plus, moving away from the records that NOAA, NASA, and other agencies have collected for decades would break the continuity of that data, undermining the ability to detect or project trends.

More basically, publishing national emissions data should be a federal responsibility, particularly for the government of the world’s second-largest climate polluter, Cleetus adds. Failing to calculate and share its contributions to climate change sidesteps the nation’s global responsibilities and sends a terrible signal to other countries.

Poulter stresses that nonprofits and the private sector can do only so much, for so long, to keep these systems up and running.

“We don’t want to give the impression that this greenhouse-gas coalition, if it gets off the ground, is a long-term solution,” he says. “But we can’t afford to have gaps in these data sets, so somebody needs to step in and help sustain those measurements.”