This story is a partnership between MIT Technology Review, Lighthouse Reports, and Trouw, and was supported by the Pulitzer Center.

Two futures

Hans de Zwart, a gym teacher turned digital rights advocate, says that when he saw Amsterdam’s plan to have an algorithm evaluate every welfare applicant in the city for potential fraud, he nearly fell out of his chair.

It was February 2023, and de Zwart, who had served as the executive director of Bits of Freedom, the Netherlands’ leading digital rights NGO, had been working as an informal advisor to Amsterdam’s city government for nearly two years, reviewing and providing feedback on the AI systems it was developing.

According to the city’s documentation, this specific AI model—referred to as “Smart Check”—would consider submissions from potential welfare recipients and determine who might have submitted an incorrect application. More than any other project that had come across his desk, this one stood out immediately, he told us—and not in a good way. “There’s some very fundamental [and] unfixable problems,” he says, in using this algorithm “on real people.”

From his vantage point behind the sweeping arc of glass windows at Amsterdam’s city hall, Paul de Koning, a consultant to the city whose résumé includes stops at various agencies in the Dutch welfare state, had viewed the same system with pride. De Koning, who managed Smart Check’s pilot phase, was excited about what he saw as the project’s potential to improve efficiency and remove bias from Amsterdam’s social benefits system.

A team of fraud investigators and data scientists had spent years working on Smart Check, and de Koning believed that promising early results had vindicated their approach. The city had consulted experts, run bias tests, implemented technical safeguards, and solicited feedback from the people who’d be affected by the program—more or less following every recommendation in the ethical-AI playbook. “I got a good feeling,” he told us.

These opposing viewpoints epitomize a global debate about whether algorithms can ever be fair when tasked with making decisions that shape people’s lives. Over the past several years of efforts to use artificial intelligence in this way, examples of collateral damage have mounted: nonwhite job applicants weeded out of applicant pools in the US, families wrongly flagged for child abuse investigations in Japan, and low-income residents denied food subsidies in India.

Proponents of these assessment systems argue that they can create more efficient public services by doing more with less and, in the case of welfare systems specifically, reclaim money that is allegedly being lost from the public purse. In practice, many were poorly designed from the start. They sometimes factor in personal characteristics in a way that leads to discrimination, and sometimes they have been deployed without testing for bias or effectiveness. In general, they offer few options for people to challenge—or even understand—the automated actions directly affecting how they live.

The result has been more than a decade of scandals. In response, lawmakers, bureaucrats, and the private sector, from Amsterdam to New York, Seoul to Mexico City, have been trying to atone by creating algorithmic systems that integrate the principles of “responsible AI”—an approach that aims to guide AI development to benefit society while minimizing negative consequences.

Developing and deploying ethical AI is a top priority for the European Union, and the same was true for the US under former president Joe Biden, who released a blueprint for an AI Bill of Rights. That plan was rescinded by the Trump administration, which has removed considerations of equity and fairness, including in technology, at the national level. Nevertheless, systems influenced by these principles are still being tested by leaders in countries, states, provinces, and cities—in and out of the US—that have immense power to make decisions like whom to hire, when to investigate cases of potential child abuse, and which residents should receive services first.

Amsterdam indeed thought it was on the right track. City officials in the welfare department believed they could build technology that would prevent fraud while protecting citizens’ rights. They followed these emerging best practices and invested a vast amount of time and money in a project that eventually processed live welfare applications. But in their pilot, they found that the system they’d developed was still not fair and effective. Why?

Lighthouse Reports, MIT Technology Review, and the Dutch newspaper Trouw have gained unprecedented access to the system to try to find out. In response to a public records request, the city disclosed multiple versions of the Smart Check algorithm and data on how it evaluated real-world welfare applicants, offering us unique insight into whether, under the best possible conditions, algorithmic systems can deliver on their ambitious promises.

The answer to that question is far from simple. For de Koning, Smart Check represented technological progress toward a fairer and more transparent welfare system. For de Zwart, it represented a substantial risk to welfare recipients’ rights that no amount of technical tweaking could fix. As this algorithmic experiment unfolded over several years, it called into question the project’s central premise: that responsible AI can be more than a thought experiment or corporate selling point—and actually make algorithmic systems fair in the real world.

A chance at redemption

Understanding how Amsterdam found itself conducting a high-stakes endeavor with AI-driven fraud prevention requires going back four decades, to a national scandal around welfare investigations gone too far.

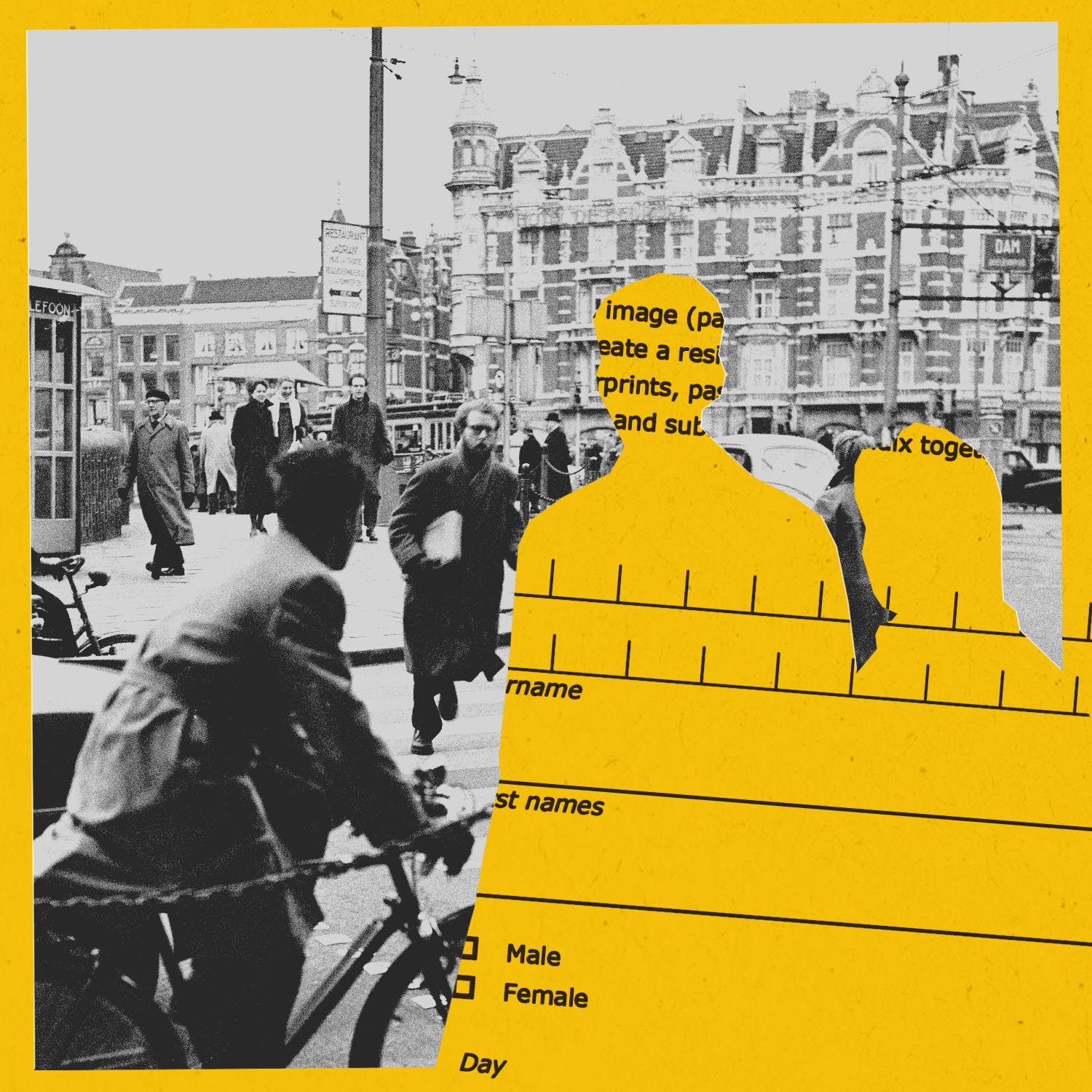

In 1984, Albine Grumböck, a divorced single mother of three, had been receiving welfare for several years when she learned that one of her neighbors, an employee at the social service’s local office, had been secretly surveilling her life. He documented visits from a male friend, who in theory could have been contributing unreported income to the family. On the basis of his observations, the welfare office cut Grumböck’s benefits. She fought the decision in court and won.

Despite her personal vindication, Dutch welfare policy has continued to empower welfare fraud investigators, sometimes referred to as “toothbrush counters,” to turn over people’s lives. This has helped create an atmosphere of suspicion that leads to problems for both sides, says Marc van Hoof, a lawyer who has helped Dutch welfare recipients navigate the system for decades: “The government doesn’t trust its people, and the people don’t trust the government.”

Harry Bodaar, a career civil servant, has observed the Netherlands’ welfare policy up close throughout much of this time—first as a social worker, then as a fraud investigator, and now as a welfare policy advisor for the city. The past 30 years have shown him that “the system is held together by rubber bands and staples,” he says. “And if you’re at the bottom of that system, you’re the first to fall through the cracks.”

Making the system work better for beneficiaries, he adds, was a large motivating factor when the city began designing Smart Check in 2019. “We wanted to do a fair check only on the people we [really] thought needed to be checked,” Bodaar says—in contrast to previous department policy, which until 2007 was to conduct home visits for every applicant.

But he also knew that the Netherlands had become something of a ground zero for problematic welfare AI deployments. The Dutch government’s attempts to modernize fraud detection through AI had backfired on a few notorious occasions.

In 2019, it was revealed that the national government had been using an algorithm to create risk profiles that it hoped would help spot fraud in the child care benefits system. The resulting scandal saw nearly 35,000 parents, most of whom were migrants or the children of migrants, wrongly accused of defrauding the assistance system over six years. It put families in debt, pushed some into poverty, and ultimately led the entire government to resign in 2021.

In Rotterdam, a 2023 investigation by Lighthouse Reports into a system for detecting welfare fraud found it to be biased against women, parents, non-native Dutch speakers, and other vulnerable groups, eventually forcing the city to suspend use of the system. Other cities, like Amsterdam and Leiden, used a system called the Fraud Scorecard, which was first deployed more than 20 years ago and included education, neighborhood, parenthood, and gender as crude risk factors to assess welfare applicants; that program was also discontinued.

The Netherlands is not alone. In the United States, there have been at least 11 cases in which state governments used algorithms to help disburse public benefits, according to the nonprofit Benefits Tech Advocacy Hub, often with troubling results. Michigan, for instance, falsely accused 40,000 people of committing unemployment fraud. And in France, campaigners are taking the national welfare authority to court over an algorithm they claim discriminates against low-income applicants and people with disabilities.

This string of scandals, as well as a growing awareness of how racial discrimination can be embedded in algorithmic systems, helped fuel the growing emphasis on responsible AI. It’s become “this umbrella term to say that we need to think about not just ethics, but also fairness,” says Jiahao Chen, an ethical-AI consultant who has provided auditing services to both private and local government entities. “I think we are seeing that realization that we need things like transparency and privacy, security and safety, and so on.”

The approach, based on a set of tools intended to rein in the harms caused by the proliferating technology, has given rise to a rapidly growing field built upon a familiar formula: white papers and frameworks from think tanks and international bodies, and a lucrative consulting industry made up of traditional power players like the Big 5 consultancies, as well as a host of startups and nonprofits. In 2019, for instance, the Organisation for Economic Co-operation and Development, a global economic policy body, published its Principles on Artificial Intelligence as a guide for the development of “trustworthy AI.” Those principles include building explainable systems, consulting public stakeholders, and conducting audits.

But the legacy left by decades of algorithmic misconduct has proved hard to shake off, and there is little agreement on where to draw the line between what is fair and what is not. While the Netherlands works to institute reforms shaped by responsible AI at the national level, Algorithm Audit, a Dutch NGO that has provided ethical-AI auditing services to government ministries, has concluded that the technology should be used to profile welfare recipients only under strictly defined conditions, and only if systems avoid taking into account protected characteristics like gender. Meanwhile, Amnesty International, digital rights advocates like de Zwart, and some welfare recipients themselves argue that when it comes to making decisions about people’s lives, as in the case of social services, the public sector should not be using AI at all.

Amsterdam hoped it had found the right balance. “We’ve learned from the things that happened before us,” says Bodaar, the policy advisor, of the past scandals. And this time around, the city wanted to build a system that would “show the people in Amsterdam we do good and we do fair.”

Finding a better way

Every time an Amsterdam resident applies for benefits, a caseworker reviews the application for irregularities. If an application looks suspicious, it can be sent to the city’s investigations department, which could lead to a rejection, a request to correct paperwork errors, or a recommendation that the candidate receive less money. Investigations can also happen later, once benefits have been disbursed; the outcome may force recipients to pay back funds, and even push some into debt.

Officials have broad authority over both applicants and existing welfare recipients. They can request bank records, summon beneficiaries to city hall, and in some cases make unannounced visits to a person’s home. As investigations are carried out—or paperwork errors fixed—much-needed payments may be delayed. And often—in more than half of the investigations of applications, according to figures provided by Bodaar—the city finds no evidence of wrongdoing. In those cases, this can mean that the city has “wrongly harassed people,” Bodaar says.

The Smart Check system was designed to avoid these scenarios by eventually replacing the initial caseworker who flags which cases to send to the investigations department. The algorithm would screen the applications to identify those most likely to involve major errors, based on certain personal characteristics, and redirect those cases for further scrutiny by the enforcement team.

If all went well, the city wrote in its internal documentation, the system would improve on the performance of its human caseworkers, flagging fewer welfare applicants for investigation while identifying a greater proportion of cases with errors. In one document, the city projected that the model would prevent up to 125 individual Amsterdammers from facing debt collection and save €2.4 million annually.

Smart Check was an exciting prospect for city officials like de Koning, who would manage the project when it was deployed. He was optimistic, since the city was taking a scientific approach, he says; it would “see if it was going to work” instead of taking the attitude that “this must work, and no matter what, we will continue this.”

It was the kind of bold idea that attracted optimistic techies like Loek Berkers, a data scientist who worked on Smart Check in only his second job out of college. Speaking in a cafe tucked behind Amsterdam’s city hall, Berkers remembers being impressed at his first contact with the system: “Especially for a project within the municipality,” he says, it “was very much a sort of innovative project that was trying something new.”

Smart Check made use of an algorithm called an “explainable boosting machine,” which allows people to more easily understand how AI models produce their predictions. Most other machine-learning models are regarded as “black boxes” running abstract mathematical processes that are hard to understand for both the employees tasked with using them and the people affected by the results.

The Smart Check model would consider 15 characteristics—including whether applicants had previously applied for or received benefits, the sum of their assets, and the number of addresses they had on file—to assign a risk score to each person. It purposefully avoided demographic factors, such as gender, nationality, or age, that were thought to lead to bias. It also tried to avoid “proxy” factors—like postal codes—that may not look sensitive on the surface but can become so if, for example, a postal code is statistically associated with a particular ethnic group.
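To make the idea of an explainable risk score concrete, here is a minimal sketch in Python. It is not the city’s model: the three features, their bins, the intercept, and every point value are hypothetical, chosen only to illustrate the additive structure that explainable boosting machines rely on, in which each characteristic contributes its own readable amount to the final score. A real model of this type learns those contributions from training data and may also include pairwise interaction terms.

```python
import math

# Hypothetical per-feature lookup tables ("shape functions").
# Each maps a binned feature value to a contribution in log-odds.
# None of these features, bins, or values come from Smart Check.
SHAPE_FUNCTIONS = {
    "previous_applications": {0: -0.4, 1: 0.1, 2: 0.5},
    "addresses_on_file": {1: -0.2, 2: 0.3, 3: 0.6},
    "assets_band": {"low": 0.2, "medium": 0.0, "high": -0.3},
}
INTERCEPT = -1.0  # hypothetical baseline log-odds


def explain(applicant):
    """Return the contribution of each feature for one applicant."""
    return {name: table[applicant[name]] for name, table in SHAPE_FUNCTIONS.items()}


def risk_score(applicant):
    """Sum the contributions and squash the total into a score between 0 and 1."""
    total = INTERCEPT + sum(explain(applicant).values())
    return 1.0 / (1.0 + math.exp(-total))


# A hypothetical applicant, described only by the three made-up features.
applicant = {"previous_applications": 2, "addresses_on_file": 3, "assets_band": "low"}
contributions = explain(applicant)
score = risk_score(applicant)
print(contributions)
print(round(score, 3))
```

Because the score is a plain sum, anyone reviewing a flagged application can see which characteristic pushed the score up and by how much, which is the property that sets this family of models apart from a black box.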

In an unusual step, the city has disclosed this information and shared multiple versions of the Smart Check model with us, effectively inviting outside scrutiny into the system’s design and function. With this data, we were able to build a hypothetical welfare recipient to get insight into how an individual applicant would be evaluated by Smart Check.

This model was trained on a data set encompassing 3,400 previous investigations of welfare recipients. The idea was that it would use the outcomes from these investigations, carried out by city employees, to figure out which factors in the initial applications were correlated with potential fraud.

But using past investigations introduces potential problems from the start, says Sennay Ghebreab, scientific director of the Civic AI Lab (CAIL) at the University of Amsterdam, one of the external groups that the city says it consulted with. The problem of using historical data to build the models, he says, is that “we will end up [with] historic biases.” For example, if caseworkers historically made higher rates of mistakes with a specific ethnic group, the model could wrongly learn to predict that this ethnic group commits fraud at higher rates.

The city decided it would rigorously audit its system to try to catch such biases against vulnerable groups. But how bias should be defined, and hence what it actually means for an algorithm to be fair, is a matter of fierce debate. Over the past decade, academics have proposed dozens of competing mathematical notions of fairness, some of which are incompatible. This means that a system designed to be “fair” according to one such standard will inevitably violate others.

Amsterdam officials adopted a definition of fairness that focused on equally distributing the burden of wrongful investigations across different demographic groups.

In other words, they hoped this approach would ensure that welfare applicants of different backgrounds would be incorrectly investigated at similar rates.
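One common way to express that definition in numbers is to compare, group by group, the share of error-free applicants who were flagged anyway. The sketch below does this in Python under stated assumptions: the groups “A” and “B,” the records, and the function name are all hypothetical, and it assumes the true outcome is known for every applicant, which is rarely the case in practice.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Share of error-free applicants in each group who were flagged anyway."""
    flagged = defaultdict(int)
    error_free = defaultdict(int)
    for group, was_flagged, had_error in records:
        if not had_error:
            error_free[group] += 1
            if was_flagged:
                flagged[group] += 1
    return {group: flagged[group] / error_free[group] for group in error_free}


# Hypothetical records: (group, flagged for investigation, error actually found).
records = (
    [("A", True, False)] * 10 + [("A", False, False)] * 90
    + [("B", True, False)] * 20 + [("B", False, False)] * 80
    + [("A", True, True)] * 5 + [("B", True, True)] * 5
)

rates = false_positive_rates(records)
ratio = rates["B"] / rates["A"]  # how much more often group B is wrongly flagged
print(rates, ratio)
```

Under this definition, a system counts as fair when the ratio is close to 1. In the made-up data above, group B is wrongly flagged twice as often as group A, so the check would fail.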

Mixed feedback

As it built Smart Check, Amsterdam consulted various public bodies about the model, including the city’s internal data protection officer and the Amsterdam Personal Data Commission. It also consulted private organizations, including the consulting firm Deloitte. Each gave the project its approval.

But one key group was not on board: the Participation Council, a 15-member advisory committee composed of benefits recipients, advocates, and other nongovernmental stakeholders who represent the interests of the people the system was designed to help—and to scrutinize. The committee, like de Zwart, the digital rights advocate, was deeply troubled by what the system could mean for individuals already in precarious positions.

Anke van der Vliet, now in her 70s, is one longtime member of the council. After she sinks slowly from her walker into a seat at a restaurant in Amsterdam’s Zuid neighborhood, where she lives, she retrieves her reading glasses from their case. “We distrusted it from the start,” she says, pulling out a stack of papers she’s saved on Smart Check. “Everyone was against it.”

For decades, she has been a steadfast advocate for the city’s welfare recipients—a group that, by the end of 2024, numbered around 35,000. In the late 1970s, she helped found Women on Welfare, a group dedicated to exposing the unique challenges faced by women within the welfare system.

City employees first presented their plan to the Participation Council in the fall of 2021. Members like van der Vliet were deeply skeptical. “We wanted to know, is it to my advantage or disadvantage?” she says.

Two more meetings could not convince them. Their feedback did lead to key changes—including reducing the number of variables the city had initially considered to calculate an applicant’s score and excluding variables that could introduce bias, such as age, from the system. But the Participation Council stopped engaging with the city’s development efforts altogether after six months. “The Council is of the opinion that such an experiment affects the fundamental rights of citizens and should be discontinued,” the group wrote in March 2022. Since only around 3% of welfare benefit applications are fraudulent, the letter continued, using the algorithm was “disproportionate.”

De Koning, the project manager, is skeptical that the system would ever have received the approval of van der Vliet and her colleagues. “I think it was never going to work that the whole Participation Council was going to stand behind the Smart Check idea,” he says. “There was too much emotion in that group about the whole process of the social benefit system.” He adds, “They were very scared there was going to be another scandal.”

But for advocates working with welfare beneficiaries, and for some of the beneficiaries themselves, the worry wasn’t a scandal but the prospect of real harm. The technology could not only make damaging errors but leave them even more difficult to correct—allowing welfare officers to “hide themselves behind digital walls,” says Henk Kroon, an advocate who assists welfare beneficiaries at the Amsterdam Welfare Association, a union established in the 1970s. Such a system could make work “easy for [officials],” he says. “But for the common citizens, it’s very often the problem.”

Time to test

Despite the Participation Council’s ultimate objections, the city decided to push forward and put the working Smart Check model to the test.

The first results were not what they’d hoped for. When the city’s advanced analytics team ran the initial model in May 2022, they found that the algorithm showed heavy bias against migrants and men, which we were able to independently verify.

As the city told us and as our analysis confirmed, the initial model was more likely to wrongly flag non-Dutch applicants. And it was nearly twice as likely to wrongly flag an applicant with a non-Western nationality as one with a Western nationality. The model was also 14% more likely to wrongly flag men for investigation.

In the process of training the model, the city also collected data on whom its human caseworkers had flagged for investigation and which groups the wrongly flagged people were more likely to belong to. In essence, they ran a bias test on their own analog system, an important way to benchmark that is rarely done before deploying such systems.

What they found in the process led by caseworkers was a strikingly different pattern. Whereas the Smart Check model was more likely to wrongly flag non-Dutch nationals and men, human caseworkers were more likely to wrongly flag Dutch nationals and women.

The team behind Smart Check knew that if they couldn’t correct for bias, the project would be canceled. So they turned to a technique from academic research, known as training-data reweighting. In practice, that meant applicants with a non-Western nationality who were deemed to have made meaningful errors in their applications were given less weight in the data, while those with a Western nationality were given more.
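The city’s documentation, as described here, does not spell out the exact formula, so the following Python sketch assumes one widely cited scheme from the academic literature (the “reweighing” method of Kamiran and Calders). Each combination of group and outcome gets a weight equal to how often it would appear if group and outcome were unrelated, divided by how often it actually appears. The group labels and counts are hypothetical.

```python
from collections import Counter


def reweighing_weights(samples):
    """Weight each (group, label) cell by expected / observed frequency.

    With these weights, group and label are statistically independent
    in the weighted training data.
    """
    n = len(samples)
    group_counts = Counter(group for group, _ in samples)
    label_counts = Counter(label for _, label in samples)
    cell_counts = Counter(samples)
    return {
        (group, label): (group_counts[group] * label_counts[label]) / (n * count)
        for (group, label), count in cell_counts.items()
    }


# Hypothetical training data: (nationality group, 1 if an error was found else 0).
samples = (
    [("non_western", 1)] * 30 + [("non_western", 0)] * 20
    + [("western", 1)] * 20 + [("western", 0)] * 30
)
weights = reweighing_weights(samples)
for cell, weight in sorted(weights.items()):
    print(cell, round(weight, 3))
```

In this made-up data set, non-Western applicants with errors are overrepresented, so they receive a weight below 1, while Western applicants with errors receive a weight above 1. That matches the direction of the adjustment described above, though the actual weights the city used are not reproduced here.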

Eventually, this appeared to solve their problem: As Lighthouse’s analysis confirms, once the model was reweighted, Dutch and non-Dutch nationals were equally likely to be wrongly flagged.

De Koning, who joined the Smart Check team after the data was reweighted, said the results were a positive sign: “Because it was fair … we could continue the process.”

The model also appeared to be better than caseworkers at identifying applications worthy of extra scrutiny, with internal testing showing a 20% improvement in accuracy.

Buoyed by these results, in the spring of 2023, the city was almost ready to go public. It submitted Smart Check to the Algorithm Register, a government-run transparency initiative meant to keep citizens informed about machine-learning algorithms either in development or already in use by the government.

For de Koning, the city’s extensive assessments and consultations were encouraging, particularly since they also revealed the biases in the analog system. But for de Zwart, those same processes represented a profound misunderstanding: that fairness could be engineered.

In a letter to city officials, de Zwart criticized the premise of the project and, more specifically, outlined the unintended consequences that could result from reweighting the data. It might reduce bias against people with a migration background overall, but it wouldn’t guarantee fairness across intersecting identities; the model could still discriminate against women with a migration background, for instance. And even if that issue were addressed, he argued, the model might still treat migrant women in certain postal codes unfairly, and so on. And such biases would be hard to detect.
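De Zwart’s point about intersecting identities can be illustrated with a small worked example in Python. The counts below are entirely hypothetical and were constructed so that the rate of wrongful flags looks identical when applicants are split by migration background alone or by gender alone, yet differs by a factor of two once the two attributes are combined.

```python
from collections import defaultdict

# Hypothetical counts of error-free applicants per subgroup:
# (migration background, gender) -> (wrongly flagged, total).
counts = {
    ("migrant", "woman"): (20, 100),
    ("migrant", "man"): (10, 100),
    ("non_migrant", "woman"): (10, 100),
    ("non_migrant", "man"): (20, 100),
}


def marginal_rates(counts, axis):
    """Wrongful-flag rate along one attribute (0 = background, 1 = gender)."""
    flagged = defaultdict(int)
    total = defaultdict(int)
    for key, (n_flagged, n_total) in counts.items():
        flagged[key[axis]] += n_flagged
        total[key[axis]] += n_total
    return {value: flagged[value] / total[value] for value in total}


by_background = marginal_rates(counts, 0)
by_gender = marginal_rates(counts, 1)
by_subgroup = {key: n_flagged / n_total for key, (n_flagged, n_total) in counts.items()}

print(by_background)  # equal rates for both backgrounds
print(by_gender)      # equal rates for both genders
print(by_subgroup)    # unequal rates once the attributes are combined
```

A bias test that looks only at one attribute at a time would pass this data, even though women with a migration background are wrongly flagged at twice the rate of men with a migration background. Each added attribute multiplies the number of subgroups to check and shrinks the number of people in each, which is one reason such biases are hard to detect.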

“The city has used all the tools in the responsible-AI tool kit,” de Zwart told us. “They have a bias test, a human rights assessment; [they have] taken into account automation bias—in short, everything that the responsible-AI world recommends. Nevertheless, the municipality has continued with something that is fundamentally a bad idea.”

Ultimately, he told us, it’s a question of whether it’s legitimate to use data on past behavior to judge “future behavior of your citizens that fundamentally you cannot predict.”

Officials still pressed on—and set March 2023 as the date for the pilot to begin. Members of Amsterdam’s city council were given little warning. In fact, they were only informed the same month—to the disappointment of Elisabeth IJmker, a first-term council member from the Green Party, who balanced her role in municipal government with research on religion and values at Amsterdam’s Vrije University.

“Reading the words ‘algorithm’ and ‘fraud prevention’ in one sentence, I think that’s worth a discussion,” she told us. But by the time that she learned about the project, the city had already been working on it for years. As far as she was concerned, it was clear that the city council was “being informed” rather than being asked to vote on the system.

The city hoped the pilot could prove skeptics like her wrong.

Upping the stakes

The formal launch of Smart Check started with a limited set of actual welfare applicants. The city would run their paperwork through the algorithm, which would assign a risk score to determine whether the application should be flagged for investigation. At the same time, a human would review the same application.

Smart Check’s performance would be monitored on two key criteria. First, could it consider applicants without bias? And second, was Smart Check actually smart? In other words, could the complex math that made up the algorithm actually detect welfare fraud better and more fairly than human caseworkers?

It didn’t take long to become clear that the model fell short on both fronts.

While it had been designed to reduce the number of welfare applicants flagged for investigation, it was flagging more. And it proved no better than a human caseworker at identifying those that actually warranted extra scrutiny.

What’s more, despite the lengths the city had gone to in order to recalibrate the system, bias reemerged in the live pilot. But this time, instead of wrongly flagging non-Dutch people and men as in the initial tests, the model was now more likely to wrongly flag applicants with Dutch nationality and women.

Lighthouse’s own analysis also revealed other forms of bias unmentioned in the city’s documentation, including a greater likelihood that welfare applicants with children would be wrongly flagged for investigation. (Amsterdam officials did not respond to a request for comment about this finding, or to other follow-up questions about general critiques of the city’s welfare system.)

The city was stuck. Nearly 1,600 welfare applications had been run through the model during the pilot period. But the results meant that members of the team were uncomfortable continuing to test—especially when there could be genuine consequences. In short, de Koning says, the city could not “definitely” say that “this is not discriminating.”

He, and others working on the project, did not believe this was necessarily a reason to scrap Smart Check. They wanted more time—say, “a period of 12 months,” according to de Koning—to continue testing and refining the model.

They knew, however, that would be a hard sell.

In late November 2023, Rutger Groot Wassink—the city official in charge of social affairs—took his seat in the Amsterdam council chamber. He glanced at the tablet in front of him and then addressed the room: “I have decided to stop the pilot.”

The announcement brought an end to the sweeping multiyear experiment. In another council meeting a few months later, he explained why the project was terminated: “I would have found it very difficult to justify, if we were to come up with a pilot … that showed the algorithm contained enormous bias,” he said. “There would have been parties who would have rightly criticized me about that.”

Viewed in a certain light, the city had tested out an innovative approach to identifying fraud in a way designed to minimize risks, found that it had not lived up to its promise, and scrapped it before the consequences for real people had a chance to multiply.

But for IJmker and some of her city council colleagues focused on social welfare, there was also the question of opportunity cost. She recalls speaking with a colleague about how else the city could’ve spent that money—like to “hire some more people to do personal contact with the different people that we’re trying to reach.”

City council members were never told exactly how much the effort cost, but in response to questions from MIT Technology Review, Lighthouse, and Trouw on this topic, the city estimated that it had spent some €500,000, plus €35,000 for the contract with Deloitte—but cautioned that the total amount put into the project was only an estimate, given that Smart Check was developed in house by various existing teams and staff members.

For her part, van der Vliet, the Participation Council member, was not surprised by the poor result. The possibility of a discriminatory computer system was “precisely one of the reasons” her group hadn’t wanted the pilot, she says. And as for the discrimination in the existing system? “Yes,” she says, bluntly. “But we have always said that [it was discriminatory].”

She and other advocates wished that the city had focused more on what they saw as the real problems facing welfare recipients: increases in the cost of living that have not, typically, been followed by increases in benefits; the need to document every change that could potentially affect their benefits eligibility; and the distrust with which they feel they are treated by the municipality.

Can this kind of algorithm ever be done right?

When we spoke to Bodaar in March, a year and a half after the end of the pilot, he was candid in his reflections. “Perhaps it was unfortunate to immediately use one of the most complicated systems,” he said, “and perhaps it is also simply the case that it is not yet … the time to use artificial intelligence for this goal.”

“Niente, zero, nada. We’re not going to do that anymore,” he said about using AI to evaluate welfare applicants. “But we’re still thinking about this: What exactly have we learned?”

That is a question that IJmker thinks about too. In city council meetings she has brought up Smart Check as an example of what not to do. While she was glad that city employees had been thoughtful in their “many protocols,” she worried that the process obscured some of the larger questions of “philosophical” and “political values” that the city had yet to weigh in on as a matter of policy.

Questions such as “How do we actually look at profiling?” or “What do we think is justified?”—or even “What is bias?”

These questions are “where politics comes in, or ethics,” she says, “and that’s something you cannot put into a checkbox.”

But now that the pilot has stopped, she worries that her fellow city officials might be too eager to move on. “I think a lot of people were just like, ‘Okay, well, we did this. We’re done, bye, end of story,’” she says. It feels like “a waste,” she adds, “because people worked on this for years.”

In abandoning the model, the city has returned to an analog process that its own analysis concluded was biased against women and Dutch nationals—a fact not lost on Berkers, the data scientist, who no longer works for the city. By shutting down the pilot, he says, the city sidestepped the uncomfortable truth—that many of the concerns de Zwart raised about the complex, layered biases within the Smart Check model also apply to the caseworker-led process.

“That’s the thing that I find a bit difficult about the decision,” Berkers says. “It’s a bit like no decision. It is a decision to go back to the analog process, which in itself has characteristics like bias.”

Chen, the ethical-AI consultant, largely agrees. “Why do we hold AI systems to a higher standard than human agents?” he asks. When it comes to the caseworkers, he says, “there was no attempt to correct [the bias] systematically.” Amsterdam has promised to write a report on human biases in the welfare process, but the date has been pushed back several times.

“In reality, what ethics comes down to in practice is: nothing’s perfect,” he says. “There’s a high-level thing of Do not discriminate, which I think we can all agree on, but this example highlights some of the complexities of how you translate that [principle].” Ultimately, Chen believes that finding any solution will require trial and error, which by definition usually involves mistakes: “You have to pay that cost.”

But it may be time to more fundamentally reconsider how fairness should be defined—and by whom. Beyond the mathematical definitions, some researchers argue that the people most affected by the programs in question should have a greater say. “Such systems only work when people buy into them,” explains Elissa Redmiles, an assistant professor of computer science at Georgetown University who has studied algorithmic fairness.

No matter what the process looks like, these are questions that every government will have to deal with—and urgently—in a future increasingly defined by AI.

And, as de Zwart argues, if broader questions are not tackled, even well-intentioned officials deploying systems like Smart Check in cities like Amsterdam will be condemned to learn—or ignore—the same lessons over and over.

“We are being seduced by technological solutions for the wrong problems,” he says. “Should we really want this? Why doesn’t the municipality build an algorithm that searches for people who do not apply for social assistance but are entitled to it?”

Eileen Guo is the senior reporter for features and investigations at MIT Technology Review. Gabriel Geiger is an investigative reporter at Lighthouse Reports. Justin-Casimir Braun is a data reporter at Lighthouse Reports.

Additional reporting by Jeroen van Raalte for Trouw, Melissa Heikkilä for MIT Technology Review, and Tahmeed Shafiq for Lighthouse Reports. Fact checked by Alice Milliken.

You can read a detailed explanation of our technical methodology here. You can read Trouw’s companion story, in Dutch, here.