The Monday.com work platform has grown steadily over the past decade in pursuit of its goal: helping teams at organizations large and small become more efficient and productive.

According to co-founder Roy Mann, AI has been a part of the company for much of its history. The initial use cases supported its own performance marketing. (Who among us has not seen a Monday advertisement somewhere over the last 10 years?) A large part of that effort has benefited from AI and machine learning (ML).

With the rise of generative AI over the last three years, particularly since the debut of ChatGPT, Monday, like practically every other enterprise on the planet, began to evaluate and integrate the technology.

The initial deployment of gen AI at Monday didn't quite generate the return on investment users wanted, however. That realization prompted a rethink and a pivot, as the company looked to give its users AI-powered tools that actually improve enterprise workflows. The pivot has now taken shape in the company's "AI blocks" technology and in a preview of its agentic AI technology, which it calls "digital workforce."

Monday’s AI journey, for the most part, is all about realizing the company’s founding vision.

“We wanted to do two things, one is give people the power we had as developers,” Mann told VentureBeat in an exclusive interview. “So they can build whatever they want, and they feel the power that we feel, and the other end is to build something they really love.”

Every vendor, and enterprise software vendors in particular, is always trying to improve its product and help its users. Monday's AI adoption fits squarely into that pattern.

The company’s public AI strategy has evolved through several distinct phases:

- AI assistant: Initial platform-wide integration;

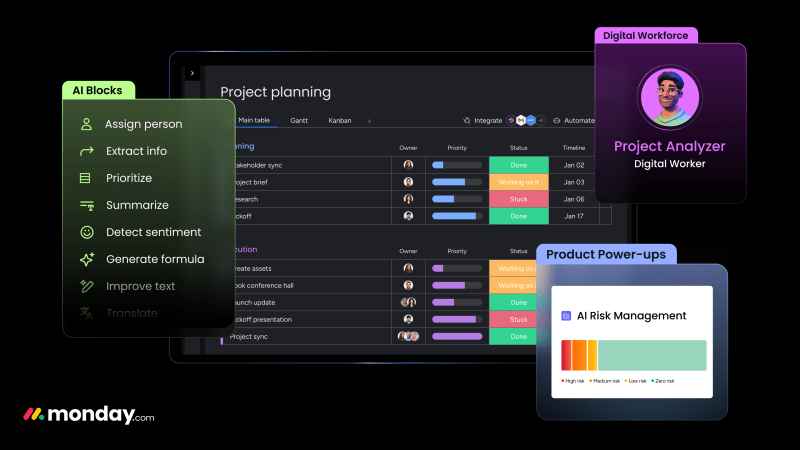

- AI blocks: Modular AI capabilities for workflow customization;

- Digital workforce: Agentic AI.

Like many other vendors, Monday made its first public foray into gen AI with an assistant. The basic idea behind any AI assistant is that it provides a natural language interface for queries. Mann explained that the Monday AI assistant was initially part of the company's formula builder, giving non-technical users the confidence and ability to build things they couldn't before. While the service is useful, organizations need and want to do much more.

Or Fridman, AI product group lead at Monday, explained that the main lesson learned from deploying the AI assistant is that customers want AI to be integrated into their workflows. That’s what led the company to develop AI blocks.

Building the foundation for enterprise workflows with AI blocks

Monday came to recognize the limitations of the AI assistant approach, as well as what users actually wanted.

Simply put, AI functionality needs to be in the right context for users — directly in a column, component or service automation.

AI blocks are pre-built AI functions that Monday has made accessible and integrated directly into its workflow and automation tools. For example, in project management, the AI can provide risk mapping and predictability analysis, helping users better manage their projects. This allows them to focus on higher-level tasks and decision-making, while the AI handles the more repetitive or data-intensive work.

This approach has particular significance for the platform’s user base, 70% of which consists of non-technical companies. The modular nature allows businesses to implement AI capabilities without requiring deep technical expertise or major workflow disruptions.

Monday is taking a model-agnostic approach to integrating AI

An early approach taken by many vendors on their AI journeys was to rely on a single vendor's large language model (LLM). From there, they could build a wrapper around it or fine-tune it for a specific use case.

Mann explained that Monday is taking a thoroughly model-agnostic approach. In his view, models are increasingly becoming a commodity, so the company builds products and solutions on top of available models rather than creating its own proprietary ones.

Looking a bit deeper, Assaf Elovic, Monday's AI director, noted that the company uses a variety of AI models. These include OpenAI models such as GPT-4o via Azure, as well as others through Amazon Bedrock, an arrangement intended to ensure flexibility and strong performance. Elovic added that the company's model usage follows the same data residency standards as all Monday features, including multi-region support and encryption, to ensure the privacy and security of customer data.

Agentic AI and the path to the digital workforce

The latest step in Monday’s AI journey is in the same direction as the rest of the industry — the adoption of agentic AI.

The promise of agentic AI is more autonomous operation that can carry out an entire workflow. Some organizations build agentic AI on top of frameworks such as LangChain or CrewAI, but that's not the direction Monday is taking with its digital workforce platform.

Elovic explained that Monday’s agentic flow is deeply connected to its own AI blocks infrastructure. The same tools that power its agents are built on AI blocks like sentiment analysis, information extraction and summarization.

Mann noted that digital workforce isn’t so much about using a specific agentic AI tool or framework, but about creating better automation and flow across the integrated components on the Monday platform. Digital workforce agents are tightly integrated into the platform and workflows. This allows the agents to have contextual awareness of the user’s data, processes and existing setups within Monday.

The first digital workforce agent is set to become available in March. Mann said it will be called the monday "expert" and is designed to build solutions for specific users. Users describe their problems and needs to the agent, and the AI provides relevant workflows, boards and automations to address those challenges.

AI specialization and integration provides differentiation in a commoditized market

There is no shortage of competition across the markets that Monday serves.

As a workflow platform, it crosses multiple industry verticals including customer relationship management (CRM) and project management. There are big players across these industries including Salesforce and Atlassian, which have both deeply invested in AI.

Mann said the deep integration of AI blocks across various Monday tools differentiates the company from its rivals. At a more basic level, he said, it's really all about meeting users where they are and embedding useful AI capabilities in the context of their workflows.

Monday’s evolution suggests a model for enterprise software development where AI capabilities are deeply integrated yet highly customizable. This approach addresses a crucial challenge in enterprise AI adoption: The need for solutions that are both powerful and accessible to non-technical users.

The company’s strategy also points to a future where AI implementation focuses on empowerment rather than replacement.

“If a technology makes large companies more efficient, what does it do for SMBs?” said Mann, highlighting how AI democratization could level the playing field between large and small enterprises.
