OpenAI has released a new proprietary AI model in time to counter the rapid rise of open source rival DeepSeek R1 — but will it be enough to blunt the latter’s success?

Today, after several days of rumors and growing anticipation among AI users on social media, OpenAI is debuting o3-mini, the second model in its new family of “reasoners”: AI models that take slightly more time to “think,” analyze their own processes and reflect on their own “chains of thought” before responding to user queries and inputs.

The result is a model that can perform at the level of a PhD student, or even a PhD holder, when answering hard questions in math, science, engineering and many other fields.

The o3-mini model is now available in ChatGPT, including on the free tier, and through OpenAI’s application programming interface (API). It is also cheaper and faster than OpenAI’s previous high-end model, o1, and its faster, smaller sibling, o1-mini, while matching or beating them on many benchmarks.

While o3-mini will inevitably be compared to DeepSeek R1, and its release date seen as a reaction, it’s important to remember that OpenAI announced o3 and o3-mini in December 2024, well before DeepSeek R1’s January release, and that OpenAI CEO Sam Altman had previously stated on X that, in response to feedback from developers and researchers, o3-mini would come to ChatGPT and the OpenAI API at the same time.

Unlike DeepSeek R1, o3-mini will not be released as an open source model, meaning it cannot be downloaded for offline use or customized to the same extent, which may limit its appeal relative to DeepSeek R1 for some applications.

OpenAI did not provide any further details about the (presumably) larger o3 model announced back in December alongside o3-mini. At the time, OpenAI’s opt-in dropdown form for testing o3 stated that it would undergo a “delay of multiple weeks” before third parties could test it.

Performance and Features

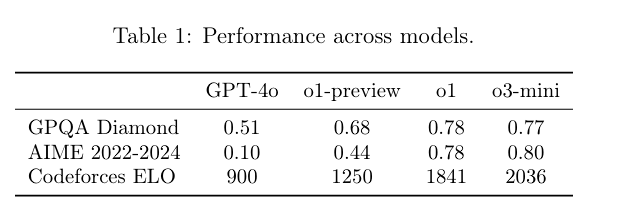

Similar to o1, OpenAI o3-mini is optimized for reasoning in math, coding, and science.

With medium reasoning effort, o3-mini performs comparably to OpenAI o1, while offering the following advantages:

- 24% faster response times than o1-mini. (OpenAI didn’t provide a specific number here, but according to tests by third-party evaluation group Artificial Analysis, o1-mini takes 12.8 seconds to receive and output 100 tokens. Reading “24% faster” as 1.24 times the speed, o3-mini’s response time would drop to about 10.32 seconds, since 12.8 / 1.24 ≈ 10.32.)

- Improved accuracy, with external testers preferring o3-mini’s responses over o1-mini’s 56% of the time.

- 39% fewer major errors than o1-mini on complex real-world questions.

- Better performance in coding and STEM tasks, particularly when using high reasoning effort.

- Three reasoning effort levels (low, medium, and high), allowing users and developers to balance accuracy and speed.
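The 10.32-second estimate in the first bullet depends on reading “24% faster” as 1.24 times the speed. A quick check, assuming Artificial Analysis’s 12.8-second o1-mini baseline, shows how the two possible readings of the claim differ; the function names are illustrative only:

```python
# Baseline reported by Artificial Analysis: o1-mini needs ~12.8 s
# to receive and output 100 tokens.
O1_MINI_SECONDS = 12.8
SPEEDUP = 0.24  # OpenAI's "24% faster" claim for o3-mini


def time_if_speed_rises(baseline: float, speedup: float) -> float:
    """Reading 1: o3-mini runs at 1.24x the speed, so time = baseline / 1.24."""
    return baseline / (1 + speedup)


def time_if_latency_drops(baseline: float, speedup: float) -> float:
    """Reading 2: o3-mini takes 24% less time, so time = baseline * 0.76."""
    return baseline * (1 - speedup)


print(f"{time_if_speed_rises(O1_MINI_SECONDS, SPEEDUP):.2f} s")    # 10.32 s
print(f"{time_if_latency_drops(O1_MINI_SECONDS, SPEEDUP):.2f} s")  # 9.73 s
```

Either way, the estimate is derived from a third-party baseline rather than a number OpenAI published.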

It also posts impressive benchmark results, in some cases even outpacing o1, according to the o3-mini System Card that OpenAI released online (published ahead of the official availability announcement).

o3-mini’s context window, the combined number of input and output tokens it can handle in a single interaction, is 200,000 tokens, with a maximum of 100,000 in each output. That matches the full o1 model and exceeds DeepSeek R1’s context window of roughly 128,000 to 130,000 tokens, but it is far below the context window of up to 1 million tokens offered by Google’s new Gemini 2.0 Flash Thinking.
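A minimal sketch of how the two limits interact, assuming they apply exactly as stated: a long prompt eats into the shared 200,000-token window before the 100,000-token output cap ever binds. The helper below is hypothetical, not part of any OpenAI SDK, and it works in tokens, not words:

```python
# Limits as reported for o3-mini: 200,000 tokens per interaction
# (input and output combined), of which at most 100,000 may be output.
CONTEXT_WINDOW = 200_000
MAX_OUTPUT_TOKENS = 100_000


def max_output_budget(input_tokens: int) -> int:
    """Return the largest output, in tokens, left for a prompt of `input_tokens` tokens."""
    if input_tokens < 0:
        raise ValueError("input_tokens must be non-negative")
    remaining = CONTEXT_WINDOW - input_tokens
    if remaining <= 0:
        raise ValueError("the prompt alone fills the 200,000-token context window")
    # Whatever room is left in the shared window, output is still capped at 100,000.
    return min(remaining, MAX_OUTPUT_TOKENS)


# A 50,000-token prompt still gets the full 100,000-token output cap,
# but a 150,000-token prompt leaves only 50,000 tokens for the answer.
print(max_output_budget(50_000))   # 100000
print(max_output_budget(150_000))  # 50000
```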

While o3-mini focuses on reasoning capabilities, it doesn’t have vision capabilities yet. Developers and users looking to upload images and files should keep using o1 in the meantime.

The competition heats up

The arrival of o3-mini marks the first time OpenAI is making a reasoning model available to free ChatGPT users. The prior o1 model family was only available to paying subscribers of the ChatGPT Plus, Pro and other plans, as well as via OpenAI’s paid application programming interface.

As it did with large language model (LLM)-powered chatbots via the launch of ChatGPT in November 2022, OpenAI essentially created the entire category of reasoning models back in September 2024 when it first unveiled o1, a new class of models with a new training regime and architecture.

But OpenAI, in keeping with its recent history, did not make o1 open source, contrary to its name and original founding mission. Instead, it kept the model’s code proprietary.

And over the last two weeks, o1 has been overshadowed by Chinese AI startup DeepSeek’s R1, a rival, highly efficient and largely open-source reasoning model that anyone around the world can take, retrain and customize, or use for free on DeepSeek’s website and mobile app. R1 was also reportedly trained at a fraction of the cost of o1 and other LLMs from top labs.

DeepSeek R1’s permissive MIT license, free consumer app and website, and DeepSeek’s decision to make R1 freely available to take and modify have fueled a veritable explosion of usage in both the consumer and enterprise markets, with even OpenAI investor Microsoft and Anthropic backer Amazon rushing to add variants of it to their cloud marketplaces. Perplexity, the AI search company, also quickly added a variant of it for users.

DeepSeek also dethroned the ChatGPT iOS app from the number one spot in the U.S. Apple App Store. It has also outpaced OpenAI by connecting R1 to web search in its app and on the web, something OpenAI has not yet done for o1. All of this has fueled further anxiety among tech workers and others online that China is catching up with, or has already overtaken, the U.S. in AI innovation, and even in technology more generally.

Many AI researchers and scientists, as well as top VCs such as Marc Andreessen, have nonetheless welcomed the rise of DeepSeek, and its open sourcing in particular, as a tide that lifts all boats in the AI field, increasing the intelligence available to everyone while reducing costs.

Availability in ChatGPT

The model is now rolling out globally to Free, Plus, Team, and Pro users, with Enterprise and Education access coming next week.

- Free users can try o3-mini for the first time by selecting the “Reason” button in the chat bar or regenerating a response.

- Message limits for Plus and Team users have tripled, from 50 to 150 messages per day.

- Pro users get unlimited access to both o3-mini and a new, even higher-reasoning variant, o3-mini-high.

Additionally, o3-mini now supports search integration within ChatGPT, providing responses with relevant web links. This feature is still in its early stages as OpenAI refines search capabilities across its reasoning models.

API Integration and Pricing

For developers, o3-mini is available via the Chat Completions API, Assistants API, and Batch API. The model supports function calling, Structured Outputs, and developer messages, making it easy to integrate into real-world applications.
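As an illustration of what a function-calling request with a developer message might look like, here is a minimal sketch that only assembles the request body in the Chat Completions format and parses a tool call’s arguments. The `get_weather` tool, its schema and both helper functions are hypothetical, invented for this example:

```python
import json
from typing import Any

# Hypothetical tool for illustration only; the name and schema are not part
# of OpenAI's API. The wrapper structure follows the Chat Completions "tools" format.
GET_WEATHER_TOOL: dict[str, Any] = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Look up the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
            "additionalProperties": False,
        },
        "strict": True,  # ask for arguments that match the schema exactly
    },
}


def build_request(user_prompt: str, developer_instructions: str) -> dict[str, Any]:
    """Assemble keyword arguments for a Chat Completions call to o3-mini."""
    return {
        "model": "o3-mini",
        "messages": [
            # App-level instructions go in a "developer" message.
            {"role": "developer", "content": developer_instructions},
            {"role": "user", "content": user_prompt},
        ],
        "tools": [GET_WEATHER_TOOL],
    }


def handle_tool_call(name: str, arguments_json: str) -> str:
    """Run a stubbed local tool; the model sends its arguments as a JSON string."""
    args = json.loads(arguments_json)
    if name == "get_weather":
        # Stub: a real application would query a weather service here.
        return f"Sunny in {args['city']}"
    raise ValueError(f"unknown tool: {name}")
```

With the official `openai` Python package installed and an API key configured, the dict could be sent as `client.chat.completions.create(**build_request(...))`; any tool calls then appear in `response.choices[0].message.tool_calls`, each carrying a `function.name` and a JSON `function.arguments` string that `handle_tool_call` can parse.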

One of o3-mini’s most notable advantages is its cost efficiency: it’s 63% cheaper than OpenAI o1-mini and 93% cheaper than the full o1 model, priced at $1.10/$4.40 per million input/output tokens, with a 50% discount on cached input tokens.
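To turn the per-million-token prices into a per-request figure, a rough estimator might look like the sketch below. It assumes the 50% cache discount applies only to input tokens served from the prompt cache, and the function name is hypothetical:

```python
# o3-mini list prices in USD per million tokens, as quoted above.
INPUT_PER_MILLION = 1.10
OUTPUT_PER_MILLION = 4.40
CACHE_DISCOUNT = 0.50  # assumed to apply to cached input tokens only


def o3_mini_cost(input_tokens: int, output_tokens: int, cached_input_tokens: int = 0) -> float:
    """Estimate the USD cost of one o3-mini API call from its token counts."""
    if not 0 <= cached_input_tokens <= input_tokens:
        raise ValueError("cached_input_tokens must be between 0 and input_tokens")
    uncached = input_tokens - cached_input_tokens
    total = (
        uncached * INPUT_PER_MILLION
        + cached_input_tokens * INPUT_PER_MILLION * (1 - CACHE_DISCOUNT)
        + output_tokens * OUTPUT_PER_MILLION
    )
    return total / 1_000_000


# One million tokens in and one million out, with half of the input cached.
print(f"${o3_mini_cost(1_000_000, 1_000_000, cached_input_tokens=500_000):.3f}")
```

Because output tokens cost four times as much as input, long answers dominate the bill far more than long prompts do.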

Yet it still pales in comparison to the affordability of DeepSeek’s official API, which offers R1 at $0.14 (cache hit) or $0.55 (cache miss) per million input tokens and $2.19 per million output tokens. But because DeepSeek is based in China, raising geopolitical and security concerns about user and enterprise data flowing into and out of the model, OpenAI will likely remain the preferred API for some security-focused customers and enterprises in the U.S. and Europe.

Developers can also adjust the reasoning effort level (low, medium, high) based on their application needs, allowing for more control over latency and accuracy trade-offs.
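One way to measure that trade-off for a particular workload is to send the same prompt at each effort level and compare timings and answers. The sketch below builds Chat Completions requests using the `reasoning_effort` parameter and takes a caller-supplied `send` function (a hypothetical hook, so the harness can wrap any client or a test stub):

```python
import time
from typing import Any, Callable

EFFORT_LEVELS = ("low", "medium", "high")


def compare_efforts(
    prompt: str, send: Callable[[dict[str, Any]], str]
) -> list[tuple[str, float, str]]:
    """Send the same prompt at each effort level; return (level, seconds, answer)."""
    results = []
    for effort in EFFORT_LEVELS:
        request = {
            "model": "o3-mini",
            "reasoning_effort": effort,
            "messages": [{"role": "user", "content": prompt}],
        }
        start = time.perf_counter()
        answer = send(request)  # wall-clock time includes any network latency
        results.append((effort, time.perf_counter() - start, answer))
    return results
```

With the `openai` package, `send` could be `lambda req: client.chat.completions.create(**req).choices[0].message.content`. Running a handful of representative prompts this way gives a rough sense of whether the extra latency of high effort buys meaningfully better answers.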

On safety, OpenAI says it used a technique called “deliberative alignment” with o3-mini. The model was asked to reason about the human-authored safety guidelines it was given, understand their intent and the harms they are designed to prevent, and come up with its own ways of preventing those harms. OpenAI says this approach lets the model be less censorious when discussing sensitive topics while still preserving safety.

OpenAI says the model outperforms GPT-4o in handling safety and jailbreak challenges, and that it conducted extensive external safety testing prior to release today.

A recent report covered in Wired (where my wife works) found that DeepSeek R1 failed to block any of the 50 jailbreak prompts tested by security researchers, which may give OpenAI o3-mini the edge over DeepSeek R1 in cases where security and safety are paramount.

What’s next?

The launch of o3-mini reflects OpenAI’s broader effort to make advanced reasoning AI more accessible and cost-effective in the face of more intense competition than ever before, from DeepSeek’s R1 and from others such as Google, which recently released a free version of its own rival reasoning model, Gemini 2.0 Flash Thinking, with an expanded input context of up to 1 million tokens.

With its focus on STEM reasoning and affordability, OpenAI aims to expand the reach of AI-driven problem-solving in both consumer and developer applications.

But as the company grows more ambitious than ever, recently announcing Stargate, a $500 billion data center infrastructure project backed by SoftBank, the question remains whether its strategy will pay off well enough to justify the billions of dollars that deep-pocketed investors such as Microsoft and venture capital firms have sunk into it.

As open source models increasingly close the performance gap with OpenAI and undercut it on cost, will its reportedly superior safety measures, powerful capabilities, easy-to-use API and user-friendly interfaces be enough to retain customers, especially enterprises, that may prioritize cost and efficiency over these attributes? We’ll be reporting on developments as they unfold.
