Growing up in South Central Los Angeles, Junior Peña learned to keep his eyes down and his schedule full. In his neighborhood, a glance could invite trouble, and many kids—including his older brother—were pulled into gang culture. He knew early on that he wanted something else. With his parents working long hours, he went to after-school programs, played video games, and practiced martial arts. But his friends had no idea that he also spent hours online poring over textbooks and watching lectures, teaching himself advanced mathematics and philosophy. “Being good at school wasn’t how people saw me,” he says.

One night in high school, he came across a YouTube video about the Higgs boson—the so-called “God particle,” thought to give mass to nearly everything in the universe. “I remember my mind being flooded with questions about life, the universe, and our existence,” he recalls. He’d already looked into philosophers’ answers to those questions but was drawn to the more concrete explanations of physics.

After his independent study helped Peña pass AP calculus as a junior, his fascination with physics led him to the University of Southern California, the 2019 session of MIT’s Summer Research Program, and then MIT for grad school. Today, he’s working to shed light on neutrinos, the ghostly uncharged particles that slip through matter so effortlessly that it would take a wall of lead five light-years thick to stop them.

As a grad student in the lab of Joseph Formaggio, an experimental physicist known for pioneering new techniques in neutrino detection, Peña works alongside leading physicists designing technology to precisely measure what are arguably the universe’s most elusive particles. Emanating from such sources as the sun and supernovas (and generated artificially by particle accelerators and nuclear reactors), neutrinos reveal their presence through an absence. Their existence was initially posited in the 1930s by the physicist Wolfgang Pauli, who noticed that energy seemed to go missing when atoms underwent a process known as radioactive beta decay. According to the law of conservation of energy, the total energy of the particles emitted during radioactive decay must equal the energy of the decaying atom. To account for the missing energy, Pauli proposed the existence of an undetectable particle that was carrying it away.

Einstein’s E = mc² tells us that if energy is missing, then mass must be too. Yet according to the standard model of physics, which offers our most trusted theory for how particles behave, neutrinos should have no mass at all. Unlike other particles, they don’t interact with the Higgs field, a kind of cosmic molasses that slows particles down and gives them mass. Because they pass through it untouched, they should remain massless.

But by the early 2000s, researchers had discovered that neutrinos, which had first been detected in the 1950s, can shift between three types, a feat possible only if they have mass. So now the tantalizing question is: What is their mass?

Determining neutrinos’ exact mass could explain why matter triumphed over antimatter, refine models of cosmic evolution, and clarify the particles’ role in dark matter and dark energy. And the Formaggio Lab is part of Project 8, an international collaboration of 71 scientists in 17 institutions working to make that measurement. To do this, the lab uses tritium, an unstable isotope of hydrogen that decays into helium, releasing both an electron and a particle called an antineutrino (“every particle has an antiparticle counterpart,” Formaggio explains). By precisely measuring the energy spectrum of those electrons, scientists can determine how much energy is missing, allowing them to infer the neutrinos’ mass.
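To make the idea of "missing energy" concrete, here is a minimal Python sketch of how a nonzero neutrino mass would reshape the very top of the electron energy spectrum. It is an illustration under stated assumptions, not Project 8's analysis: it keeps only the textbook phase-space factor, treats the neutrino as having a single effective mass, uses a rounded endpoint energy of about 18.6 keV for tritium, and ignores detector resolution and atomic effects. The function and constant names are made up for this example.

```python
import math

# Approximate maximum electron energy in tritium decay (rounded, assumed value).
ENDPOINT_EV = 18_600.0


def endpoint_shape(e_kin_ev, m_nu_ev, endpoint_ev=ENDPOINT_EV):
    """Relative electron rate near the endpoint (phase-space factor only).

    e_kin_ev: electron kinetic energy in eV
    m_nu_ev:  assumed effective neutrino mass in eV (hypothetical input)
    """
    # Energy left over for the antineutrino once the electron has taken its share.
    leftover = endpoint_ev - e_kin_ev
    # The antineutrino needs at least its rest energy, so the spectrum is cut off
    # m_nu_ev below the massless endpoint.
    if leftover < m_nu_ev:
        return 0.0
    return leftover * math.sqrt(leftover**2 - m_nu_ev**2)


if __name__ == "__main__":
    # Compare a massless neutrino with a hypothetical 0.5 eV one.
    for below_endpoint in (2.0, 1.0, 0.6, 0.4):
        e = ENDPOINT_EV - below_endpoint
        print(below_endpoint, endpoint_shape(e, 0.0), endpoint_shape(e, 0.5))
```

In this simplified picture, the heavier the neutrino, the earlier the spectrum drops to zero and the fewer electrons land in the last electron volt or so. That tiny distortion is what an endpoint measurement looks for.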

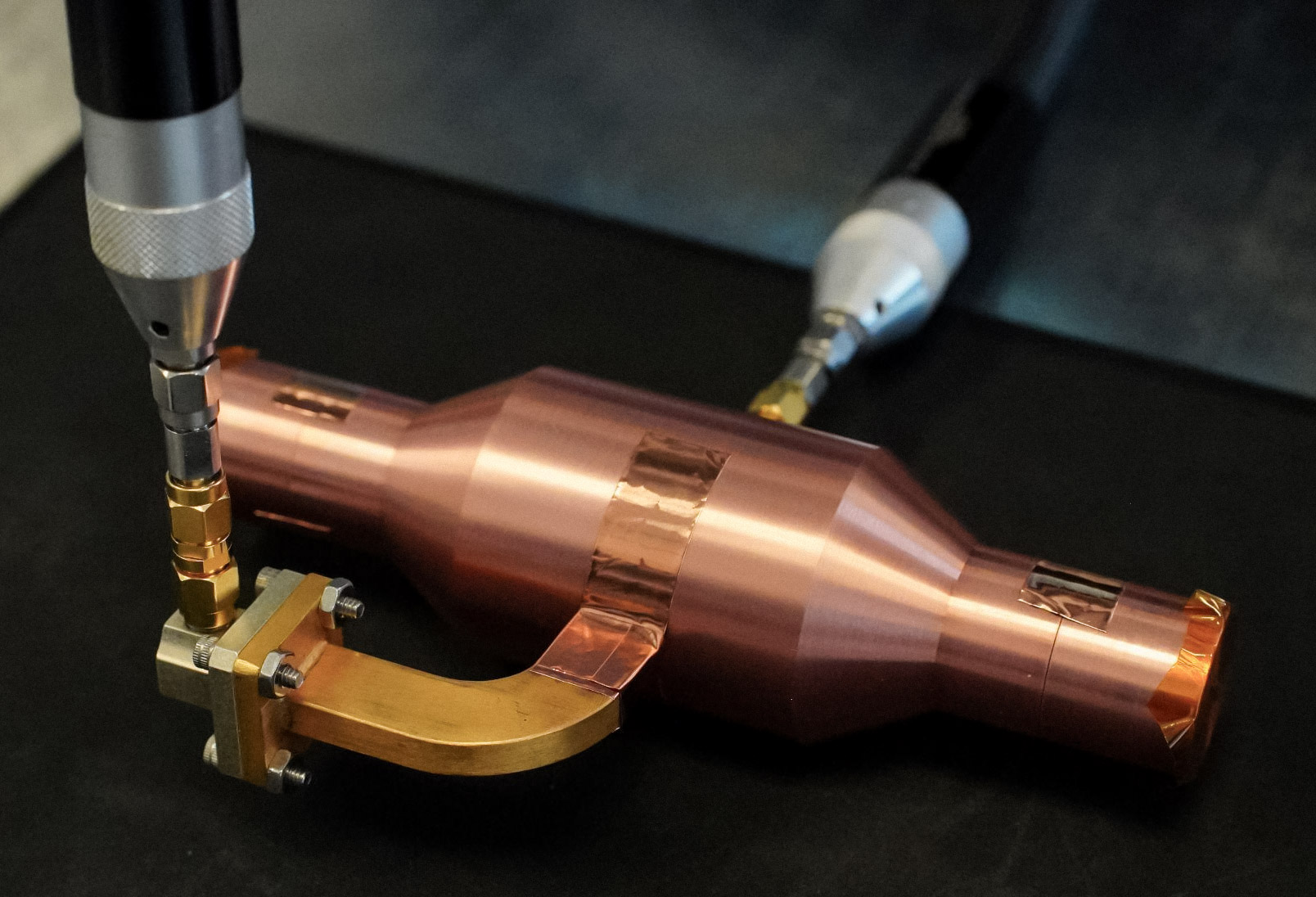

At the heart of this experiment is a novel detection method called cyclotron radiation emission spectroscopy (CRES), first proposed in 2008 by Formaggio and his then postdoc Benjamin Monreal, which “listens” to the faint radio signals emitted as electrons spiral through a magnetic field. Peña was instrumental in designing a crucial part of the tool that will make this possible: a copper cavity that he likens to a guitar, with the electrons released during beta decay acting like plucked strings. The cavity will amplify their signals, helping researchers to measure them exactly. Peña spent more than a year developing and refining a flashlight-size prototype of the device in collaboration with machinists and fellow physicists.
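The physics behind that "listening" can be sketched in a few lines. An electron circling in a magnetic field radiates at its cyclotron frequency, and relativity makes that frequency drop slightly as the electron's energy rises, so a precise frequency measurement doubles as an energy measurement. The Python sketch below uses the standard relativistic cyclotron formula with an assumed 1-tesla field. It is an idealized illustration rather than the collaboration's code: it ignores the electron's pitch angle, energy lost to radiation, and any nonuniformity in the field, and the function names are hypothetical.

```python
import math

# Physical constants (CODATA values).
ELECTRON_CHARGE_C = 1.602176634e-19
ELECTRON_MASS_KG = 9.1093837015e-31
ELECTRON_REST_ENERGY_EV = 510_998.95


def cyclotron_frequency_hz(e_kin_ev, b_tesla):
    """Frequency at which an electron of the given kinetic energy circles."""
    # Lorentz factor: total energy divided by rest energy.
    gamma = 1.0 + e_kin_ev / ELECTRON_REST_ENERGY_EV
    return ELECTRON_CHARGE_C * b_tesla / (2.0 * math.pi * gamma * ELECTRON_MASS_KG)


def kinetic_energy_ev(freq_hz, b_tesla):
    """Invert the relation above: recover kinetic energy from a measured frequency."""
    f_rest = cyclotron_frequency_hz(0.0, b_tesla)  # frequency in the zero-energy limit
    return ELECTRON_REST_ENERGY_EV * (f_rest / freq_hz - 1.0)


if __name__ == "__main__":
    b_field = 1.0  # tesla, an assumed value chosen for illustration
    f = cyclotron_frequency_hz(18_600.0, b_field)
    print(f"frequency: {f / 1e9:.3f} GHz")
    print(f"recovered energy: {kinetic_energy_ev(f, b_field):.1f} eV")
```

Under these assumptions, an electron near the top of the tritium spectrum radiates at roughly 27 gigahertz, in the microwave range, which is why the signal can be picked up with radio-frequency hardware such as a resonant cavity.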

“He had to learn the [design and simulation] software, figure out how to interpret the signals, and test iteration after iteration,” says Formaggio, Peña’s advisor. “It’s been incredible watching him take this from a rough idea to a working design.”

The design of Peña’s cavity had to balance competing demands. It needed a way to extract the electrons’ signals that was compatible with the researchers’ methods for calibrating the system, one of which involves using an electron gun to inject electrons of a known, precise energy into the cavity. It also needed to preserve the properties of the electromagnetic fields within the cavity. In May, Peña sent his final prototype to the University of Washington, where it was installed in July. Researchers hope to begin calibration this fall. Then Peña’s cavity and the full experimental setup will be scaled up so that, in a few years, the team can begin collecting CRES data using tritium.

“We’ve been working toward this for at least three years,” says Jeremy Gaison, a Project 8 physicist at the Pacific Northwest National Lab. “When we finally turn on the experiment, it’s going to be incredible to see if all of our simulations and studies actually hold up in real data.”

Peña’s contribution to the effort “is the core of this experiment,” says Wouter Van De Pontseele, another Project 8 collaborator and former Formaggio Lab postdoc. “Junior took an idea and turned it into reality.”

Project 8 is still in its early stages. The next phase will scale up with larger, more complex versions of the technology Peña played a key role in developing, culminating in a vast facility designed to hunt for the neutrino’s mass. If that is successful, the findings could have profound implications for our understanding of the universe’s structure, the evolution of galaxies, and even the fundamental nature of matter itself.

Eager to keep probing such open questions in fundamental physics, Peña is still exploring options for his postdoc work. One possibility is focusing on the emerging field of levitated nanosensors, which could advance gravitation experiments, efforts to detect dark matter, and searches for the sterile neutrino, a posited fourth variety that interacts even more rarely than the others.

“Experimental particle physics is long-term work,” says Van De Pontseele. “Some of us will stay on this project for decades, but Junior can walk away knowing he made a lasting impact.”

Peña also hopes to have a lasting impact as a professor, opening doors for students who, like him, never saw themselves reflected in the halls of academia. “A summer program brought me here,” he says. “I owe it to the next kid to show they belong.”